Printable page generated Friday, 26 April 2024, 1:26 PM

Read: The purpose of this website

1 Overview

This website has been created as a public access site for visitors to explore how the Open University has contributed to the development of open source eAssessment systems during its stewardship of the Moodle Quiz module from 2006 to 2014. These developments provided new features in Moodle 1.6 to 2.0 and continue to provide further features in Moodle 2.1 to 2.6. The majority of these new Moodle features come from the 'assessment for learning' design that underpins the OpenMark system, which we have been developing since 1996. This site contains a little of the history behind these developments but primarily provides a range of examples that visitors can interact with.

The Moodle Quiz and OpenMark together form the core of the Open University’s eAssessment facilities. Both allow Course Teams to set interactive computer-marked assessments (iCMAs) that are marked automatically by the computer, with feedback available to help students with their learning. OpenMark provides instant feedback, while the Moodle Quiz provides either instant feedback or delayed feedback where course teams wish to withhold answers until after a specified date. Since April 2008 the OU systems have been more closely integrated, enabling Moodle Quizzes to include OpenMark questions and OpenMark test scores to be transferred to the Moodle Gradebook.

For Moodle 2.1 the Open University undertook a complete rewrite of the Moodle question engine and this question engine continues to underpin the Moodle Quiz eAssessment system.

Phil Butcher (email: philip.butcher@open.ac.uk)

OU VLE eAssessment project leader

2 A commitment to open source software

Through 2004 and 2005 the Open University considered how best to develop its online presence. It is not the purpose of this website to record how the decision to follow an open source route was taken, but to describe what followed the announcement in October 2005 that the OU was going to develop its future VLE around Moodle. To reinforce its decision, and in recognition that none of the commercial or open source VLEs of that time were considered flexible enough to meet our needs, the university was prepared to invest to build some of the required flexibility into Moodle. £5 million was committed to the initial development programme that started in 2005 and ended in July 2008. This sum was spread across 11 projects, of which eAssessment was one.

But the programme was not only about technical development. It had to take its message out to multiple areas of the university, and the investment had to include many student-facing areas, from the electronic library to considerations of the impact on associate lecturing staff (associate lecturers are the OU’s face on the ground, conducting tutorials, marking essays, moderating forums and more). So while £5 million was committed for development, this had to cover technical, process and people development. Since August 2008 the university has continued to develop both Moodle and OpenMark through its normal systems support mechanisms. In 2010-2011 there was a further period of accelerated development, and it was during this period that a fully re-engineered Moodle Question engine emerged for Moodle 2.1. It is this engine that continues to power the examples that are running on this site.

This website will focus primarily on the technical developments of Moodle itself from Moodle 1.6 to Moodle 2.6, developments that built on the efforts of others and are now in place for others to take further.

As well as the general commitment to financing further developments of Moodle the OU made one further important commitment to the Moodle eAssessment module (the Quiz module) when the university agreed to provide the Quiz Lead Developer for the global Moodle community. Tim Hunt has filled this role admirably for the past eight years, overseeing all Moodle Quiz developments as well as leading the OU’s own technical developments.

Here is a historical picture of the OU's eAssessment development team from 8th January 2008, as OU Moodle 1.9 went live.

In 2014, and now augmented by Colin Chambers, this is still the main OU Quiz development team. In 2012 we were pleased to collaborate closely with Chris Sangwin on making STACK available as a Moodle question type, while Jamie Pratt has been a regular contractor to us in developing new question types and improved Quiz reports.

3 Promoting learning with instant feedback

The importance of feedback for learning has been highlighted by a number of authors, emphasising its role in fostering meaningful interaction between student and instructional materials (Buchanan, 2000), its contribution to student development and retention (Yorke, 2001), but also its time-consuming nature for many academic staff (Gibbs, 2006). In distance education, where students work remotely from both peers and tutors, the practicalities of providing rapid, detailed and regular feedback on performance are vital issues.

Gibbs and Simpson suggest eleven conditions under which assessment supports student learning (Gibbs and Simpson, 2004).

- Assessed tasks capture sufficient study time and effort.

- These tasks distribute student effort evenly across topics and weeks.

- These tasks engage students in productive learning activity.

- Assessment communicates clear and high expectations to students.

- Sufficient feedback is provided, both often enough and in enough detail.

- The feedback is provided quickly enough to be useful to students.

- Feedback focuses on learning rather than on marks or students themselves.

- Feedback is linked to the purpose of the assignment and to criteria.

- Feedback is understandable to students, given their sophistication.

- Feedback is received by students and attended to.

- Feedback is acted upon by students to improve their work or learning.

Four of these conditions, those in italics, are particularly apposite with regard to the use of eAssessment within distance education. They are reflected in the design of OpenMark and are amplified in the rationale behind the development of the S151: Maths for Science online assessments (Ross, Jordan and Butcher, 2006) where:

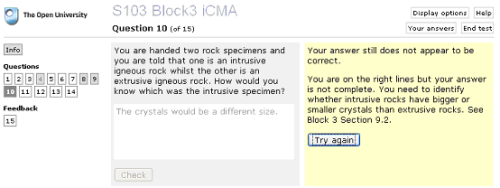

- The assessment questions provide individualised, targeted feedback, with the aim of helping students to get to the correct answer even if their first attempt is wrong.

- The feedback appears immediately in response to a submitted answer, such that the question and the student's original answer are still visible.

- Students are allowed up to three attempts at each question, with an increasing amount of feedback being given after each attempt.

The Interactive with multiple tries mode in Moodle is based on the OpenMark design.
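The three-try pattern described above can be sketched in a few lines of Python. This is an illustration only, not OpenMark or Moodle code: the function name `attempt_question`, the hint texts and the 3/2/1/0 mark scheme are assumptions chosen for the example.

```python
from typing import List, Sequence, Tuple


def attempt_question(
    responses: Sequence[str],
    correct_answer: str,
    hints: Sequence[str],
    max_tries: int = 3,
) -> Tuple[int, List[str]]:
    """Mark one question answered with up to `max_tries` tries.

    Returns the marks awarded and the feedback messages shown, in order.
    Assumed mark scheme: `max_tries` marks for a correct first try, one
    mark fewer for each further try needed, zero if never correct.
    """
    feedback: List[str] = []
    for try_number, response in enumerate(responses[:max_tries], start=1):
        if response.strip().lower() == correct_answer.strip().lower():
            feedback.append("Correct.")
            return max_tries - try_number + 1, feedback
        if try_number < max_tries:
            # Stepped feedback: each wrong try unlocks the next, fuller hint.
            if hints:
                hint = hints[min(try_number - 1, len(hints) - 1)]
            else:
                hint = "Try again."
            feedback.append(hint)
    if len(responses) >= max_tries:
        # All tries used without success: reveal the answer.
        feedback.append(f"The correct answer is {correct_answer}.")
    return 0, feedback


# Hypothetical question: wrong at the first try, right at the second.
marks, shown = attempt_question(
    ["4.0", "4.5"], "4.5", hints=["Check the units.", "Divide the distance by the time."]
)
print(marks, shown)  # 2 ['Check the units.', 'Correct.']
```

Because the feedback is returned after each response rather than at the end of the test, the student sees it while the question and their own answer are still in view.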

References

4 Evidence that instant feedback is effective at promoting learning

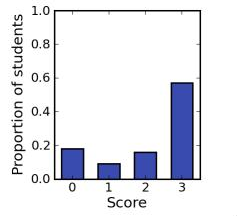

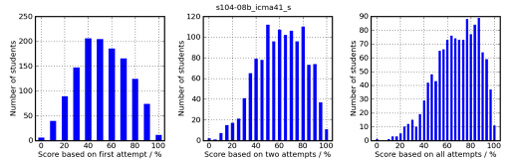

Here is some raw evidence for the effect of instant feedback. The charts were prepared for questions used in a summative iCMA and we are clear that the summative nature of the test made students pay attention to and act on the feedback.

The charts show scores on a question and iCMA taken by ~1,000 students studying S104: Exploring science.

Figure 4.1 shows that approximately 57% of students answered the question correctly at the first try and were awarded 3 marks. After a first hint a further ~16% gave the correct answer and were awarded 2 marks, and after a second, more detailed hint a further 9% reached the correct answer and were awarded 1 mark. The remaining 18% failed to get the correct answer and scored zero.
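The percentages quoted for Figure 4.1 can be turned into a mean mark for the question with a short calculation. The sketch below uses only the approximate figures given in the text; the names `OUTCOMES` and `mean_mark` are introduced here for illustration.

```python
from typing import Dict, Tuple

# Outcome -> (percentage of students, marks awarded), as quoted for Figure 4.1.
# The percentages are approximate in the source.
OUTCOMES: Dict[str, Tuple[int, int]] = {
    "correct at first try": (57, 3),
    "correct after first hint": (16, 2),
    "correct after second hint": (9, 1),
    "not correct": (18, 0),
}


def mean_mark(outcomes: Dict[str, Tuple[int, int]]) -> float:
    """Average marks per student, weighting each outcome by its share."""
    return sum(pct * marks for pct, marks in outcomes.values()) / 100


total_pct = sum(pct for pct, _ in OUTCOMES.values())
reached_answer = sum(pct for pct, marks in OUTCOMES.values() if marks > 0)

print(total_pct)             # 100
print(reached_answer)        # 82
print(mean_mark(OUTCOMES))   # 2.12
```

On these figures about 82% of students eventually reached the correct answer, and the mean mark was roughly 2.12 out of 3, of which 1.71 came from first-try answers.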

Figure 4.2 combines all question scores across a summative iCMA to show the effect of feedback and multiple tries. If we add up the scores on the first try we arrive at the left-hand chart: the sort of chart an examiner might well like to see, with an almost normal distribution.

The middle chart shows the effect of adding in the scores of students who gave the correct answer on the second try and the overall effect is clearly to move the peak of the distribution to the right, to a higher score.

The right-hand chart completes the story and provides clear evidence that students are acting upon the instant feedback that these iCMAs provide and are learning in the process.
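One way to build three such charts from per-question records is sketched below. The data are invented purely to show the mechanics and are not taken from the S104 iCMA; the 3/2/1 mark scheme is assumed to match the question in Figure 4.1, and the names `score_up_to` and `distributions` are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Optional

MARKS_BY_TRY = {1: 3, 2: 2, 3: 1}  # assumed scheme: fewer marks for later tries


def score_up_to(tries: List[Optional[int]], last_try: int) -> int:
    """Total marks for one student, counting only answers that were
    correct on or before `last_try`. None means never answered correctly."""
    return sum(MARKS_BY_TRY[t] for t in tries if t is not None and t <= last_try)


def distributions(cohort: Dict[str, List[Optional[int]]]) -> Dict[int, Counter]:
    """Score histograms counting the first try only, then tries 1-2, then 1-3."""
    return {
        k: Counter(score_up_to(tries, k) for tries in cohort.values())
        for k in (1, 2, 3)
    }


# Invented data: the try on which each of four questions was answered correctly.
cohort = {
    "student_a": [1, 1, 2, None],
    "student_b": [1, 3, 2, 2],
    "student_c": [2, None, 1, 3],
}

for k, histogram in distributions(cohort).items():
    print(k, sorted(histogram.items()))
```

Each student's total can only stay the same or rise as later tries are added in, which is why the peak of the distribution moves to the right from one chart to the next.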

5 The influence of OpenMark

OpenMark is an Open University computer-assisted assessment (CAA) system that has its foundations in computer-assisted learning. It had existed in various guises for almost a decade prior to the arrival of Moodle and it had been designed by OU staff for use in the OU’s open learning model. It differs from traditional CAA systems in:

- The emphasis we place on feedback. All Open University students are distance learners and within the university we emphasise the importance of giving feedback on written assessments. The design of OpenMark assumes that feedback, perhaps at multiple levels, will be included.

- Allowing multiple attempts. OpenMark is an interactive system, and consequently we can ask students to act on feedback that we give 'there and then', while the problem is still in their mind. If their first answer is incorrect, they can have an immediate second, or third, attempt.

- The breadth of interactions supported. We aim to use the full capabilities of modern multimedia computers to create engaging assessments. We do not hide the computer. Instead we harness the computing power available to us to enrich the learning process.

- The design for anywhere, anytime use. OpenMark assessments are designed to enable students to complete them in their own time in a manner that fits with normal life. They can be interrupted at any point and resumed later from the same location or from elsewhere on the internet.

Our experiences in planning, developing, using and evaluating OpenMark (Whitelock, 1998; Jordan et al., 2003) over such a long period provided us with a clear vision of what we might achieve during our stewardship of the Moodle Quiz. In terms of student interactions we wanted Moodle to be more like OpenMark, and we asked ourselves how we might achieve this.

It took three years for Tim Hunt to formulate his design for the Moodle Question engine so that he could include our local, OpenMark-influenced changes to OU Moodle within Moodle core. In 2009 we gave Tim our backing to go ahead with the redevelopment and he spent much of 2010 working on this major re-engineering exercise. His new engine has been powering the questions at the OU since December 2010 and has been available to others since the release of Moodle 2.1.

References

6 Styles of interactive computer marked assessments

Discussions within the university have revealed that faculties see two major ways for students to interact with questions and assessments. These are described in Moodle as deferred feedback mode and interactive with multiple tries mode. As this website is designed to help you learn about eAssessment in Moodle, the bulk of the example tests on this site run in interactive with multiple tries mode.

Deferred feedback

Students are allowed one try at each question, though they may revisit and amend their response prior to submission. All responses are submitted simultaneously and feedback is provided either immediately (formative mode) or after a cut-off date (summative mode).
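The release rule for deferred feedback can be expressed as a small check. This is a sketch of the rule as described above, not Moodle's implementation; the function name `feedback_visible` and its parameters are hypothetical.

```python
from datetime import datetime
from typing import Optional


def feedback_visible(
    submitted: bool, now: datetime, cutoff: Optional[datetime] = None
) -> bool:
    """Decide whether a student may see feedback in deferred feedback mode.

    Formative use: no cut-off date, so feedback appears once all
    responses have been submitted.
    Summative use: feedback is withheld until the cut-off date has passed.
    """
    if not submitted:
        return False  # responses can still be revisited and amended
    return cutoff is None or now >= cutoff


# Hypothetical dates for a summative test with a cut-off on 1 March.
cutoff_date = datetime(2014, 3, 1)
print(feedback_visible(True, datetime(2014, 2, 20), cutoff_date))  # False
print(feedback_visible(True, datetime(2014, 3, 2), cutoff_date))   # True
```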

Interactive with multiple tries

Questions are answered one by one and instant feedback is provided. For those who answer incorrectly the questions are designed to allow multiple tries with stepped feedback. Interactive mode assessments can be used both formatively and summatively.

Others

The question engine supports other 'question behaviours' beyond those used at the OU; the STACK examples on this site, for instance, use the 'adaptive' behaviour.

7 Usage at the OU

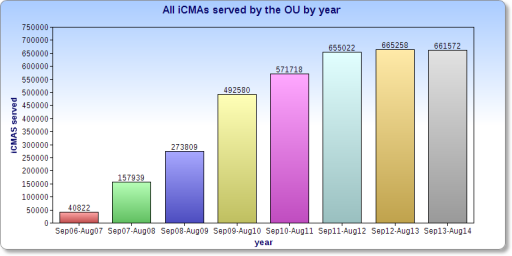

Annual take-up of interactive Computer-Marked Assignments (iCMAs) since 2006. The charts combine both OpenMark and Moodle iCMAs.

In 2013 the ratio of formative (inc. diagnostic) to summative was ~4:1.