
Printable page generated Friday, 19 April 2024, 4:19 AM

How to create questions in Moodle

Introduction

Before you start – pasting from Word

Copying from Word and pasting directly into the Moodle editor carries too much Microsoft formatting information with it. Authors who wish to paste in text from Word should use the 'paste from Word' button. Going via this route will strip much of the unwanted formatting information.

Please be aware that several authors have not followed this advice, with the consequence that their questions failed to operate correctly. They, of course, blamed Moodle, but investigations revealed that the problems were of their own making.

1 The Question bank

The Question bank can be accessed from the site Administration block.

There are four question bank actions: creating and editing questions, creating categories in which to store the questions, importing (from a Moodle XML format file) and exporting (to a Moodle XML format file).

Understanding these tools will enable you to store and retrieve your questions more efficiently.

Questions: Where the questions are held in the VLE. Your questions will normally be held within your module where they will be visible to all your colleagues who are 'Website updaters' on this module. When the module is rolled forwards for the next year the questions will be included. It is also possible to share questions across modules by storing the questions in categories at the Faculty or School level - please read on.

Categories: By creating categories for different topics, books or blocks of the module you are creating a logical storage structure so that you and all your colleagues will be able to locate all the questions on that topic in one area. It is also possible to create categories that span Faculties or Schools.

Import: It is possible to import files of questions in Moodle XML format.

Export: It is possible to export questions for import into another module. Please ensure that you use ‘Moodle XML format’.

1.1 Categories

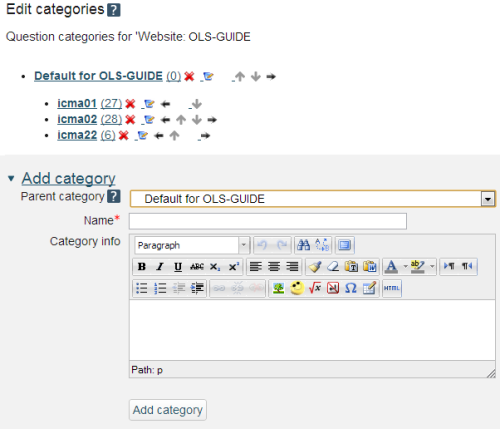

Click on 'Categories' and you will be taken to the following screen where you can create your own categories.

Possible structures can be based on Blocks, Books, Chapters or Units. We anticipate that most questions will be used within individual modules and, consequently, that most question categories will be created within the module. In the example above there are three main categories of questions, for iCMA01, iCMA02 and iCMA22.

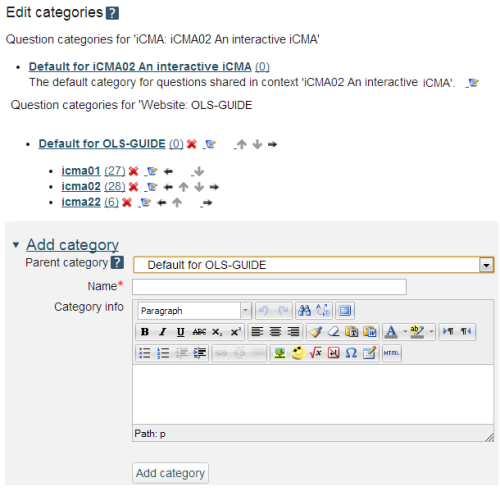

Please note that it is also possible to reach the question bank from the Quiz administration block; if you do this, you will also find a category for this particular quiz.

Please be aware that entering the question bank from the site Administration block (Figure 1.2) does not show the categories associated with individual quizzes. Please compare and contrast Figures 1.2 and 1.3.

1.2 Import and export

Importing and exporting questions

Should you wish to export questions from one module and import them into another module, you will be offered a range of possible formats. Our experience, however, is that only one of these is reliable, and we recommend that you use 'Moodle XML format'.

Importing and exporting questions with images or audio-visual content

In Moodle 2, images and any audio-visual content are included within questions when exporting and importing. If there are multiple large videos in your questions, then it is possible that you will exceed the memory allocated to the export system. If you encounter this problem and your intention is to move questions within OU VLE modules, please talk to LTS staff, who are able to move questions within the VLE via higher-level faculty categories in the question bank (and thereby circumvent the export).

2 Question types

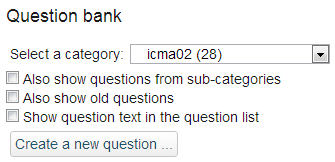

Having created your categories, click on the Questions link in the administration block to show:

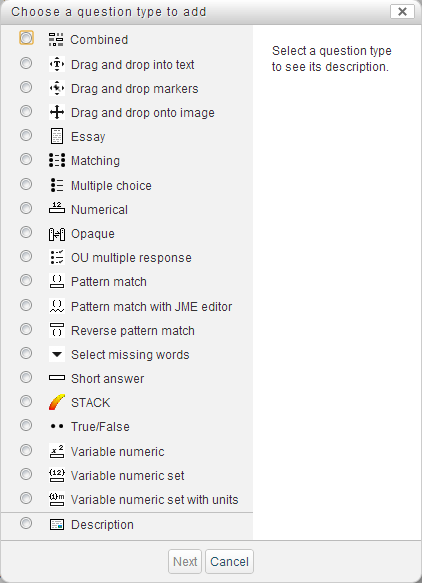

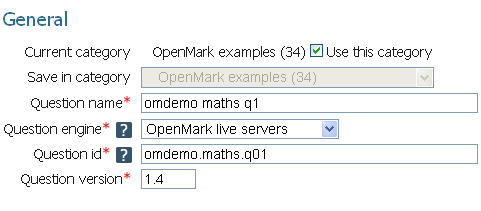

In the Question bank select the category that you wish to store your question in and then choose one of the question types from 'Create a new question…'. This will bring up the ‘Choose a question type to add’ dialogue box.

These Moodle question types can be categorised by the type of interaction that they support.

- Numeric response

- Text response

- Selection

- 2D, i.e. some form of spatial input

- Mathematical response

- External

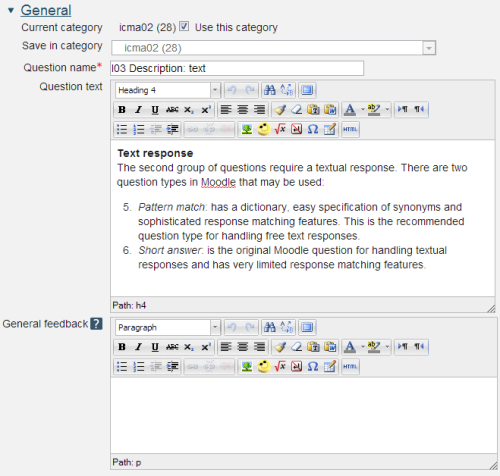

There is also a seventh category, Description, which enables you to include blocks of information within an assessment.

2.1 Numeric response

A variety of question types accept numeric responses.

Numerical

The simplest to use but also the most limited.

Variable numeric types

- Variable numeric:
  - Questions can include random numbers generated at run time.

- Variable numeric set:
  - Sets of values are specified from which one set is chosen at random.

- Variable numeric set with units:
  - Sets of values are specified from which one set is chosen at random.
  - Has a Pattern match field to handle the matching of units.

All Variable numeric types:

- Calculations can be made based on the values in the set, making it straightforward to create multiple variants of a question.

- Scientific input can be enabled for the student.

- Answers can be requested to a number of significant figures.

- There is built-in feedback for common errors such as giving the wrong number of significant figures, rounding the wrong way or being out by a factor of 10.

- A sequence of questions can be specified to use the same random numbers.

Combined

One or more numeric response fields can be combined with any of Pattern match, OU multiple response and Select missing words.

STACK and Pattern match

The STACK question type has the Maxima computer algebra system behind it and as such offers a complete mathematical sub-system for handling a wide variety of problems that result in numeric answers.

When you include a number in a Pattern match response string, it is treated as a number; that is, match(5) will match 5, 5.0, 5e0, etc.

These two question types are described in detail elsewhere on this website.
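The idea of matching numbers by value rather than by their characters can be illustrated with a minimal Python sketch. It is not the Pattern match implementation; the helper `same_number` is hypothetical and simply compares two tokens numerically when both parse as numbers, falling back to an exact text comparison otherwise.

```python
def same_number(pattern_token: str, response_token: str) -> bool:
    """Hypothetical helper: True if both tokens are numbers of equal value.

    If either token is not a number, the text is compared exactly instead.
    """
    try:
        return float(pattern_token) == float(response_token)
    except ValueError:
        # At least one token is not numeric, so fall back to plain text.
        return pattern_token == response_token


# match(5) should accept each of these ways of writing five, but not 6.
for response in ["5", "5.0", "5e0", "6"]:
    print(response, same_number("5", response))
```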

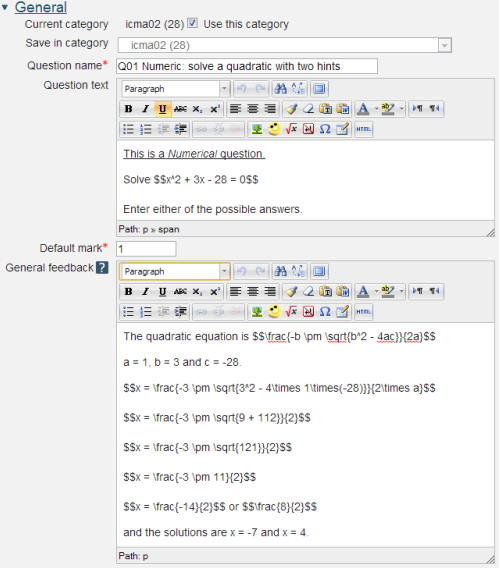

2.1.1 Numerical

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

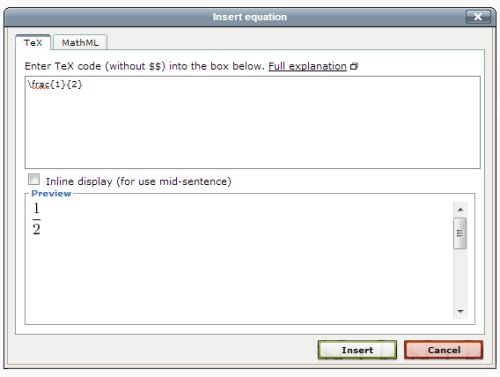

You may also include formatted mathematics using TeX or MathML. The text surrounded by two $ symbols in this example will be interpreted by the TeX filter and converted to nicely formatted mathematics. Please see the Including TeX and MathML section below.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores and the length will be determined by the number of underscores. The minimum number of underscores required to trigger this action is 5. If no underscores are present the response box will be placed after the question and will be a full length box.
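The placement rule can be sketched in a few lines of Python. This is an illustration of the behaviour described above, not Moodle's code: the function name, the `<input size="...">` markup and the full-width value of 40 are assumptions made for the example.

```python
import re

MIN_UNDERSCORES = 5  # shortest run of underscores that becomes a response box
BOX_PATTERN = re.compile("_{%d,}" % MIN_UNDERSCORES)


def place_response_box(question_text: str, full_width: int = 40) -> str:
    """Replace the first run of 5 or more underscores with an input box.

    The box is as wide as the run of underscores. If there is no such run,
    a full-width box is appended after the question text instead. The
    width 40 and the <input size="..."> markup are illustrative assumptions.
    """
    match = BOX_PATTERN.search(question_text)
    if match is None:
        return question_text + f' <input size="{full_width}">'
    width = len(match.group(0))
    return (question_text[:match.start()]
            + f'<input size="{width}">'
            + question_text[match.end():])


print(place_response_box("The answer is " + "_" * 8 + " metres."))
print(place_response_box("What is 2 + 2?"))
```

Note that a run of four underscores is left untouched, so the box is appended at the end as if no underscores were present.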

Default mark: Decide on how to score your questions and be consistent.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. We do not recommend that authors rely on using the machine-generated 'Right answer' (from the iCMA definition form), as this is:

- written by a computer

- frequently not the full right answer

- not necessarily in the correct format.

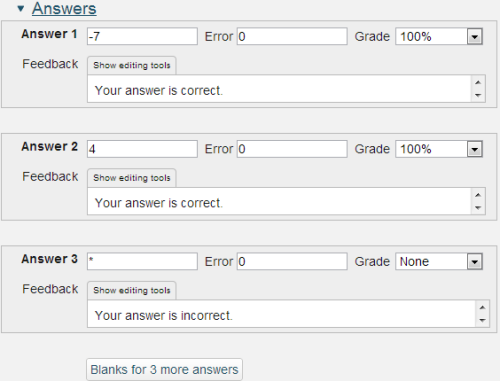

Answer: an integer or decimal number. Use 'E' format for very large or very small values. '*' matches all other answers.

Error: Only rely on exact values for integers; for all other values, specify a suitable range.

Grade: The percentage of the mark to be allocated for this answer. The correct answer must be graded at 100%.

Feedback: Feedback that is specific to the answer.

Responses are matched in the order that they are entered into the form.
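A minimal Python sketch of this first-match rule follows. The `Answer` record is a hypothetical stand-in for one row of the form (answer, error, grade, feedback); it illustrates the ordering, not Moodle's own code.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    value: str             # a number as text, or "*" to match any other response
    accepted_error: float  # 0 means an exact match is required
    grade: float           # fraction of the mark: 1.0 means 100%
    feedback: str


def mark_numerical(response: float, answers: list[Answer]) -> tuple[float, str]:
    """Return (grade, feedback) from the first answer that matches."""
    for answer in answers:  # checked in the order they were entered
        if answer.value == "*":
            return answer.grade, answer.feedback
        if abs(response - float(answer.value)) <= answer.accepted_error:
            return answer.grade, answer.feedback
    return 0.0, ""  # nothing matched and there was no "*" catch-all


answers = [
    Answer("9.81", 0.05, 1.0, "Correct."),
    Answer("98.1", 0.5, 0.0, "Check your powers of ten."),
    Answer("*", 0.0, 0.0, "Sorry, that is not right."),
]
print(mark_numerical(9.8, answers))
print(mark_numerical(98.0, answers))
```

Moving the '*' row to the top would make it catch every response, including correct ones, which is why the order of entries matters.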

A note on handling decimal numbers

If you are asking your user to enter an integer then it is OK to leave 'Error' empty. This means you are looking for the exact value.

But if you are expecting a non-integer value, or if any of your 'units' calculations will result in a non-integer answer, then you should be aware that the computer will treat the student's answer as a 'real', i.e. decimal, number. Because computers are digital and use a finite number of 'bits' to store 'reals', the real number often cannot be held exactly; try writing 2/3 in decimal format where you only have 6 digits available and you'll see the problem. So if you are testing a 'real' value you should always use a small 'Accepted error'. In the example of 2/3, you may choose to look for a value of 0.665 with an 'Accepted error' of 0.0051, which will accept both 0.66 and 0.67 and everything in between, such as 0.6667.
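The problem, and the range check that avoids it, can be seen in a short Python sketch. The helper `within` is hypothetical; it simply tests whether a response lies within the accepted error of the answer, using the 2/3 example above.

```python
# Binary floating point cannot hold most decimal fractions exactly.
print(0.1 + 0.2 == 0.3)  # False
print(2 / 3)             # 0.6666666666666666


def within(response: float, answer: float, accepted_error: float) -> bool:
    """True if response lies in the range answer +/- accepted_error."""
    return abs(response - answer) <= accepted_error


# The 2/3 example: look for 0.665 with an accepted error of 0.0051.
for r in [0.66, 0.6667, 0.67, 0.659, 0.671]:
    print(r, within(r, 0.665, 0.0051))
```

The margin of 0.0051 rather than exactly 0.005 leaves room for the tiny representation errors in 0.66 and 0.67 themselves.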

The Numerical question type does not allow students to input powers; the Unit handling and Units sections provide ways around this. An alternative approach is provided by the Variable numeric sets with units question type.

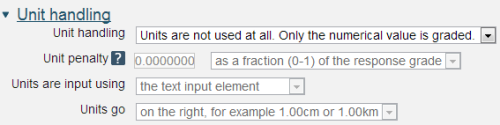

Unit handling: a choice of three options:

- Units are not used at all. Only the numerical value is graded.

- Units are optional. If a unit is entered, it is used to convert the response to Unit 1 before grading.

- The unit must be given and will be graded.

Unit penalty:

Units are input using: a choice of three options:

- the text input element

- a multiple choice selection

- a drop down menu

Units go: left or right.

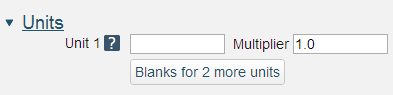

The multiplier is the factor by which the correct numerical response will be multiplied.

The first unit (Unit 1) has a default multiplier of 1. Thus, if the correct numerical response is 5500 and you set W as Unit 1 (with its default multiplier of 1), the correct response is 5500 W.

If you add the unit kW with a multiplier of 0.001, this will add a correct response of 5.5 kW. This means that both 5500 W and 5.5 kW would be marked correct.

Note that the accepted error is also multiplied, so an allowed error of 100W would become an error of 0.1kW.
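The W/kW example can be checked with a small Python sketch. The unit table and the function `is_correct` are hypothetical; they show how scaling both the answer and the accepted error by the unit's multiplier makes 5500 W and 5.5 kW equivalent.

```python
# Hypothetical unit table: Unit 1 (W) has multiplier 1, kW has 0.001.
UNITS = {"W": 1.0, "kW": 0.001}


def is_correct(value: float, unit: str, correct: float = 5500.0,
               accepted_error: float = 100.0) -> bool:
    """Check a response given as a number plus one of the defined units.

    The correct answer and the accepted error are both scaled by the
    unit's multiplier, so 100 W of error becomes 0.1 kW.
    """
    if unit not in UNITS:
        return False
    multiplier = UNITS[unit]
    return abs(value - correct * multiplier) <= accepted_error * multiplier


for value, unit in [(5500, "W"), (5.5, "kW"), (5.45, "kW"), (5.7, "kW")]:
    print(value, unit, is_correct(value, unit))
```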

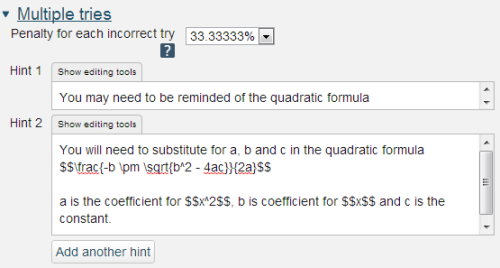

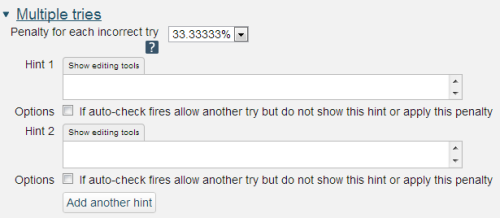

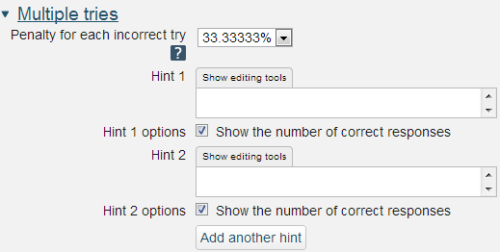

If you are configuring your question to run in 'Interactive with multiple tries' mode, such that students can answer questions one by one, you can also provide hints and second (and third) tries by entering appropriate feedback into the boxes provided in the 'Multiple tries' section of the question definition form.

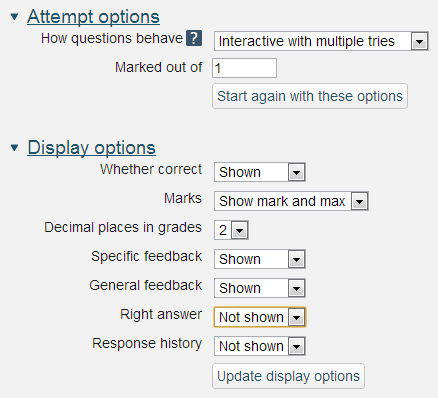

To use this section of the question form please ensure that on the iCMA definition form you have chosen 'How questions behave' = 'Interactive with multiple tries'.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.
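The arithmetic behind the penalty and the number of tries is sketched below in Python. The penalty of 0.3333333 is inferred from the figures quoted above rather than stated in the text, and the assumptions that the penalty is subtracted once per earlier incorrect try and that the score cannot go below zero are ours; both function names are hypothetical.

```python
def score_for_try(try_number: int, penalty: float) -> float:
    """Fraction of the mark for a correct answer on the given try (1-based).

    Assumes the penalty is subtracted once for each earlier incorrect try
    and that the score cannot fall below zero.
    """
    return max(0.0, 1.0 - (try_number - 1) * penalty)


def tries_available(hints: int) -> int:
    """Each hint gives the student one further try."""
    return hints + 1


penalty = 0.3333333  # inferred value for the example above
for t in range(1, tries_available(hints=2) + 1):
    print(t, round(score_for_try(t, penalty), 7))
```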

N.b. If you create a hint and then decide to delete it, so that the question has one fewer hint, you must ensure that all HTML formatting is also removed. To do this, click on the 'HTML' icon in the editor toolbar, which will display the HTML source. If any HTML formatting (tags in angle brackets) is present, delete it and click on the HTML icon again to return to normal view.
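Why leftover tags matter can be illustrated in Python: a hint box that looks empty may still contain markup such as a paragraph with a line break, and such a field is not an empty string. The helper `is_blank_html` is hypothetical and deliberately crude (it removes anything in angle brackets and the `&nbsp;` entity); it is not how Moodle decides whether a hint exists.

```python
import re


def is_blank_html(html: str) -> bool:
    """True if no visible text remains once tags and &nbsp; are removed."""
    text = re.sub(r"<[^>]*>", "", html)  # drop anything in angle brackets
    text = text.replace("&nbsp;", " ")
    return text.strip() == ""


leftover = "<p><br></p>"
print(leftover == "")           # False: the raw field is not empty
print(is_blank_html(leftover))  # True: yet it shows nothing to a student
```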

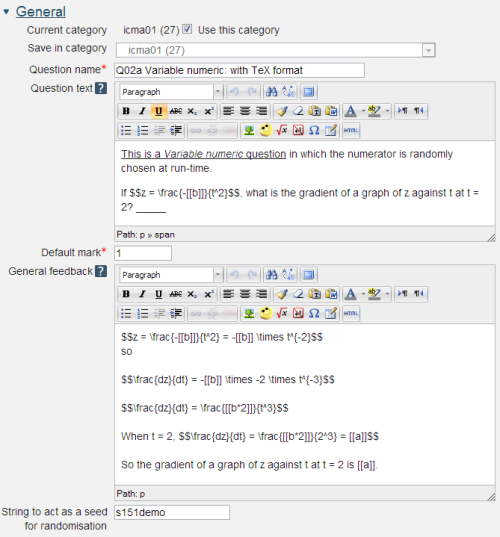

2.1.2 Variable numeric

Variable numeric questions use numeric values and calculations that are performed at runtime following rules laid down in the question. As such it is straightforward to produce numerous variations on a question. However, this places the onus on the author to be sure that all possible variations will function properly and do not inadvertently run into ‘division by zero’ or ‘square root of a minus number’ errors. Please see Variable numeric sets questions for a ‘safer’ alternative.
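One way to discharge that responsibility is to evaluate the formula for every combination the random ranges can produce before releasing the question. The Python sketch below does this for a hypothetical formula c = sqrt(a) / (b - 3) with a drawn from -1 to 4 and b from 1 to 5; the formula, ranges and function names are invented for illustration.

```python
import itertools
import math


def c_formula(a: int, b: int) -> float:
    """Hypothetical question formula: c = sqrt(a) / (b - 3)."""
    return math.sqrt(a) / (b - 3)


def find_failures(a_range, b_range):
    """Evaluate the formula for every combination and collect failures."""
    failures = []
    for a, b in itertools.product(a_range, b_range):
        try:
            c_formula(a, b)
        except (ZeroDivisionError, ValueError) as err:
            # ZeroDivisionError: b = 3; ValueError: square root of a negative a
            failures.append((a, b, type(err).__name__))
    return failures


# Equivalent to a = rand_int(-1, 4) and b = rand_int(1, 5); range() excludes its end.
print(find_failures(range(-1, 5), range(1, 6)))
```

Any non-empty result means the ranges or the formula need changing before students see the question.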

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Displaying the values of variables

Variables that are calculated by the computer are included in text fields by placing the names of the variables within double square brackets, e.g. [[b]]. The formatting of the numbers may be controlled using sprintf()-style controls (http://php.net/manual/en/function.sprintf.php). For example, [[b,.3e]] will display the value of b in scientific notation with 3 decimal places. The most usual formatting choices are ‘d’ for integers, ‘f’ for floating point or ‘e’ for scientific notation.
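The substitution of [[name]] and [[name,format]] can be sketched in Python using its printf-style `%` operator. This is an approximation: Python's formatting is close to, but not identical to, PHP's sprintf (for example, Python writes the exponent as e+04 where PHP writes e+4). The function name and regular expression are assumptions for illustration.

```python
import re

# [[name]] or [[name,format]]; names are lower case, as required above.
PLACEHOLDER = re.compile(r"\[\[([a-z][a-z0-9_]*)(?:,([^\]]+))?\]\]")


def fill_placeholders(text: str, values: dict[str, float]) -> str:
    """Replace each placeholder with the named variable's (formatted) value."""
    def replace(match: re.Match) -> str:
        name, fmt = match.group(1), match.group(2)
        value = values[name]
        if fmt is None:
            return str(value)
        return ("%" + fmt) % value  # e.g. ".3e" becomes "%.3e"
    return PLACEHOLDER.sub(replace, text)


print(fill_placeholders("b is [[b,.3e]] and n is [[n,d]].",
                        {"b": 12345.678, "n": 7}))
```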

You may also include formatted mathematics using TeX or MathML. The text surrounded by two $ symbols in this example will be interpreted by the TeX filter and converted to nicely formatted mathematics. Please see the Including TeX and MathML section below.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores and the length will be determined by the number of underscores. The minimum number of underscores required to trigger this action is 5. If no underscores are present the response box will be placed after the question and will be a full length box.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. We do not recommend that authors rely on using the machine generated 'Right answer' (from the iCMA definition form).

String to act as seed for randomisation: Sometimes it is desirable to base a sequence of questions on the same set of numbers. To do this use the same string e.g. ‘mystring’ as the ‘seed for randomisation’ in each question in the sequence.

Please note that this then generates the same set of numbers across a sequence of questions for a student but different students get different sets of numbers.

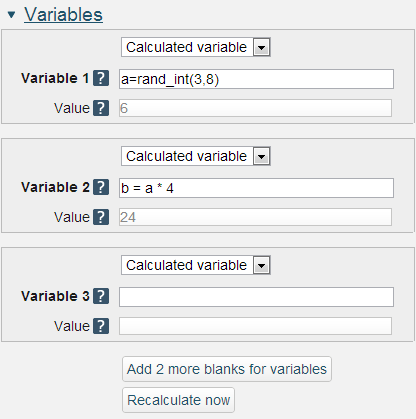

The names of variables must be lower case

As shown on this page variable names should use lower case letters.

The formula for all calculations should start with a variable name followed by an equals (=) sign and may use the common mathematical operators +, -, *, /, brackets ( ), and other variables defined within the question.

The function rand_int(n,m) provides a random integer within the range n to m inclusive. rand_int(n,m) uses the seed described above.
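The effect of the seed string can be imitated in Python. Moodle's actual seeding mechanism is not described here; this is a hypothetical scheme that reproduces the described behaviour by combining the question's seed string with the student's identity, so the same student gets the same numbers in every question that shares the seed. `rand_int` and `numbers_for` are illustrative names.

```python
import random


def rand_int(rng: random.Random, n: int, m: int) -> int:
    """Random integer in the range n to m inclusive."""
    return rng.randint(n, m)  # randint includes both end points


def numbers_for(seed_string: str, student_id: str) -> list[int]:
    """Hypothetical scheme: seed from the question's string plus the student."""
    rng = random.Random(f"{seed_string}:{student_id}")
    return [rand_int(rng, 1, 10) for _ in range(3)]


q1 = numbers_for("mystring", "student-42")
q2 = numbers_for("mystring", "student-42")  # a later question, same seed
print(q1 == q2)                              # True
print(numbers_for("mystring", "student-7"))  # usually different from q1
```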

The mathematical processing that is available is similar to that provided within the Moodle Gradebook and includes

- average(a, b…): Returns the average of a list of arguments.

- max(a, b...): Returns the maximum value in a list of arguments

- min(a,b...): Returns the minimum value in a list of arguments

- mod(dividend, divisor): Calculates the remainder of a division.

- ln(number): Returns the natural logarithm of number.

- log(number): Returns the natural logarithm of number.

- pi(): Returns the value of the number Pi.

- power(base,exponent): Calculates the value of the first argument raised to the power of the second argument.

- round(number, count): Rounds a number to a predefined accuracy.

- sqrt, abs, exp

- sin, sinh, arcsin, asin, arcsinh, asinh

- cos, cosh, arccos, acos, arccosh, acosh

- tan, tanh, arctan, atan, arctanh, atanh
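To show how a sequence of formulas such as c = power(a, 2) + sqrt(b) is evaluated in order, here is a Python sketch that maps a subset of the functions listed above onto Python's `math` module. The mapping is an approximation: `mod` is mapped to `math.fmod` as an assumption, and Python's `round()` rounds exact halves to even whereas PHP's rounds them away from zero. `eval()` is used only to keep the sketch short; it is not a safe way to evaluate untrusted input.

```python
import math

# A subset of the functions listed above, mapped to Python equivalents.
FUNCTIONS = {
    "average": lambda *xs: sum(xs) / len(xs),
    "max": max,
    "min": min,
    "mod": math.fmod,     # assumption: remainder takes the sign of the dividend
    "ln": math.log,
    "log": math.log,      # natural logarithm, as listed above
    "pi": lambda: math.pi,
    "power": math.pow,
    "round": round,       # note: halves round to even in Python
    "sqrt": math.sqrt,
    "abs": abs,
    "exp": math.exp,
    "sin": math.sin,
    "asin": math.asin,
    "arcsin": math.asin,
}


def evaluate(formulas: list[str], values: dict[str, float]) -> dict[str, float]:
    """Evaluate 'name = expression' lines in order, adding each result."""
    values = dict(values)
    for line in formulas:
        name, expression = (part.strip() for part in line.split("=", 1))
        namespace = {**FUNCTIONS, **values}
        values[name] = eval(expression, {"__builtins__": {}}, namespace)
    return values


result = evaluate(["c = power(a, 2) + sqrt(b)", "d = round(c / pi(), 3)"],
                  {"a": 3, "b": 16})
print(result["c"], result["d"])
```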

Recalculate now: With all variables specified the ‘Recalculate now’ button will calculate a set of values within the greyed out boxes.

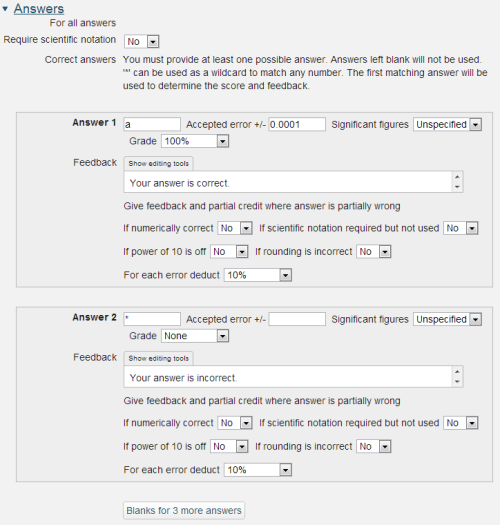

Require scientific notation: It is possible to require the student to enter their response using scientific notation. The input box is provided with a superscript facility and all of the following formats are accepted: 1.234e5, 1.234x10⁵, 1.234X10⁵ and 1.234*10⁵.

For keyboard users the superscript may be accessed with the up-arrow key. Down-arrow returns entry to normal. You may wish to include this information within your question. If you do please note that the up-arrow and down-arrow provided in the HTML editor’s ‘insert custom characters’ list are not spoken by a screen reader and you should also include the words ‘up-arrow’ and ‘down-arrow’.
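The accepted formats can be recognised with a regular expression, as in the Python sketch below. How the superscript facility stores the exponent is not stated here; the sketch assumes it arrives wrapped in `<sup>...</sup>`, and `parse_scientific` is a hypothetical name.

```python
import re
from typing import Optional

# Assumption for this sketch: a superscript exponent arrives wrapped in
# <sup>...</sup>, so 1.234x10 with a superscript 5 is 1.234x10<sup>5</sup>.
SCIENTIFIC = re.compile(
    r"\s*([-+]?\d+(?:\.\d+)?)"                  # mantissa, e.g. 1.234
    r"(?:[eE]([-+]?\d+)"                        # e-notation: 1.234e5
    r"|\s*[xX*]\s*10<sup>([-+]?\d+)</sup>)"     # x, X or * then 10<sup>5</sup>
    r"\s*"
)


def parse_scientific(response: str) -> Optional[float]:
    """Return the value of a response in scientific notation, or None."""
    match = SCIENTIFIC.fullmatch(response)
    if match is None:
        return None
    mantissa, e_exponent, sup_exponent = match.groups()
    exponent = e_exponent if e_exponent is not None else sup_exponent
    # Let float() parse the decimal text so no extra rounding is introduced.
    return float(f"{mantissa}e{exponent}")


for text in ["1.234e5", "1.234x10<sup>5</sup>", "1.234*10<sup>5</sup>", "123400"]:
    print(text, parse_scientific(text))
```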

Answer: This may be a numeric value, a variable as above or a mathematical expression.

Accepted error: Computers store numbers to a finite accuracy, e.g. 1/3 is stored as 0.33333… with only a finite number of 3s. When used in calculations these minor infelicities can soon propagate, so we strongly recommend that answers are matched within a suitable numerical range as shown here.

Significant figures: It is possible to request that responses be given to a specified number of significant figures.

Dealing with responses that are partially wrong

Typically these are responses that have a variation on the correct numerical value e.g.

- are not in scientific format when this has been requested

- have an incorrect number of significant figures

- are wrong by a factor of 10

- have been rounded incorrectly.

In all four of these cases, specific feedback can be supplied using these settings.
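The kinds of partially wrong response listed above can be told apart with simple checks, as in the Python sketch below. These heuristics are hypothetical and the question type's own checks may differ; the sketch also assumes the correct answer is non-zero and counts trailing zeros in whole numbers (e.g. '1200') as significant.

```python
import math


def sig_figs(response: str) -> int:
    """Count significant figures in text such as '0.0450' or '1.23e4'."""
    mantissa = response.lower().split("e")[0].lstrip("+-")
    return len(mantissa.replace(".", "").lstrip("0"))


def round_sig(x: float, sig: int) -> float:
    """Round x (non-zero) to the given number of significant figures."""
    return round(x, sig - 1 - math.floor(math.log10(abs(x))))


def truncate_sig(x: float, sig: int) -> float:
    """Cut x (non-zero) to the given number of significant figures."""
    factor = 10 ** (sig - 1 - math.floor(math.log10(abs(x))))
    return math.trunc(x * factor) / factor


def diagnose(response: str, correct: float, required_sf: int) -> str:
    value = float(response)
    given_sf = sig_figs(response)
    target = round_sig(correct, given_sf)
    if math.isclose(value, target):
        if given_sf == required_sf:
            return "correct"
        return "wrong number of significant figures"
    if any(math.isclose(value * f, target) for f in (10, 0.1)):
        return "out by a factor of 10"
    if math.isclose(value, truncate_sig(correct, given_sf)):
        return "rounded the wrong way"
    return "incorrect"


for r in ["9.88", "9.877", "98.8", "9.87", "5.1"]:
    print(r, diagnose(r, 9.8768, 3))
```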

If you are configuring your question to run in 'Interactive with multiple tries' mode, such that students can answer questions one by one, you can also provide hints and second (and third) tries by entering appropriate feedback into the boxes provided in the 'Multiple tries' section of the question definition form.

To use this section of the question form please ensure that on the iCMA definition form you have chosen 'How questions behave' = 'Interactive with multiple tries'.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

N.b. If you create a hint and then decide to delete it, so that the question has one fewer hint, you must ensure that all HTML formatting is also removed. To do this, click on the 'HTML' icon in the editor toolbar, which will display the HTML source. If any HTML formatting (tags in angle brackets) is present, delete it and click on the HTML icon again to return to normal view.

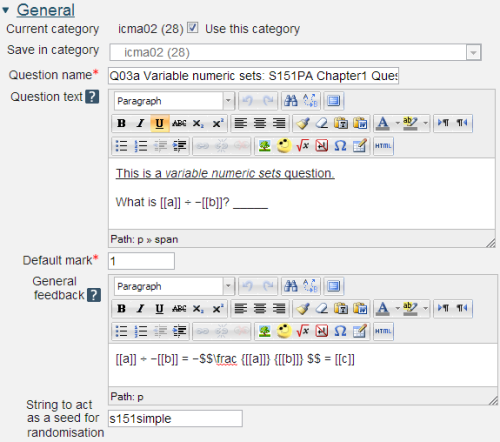

2.1.3 Variable numeric sets

Variable numeric sets questions are similar to Variable numeric questions but are limited to ‘sets’ of pre-specified values. They are therefore safer, because all possible variations can be tested before the question is released to students.

Variable numeric sets questions use numeric values and calculations that are performed at runtime following rules laid down in the question.
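A Python sketch of the idea, assuming that the values at the same position in each variable's list form one set (one variant). The sets, the formula x = a/(-b) and the function names are hypothetical; the point is that a finite list of variants can be checked in full before release.

```python
import random

# Hypothetical sets: each variable lists one value per variant.
SETS = {
    "a": [2.0, 6.0, 10.0],
    "b": [4.0, 3.0, 5.0],
}


def variant(index: int) -> dict[str, float]:
    """All variables take the value at the same position in their lists."""
    return {name: values[index] for name, values in SETS.items()}


def answer(v: dict[str, float]) -> float:
    """Hypothetical calculation: x = a / (-b)."""
    return v["a"] / (-v["b"])


count = len(SETS["a"])
# Because the sets are finite, every variant can be checked before release.
for i in range(count):
    print(i, variant(i), answer(variant(i)))

# At run time one variant is chosen at random.
chosen = variant(random.randrange(count))
```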

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Displaying the values of variables

Variables that are calculated by the computer are included in text fields by placing the names of the variables within double square brackets, e.g. [[a]]. The formatting of the numbers may be controlled using sprintf()-style controls (http://php.net/manual/en/function.sprintf.php). For example, [[a,.3e]] will display the value of a in scientific notation with 3 decimal places. The most usual formatting choices are ‘d’ for integers, ‘f’ for floating point or ‘e’ for scientific notation.

You may also include formatted mathematics using TeX or MathML. The text surrounded by two $ symbols in this example will be interpreted by the TeX filter and converted to nicely formatted mathematics. Please see the Including TeX and MathML section below.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores and the length will be determined by the number of underscores. The minimum number of underscores required to trigger this action is 5. If no underscores are present the response box will be placed after the question and will be a full length box.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. We do not recommend that authors rely on using the machine generated 'Right answer' (from the iCMA definition form).

String to act as seed for randomisation: Sometimes it is desirable to base a sequence of questions on the same set of numbers. To do this use the same sets of numbers and the same string e.g. ‘mystring’ as the ‘seed for randomisation’ in each question in the sequence.

Please note that this then selects the same set of numbers across a sequence of questions for a student but different students get different sets of numbers.

The names of variables must be lower case

As shown on this page variable names should use lower case letters.

The formula for all calculations should start with a variable name followed by an equals (=) sign and may use the common mathematical operators +, -, *, /, brackets ( ), and other variables defined within the question.

The mathematical processing that is available is similar to that provided within the Moodle Gradebook and includes

- average(a, b…): Returns the average of a list of arguments.

- max(a, b...): Returns the maximum value in a list of arguments

- min(a,b...): Returns the minimum value in a list of arguments

- mod(dividend, divisor): Calculates the remainder of a division.

- ln(number): Returns the natural logarithm of number.

- log(number): Returns the natural logarithm of number.

- pi(): Returns the value of the number Pi.

- power(base,exponent): Calculates the value of the first argument raised to the power of the second argument.

- round(number, count): Rounds a number to a predefined accuracy.

- sqrt, abs, exp

- sin, sinh, arcsin, asin, arcsinh, asinh

- cos, cosh, arccos, acos, arccosh, acosh

- tan, tanh, arctan, atan, arctanh, atanh

Recalculate now: With all variables specified, the ‘Recalculate now’ button will calculate a set of values within the greyed-out boxes.

Require scientific notation: It is possible to require the student to enter their response using scientific notation. The input box is provided with a superscript facility and all of the following formats are accepted: 1.234e5, 1.234x10⁵, 1.234X10⁵ and 1.234*10⁵.

For keyboard users the superscript may be accessed with the up-arrow key. Down-arrow returns entry to normal. You may wish to include this information within your question. If you do please note that the up-arrow and down-arrow provided in the HTML editor’s ‘insert custom characters’ list are not spoken by a screen reader and you should also include the words ‘up-arrow’ and ‘down-arrow’.

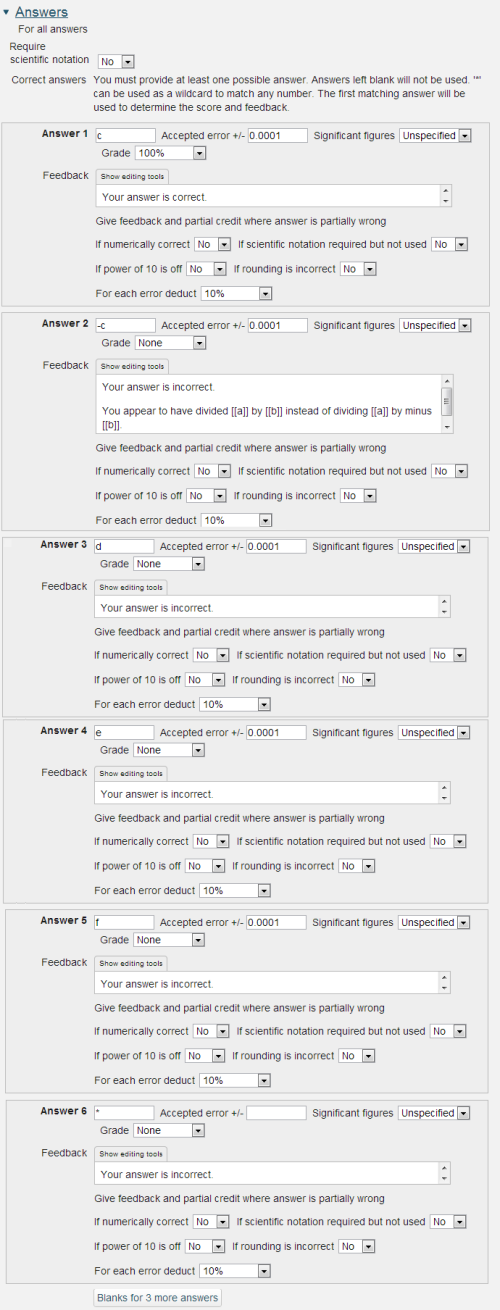

Answer: This may be a numeric value, a variable as above or a mathematical expression. For the example shown here it would have been quite acceptable to place ‘a/(-b)’ in the Answer box.

Accepted error: Computers store numbers to a finite accuracy, e.g. 1/3 is stored as 0.33333… with only a finite number of 3s. When used in calculations these minor infelicities can soon propagate, so we strongly recommend that answers are matched within a suitable numerical range as shown here.

Significant figures: It is possible to request that responses be given to a specified number of significant figures.

Dealing with responses that are partially wrong

Typically these are responses that have a variation on the correct numerical value e.g.

- are not in scientific format when this has been requested

- have an incorrect number of significant figures

- are wrong by a factor of 10

- have been rounded incorrectly.

In all four of these cases, specific feedback can be supplied using these settings.

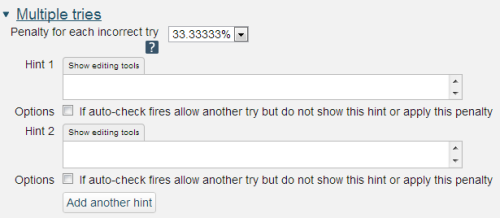

If you are configuring your question to run in 'Interactive with multiple tries' mode, such that students can answer questions one by one, you can also provide hints and second (and third) tries by entering appropriate feedback into the boxes provided in the 'Multiple tries' section of the question definition form.

To use this section of the question form please ensure that on the iCMA definition form you have chosen 'How questions behave' = 'Interactive with multiple tries'.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

N.b. If you create a hint and then decide to delete it, so that the question has one fewer hint, you must ensure that all HTML formatting is also removed. To do this, click on the 'HTML' icon in the editor toolbar, which will display the HTML source. If any HTML formatting (tags in angle brackets) is present, delete it and click on the HTML icon again to return to normal view.

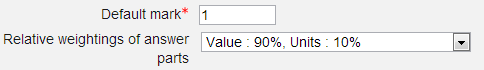

2.1.4 Variable numeric sets with units

This extends Variable numeric sets by adding a Pattern match response-matching field to match the units.

The description below deals only with the differences from Variable numeric sets questions.

General

The grade for the numeric value and the unit can have different weights.

Units

The question type splits the numeric value from the units before matching the units using the Pattern match algorithm.

The full capabilities of the Pattern match question are described in section 2.2.1.
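Splitting a response into its number and its units, and weighting the two parts separately, can be sketched in Python. The 75/25 weights and all names are hypothetical, and the unit check is simplified to an exact lookup in a set; the real question type matches the units with a Pattern match expression.

```python
import re
from typing import Optional, Tuple

# A number (optionally in e-notation) followed by whatever text remains.
NUMBER_THEN_UNIT = re.compile(r"\s*([-+]?\d+(?:\.\d+)?(?:[eE][-+]?\d+)?)\s*(.*?)\s*")


def split_response(response: str) -> Optional[Tuple[float, str]]:
    """Split '9.81 m s-2' into (9.81, 'm s-2'); None if there is no leading number."""
    match = NUMBER_THEN_UNIT.fullmatch(response)
    if match is None:
        return None
    return float(match.group(1)), match.group(2)


def grade(response: str, correct: float, error: float, accepted_units: set,
          value_weight: float = 0.75, unit_weight: float = 0.25) -> float:
    """Weighted grade for the numeric value and the unit (simplified unit check)."""
    parts = split_response(response)
    if parts is None:
        return 0.0
    value, unit = parts
    value_ok = abs(value - correct) <= error
    unit_ok = unit in accepted_units
    return value_weight * value_ok + unit_weight * unit_ok


print(grade("9.8 m s-2", 9.81, 0.05, {"m s-2"}))
print(grade("9.8 m", 9.81, 0.05, {"m s-2"}))
```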

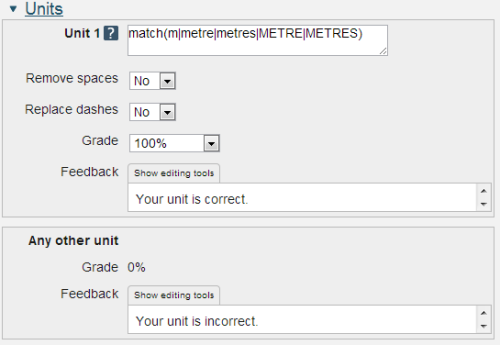

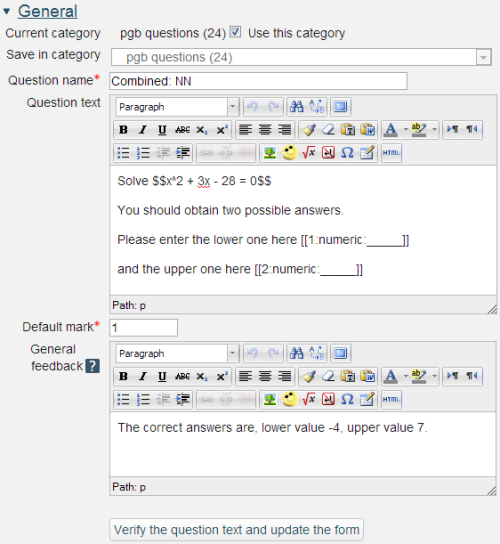

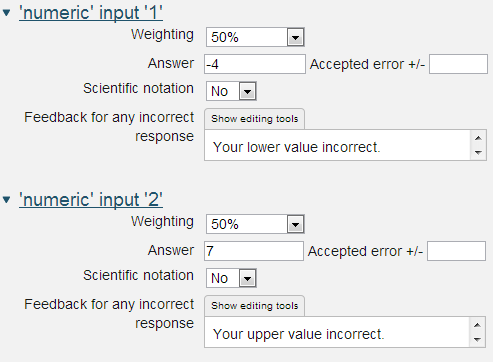

2.1.5 Combined

The Combined question enables multiple numeric responses to be matched.

The full capabilities of the Combined question are described in section 2.4.1.

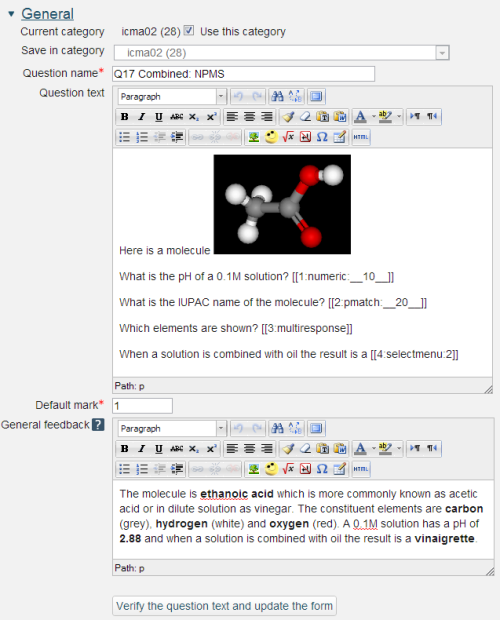

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Numeric response fields

The response fields have the form

[[name:type:size]]

where name may be alphanumeric, up to 8 characters (in our example we’ve just used numbers), type is 'numeric', and size should be one of:

- __10__, where 10 is the width of the displayed input box in characters

- _____ (i.e. without a number), which provides a box equivalent in length to the number of underscores.

After adding new input fields, or to remove unwanted empty input fields, click the ‘Verify the question text and update the form’ button. At this point your question text will be validated.
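The field syntax can be recognised with a regular expression, as in this Python sketch. It is a simplified illustration: unlike the form's validation, which reports problems, it silently ignores fields it cannot parse (such as a name longer than 8 characters). `parse_fields` is a hypothetical name.

```python
import re

# [[name:type:size]], where size is __10__ (explicit width) or a run of underscores.
FIELD = re.compile(r"\[\[([A-Za-z0-9]{1,8}):(\w+):(__\d+__|_+)\]\]")


def parse_fields(question_text: str) -> list[dict]:
    """List the response fields found in the question text."""
    fields = []
    for name, kind, size in FIELD.findall(question_text):
        digits = size.strip("_")
        width = int(digits) if digits.isdigit() else len(size)  # __10__ -> 10, _____ -> 5
        fields.append({"name": name, "type": kind, "width": width})
    return fields


print(parse_fields("x = [[1:numeric:__10__]] and y = [[2:numeric:_____]]"))
```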

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. There is no system generated 'Right answer' (from the iCMA definition form) for Combined questions.

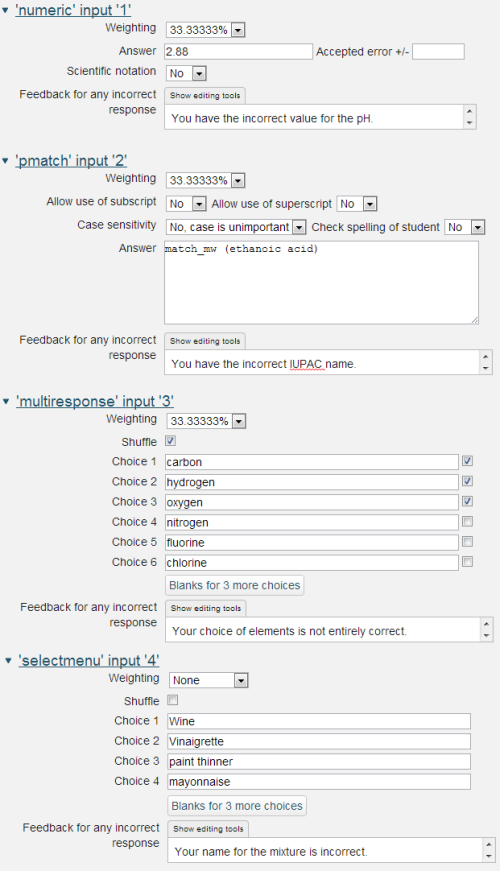

The Combined question uses a restricted form of the Variable numeric response matching.

Weighting: Different responses can have different percentages of the total mark. The weightings must add up to 100%.

Answer: an integer or decimal number. Use 'E' format for very large or very small values.

Accepted error: Only rely on exact values for integers; for all other values, specify a suitable range.

Scientific notation: It is possible to enable the student to enter their response using scientific notation. The input box is provided with a superscript facility and all of the following formats are accepted: 1.234e5, 1.234x10⁵, 1.234X10⁵ and 1.234*10⁵.
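How weightings, answers and accepted errors might combine into a score is sketched below in Python. It assumes each field is marked all-or-nothing and contributes its weighting when correct; the `Field` record and function names are hypothetical, and the real scoring may differ.

```python
from dataclasses import dataclass


@dataclass
class Field:
    weight: float          # percentage of the total mark
    answer: float
    accepted_error: float  # 0 for exact integer answers


def check_weights(fields: dict[str, Field]) -> None:
    """Reject weightings that do not add up to 100%."""
    total = sum(f.weight for f in fields.values())
    if abs(total - 100) > 1e-9:
        raise ValueError(f"weightings add to {total}%, not 100%")


def combined_score(fields: dict[str, Field], responses: dict[str, float]) -> float:
    """Fraction of the mark: the weights of correctly answered fields, over 100."""
    check_weights(fields)
    earned = sum(f.weight for name, f in fields.items()
                 if abs(responses[name] - f.answer) <= f.accepted_error)
    return earned / 100


fields = {"1": Field(60, 12, 0), "2": Field(40, 0.75, 0.01)}
print(combined_score(fields, {"1": 12, "2": 0.8}))
```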

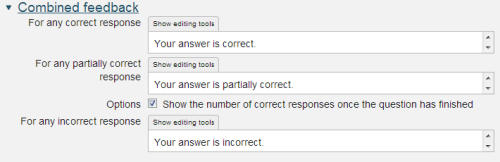

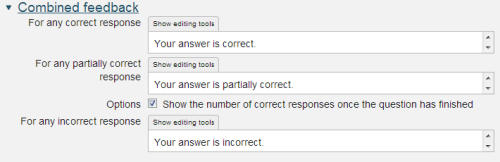

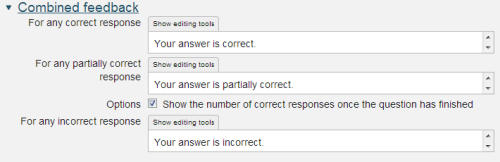

Feedback for any incorrect response: Where some fields are answered correctly and others incorrectly, the intention is that the feedback associated with each field is used to say what is wrong. Correct answers can be counted using the option to 'Show the number of correct responses' in the Combined feedback and Hints fields.

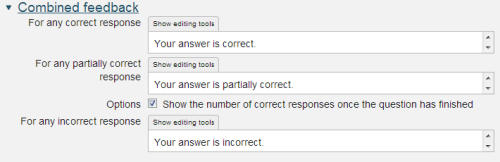

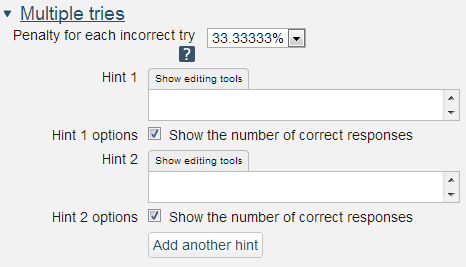

If you are configuring your question to run in 'Interactive with multiple tries' mode, such that students can answer questions one by one, you can also provide hints and second (and third) tries by entering appropriate feedback into the boxes provided in the 'Multiple tries' section of the question definition form.

To use this section of the question form please ensure that on the iCMA definition form you have chosen 'How questions behave' = 'Interactive with multiple tries'.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

2.2 Text response

Four question types will accept typed textual responses.

Pattern match

Pattern match has a range of features:

- the ability to cater for misspellings, with and without an English dictionary

- specification of synonyms and alternative phrases

- flexible word order

- checks on the proximity of words.

Short answer

This is the original Moodle text matching question type but it is very limited.

Combined

One or more Pattern match response fields can be combined with any of numeric, OU multiple response and Select missing words.

Essay

The Essay question has to be marked by hand.

2.2.1 Pattern match

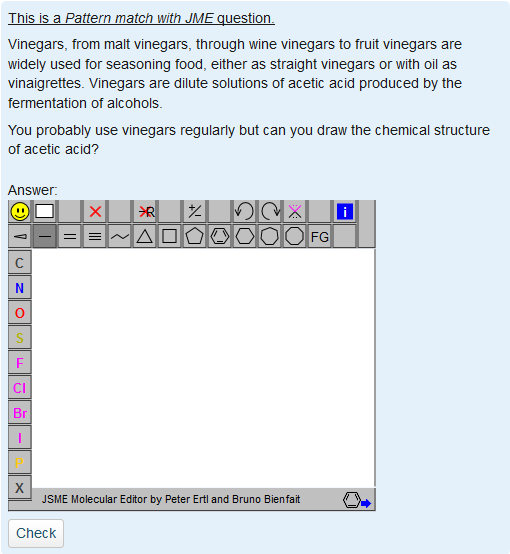

The Pattern match question type is used to test if a short free-text student response matches a specified response pattern.

Pattern match is a more sophisticated alternative to the Short answer question type and offers:

- the ability to cater for misspellings, with and without an English dictionary

- specification of synonyms and alternative phrases

- flexible word order

- checks on the proximity of words.

For certain types of response it has been shown to provide an accuracy of marking that is on a par with, or better than, that provided by a cohort of human markers.

Pattern match works on the basis that you have a student response which you wish to match against any number of response matching patterns. Each pattern is compared in turn until a match is found and feedback and marks are assigned.
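The compare-in-turn process can be illustrated with a toy Python sketch. This is NOT the Pattern match syntax or algorithm: the patterns here are hypothetical groups of alternative words, each of which must appear somewhere in the response, which mimics only synonyms and flexible word order (not misspellings or proximity).

```python
import re

# Toy patterns: (groups of alternative words, grade, feedback).
# Every group must be matched by at least one of its words, in any order.
PATTERNS = [
    ([{"heat", "warm", "warming"}, {"expand", "expands", "expansion"}], 1.0, "Correct."),
    ([{"heat", "warm", "warming"}], 0.5, "Partly right: what happens to the metal?"),
]


def words(response: str) -> set:
    """Lower-case words in the response."""
    return set(re.findall(r"[a-z]+", response.lower()))


def mark_text(response: str) -> tuple[float, str]:
    found = words(response)
    for groups, grade, feedback in PATTERNS:  # each pattern compared in turn
        if all(group & found for group in groups):
            return grade, feedback             # the first match wins
    return 0.0, "Not right."


print(mark_text("The metal expands when you heat it"))
print(mark_text("Warming it up"))
```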

The key to using Pattern match is in asking questions that you have a reasonable hope of marking accurately. Hence writing the question stem is the most important part of writing these questions.

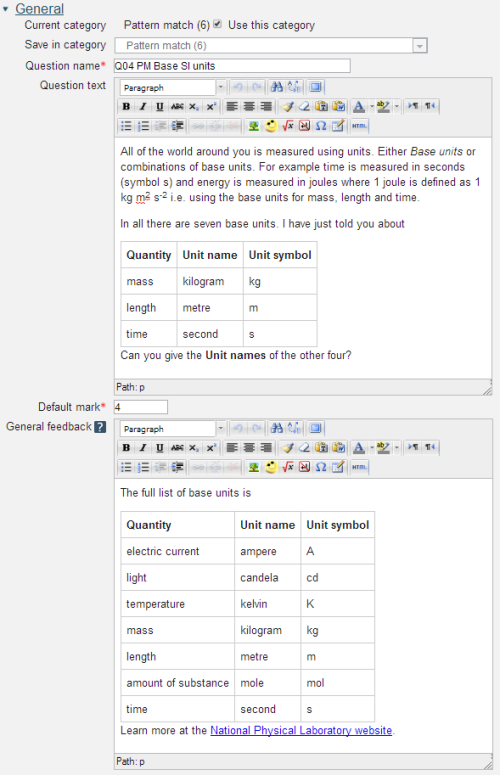

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores. The minimum number of underscores required to trigger this action is 5. The size of the input box may also be specified by __XxY__ e.g. __20x1__ will produce a box 20 columns wide and 1 row high. If no underscores are present the response box will be placed after the question.
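The placement rules can be expressed as a regular expression. The Python sketch below is only an illustration of the rules just described. The function name `find_response_box` is hypothetical, and Moodle's own parsing may differ in edge cases.

```python
import re
from typing import Optional, Tuple

# A sized placeholder such as __20x1__, or a plain run of five or more underscores.
PLACEHOLDER = re.compile(r"__(\d+)x(\d+)__|_{5,}")


def find_response_box(question_text: str) -> Optional[Tuple[int, Optional[Tuple[int, int]]]]:
    """Return (position, (columns, rows) or None); None means the box goes after the question."""
    m = PLACEHOLDER.search(question_text)
    if m is None:
        return None
    if m.group(1) is not None:
        return m.start(), (int(m.group(1)), int(m.group(2)))
    return m.start(), None  # plain underscores: no explicit size given


print(find_response_box("Name the unit: __20x1__"))
print(find_response_box("Only ____ here"))
```

Four underscores are not enough to trigger the placement, so the second call finds no placeholder.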

Default mark: Decide on how to score your questions and be consistent.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. For the Pattern match question type you cannot rely on using the machine generated 'Right answer' (from the iCMA definition form).

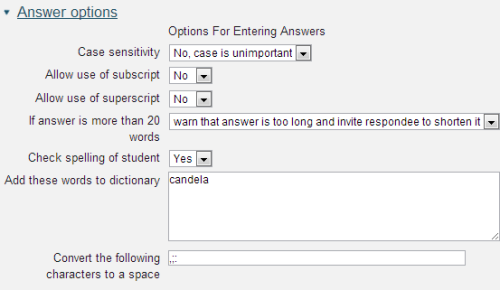

Case sensitivity: No or yes.

Allow use of subscript/superscript: No or Yes. Any subscripts or superscripts entered by the student are contained in their response between the standard tags <sub> and </sub> or <sup> and </sup>. For example:

- The formula for water is H<sub>2</sub>O

- The speed of light is approximately 3x10<sup>8</sup> m s<sup>-1</sup>

At run time keyboard users may move between normal, subscript and superscript by using the up-arrow and down-arrow keys. You may wish to include this information within your question. If you do please note that the up-arrow and down-arrow provided in the HTML editor’s ‘insert custom characters’ list are not spoken by a screen reader and you should also include the words ‘up-arrow’ and ‘down-arrow’.

If answer is more than 20 words: We strongly recommend that you limit responses to 20 words. Allowing unconstrained responses often results in responses that are both right and wrong – which are difficult to mark consistently one way or the other.

Check spelling of student: How many ways do you know of to spell ‘temperature’? We’ve seen 14! You will improve the marking accuracy by insisting on words that are in the Moodle system dictionary.

Add these words to dictionary: When dealing with specialised scientific, technical and medical terms that are not in a standard dictionary it is most likely that you will have to add them by using this field. Enter your words leaving a space between them.

Convert the following characters to space: In Pattern match words are defined as sequences of characters between spaces. The exclamation mark and question mark are also taken to mark the end of a word. The period is a special case; as a full stop it is also a word delimiter but as the decimal point it is not. All other punctuation is considered to be part of the response but this option lets you remove it.
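The word-splitting rules can be sketched in a few lines of Python. This is a minimal approximation, not Pattern match's own tokeniser. In particular, we assume that a full stop with a digit on both sides is a decimal point, and that any other full stop ends a word. The function name `split_words` is hypothetical.

```python
import re
from typing import List


def split_words(response: str, convert_to_space: str = "") -> List[str]:
    """Split a response into words following the rules described above (a sketch)."""
    # Characters the author chose to 'Convert to space'.
    for ch in convert_to_space:
        response = response.replace(ch, " ")
    # Exclamation and question marks always end a word.
    response = re.sub(r"[!?]", " ", response)
    # A full stop ends a word; a '.' between two digits is taken as a decimal point.
    response = re.sub(r"(?<!\d)\.|\.(?!\d)", " ", response)
    # Words are the runs of characters between spaces; other punctuation stays attached.
    return response.split()
```

For example, `split_words("tom, dick and harry")` keeps the comma as part of `tom,`. Passing `convert_to_space=","` removes it.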

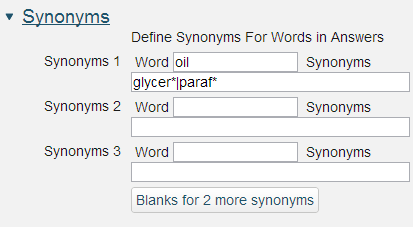

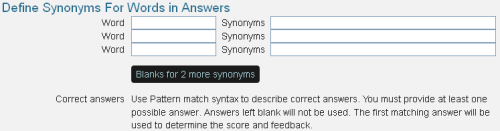

Words and synonyms: All words and synonyms are specified as they are to be applied by the response matching. They do not have to be full words but can be stems with a wildcard.

Synonyms may only be single words, i.e. you cannot specify alternative phrases in synonym lists.

From the example above, any occurrence of the word oil in a response-matching pattern will be replaced by oil|glycer*|paraf* before the match is carried out.
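The substitution is a simple textual expansion of the pattern words. The Python sketch below assumes that each word's synonyms are held as an OR list such as `glycer*|paraf*`, and that it is applied to the words inside a match's brackets. The name `expand_synonyms` is hypothetical.

```python
from typing import Dict


def expand_synonyms(pattern_words: str, synonyms: Dict[str, str]) -> str:
    """Replace each pattern word that has synonyms by 'word|synonym|synonym...'."""
    expanded = []
    for word in pattern_words.split():
        if word in synonyms:
            expanded.append(word + "|" + synonyms[word])
        else:
            expanded.append(word)
    return " ".join(expanded)


# Synonyms for 'oil', written as an OR list (wildcards allowed).
print(expand_synonyms("oil float* water", {"oil": "glycer*|paraf*"}))
```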

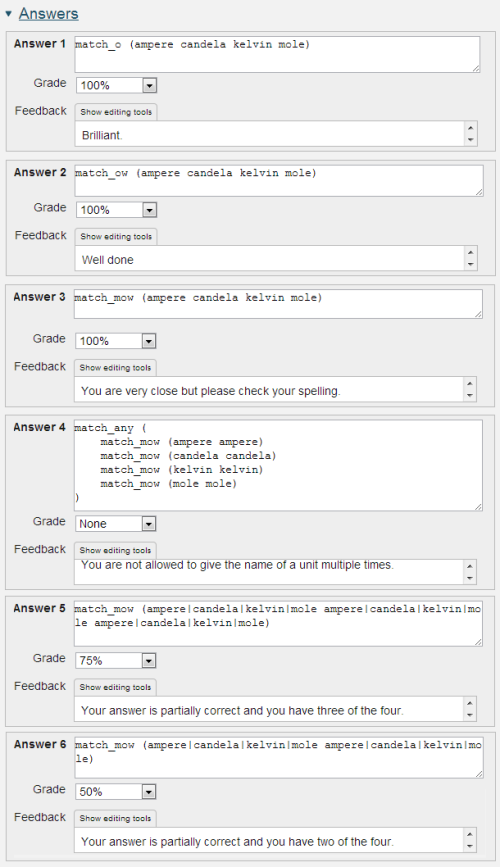

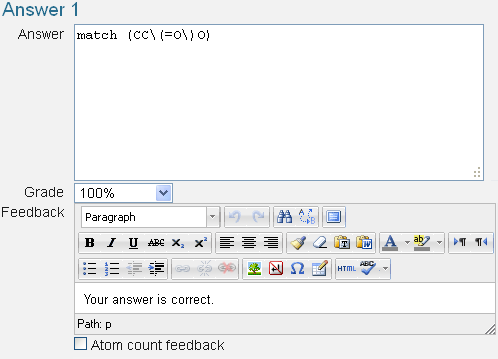

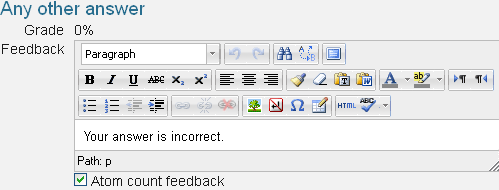

Answer: This example shows that Pattern match will support complex response matching.

Take the first answer field: match_o(ampere candela kelvin mole) is an exact match for the four words, with the additional feature that the matching option 'o' allows the words to be given in any order.

The answer field match_ow(ampere candela kelvin mole) requires the same four words, again in any order, but also allows other words.

The third answer field match_mow(ampere candela kelvin mole) allows for misspellings which are still in the dictionary e.g. mule instead of mole.

Please see the section on Pattern match syntax for a full description.

Grade: Between ‘none’ and 100%. At least one response must have a mark of 100%.

Feedback: Specific feedback that is provided to anyone whose response is matched by the response matching rule in Answer.

The feedback for all non-matched responses should go into the ‘Any other answer’ field.

________________________________________________________________________

The basic unit of the student response that Pattern match operates on is the word, where a word is defined as a sequence of characters between spaces. The full stop (but not the decimal point), exclamation mark and question mark are also treated as ending a word.

Numbers are special instances of words and are matched by value, not by the form in which they are given. match_w(25 ms-1) will match the following correct responses: 25 ms-1, 2.5e1 ms-1, 2.5x10<sup>1</sup> ms-1.
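Matching by value means converting each numeric word to a number before comparing. The Python sketch below accepts plain decimals, e-notation and a power of ten written as `x10^1` or `x10<sup>1</sup>`. These forms are assumptions chosen to mirror the example, and Pattern match's real number grammar may accept more. The function names are hypothetical.

```python
import math
import re
from typing import Optional

# Mantissa, optionally followed by e-notation (2.5e1) or a power of ten
# written as x10^1 or x10<sup>1</sup>. The accepted forms are assumptions.
NUMBER = re.compile(
    r"([+-]?\d+(?:\.\d+)?)"
    r"(?:[eE]([+-]?\d+)|[xX]10(?:\^|<sup>)([+-]?\d+)(?:</sup>)?)?"
)


def number_value(word: str) -> Optional[float]:
    """Numeric value of a word, or None if the word is not a number."""
    m = NUMBER.fullmatch(word)
    if m is None:
        return None
    mantissa = float(m.group(1))
    exponent = m.group(2) or m.group(3)
    return mantissa * 10 ** int(exponent) if exponent else mantissa


def same_number(pattern_word: str, response_word: str) -> bool:
    """Numbers match by value, whatever form they are written in."""
    a, b = number_value(pattern_word), number_value(response_word)
    return a is not None and b is not None and math.isclose(a, b, rel_tol=1e-9)


for written in ("25", "2.5e1", "2.5x10<sup>1</sup>", "2.5x10^-1"):
    print(written, same_number("25", written))
```

The comparison uses a relative tolerance. This means floating-point rounding in forms such as `2.5e1` cannot cause a spurious mismatch.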

With the exception of numbers and the word terminators (

The response is treated as a whole with the exception that words that are required to be in proximity must also be in the same sentence.

Pattern match syntax

The match syntax can be considered in three parts.

- the matching options e.g. mow

- the words to be matched, e.g. tom dick harry, together with the in-word special characters

- and, or and not combinations of matches e.g. match_any()

| Matching option | Symbol | Description |
| --- | --- | --- |
| allowExtraChars | c | Extra characters can be anywhere within the word. Authors are expected to omit allowExtraChars when using the misspelling options below. |
| allowAnyWordOrder | o | Where multiple words are to be matched they can be in any order. |
| allowExtraWords | w | Extra words beyond those being searched for are accepted. |
| misspelling: allowReplaceChar | mr | Will match a word where one character is different to those specified in the pattern. The pattern word must be 4 characters or greater, excluding wildcards, for replacement to kick in. Authors are expected to omit allowExtraChars when using this option. |
| misspelling: allowTransposeTwoChars | mt | Will match a word where two characters are transposed. The pattern word must be 4 characters or greater, excluding wildcards, for transposition to kick in. Authors are expected to omit allowExtraChars when using this option. |
| misspelling: allowExtraChar | mx | Will match a word where one character is extra to those specified in the pattern. The pattern word must be 3 characters or greater, excluding wildcards, for extra to kick in. Authors are expected to omit allowExtraChars when using this option. |
| misspelling: allowFewerChar | mf | Will match a word where one character is missing from those specified in the pattern. The pattern word must be 4 characters or greater, excluding wildcards, for fewer to kick in. Without this limit, 'no' would be reduced to just matching 'n' or 'o'. Authors are expected to omit allowExtraChars when using this option. |
| misspelling | m | This combines the four ways of misspelling a word described above, i.e. m is equivalent to mxfrt. Authors are expected to omit allowExtraChars when using this option. |
| misspellings | m2 | Allows two misspellings, as defined by option 'm', in pattern words of 8 characters or more, excluding wildcards. Authors are expected to omit allowExtraChars when using this option. N.B. Use this option sparingly. It introduces a huge number of possible acceptable spellings, all of which have to be checked, so the time taken for the match to complete rises sharply. |
| allowProximityOf0 | p0 | No words, or full stops, are allowed in between any words specified in the proximity sequence. |
| allowProximityOf1 | p1 | One word is allowed in between any two words specified in the proximity sequence. The words must not span sentences. |
| allowProximityOf2 | p2 | (Default value) Two words are allowed in between any two words specified in the proximity sequence. The words must not span sentences. |
| allowProximityOf3 | p3 | Three words are allowed in between any two words specified in the proximity sequence. The words must not span sentences. |
| allowProximityOf4 | p4 | Four words are allowed in between any two words specified in the proximity sequence. The words must not span sentences. |
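The four single-misspelling rules, with their minimum pattern lengths, can be sketched as follows. This is a minimal Python illustration of the table, not the Pattern match implementation. It ignores wildcards, and it assumes that 'transposed' means two neighbouring characters swapped. The function names are hypothetical.

```python
def misspelt_once(pattern: str, word: str) -> bool:
    """True if word differs from pattern by one 'm' misspelling (mr, mt, mx or mf).

    Length limits follow the table; wildcards are not handled in this sketch.
    """
    n = len(pattern)
    if n >= 4 and len(word) == n:
        diffs = [i for i in range(n) if pattern[i] != word[i]]
        # mr: exactly one character replaced.
        if len(diffs) == 1:
            return True
        # mt: two neighbouring characters swapped (adjacency is an assumption).
        if (len(diffs) == 2 and diffs[1] == diffs[0] + 1
                and pattern[diffs[0]] == word[diffs[1]]
                and pattern[diffs[1]] == word[diffs[0]]):
            return True
    # mx: one extra character anywhere in the word.
    if n >= 3 and len(word) == n + 1:
        if any(word[:i] + word[i + 1:] == pattern for i in range(len(word))):
            return True
    # mf: one character missing from the word.
    if n >= 4 and len(word) == n - 1:
        if any(pattern[:i] + pattern[i + 1:] == word for i in range(n)):
            return True
    return False


def matches_with_m(pattern: str, word: str) -> bool:
    """Option 'm': an exact match or a single misspelling."""
    return word == pattern or misspelt_once(pattern, word)
```

With these rules, 'rick' matches 'dick' and 'tom,' matches 'tom' (one extra character). However, 'n' does not match 'no', because that pattern word is shorter than the 4-character minimum for mf.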

The matching options are appended to the word match with an intervening underscore and may be combined. A typical match combines the options ‘mow’ to allow for

- misspellings

- any word order

- any extra words

and is written match_mow(words to be matched).
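An option string such as `mow` or `m2ow` can be expanded into the named options from the table. The Python sketch below is illustrative only. The name `allowTwoMisspellings` for `m2` is hypothetical, since the table simply calls it 'misspellings'. The sketch also does not add the default `p2`.

```python
import re
from typing import Set

# One option token: m2, m followed by any of x/f/r/t, p0 to p4, or c/o/w.
TOKEN = re.compile(r"m2|m[xfrt]*|p[0-4]|[cow]")

MISSPELLING = {"x": "allowExtraChar", "f": "allowFewerChar",
               "r": "allowReplaceChar", "t": "allowTransposeTwoChars"}
SIMPLE = {"c": "allowExtraChars", "o": "allowAnyWordOrder", "w": "allowExtraWords"}


def parse_options(options: str) -> Set[str]:
    """Expand an option string such as 'mow' into the names used in the table."""
    result: Set[str] = set()
    pos = 0
    while pos < len(options):
        m = TOKEN.match(options, pos)
        if m is None:
            raise ValueError(f"unknown matching option at {options[pos:]!r}")
        token = m.group()
        if token == "m2":
            # 'misspellings': two misspellings of any of the four kinds.
            result |= set(MISSPELLING.values()) | {"allowTwoMisspellings"}  # hypothetical name
        elif token.startswith("m"):
            # A bare 'm' means all four kinds, i.e. 'mxfrt'.
            result |= {MISSPELLING[ch] for ch in (token[1:] or "xfrt")}
        elif token.startswith("p"):
            result.add("allowProximityOf" + token[1])
        else:
            result.add(SIMPLE[token])
        pos = m.end()
    return result


print(sorted(parse_options("mow")))
```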

________________________________________________________________________

Within a word 'special characters' provide more localised control of the patterns.

| Special character | Symbol | Description |
| --- | --- | --- |
| Word AND | space | 'space' delimits words and acts as the logical AND. |
| Word OR | \| | \| between words indicates that either word will be matched; it delimits words and acts as the logical OR. |
| Proximity control | _ | Words must be in the order given and with no more than n (where n is 0 to 4) intervening words. All words under the proximity control must be in the same sentence. _ delimits words and also acts as the logical AND. Other words included in the match that are not under proximity control must lie outside the words under proximity control in the response for them to be matched. For example, 'match(abcd_ffff ccc)' does match the response 'abcd ffff ccc' but does not match the response 'abcd ccc ffff'. |
| Word groups | [ ] | [ ] enables multiple words to be accepted as an alternative to other single words in OR lists. [ ] may not be nested. Single words may be OR'd inside [ ]. Where a word group is preceded or followed by the proximity control, the word group is governed by the proximity control rule that the words must be in the order given. |
| Single character wildcard | ? | Matches any single character. |
| Multiple character wildcard | * | Matches any sequence of characters including none. |

It is possible to match some of the special characters by ‘escaping’ them with the ‘\’ character. So match(\|) will match ‘|’. Ditto for _, [, ], and *. And if you wish to match round brackets then match(\(\)) will match exactly ‘()’.
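The two wildcards and the backslash escape map directly onto regular expressions. The Python sketch below shows that translation for a single pattern word. It ignores case handling and all the matching options, and the function names are hypothetical.

```python
import re


def word_to_regex(pattern_word: str) -> str:
    """Translate one pattern word into a Python regular expression (a sketch)."""
    out = []
    i = 0
    while i < len(pattern_word):
        ch = pattern_word[i]
        if ch == "\\" and i + 1 < len(pattern_word):
            out.append(re.escape(pattern_word[i + 1]))  # escaped: match literally
            i += 2
            continue
        if ch == "?":
            out.append(".")        # any single character
        elif ch == "*":
            out.append(".*")       # any sequence of characters, including none
        else:
            out.append(re.escape(ch))
        i += 1
    return "".join(out)


def word_matches(pattern_word: str, word: str) -> bool:
    """The whole word must match the pattern word."""
    return re.fullmatch(word_to_regex(pattern_word), word) is not None
```

For example, `word_matches("mov*", "moving")` is true, and so is `word_matches("\\*", "*")`.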

________________________________________________________________________

| Combination | Description |
| --- | --- |
| match_all() | All matches contained within the brackets must be true. |
| match_any() | Just one of the matches contained within the brackets must be true. |
| not() | The match within the brackets must be false. |

match_all(), match_any() and not() may all be nested.
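Nesting behaves like an ordinary tree of boolean operators. The Python sketch below models that tree. The classes `Match`, `MatchAll`, `MatchAny` and `Not` are hypothetical, and the single-match test used here is simple word containment rather than a real Pattern match expression.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass
class Match:
    test: Callable[[str], bool]  # stands in for a single match_xxx(...) expression


@dataclass
class MatchAll:
    parts: List["Node"]


@dataclass
class MatchAny:
    parts: List["Node"]


@dataclass
class Not:
    part: "Node"


Node = Union[Match, MatchAll, MatchAny, Not]


def evaluate(node: Node, response: str) -> bool:
    if isinstance(node, Match):
        return node.test(response)
    if isinstance(node, MatchAll):
        return all(evaluate(p, response) for p in node.parts)
    if isinstance(node, MatchAny):
        return any(evaluate(p, response) for p in node.parts)
    if isinstance(node, Not):
        return not evaluate(node.part, response)
    raise TypeError(f"unknown node {node!r}")


def has_word(word: str) -> Match:
    """Placeholder single match: the response contains this exact word."""
    return Match(lambda r: word in r.split())


# Roughly: match_all(match_any(tom, thomas), not(jane))
rule = MatchAll([MatchAny([has_word("tom"), has_word("thomas")]), Not(has_word("jane"))])
print(evaluate(rule, "thomas married maud"))
```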

________________________________________________________________________

| Student response | Matching options | Pattern match | match method return |
| --- | --- | --- | --- |
| tom dick harry | empty | tom dick harry | True. This is the exact match. |
| thomas | c | tom | True. Extra characters are allowed anywhere within the word. |
| tom, dick and harry | w | dick | True. Extra words are allowed anywhere within the sentence. |
| harry dick tom | o | tom dick harry | True. Any order of words is allowed. |
| rick | m | dick | True. One character in the word can differ. |
| rick and harry and tom | mow | tom dick harry | True. |
| dick and harry and thomas | cow | tom dick harry | True. |
| arthur, harry and sid | mow | tom\|dick\|harry | True. Any of tom or dick or harry will be matched. |
| tom, harry and sid | mow | tom\|dick harry\|sid | True. The pattern requires either tom or dick AND harry or sid. Note that 'tom,' is only allowed because m allows the extra character, the comma, in 'tom,'. |
| tom was mesmerised by maud | mow | [tom maud]\|[sid jane] | True. The pattern requires either (tom and maud) or (sid and jane). |
| rick | empty | ?ick | True. The first character can be anything. |
| harold | empty | har* | True. Any sequence of characters can follow 'har'. |
| tom married maud, sid married jane. | mow | tom_maud | True. Only one word is between tom and maud. |
| maud married tom, sid married jane. | mow | tom_maud | False. The proximity control also specifies word order and over-rides the allowAnyWordOrder matching option. |
| tom married maud, sid married jane. | mow | tom_jane | False. By default (p2) only two words are allowed between tom and jane; here there are four. |
| tom married maud | mow | tom\|thomas marr* maud | True. |
| maud marries thomas | mow | tom\|thomas marr* maud | True. |
| tom is to marry maud | mow | tom\|thomas marr* maud | True. |
| tempratur | m2ow | temperature | True. Two characters are missing. |
| temporatur | m2ow | temperature | True. Two characters are incorrect; one has been replaced and one is missing. |

________________________________________________________________________

How can you possibly guess the multiplicity of phrases that your varied student cohort will use to answer a question? Of course you can’t, but you can record everything and over time you will build a bank of student responses on which you can base your response matching. And gradually you might be surprised at how well your response matching copes.

Before we describe the response matching it’s worth stressing:

- The starting point is asking a question that you believe you will be able to mark accurately.

- How you phrase the question can have a significant impact.

- Pattern match works best when you are asking for a single explanation that you will mark as simply right or wrong. You will find that dealing with multiple parts in a question or apportioning partial marks is a much harder task that will quickly turn into a research project.

- A bank of marked responses from real students is an essential starting point for developing matching patterns. Consider using the question in a human marked essay to get your first bank of student responses. Alternatively first use the question in a deferred feedback test where marks are allocated once all responses are received.

- This question type, possibly more than any other, demands that you monitor student responses and amend your response matching as required. There will come a point at which you believe that the response matching is ‘good enough’ and might wonder ‘how many responses might I need to check?’ Unfortunately there is no easy answer to this question. Sometimes 200 responses will be sufficient; at other times you may have to go much further. If you do, bear in mind that:

- Asking students to construct their response, as opposed to choosing it from a list, asks more of the student i.e. it is worth your while to do this.

- Human markers following a mark scheme are fallible too. Ditto.

- Stemming is the accepted method of catering for different word endings e.g. mov* will cater for ‘moved’ and ‘moves’ and ‘moving’ etc.

- Students do not type responses that are deliberately wrong. Why should they? This is in contrast to academics who try to defeat the system. Consequently you will not find students entering the right response but preceding it with ‘it is not...’, and so you do not have to match deliberate errors.

- The proximity control enables you to link one word to another.

- It is often the case that marking obvious wrong responses early in your matching scheme will improve your overall accuracy.

- Dealing with responses that contain both right and wrong answers requires you to take a view: you will mark all such responses either right or wrong. The computer will carry out your instructions consistently, cf. asking a group of human markers to apply your mark scheme consistently.

- You should aim for an accuracy of >95% (in our trials our human markers were in the range 92%-96%). But, of course, aim as high as is reasonable given the usual diminishing returns on continuing efforts.

History

The underlying structure of the response matching described here was developed in the Computer Based Learning Unit of Leeds University in the 1970s and was incorporated into Leeds Author Language. The basic unit of the word, the matching options of allowAnyChars, allowAnyWords, allowAnyOrder and the word OR feature all date back to Leeds Author Language.

In 1976 the CALCHEM project, which was hosted by the Computer Based Learning Unit, the Chemistry Department at Leeds University and the Computer Centre of Sheffield Polytechnic (now Sheffield Hallam University), produced a portable version of Leeds Author Language.

A portable version for microcomputers was developed in 1982 by the Open University, the Midland Bank (as it then was; now Midland is part of HSBC) and Imperial College. The single and multiple character wildcards were added at this time.

The misspelling and proximity options, and word groups in OR lists, were added as part of the Open University COLMSCT projects on free-text response matching between 2006 and 2009.

References

Philip G. Butcher and Sally E. Jordan, A comparison of human and computer marking of short free-text student responses, Computers & Education 55 (2010) 489-499

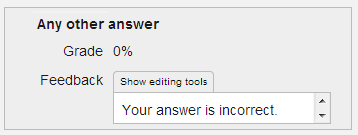

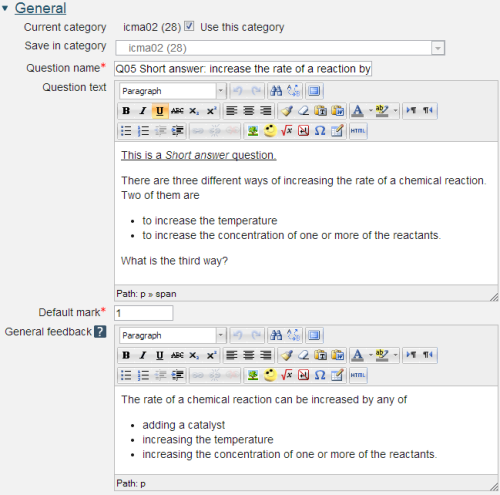

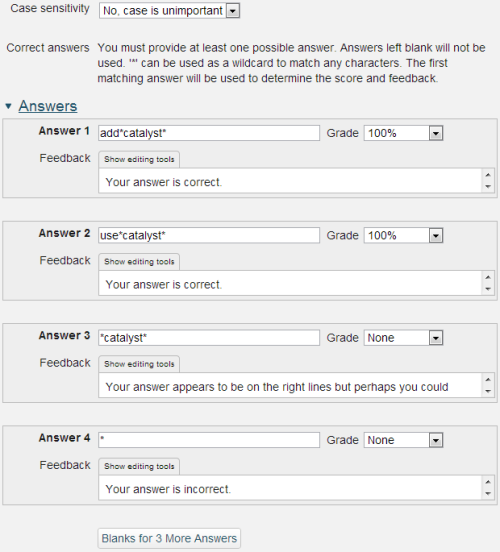

2.2.2 Short answer

The response matching in the Short answer question type is very limited. Consider using the Pattern match question type instead.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Placing the response box

The response box may be placed within the question rubric by including a sequence of underscores e.g. _____. At runtime the response box will replace the underscores and the length will be determined by the number of underscores. The minimum number of underscores required to trigger this action is 5. If no underscores are present the response box will be placed after the question and will be a full length box.

Default mark: Decide on how to score your questions and be consistent.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. We do not recommend that authors rely on using the machine generated 'Right answer' (from the iCMA definition form).

Case sensitivity: The author can specify whether or not the case of the response is important.

Answer: The Short answer question type will search for a sequence of consecutive characters within a response. The wildcard character '*' allows other words and characters, including space, to be present.

Beyond this wildcard there are no other response handling facilities. The Pattern match question type offers more sophisticated features. For example if you wished to look for ‘Tom’ and ‘Dick’ and ‘Harry’ in any order then Pattern match allows you to do this easily but Short answer requires you to specify all possible sequences.

The wildcard '*' on its own in the 'answer' field will match 'any other answer'.
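The Python sketch below illustrates why any-order matching is awkward in Short answer. It assumes that '*' matches any run of characters, that the rest of the answer must match literally, and that the whole response must match. It then generates one answer per ordering of the words. Three words need 3! = 6 answers, and four words would need 24. The function names are hypothetical.

```python
import re
from itertools import permutations
from typing import List


def short_answer_matches(answer: str, response: str, case_sensitive: bool = False) -> bool:
    """'*' matches anything (including spaces); everything else must match literally."""
    regex = ".*".join(re.escape(part) for part in answer.split("*"))
    flags = 0 if case_sensitive else re.IGNORECASE
    return re.fullmatch(regex, response, flags) is not None


def any_order_answers(words: List[str]) -> List[str]:
    """Every ordering of the words, each written as a Short answer pattern."""
    return ["*" + "*".join(p) + "*" for p in permutations(words)]


answers = any_order_answers(["Tom", "Dick", "Harry"])
print(len(answers))
print(any(short_answer_matches(a, "harry, dick and tom") for a in answers))
```

In this sketch a lone `*` becomes the regular expression `.*`, which matches any response. This is the 'any other answer' behaviour described above.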

Feedback: Use this to give feedback to the responses that are matched by the current response match.

Improving your response matching

Do remember that Moodle keeps all the responses that students enter and as such it is straightforward to gauge the accuracy of your response matching. If you are writing this type of question you should allow time to modify your response matching in the light of actual student responses.

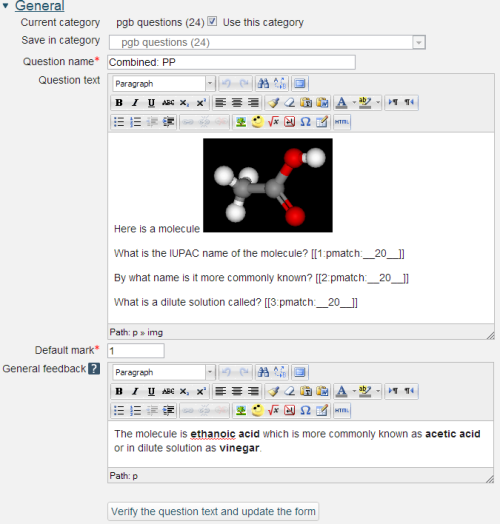

2.2.3 Combined

The Combined question enables multiple text responses to be matched.

The full capabilities of the Combined question are described in section 2.4.1.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Pattern match response fields

The response fields have the form

- [[<identifier>:<type>:<size>]]

<identifier> may be alphanumeric, up to 8 characters. In our example we’ve just used numbers. <type> is 'pmatch'. <size> should be one of:

- __20__, where the number is the width of the displayed input box in characters.

- _____ (i.e. without a number), which provides a box equivalent in length to the number of underscores.
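The field syntax is regular enough to check mechanically. The Python sketch below assumes each field is written as `[[identifier:type:size]]`, for example `[[1:pmatch:__20__]]`. Here size is either `__n__` or a plain run of underscores. The identifier check follows the rule stated above (alphanumeric, at most 8 characters). Moodle's own validation, run by the 'Verify the question text and update the form' button, may check more. The names `Field` and `parse_fields` are hypothetical.

```python
import re
from typing import List, NamedTuple

# [[identifier:type:__n__]] or [[identifier:type:_____]]
FIELD = re.compile(r"\[\[([^:\]]+):([^:\]]+):(?:__(\d+)__|(_+))\]\]")


class Field(NamedTuple):
    identifier: str
    qtype: str
    width: int


def parse_fields(question_text: str) -> List[Field]:
    fields = []
    for m in FIELD.finditer(question_text):
        identifier, qtype = m.group(1), m.group(2)
        if not (identifier.isalnum() and len(identifier) <= 8):
            raise ValueError(f"identifier {identifier!r} must be alphanumeric, at most 8 characters")
        # __20__ gives an explicit width; otherwise count the underscores.
        width = int(m.group(3)) if m.group(3) else len(m.group(4))
        fields.append(Field(identifier, qtype, width))
    return fields


print(parse_fields("The SI unit of current is the [[1:pmatch:__20__]]."))
```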

After adding new input fields, or to remove unwanted empty ones, click the ‘Verify the question text and update the form’ button. At this point your question text will be validated.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. There is no system generated 'Right answer' (from the iCMA definition form) for Combined questions.

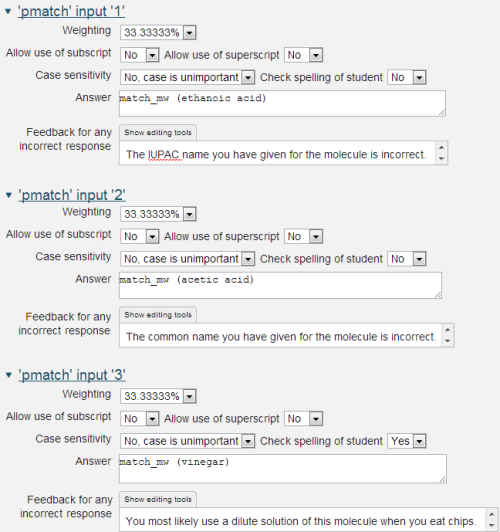

The Combined question uses a restricted form of the Pattern match response matching.

Weighting: Different responses can have different percentages of the total mark. The weightings must add up to 100%.
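The weighting arithmetic is sketched below in Python. It assumes that each field is simply right or wrong, and it ignores multiple-try penalties. The function name `combined_mark` is hypothetical.

```python
from typing import Dict


def combined_mark(weights: Dict[str, float], correct: Dict[str, bool]) -> float:
    """Fraction of the question's mark earned, given per-field weights in percent."""
    if abs(sum(weights.values()) - 100.0) > 1e-9:
        raise ValueError("weightings must add up to 100%")
    return sum(w for field, w in weights.items() if correct.get(field, False)) / 100.0


print(combined_mark({"1": 50, "2": 25, "3": 25}, {"1": True, "2": False, "3": True}))
```

Here fields 1 and 3 are right, so the response earns 50% + 25% of the mark.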

Allow use of subscript/superscript: for students to include powers or chemical formulae.

Case sensitivity: Yes or no.

Check spelling of student: Yes or no.

Answer: Use Pattern match syntax.

Feedback for any incorrect response: Where some fields are answered correctly and others incorrectly, the intention is that the feedback associated with each field is used to say what is wrong. Correct answers can be counted up using the option to 'Show the number of correct responses' in the Combined feedback and Hints fields.

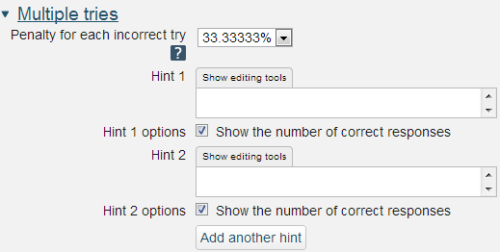

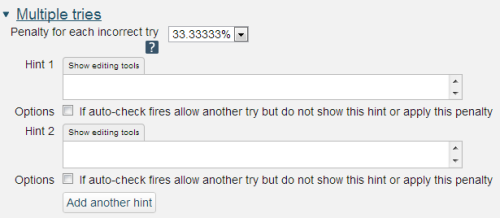

If you are configuring your question to run in 'Interactive with multiple tries' mode such that students can answer questions one by one you can also provide hints and second (and third) tries by entering appropriate feedback into the boxes provided in the 'Multiple tries' section of the question definition form.

To use this section of the question form please ensure that on the iCMA definition form you have chosen 'How questions behave' = 'Interactive with multiple tries'.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

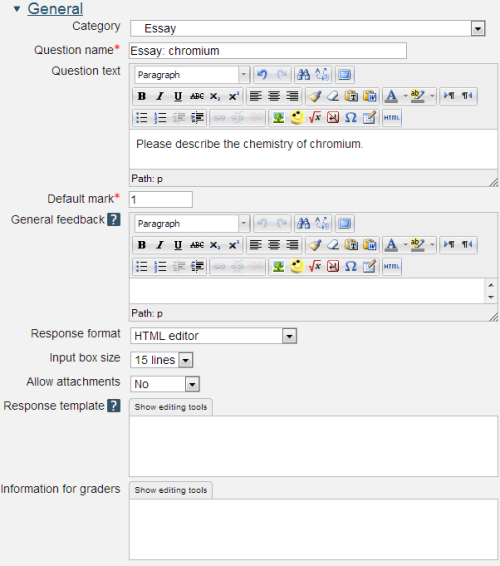

2.2.4 Essay

Unlike other questions in the iCMA, Essay questions are not marked automatically. If an Essay question is to be marked, this has to be undertaken by an appointed marker who is given appropriate permission by Exams and Assessments; the Tutor role in OU Moodle does not have permission to mark questions.

We recommend that essay questions are shown one per page.

Question text: You may use the full functionality of the editor to state the question.

General feedback: Perhaps use this for a model answer.

Response format: With or without the normal on-screen editor.

Input box size

Allow attachments: Whether students may attach files to their response and, if so, how many.

Response template: Any text entered here will be displayed in the response input box when a new attempt at the question starts.

Information for graders: The mark scheme can be placed here.

Essay questions and iCMAs running with 'interactive with multiple tries' behaviour

Essay questions must be marked manually and as such cannot exhibit 'interactive with multiple tries' behaviour. If an essay question is included in an iCMA that is running with 'interactive with multiple tries' behaviour the essay question will be shown without a 'Check' button and will function with 'deferred feedback' behaviour.

Essay questions that are designed to capture student comments and are not marked

Essay questions are useful for collecting student feedback during an iCMA, and when they are used for this purpose module teams do not wish the essay to be marked or to interfere with the marking process. Please indicate this to the system by giving such questions a 'Default mark' of 0. Essay questions with a 'Default mark' of 0 are set to 'Complete' when the iCMA is submitted, cf. essay questions with a non-zero 'Default mark', which require human marking before the full results for the iCMA appear.

2.3 Selection questions

Questions in this category provide the correct answers together with distractors and ask the student to choose or place their choices.

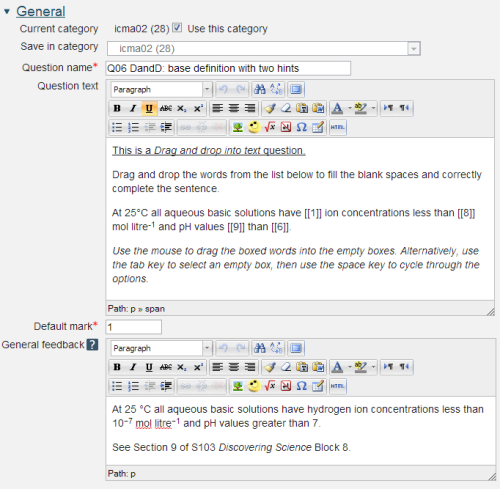

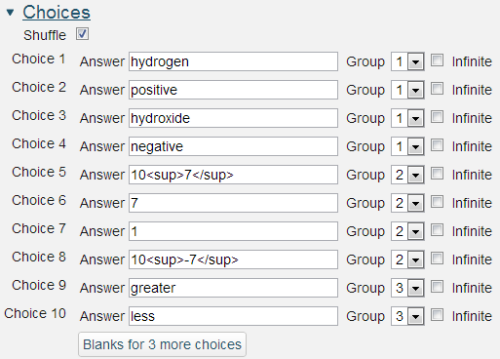

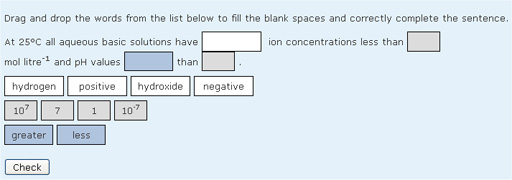

2.3.1 Drag and drop into text

Drag and drop questions for completing texts are a popular form of 'selection' question consisting of words which can be dragged from a list and dropped into pre-defined gaps in the text.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

The Question text is written with two sets of square brackets '[[n]]' indicating the positioning of gaps and a number 'n' inside the brackets indicating the correct choice from a list which follows the question.

It is allowed to place the drop zones in lists and tables.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. We do not recommend that authors rely on using the machine generated 'Right answer' (from the iCMA definition form).

Limited formatting of text using , , , , and is allowed.

Choices that are within the same Group are colour coded and may only be dropped in a gap with the corresponding colour.

Choices that are marked as 'infinite' may be used in multiple locations.

It is not possible to have drag boxes containing multiple lines. If you want to drag long sentences - don't. Give each a label and drag the label.

The resulting question when run in 'interactive with multiple tries' style looks as follows:

Accessibility

Drag and drop questions are keyboard accessible. Use the

Scoring

All gaps are weighted identically; in the above example each gap is worth 25% of the marks. Only gaps that are filled correctly gain marks. There is no negative marking of gaps that are filled incorrectly.
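Equal weighting with no negative marking makes the score a simple count. The Python sketch below reads the correct choice for each gap from the `[[n]]` markers and scores a single try. The function names, and the representation of a response as a mapping from gap position to the dropped choice, are assumptions made for illustration.

```python
import re
from typing import Dict, List

# A gap is written [[n]], where n is the number of the correct choice.
GAP = re.compile(r"\[\[(\d+)\]\]")


def gap_answers(question_text: str) -> List[int]:
    """Correct choice number for each gap, in the order the gaps appear."""
    return [int(n) for n in GAP.findall(question_text)]


def score(question_text: str, placed: Dict[int, int]) -> float:
    """Fraction of the mark earned; placed maps gap position (0-based) to the dropped choice."""
    answers = gap_answers(question_text)
    if not answers:
        raise ValueError("the question text contains no [[n]] gaps")
    right = sum(1 for i, answer in enumerate(answers) if placed.get(i) == answer)
    # Every gap carries the same weight and wrong or empty gaps simply score nothing.
    return right / len(answers)


text = "The [[1]] sat on the [[2]] while the [[3]] ate the [[4]]."
print(score(text, {0: 1, 1: 2, 2: 4}))
```

With four gaps, each is worth 25%. In this example two are correct, one is wrong and one is empty, so the response scores half the mark.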

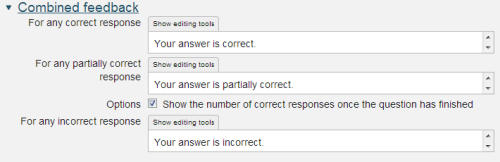

The display of Combined feedback is governed by the Specific feedback setting on the iCMA definition form.

In interactive with multiple tries mode Combined feedback is shown after every try as well as when the question completes.

The option ‘Show the number of correct responses’ is over-ridden in interactive with multiple tries mode by the same settings in the ‘Settings for multiple tries’ section of the editing form.

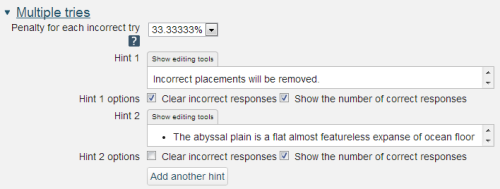

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

If the question is used in 'interactive with multiple tries' behaviour the marking is modified as follows:

- The mark is reduced for each try by the penalty factor.

- Allowance is made for when a correct choice is first chosen providing it remains chosen in subsequent tries.
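The two rules above can be read as follows. Each gap is credited according to the try from which it was correct and stayed correct, less one penalty per earlier try. The Python sketch below implements that reading. It is our interpretation for illustration only, not Moodle's implementation, and the function name is hypothetical.

```python
from typing import List


def multi_try_mark(tries: List[List[int]], correct: List[int], penalty: float) -> float:
    """One reading of the multiple-tries rule for drag and drop questions.

    tries[k][g] is the choice in gap g at try k + 1; the last entry is the final try.
    A gap earns 1 - penalty * (t - 1), where t is the try from which it was
    correct and stayed correct to the end. Other gaps earn nothing.
    """
    total = 0.0
    for gap, answer in enumerate(correct):
        settled = None
        # Walk back from the final try while the gap is still correct.
        for k in range(len(tries) - 1, -1, -1):
            if tries[k][gap] != answer:
                break
            settled = k  # 0-based, so this equals t - 1
        if settled is not None:
            total += max(0.0, 1.0 - penalty * settled)
    return total / len(correct)


print(multi_try_mark([[1, 3], [1, 2]], [1, 2], 0.5))
```

In the example, the first gap was right from the first try, so it keeps its full credit. The second gap was only corrected at the second try, so it loses one penalty.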

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct.

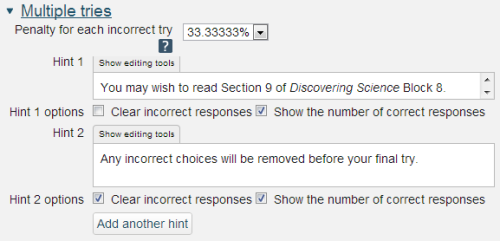

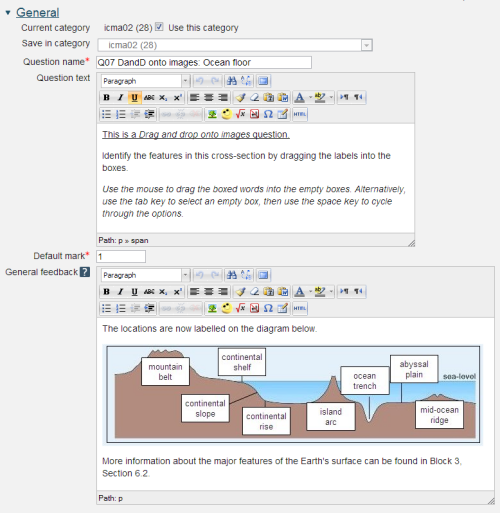

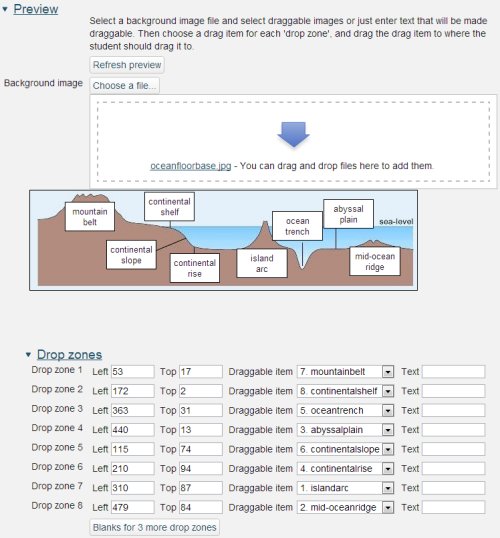

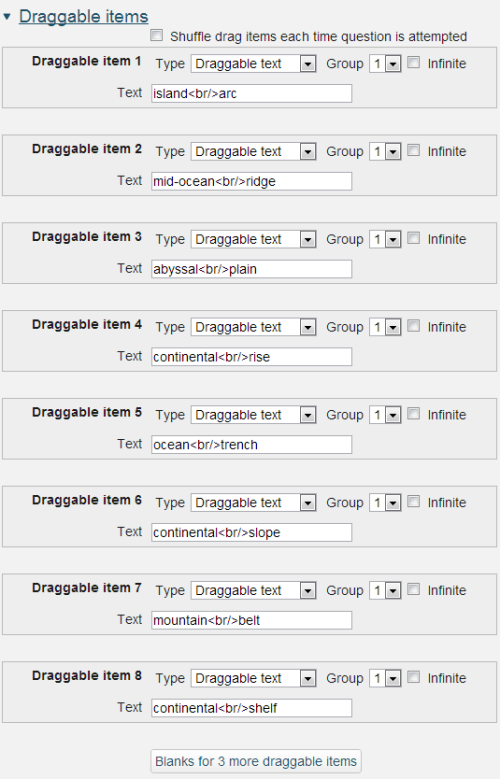

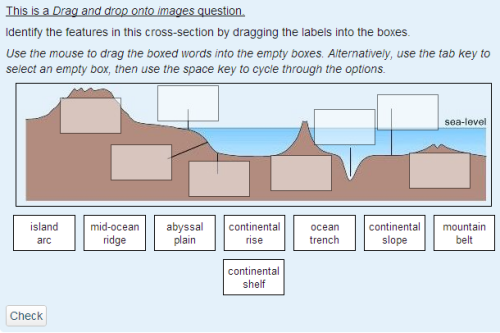

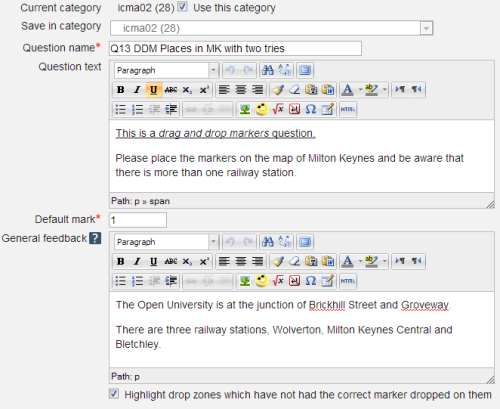

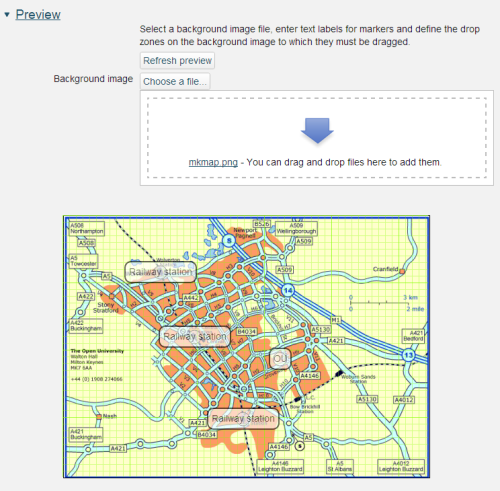

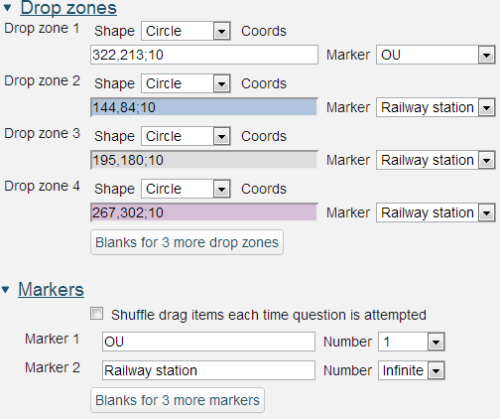

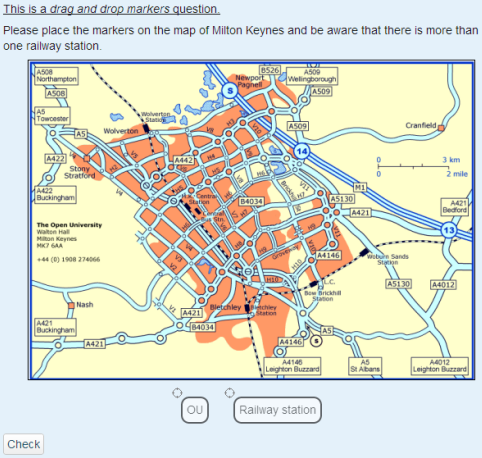

2.3.2 Drag and drop onto image

Drag and drop questions for labelling images are a popular form of 'selection' question consisting of images or words which can be dragged from a list and dropped into pre-defined gaps on the base image.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

General feedback: We recommend the correctly labelled image be included in this box. Students who did not answer completely correctly can then compare and contrast to see where they made an error. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect.

Start by uploading a background image. There is a maximum size of 600 x 400 pixels for this image. Please note that we recommend a maximum image width of 570 pixels so as to fit all parts of an iCMA into a 1024-pixel-wide window. When you have done this it will appear in the preview area.

Next create the drag items. These may be text items as shown here, or images. There is a maximum import size for images of 150 x 100 and images larger than this will be scaled on import. If you choose to use draggable images please ensure that you add a short text descriptor. For each item having entered the text please press Enter to complete the addition of the draggable item and register it in the drop-down lists of Drop zones.
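Scaling an image to fit a 150 x 100 box while keeping its shape is a small calculation. The Python sketch below assumes that the aspect ratio is preserved and that images already within the limits are left alone. We have not confirmed that Moodle's import scaling works exactly this way. The function name `fit_within` is hypothetical.

```python
from typing import Tuple


def fit_within(width: int, height: int, max_w: int = 150, max_h: int = 100) -> Tuple[int, int]:
    """Scale (width, height) down to fit inside max_w x max_h, keeping the aspect ratio."""
    scale = min(max_w / width, max_h / height, 1.0)  # never enlarge a small image
    return round(width * scale), round(height * scale)


print(fit_within(300, 100))
print(fit_within(120, 240))
```

The same calculation with `max_w=570` shows how much a wide background image would shrink to meet the recommended width.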

Now place the drag items onto the background image by completing the Drop zones section above. Once you have established the draggable item for a drop zone the item will appear beneath the background image. At this point you may position the item either by dragging it or by completing the Left and Top boxes for the item.

The drag item will be placed at the top and left coordinates as measured in pixels from the top left of the background image.

Within draggable text items limited formatting of text using , , , , and is allowed.

is also allowed.

Choices that are within the same Group are colour coded and may only be dropped on a drop zone with the corresponding colour. Choices that are marked as 'infinite' may be used in multiple locations.

The resulting question when run in 'interactive with multiple tries' style looks as follows:

Accessibility

Drag and drop questions are keyboard accessible. Use the

Scoring

All drop zones are weighted identically; in the above example each drop zone is worth 12.5% of the marks. Only drop zones that are filled correctly gain marks. There is no negative marking of drop zones that are filled incorrectly.

Whether or not Combined feedback is shown to students is governed by the Specific feedback setting on the iCMA definition form.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

If the question is used in 'interactive with multiple tries' style the marking is modified as follows:

- The mark is reduced for each try by the penalty factor.

- Allowance is made for when a correct choice is first chosen providing it remains chosen in subsequent tries.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct.

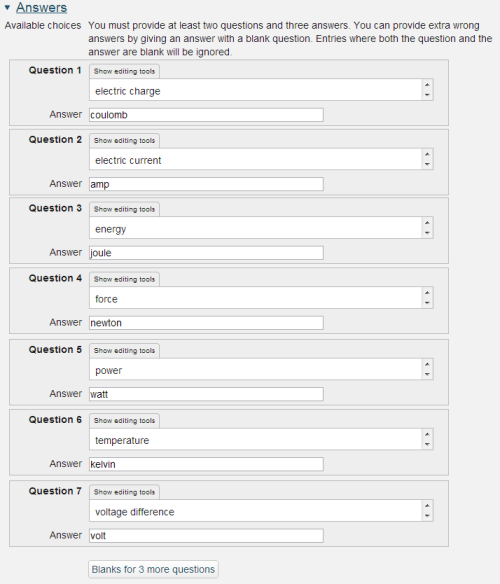

2.3.3 Matching

Matching questions are created by the author supplying several questions and their correct answers. The computer lays out the questions and shuffles the answers in a drop-down list. Matching questions raise the same issues as multiple choice questions: all the answers are supplied, so students are 'matching' answers rather than providing them. If there are exactly as many answers as questions, students do not need to know all the correct answers to obtain full marks. If there is one answer they do not know, it is clearly the one left over after all the other questions have been answered. However, you can add extra answers as distractors to make the question more challenging.

Please note that once a Matching question has been used by students you should not edit it. This is because of the way that the components of the question are held in the database.

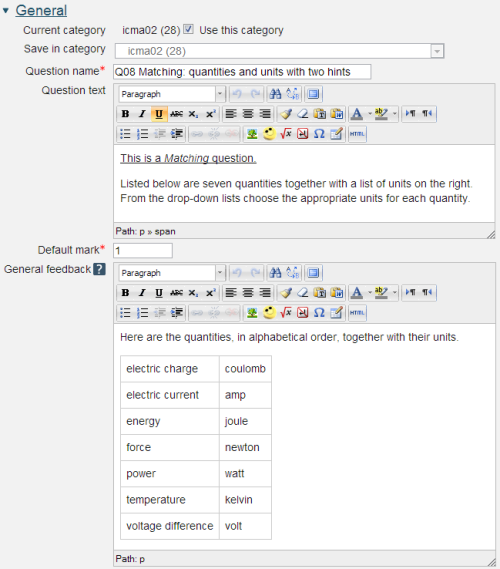

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

General feedback: We recommend the correct answer be included in this box. Students who did not answer completely correctly can then compare and contrast to see where they made an error. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect.

The answer fields in Matching questions can only contain plain text.

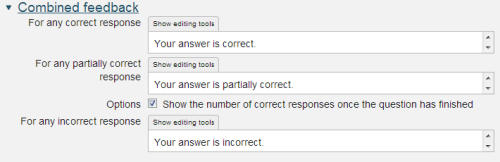

Whether or not Combined feedback is shown to students is governed by the Specific feedback setting on the iCMA definition form.

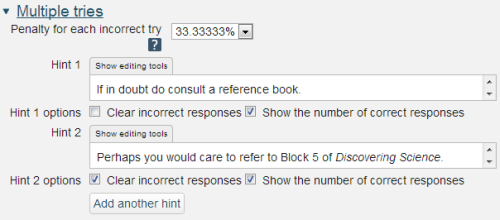

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct.

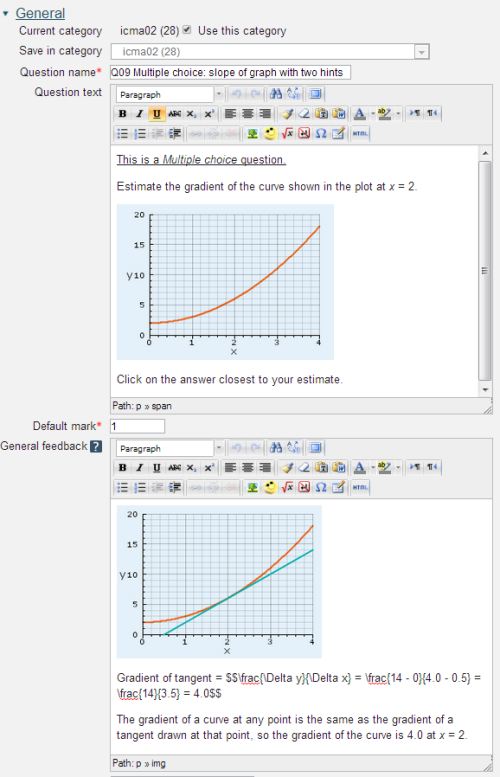

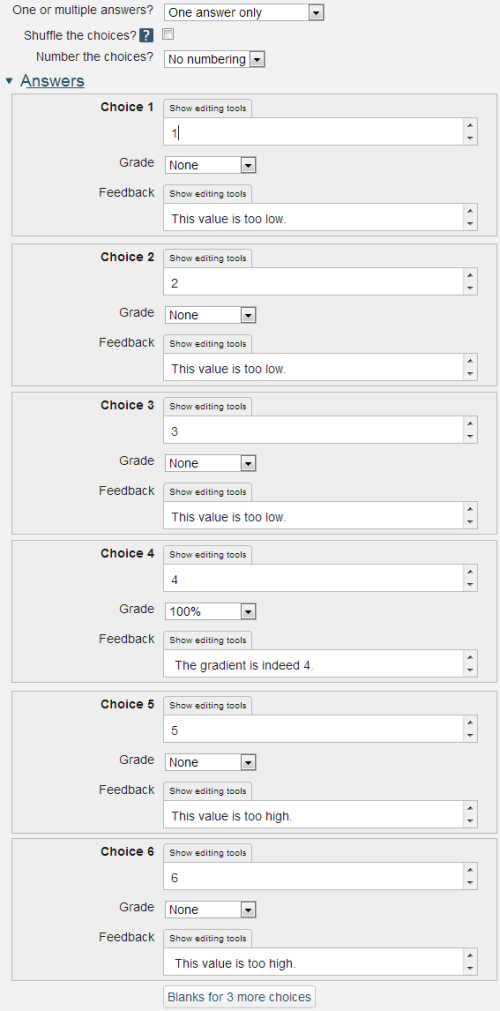

2.3.4 Multiple choice

The Multiple Choice question type is the most common form of question used in the OU's paper-based CMA system, which was used by large numbers of modules in the 1970s, 1980s and 1990s and remains in use today.

Typically the student is given a question and a range of possible answers and asked to choose one. The skill in writing multiple choice questions lies in writing questions that expose misunderstandings of the material under test, so that suitable distractors can be written. Responses to the distractors can then be used to provide remedial feedback that helps students overcome their misunderstanding.

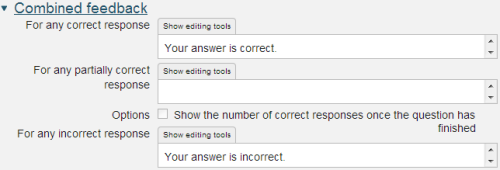

A problem with all forms of multiple choice question is that students can guess the answer from the range of options provided. Various penalty mechanisms have been suggested to discourage simple guessing. In its current form Moodle allows the author to attach negative marks to options, though most users at the OU do not use this facility. They instead score the correct choice at 100% and incorrect choices at 0%.
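The reason a negative mark discourages guessing becomes clear from the expected mark of a student who guesses at random. This Python sketch assumes that a single correct option is worth 100% and that every wrong option carries the same mark. The four options and the -1/3 wrong mark are illustrative values, not an OU recommendation.

```python
from fractions import Fraction


def expected_guess_mark(num_options: int, wrong_mark: Fraction) -> Fraction:
    """Expected mark for a student who picks one option at random.

    Assumes exactly one option is correct (scoring 1, i.e. 100%) and every
    other option scores `wrong_mark` (0 means no negative marking).
    """
    p_right = Fraction(1, num_options)
    return p_right * 1 + (1 - p_right) * wrong_mark


print(expected_guess_mark(4, Fraction(0)))      # prints 1/4 (no negative marking)
print(expected_guess_mark(4, Fraction(-1, 3)))  # prints 0 (wrong answers score -33.3%)
```

With four options and no negative marking, a pure guesser averages 25% of the marks. With k options, a wrong-answer mark of -1/(k-1) brings the expected mark for random guessing down to zero.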

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

General feedback: We recommend the correct answer be included in this box. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect.

One or multiple answers? The Multiple choice question can also be used to create a Multiple response question that requires the student to choose several options. However, marking these questions can become complex. Suppose, for example, that a correct response requires options (a), (b) and (c) to be chosen. Each could be allocated 33.3% of the marks, but what marks should be allocated to options (d), (e) and (f)? Should they score zero or a negative value and, if negative, at what level: -33.3% or -100%? This quickly becomes difficult. We therefore strongly recommend that authors wishing to create Multiple Response questions use the OU Multiple Response question type, which incorporates the mark schemes used in the original paper-based CMA system.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct. This should not be used for questions that have only one correct choice.

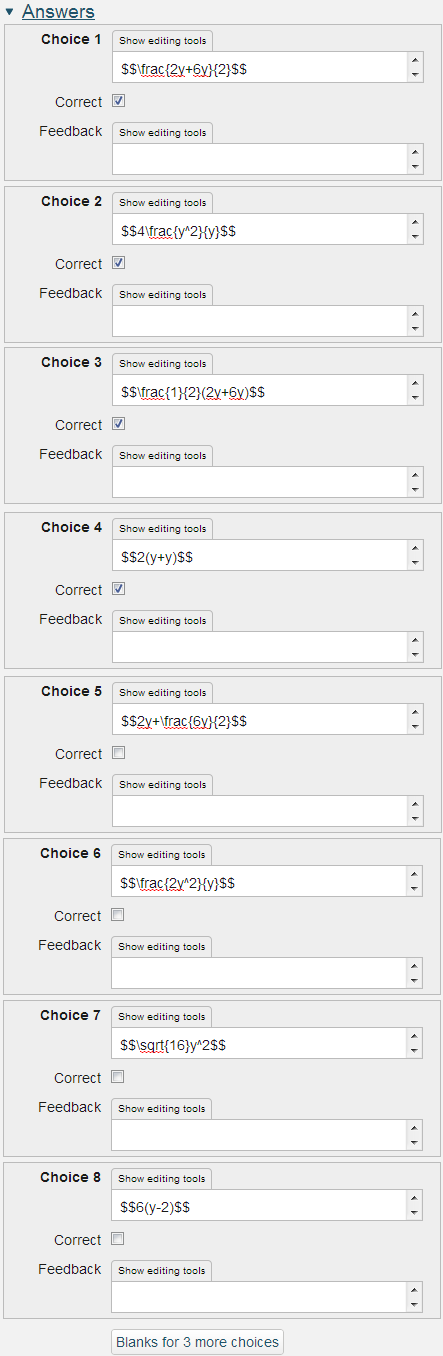

2.3.5 OU multiple response

Along with the Multiple Choice question type, the Multiple Response question type is the most common form of question used in the OU's paper-based CMA system. The Multiple Response question type requires the student to choose multiple options.

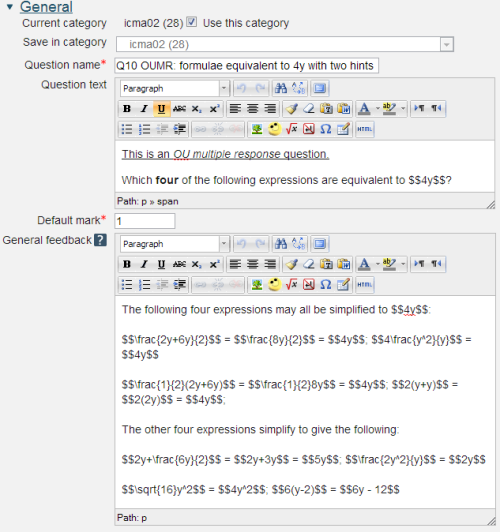

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

General feedback: We recommend the correct answer be included in this box. Students who did not answer completely correctly can then compare and contrast to see where they made an error. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect.

The OU Multiple Response question type simplifies the authoring process by incorporating the marking scheme developed for the OU paper-based CMA system. In this marking scheme:

- Choices are marked as correct or incorrect. If a question has n correct choices each correct choice is given a mark of (Question grade)/n.

- If a student selects more than n choices the marks are based on the worst n choices.

- It follows that the number of incorrect choices should equal or exceed the number of correct choices (otherwise a student choosing all choices will receive marks).

Example: A question has five choices of which only 'a' and 'b' are correct. How student responses are marked:

- a b: 100% correct

- a c: 50% correct

- a b c: 50% correct - only the two worst choices are marked

- a b c d: 0% correct - only the two worst choices are marked
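The worst-n rule and the four example responses above can be reproduced with a short Python sketch. The function name and the use of single letters as choice labels are illustrative assumptions. The sketch covers only the single-try case, not the 'interactive with multiple tries' adjustments.

```python
from fractions import Fraction


def ou_multiresponse_mark(selected: set[str], correct: set[str]) -> Fraction:
    """Fraction of the question grade under the worst-n marking scheme."""
    n = len(correct)
    # Each selected correct choice is worth 1/n; each selected incorrect choice is worth 0.
    values = sorted(Fraction(1, n) if choice in correct else Fraction(0) for choice in selected)
    # Only the worst (lowest-valued) n selections count.
    return sum(values[:n], Fraction(0))


correct = {"a", "b"}
for response in ("ab", "ac", "abc", "abcd"):
    print(response, ou_multiresponse_mark(set(response), correct))
```

The same function shows why there should be at least as many incorrect choices as correct ones. Suppose the correct choices are {"a", "b", "c"} and "d" is the only incorrect choice. Selecting all four choices then scores 2/3 rather than zero.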

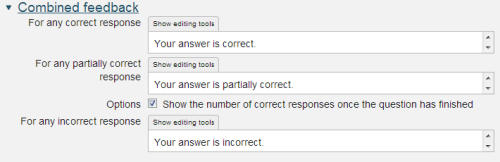

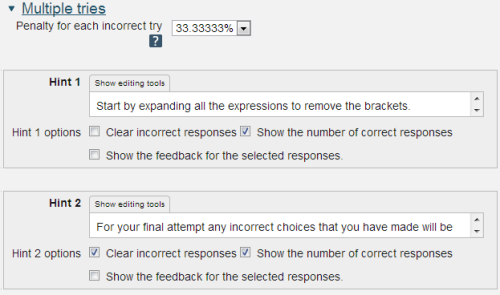

Whether or not Combined feedback is shown to students is governed by the Specific feedback setting on the iCMA definition form.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

If the question is used in 'interactive with multiple tries' style the marking is modified as follows:

- At each try only the worst n choices are scored.

- The mark is reduced for each try by the penalty factor.

- Allowance is made for when a correct response is first chosen providing it remains chosen in subsequent tries.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct.

Show the feedback for the selected responses: This setting controls the feedback on individual choices; however, this feedback is suppressed if the student selects too many choices.

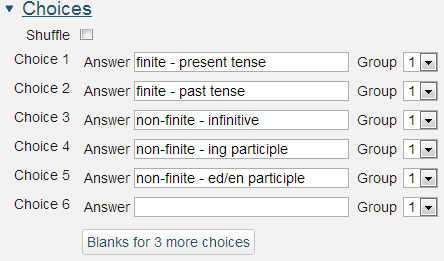

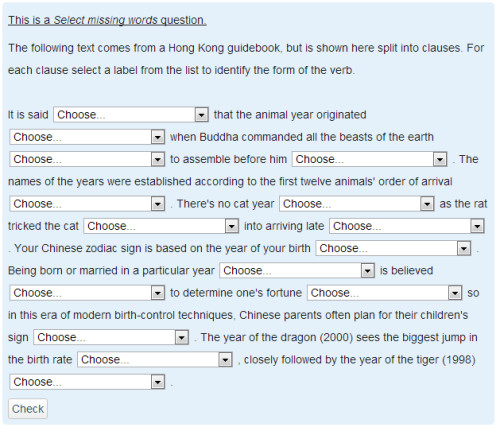

2.3.6 Select missing words

The Select missing words question type is very similar to the Drag and drop words into text question type. It is useful for questions where there is not enough on-screen space for both the question and the drag items; if the drag items are off-screen it’s rather hard to drag them anywhere.

This question type will therefore most likely be used when the question author has a large text that they wish the student to label or complete by selecting missing words from drop-down lists. Some authors may, of course, simply prefer it to the drag and drop alternative.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

The Question text is written with double square brackets '[[n]]' marking the position of each gap. The number 'n' inside the brackets indicates the correct choice from the list of choices that follows the question.
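To make the [[n]] notation concrete, this Python sketch reads the gaps out of a question text and fills them in. The example sentence, the choice list and the function names are hypothetical. The sketch assumes the choices are numbered from 1 in the order they are listed on the form, and it does not model Groups.

```python
import re

# A gap is written as [[n]], where n is the 1-based number of the correct choice.
GAP = re.compile(r"\[\[(\d+)\]\]")


def correct_words(question_text: str, choices: list[str]) -> list[str]:
    """Return the correct choice for each gap, in the order the gaps appear."""
    return [choices[int(m.group(1)) - 1] for m in GAP.finditer(question_text)]


def model_answer(question_text: str, choices: list[str]) -> str:
    """Return the question text with every gap filled in with its correct choice."""
    return GAP.sub(lambda m: choices[int(m.group(1)) - 1], question_text)


text = "The quick brown [[2]] jumps over the lazy [[4]]."
choices = ["cow", "fox", "cat", "dog"]
print(correct_words(text, choices))  # ['fox', 'dog']
print(model_answer(text, choices))   # The quick brown fox jumps over the lazy dog.
```

A filled-in text like this is one convenient way to prepare the correct answer that we recommend including in the General feedback box.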

General feedback: We recommend the correct answer be included in this box. Students who did not answer completely correctly can then compare and contrast to see where they made an error. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect.

Answer: Unlike the Drag and drop words into text question type, the Select missing words question type allows no formatting of the words to be selected.

Group: Choices that are within the same Group appear in the same drop-down lists.

The resulting question when run in 'interactive with multiple tries' style looks as follows:

Accessibility

Missing words questions are keyboard accessible. Use the

Scoring

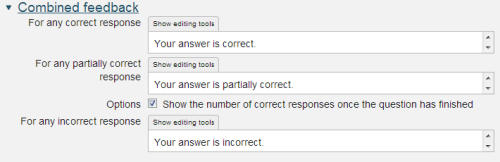

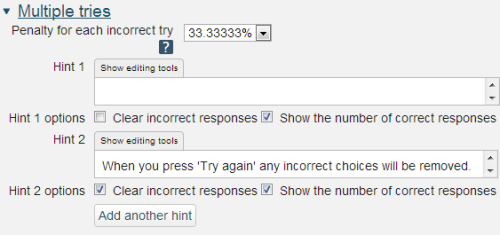

All gaps are weighted identically. Only gaps that are filled correctly gain marks. There is no negative marking of gaps that are filled incorrectly.

Whether or not Combined feedback is shown to students is governed by the Specific feedback setting on the iCMA definition form.

Penalty for each incorrect try: The available mark is reduced by the penalty for second and subsequent tries. In the example above a correct answer at the second try will score 0.6666667 of the available marks and a correct answer at the third try will score 0.3333334 of the available marks.

If the question is used in 'interactive with multiple tries' style the marking is modified as follows:

- The mark is reduced for each try by the penalty factor.

- Allowance is made for when a correct choice is first chosen providing it remains chosen in subsequent tries.

Hint: You can complete as many of these boxes as you wish. If you wish to give the student three tries at a question you will need to provide two hints. At runtime when the hints are exhausted the question will finish and the student will be given the general feedback and the question score will be calculated.

Clear incorrect responses: When ‘Try again’ is clicked incorrect choices are cleared.

Show the number of correct responses: Include in the feedback a statement of how many choices are correct.

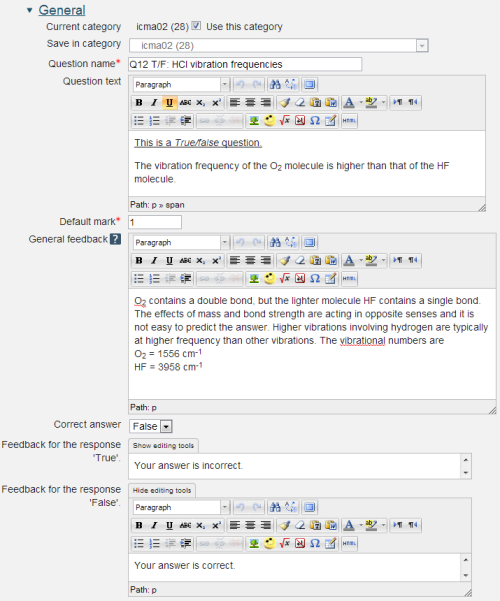

2.3.7 True/false

The True/false question is the simplest question type. There is one right answer, worth 100%, and one wrong answer, worth 'none'.

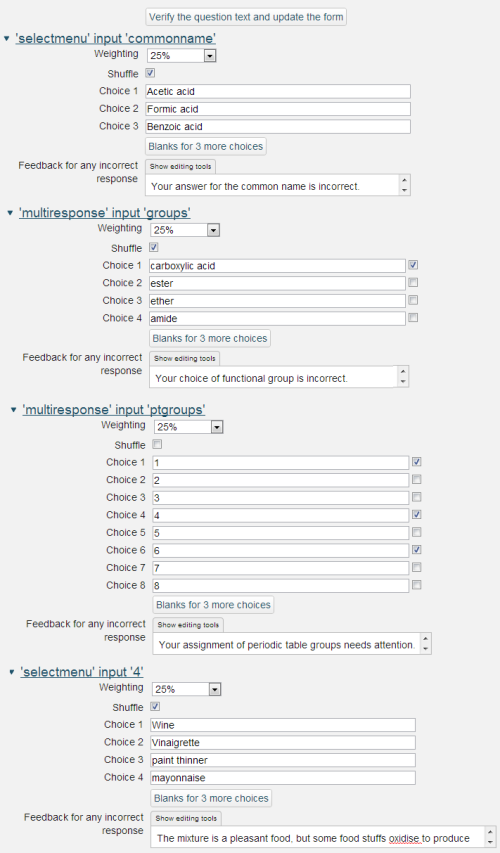

2.3.8 Combined

The Combined question enables multiple OU multiple response and Select missing words responses to be matched.

The full capabilities of the Combined question are described in section 2.4.1.

Question name: A descriptive name is sensible. This name will not be shown to students.

Question text: You may use the full functionality of the editor to state the question.

Response fields

The response fields have the form [[<identifier>:<question type>:<options>]].

<identifier> may be alphanumeric, up to 8 characters. <question type> here is multiresponse or selectmenu. <options> depend upon the type of response field.

Options

Multiresponse fields can be displayed vertically or horizontally:

- v for vertical display. This is the default.

- h for horizontal display. If the choices are too long for one line, they wrap between choices.

Selectmenu must be followed by the number of the correct choice.

Note that several response fields can share the same menu of choices, e.g. The quick brown [[4:selectmenu:2]] jumps over the lazy [[4:selectmenu:4]].

Here the choices are cow, fox, cat, dog, so the correct answers are choice 2 (fox) and choice 4 (dog).
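The field syntax can be checked mechanically. This Python sketch is not Moodle's own validator. It extracts fields of the form [[identifier:type:options]] and applies the option rules above. It handles only the multiresponse and selectmenu types covered in this section. It treats the options as optional for multiresponse, on the assumption that omitting them gives the default v. It also ignores fields whose identifier is longer than 8 characters. The function names are hypothetical.

```python
import re
from typing import Optional

# [[identifier:type:options]] for the two field types covered in this section.
FIELD = re.compile(
    r"\[\[([A-Za-z0-9]{1,8}):(multiresponse|selectmenu)(?::([^\]]*))?\]\]"
)


def parse_fields(question_text: str) -> list[tuple[str, str, Optional[str]]]:
    """Return (identifier, type, options) for each response field found."""
    return [m.groups() for m in FIELD.finditer(question_text)]


def options_ok(field_type: str, options: Optional[str]) -> bool:
    """Check a field's options against the rules for its type."""
    if field_type == "selectmenu":
        # Must be followed by the number of the correct choice.
        return options is not None and options.isdigit()
    # multiresponse: v (vertical, the default) or h (horizontal); may be omitted.
    return options in (None, "v", "h")


text = "The quick brown [[4:selectmenu:2]] jumps over the lazy [[4:selectmenu:4]]."
for ident, ftype, opts in parse_fields(text):
    print(ident, ftype, opts, options_ok(ftype, opts))
```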

After adding new input fields, or to remove unwanted empty input fields, click the ‘Verify the question text and update the form’ button. At this point your question text will be validated.

General feedback: We recommend that all questions should have this box completed with the correct answer and a fully worked explanation. The contents of this box will be shown to all students irrespective of whether their response was correct or incorrect. There is no system generated 'Right answer' (from the iCMA definition form) for Combined questions.

These are restricted forms of the Selectmenu and OU multiple response matching fields.

Where some fields are answered correctly and others incorrectly, the intention is that the feedback associated with each field is used to say what is wrong. Correct answers can be counted using the option to 'Show the number of correct responses' in the Combined feedback and Hints fields.

2.4 Multiple inputs

There are two question types that accept responses to multiple inputs.

Combined

The Combined question enables multiple Numeric, Pattern match, OU multiple response and Select missing words responses to be matched.

STACK

The STACK question supports complex matching on multiple response fields.

The STACK question type is described in section 2.6.

2.4.1 Combined

The Combined question incorporates features of four existing question types into one composite question. The four question types that are included are:

- Numeric (n.b. based on Variable numeric and not Numerical)

- Pattern match

- OU multiple response

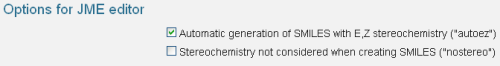

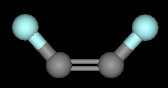

- Select missing words.