3 The brain's solution: the machinery of language

3.1 Speech perception

Now that we have examined in some detail the processes involved in understanding a sentence, we turn to the question of how the brain achieves this task. We begin with the initial capture and analysis of the speech signal.

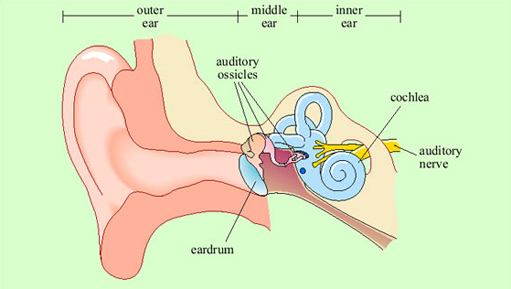

Vibrations in the air are channelled by the structure of the external ear into the ear canal (Figure 10). At the inner end of the ear canal they meet the eardrum, a taut membrane stretched across the canal that separates it from the middle ear. The vibrations in the air set up sympathetic vibrations in the eardrum. Inside the middle ear is a chain of three tiny bones, the auditory ossicles, which are set vibrating in turn by the eardrum. The innermost ossicle abuts a fluid-filled coiled structure in the inner ear called the cochlea. Vibration of the ossicles is transferred into the fluid of the cochlea and, in particular, into a thin membrane that runs along its length, the basilar membrane. Adjacent to the basilar membrane is a layer of small receptor cells, each bearing tiny cilia, or hairs; these receptors are accordingly known as hair cells (Figure 11). Movement of the basilar membrane moves the hairs, and this movement is converted into changes in electrical activity within the cell. Hair cells form synaptic connections with adjacent neurons, so electrical changes within them trigger action potentials in those neurons. These neurons join to form the auditory nerve, which relays the action potentials from the ear to the brain.

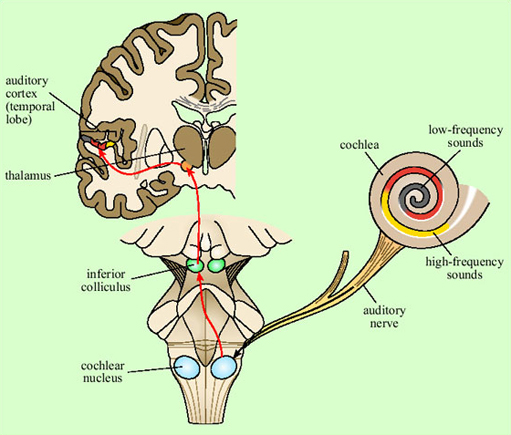

The pattern of vibration of the eardrum and ossicles simply mirrors the pattern of vibration in the air. It is in the cochlea that transformation of the signal begins. Because of the structure of the cochlea (the basilar membrane is narrow and stiff at its base, near the middle ear, and wider and more flexible towards the apex at the centre of the coil), high-frequency vibrations displace the membrane most near the base, and lower-frequency vibrations displace it further along. Thus hair cells at different positions respond to different frequencies of sound, by virtue of being adjacent to different sections of the basilar membrane.
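The place-to-frequency relationship can be made concrete with the Greenwood function, an empirical fit to the human cochlea that is not part of this text and is introduced here only as an illustration. The minimal Python sketch below assumes the commonly quoted human constants (A = 165.4 Hz, a = 2.1, k = 0.88), with position x expressed as a proportion of the basilar membrane's length measured from the apex (x = 0) to the base (x = 1); the function and constant names are hypothetical.

```python
import math

# Greenwood-function constants commonly quoted for the human cochlea
# (an assumption introduced for illustration, not taken from the text).
GREENWOOD_A = 165.4    # Hz
GREENWOOD_ALPHA = 2.1  # per unit of relative length
GREENWOOD_K = 0.88

def frequency_at(x):
    """Best (characteristic) frequency in Hz at relative position x from the apex (0..1)."""
    return GREENWOOD_A * (10 ** (GREENWOOD_ALPHA * x) - GREENWOOD_K)

def position_for(freq_hz):
    """Inverse mapping: relative position from the apex that responds best to freq_hz."""
    return math.log10(freq_hz / GREENWOOD_A + GREENWOOD_K) / GREENWOOD_ALPHA

# Lower frequencies map nearer the apex (smaller x), higher ones nearer the base.
for f in (250, 1000, 4000):
    print(f, "Hz ->", round(position_for(f), 3))
```

With these constants the map runs from roughly 20 Hz at the apex to roughly 20 kHz at the base, and `position_for(4000)` returns a larger value (nearer the base) than `position_for(250)`, matching the high-frequencies-at-the-base arrangement described above.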

Two crucial things have therefore happened at the cochlear stage. First, sounds of different frequencies have been mapped onto different places on the basilar membrane, an arrangement called tonotopic organisation. Second, any sound that consists of acoustic energy at several different frequencies has been broken down into its component frequencies. This is because each formant within a complex sound causes vibration at a different position along the basilar membrane, and hence causes a different subset of hair cells to respond. Action potentials generated in the neurons connected to the hair cells are transmitted to the brain via the auditory nerve. What reaches the brain, then, already contains information about frequency, and hence pitch (coded by which cells are firing), together with a preliminary breakdown into formants.
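The breakdown of a complex sound into its component frequencies can be illustrated with an idealised analogue in which each "hair cell" is a channel tuned to a single frequency. This is a simplification and an assumption: the real cochlea behaves more like a bank of overlapping band-pass filters than like the single-frequency Fourier analysis used in this Python sketch, and the sample rate, channel frequencies and component amplitudes are arbitrary illustrative choices. The names `synthesize`, `channel_response` and `responding_channels` are hypothetical.

```python
import math

SAMPLE_RATE = 8000  # samples per second (arbitrary illustrative choice)
N_SAMPLES = 800     # 0.1 s of signal, so every channel below completes whole cycles

def synthesize(components):
    """Sum of sine waves; components is a list of (frequency_hz, amplitude)."""
    return [
        sum(amp * math.sin(2 * math.pi * f * t / SAMPLE_RATE) for f, amp in components)
        for t in range(N_SAMPLES)
    ]

def channel_response(signal, freq_hz):
    """Amplitude of the component of `signal` at freq_hz (a single Fourier coefficient)."""
    re = sum(x * math.cos(2 * math.pi * freq_hz * t / SAMPLE_RATE) for t, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE) for t, x in enumerate(signal))
    return 2 * math.hypot(re, im) / len(signal)

# Hypothetical 'hair cell' channels, each tuned to one frequency (Hz).
CHANNELS = [250, 500, 1000, 1500, 2000, 3000]

def responding_channels(signal, threshold=0.1):
    """Channels whose response exceeds the threshold, i.e. which 'cells' fire."""
    return [f for f in CHANNELS if channel_response(signal, f) > threshold]

# A complex sound with energy at two frequencies, loosely analogous to two formants.
complex_sound = synthesize([(500, 1.0), (1500, 0.5)])
print(responding_channels(complex_sound))  # [500, 1500]
```

Only the channels tuned to the two components respond, and their response sizes recover the component amplitudes, which is the sense in which the signal has been "broken down" by place before it reaches the brain.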

The auditory nerve feeds into the brainstem at the cochlear nucleus, from where the auditory pathway ascends, via further brainstem and midbrain nuclei, to the medial geniculate nucleus of the thalamus, the relay station in the middle of the forebrain (Figure 12). From here, the main route for auditory information is to the auditory cortex of the superior temporal lobe on both sides of the brain (there is also a pathway to the amygdala, not shown in the figure). Neurons in the auditory cortex respond predominantly to input from the ear on the opposite side of the body, although some integration of information from the two ears already occurs at the brainstem level.

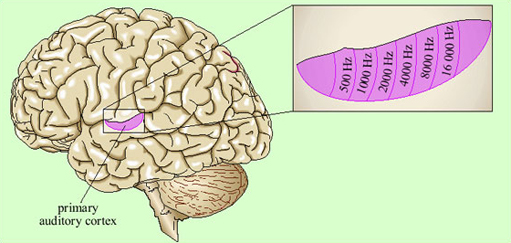

Representation of the signal in the primary auditory cortex is tonotopic: cells at different locations respond to sounds of different frequencies, producing a ‘map’ of the frequency spectrum of the sound laid out across the surface of the brain (Figure 13). Recognition of speech sounds, however, depends not on the absolute frequencies of the formants but on their relationship to one another. This relational processing is assumed to take place in deeper layers of the auditory cortex, though exactly where, or how, is not yet understood.
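The idea that recognition depends on the relationship between formants rather than their absolute frequencies can be sketched with a single relational feature, the ratio of the second formant (F2) to the first (F1). This is a deliberate simplification, not a model proposed in the text: the vowel templates below are rough, illustrative formant values (in the region of typical adult male averages) and should be treated as assumptions, and the function names are hypothetical.

```python
import math

# Rough, illustrative (F1, F2) values in Hz for three English vowels.
# These are assumptions for demonstration, not measured data.
VOWEL_TEMPLATES = {
    "i": (270, 2290),  # vowel in 'heed'
    "u": (300, 870),   # vowel in 'who'd'
    "a": (730, 1090),  # vowel in 'hod'
}

def relational_feature(f1, f2):
    """Log of the F2/F1 ratio: unchanged if both formants are scaled by the same factor."""
    return math.log(f2 / f1)

def classify_vowel(f1, f2):
    """Return the template vowel whose F2/F1 relationship is closest to the input's."""
    feature = relational_feature(f1, f2)
    return min(
        VOWEL_TEMPLATES,
        key=lambda v: abs(relational_feature(*VOWEL_TEMPLATES[v]) - feature),
    )

# A hypothetical speaker whose formants are all 25% higher (an idealised uniform shift).
for vowel, (f1, f2) in VOWEL_TEMPLATES.items():
    scaled = (f1 * 1.25, f2 * 1.25)
    print(vowel, "->", classify_vowel(*scaled))
```

Because scaling both formants by the same factor leaves their ratio unchanged, each shifted vowel is still matched to its own template even though every absolute frequency has changed. Real vowel normalisation is considerably more complex than a single ratio; the sketch only illustrates the principle stated above.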

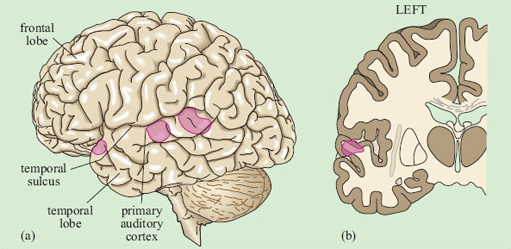

There is some evidence that the auditory cortex contains populations of neurons specialised for speech. Brain imaging experiments compared patterns of activation in response to speech, to scrambled mixtures of speech sounds, and to non-speech sounds matched to the speech sounds on basic acoustic features (Moore, 2000). Areas in the superior temporal sulcus, on both sides of the brain, responded preferentially to real and scrambled speech (Figure 14) rather than to the other sounds.

Listening to speech activates the auditory cortex on both sides of the brain. Damage to the superior temporal lobe on either side causes difficulties with speech recognition, though the pattern of difficulties may differ somewhat between the two sides.

It seems, then, that the initial perception of speech is carried out by some of the neurons in the auditory areas of the superior temporal lobe, on both sides of the brain. As we have seen, however, there is much more to language than identifying phonemes and formants; correspondingly, there are many other brain areas which, when damaged, cause problems with speech and language. We must therefore consider the architecture of the language-processing system as a whole.