3.5 Electrophysiological studies of language processing

Brain imaging and studies of aphasia have helped us to localise the subparts of language processing within the brain. However, they have shed little light on how processing unfolds in real time. This is because brain imaging is poor at tracking changes in activity over time in fine detail, so it is hard to detect one process happening slightly before another.

In Section 2, we identified several tasks that the language processor has to perform – phonological analysis, syntactic analysis, retrieval of word meaning and so on. We stressed that you cannot always complete one without reference to the others. For example, a signal which is phonologically ambiguous might be resolved by the context, or the rest of the sentence might determine which of several possible meanings should be given to a particular word. But which happens first? Do they all take place in parallel? Do they each work independently, or is there cross-talk between them?

There is a general controversy within linguistics about just how much the different processing components interact. In one school of thought, for example, the identification of phonemes and word boundaries goes on autonomously, without access to the likely meanings of words or the context. This is a modular model, with processing going on in separate watertight subsystems. The alternative would be an interactive approach. In an interactive model, phoneme recognition and segmentation processes would already be influenced by information about what meanings are likely to be conveyed in the context. Even the modular model admits there must be some interaction between different processes. For example, in (7) (in Section 2.3), we rejected the second segmentation (7b) on the basis not of its phonological implausibility but because it doesn't mean anything. The difference between the two models lies in where they see the interaction happening. In an interactive account, considerations of meaning enter into the very process of identifying and segmenting the words, blocking (7b) and causing the processor to output (7a).

(7a) [my] [dads] [tu] [tor]

(7b) [mide] [ad] [stewt] [er]

In a modular account, phonological processing uses acoustic information only. It then outputs to the semantic and syntactic processors ‘here are two possible segmentations of this signal’ (i.e. 7a and 7b). The semantic and syntactic processors then accept one and suppress the other.
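The two accounts differ in where meaning enters, and that difference can be sketched as a toy program. This is a minimal illustration, not a model from the literature. The "signal" is a letter string standing in for a stream of sounds, and `FORMS`, `MEANINGFUL` and the function names are hypothetical. `FORMS` plays the role of phonologically possible chunks. Some of them, like helf, mean nothing, much as stewt in (7b) means nothing. `MEANINGFUL` stands in for what the semantic and syntactic processors can interpret.

```python
# Toy contrast between modular and interactive word segmentation.
# FORMS and MEANINGFUL are hypothetical stand-ins for phonological
# knowledge and for "what makes sense in this context".
FORMS = {"book", "books", "shelf", "helf"}   # phonologically possible chunks
MEANINGFUL = {("book", "shelf")}             # parses the context can interpret


def segmentations(signal, forms, keep=lambda parse: True, prefix=()):
    """Return every way of splitting `signal` into chunks from `forms`.

    `keep` is called on each partial parse; returning False abandons it.
    """
    if not signal:
        return [prefix]
    results = []
    for end in range(1, len(signal) + 1):
        chunk = signal[:end]
        parse = prefix + (chunk,)
        if chunk in forms and keep(parse):
            results.extend(segmentations(signal[end:], forms, keep, parse))
    return results


def modular(signal):
    # Stage 1: form-only segmentation proposes every candidate.
    candidates = segmentations(signal, FORMS)
    # Stage 2: a later meaning check accepts some and suppresses the rest.
    accepted = [c for c in candidates if c in MEANINGFUL]
    return accepted, candidates


def interactive(signal):
    # Meaning is consulted while segmenting: a partial parse is abandoned
    # as soon as it cannot be the start of any interpretable parse.
    def plausible(parse):
        return any(m[:len(parse)] == parse for m in MEANINGFUL)

    parses = segmentations(signal, FORMS, keep=plausible)
    return [p for p in parses if p in MEANINGFUL]


accepted, proposed = modular("bookshelf")
print(proposed)                  # [('book', 'shelf'), ('books', 'helf')]
print(accepted)                  # [('book', 'shelf')]
print(interactive("bookshelf"))  # [('book', 'shelf')]
```

Both versions settle on the same parse. The modular version builds the meaningless books + helf parse and suppresses it afterwards, whereas the interactive version abandons it as soon as books turns out not to begin anything interpretable.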

Rather similar issues arise with ambiguous words, as in (19):

(19a) The robber walked into the bank.

(19b) The canoe crashed into the bank.

It has long been known that if the word bank is flashed onto a screen, then for some time afterwards all the meanings associated with bank are primed in a person's brain. The classic way of testing this is a lexical decision task. The participant is shown a string of letters and has to decide, as quickly as possible, whether it makes up a word, and the time taken to respond is recorded. People who have just seen the word bank on a screen are quicker to decide that money is a word than people who have not seen it. They are also quicker to decide that river is a word. However, they are no quicker to decide that giraffe is a word. Thus, the word bank partially activates the whole realm of meanings related to it, so that you are then quicker to recognise other members of that realm when you encounter them.

The question, then, is whether in (19a) only the financial meaning is ever accessed for bank, or whether the river meaning is also accessed. If the former is the case, it would be evidence for an interactive account: elements of the context affect the processing of bank to the extent that the river meaning is never accessed. If the latter is the case, it would be evidence for a modular account: bank primes river automatically, without regard for the context.
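The logic of the lexical decision comparison, and the inference drawn from it, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions. The function names and the 20 ms cut-off are hypothetical, and a real study would use a statistical test across participants and items rather than a fixed threshold. Here a target counts as primed if people who saw bank respond to it faster, on average, than people who did not.

```python
from statistics import mean


def priming_effect(unprimed_rts, primed_rts):
    """Mean speed-up in milliseconds: positive if primed responses were faster."""
    return mean(unprimed_rts) - mean(primed_rts)


def primed_targets(rts_by_target, threshold_ms=20.0):
    """Return the targets whose speed-up exceeds threshold_ms.

    rts_by_target maps each target word (e.g. "money", "river", "giraffe")
    to a pair (unprimed_rts, primed_rts) of lexical decision times in ms.
    threshold_ms is a hypothetical stand-in for a proper significance test.
    """
    return {
        word
        for word, (unprimed, primed) in rts_by_target.items()
        if priming_effect(unprimed, primed) > threshold_ms
    }


def favoured_account(targets_primed_after_bank_in_19a):
    """Apply the reasoning in the text to sentence (19a).

    If the contextually irrelevant river meaning is primed at all, bank was
    processed without regard to context, which favours a modular account.
    """
    if "river" in targets_primed_after_bank_in_19a:
        return "modular"
    return "interactive"
```

The related targets (money, river) and the unrelated control (giraffe) go through the same comparison. This is what lets the giraffe condition show that any speed-up comes from the meaning relation rather than from practice or general alertness.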

In fact, what happens with (19a) is that river is primed immediately after bank is encountered, but this priming then fades away rapidly. By contrast, money is primed immediately and stays primed. Sentence (19b) produces the mirror-image pattern: river stays primed, while the priming of money fades. This can be taken as evidence for a modular account: all the possible meanings of bank are primed automatically by the processing of the word itself. It is only subsequently that the integration of bank into the sentence context suppresses the other meanings.

This stage-like model is supported by direct recording of brain activity while linguistic processing is going on. Electrodes placed on the scalp pick up small changes in electrical activity caused by changes in neuronal activity in the brain. The participant is given an experimental and a control task, and the differences between the patterns of electrical activity in the two conditions can then be investigated. Because the activity is measured relative to a particular event, such as the appearance of a word, the technique is known as event-related potential (ERP) recording. It lacks the precision of localisation provided by brain imaging, but it has the advantage of very fine temporal resolution (in the order of milliseconds), and thus is informative about the time course of the brain's response to a stimulus.
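The response to a single word is small compared with ongoing background activity. ERP studies therefore average many recordings time-locked to the stimulus and read the effect of interest off the difference between the experimental and control averages. The sketch below simulates this for one electrode. Everything in it is hypothetical: the 10 ms sampling interval, the noise level, the trial counts, and the size and shape of the simulated deflection (a negative bump centred on 400 ms, loosely modelled on the N400 described below). Real pipelines also filter, baseline-correct and reject artefacts, which this sketch omits.

```python
import math
import random
from statistics import fmean

TIMES_MS = list(range(0, 801, 10))  # one sample every 10 ms, 0-800 ms after the word


def simulate_trial(rng, component_uv, noise_uv=10.0):
    """One hypothetical single-trial recording at one electrode (microvolts).

    component_uv is the peak amplitude of a deflection centred on 400 ms
    (negative for a negative-going deflection); Gaussian noise stands in
    for ongoing background EEG.
    """
    return [component_uv * math.exp(-0.5 * ((t - 400) / 50) ** 2)
            + rng.gauss(0.0, noise_uv) for t in TIMES_MS]


def average(trials):
    """Point-by-point average across trials, all time-locked to word onset."""
    return [fmean(samples) for samples in zip(*trials)]


def difference_wave(experimental, control):
    """Experimental minus control, sample by sample."""
    return [e - c for e, c in zip(experimental, control)]


def most_negative_latency(wave):
    """Latency (ms) of the most negative point in the wave."""
    index = min(range(len(wave)), key=wave.__getitem__)
    return TIMES_MS[index]


rng = random.Random(1)
control = average([simulate_trial(rng, 0.0) for _ in range(400)])
anomalous = average([simulate_trial(rng, -5.0) for _ in range(400)])
diff = difference_wave(anomalous, control)
print(most_negative_latency(diff))
```

A single simulated trial is dominated by the noise. After averaging 400 trials per condition, the most negative point of the difference wave should fall close to 400 ms.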

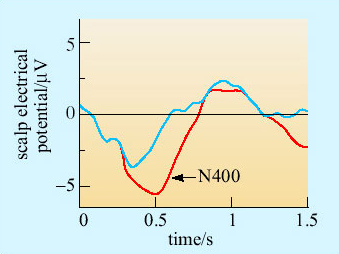

ERPs are hard to interpret without well-designed experiments. The classic ERP paradigm for studying language is to construct sentences that are for some reason hard to process, and compare their ERP traces with those of ordinary sentences (Friederici, 2002). For example, a sentence in which one word is semantically out of place, so that it is hard to find a meaning for it, produces a negative-going deflection in the activity trace over the central part of the scalp about 400 milliseconds after the deviant word (Figure 18). This deflection is known as the N400. It is fairly consistent in two respects: it peaks at around 400 milliseconds after the key word, and it is provoked by semantic anomalies (sentences which are grammatically correct but weird in meaning) rather than by syntactic ones.

SAQ 15

In Figure 18, which sentence is semantically normal, and which is semantically anomalous?

Answer

The shirt was ironed is semantically normal. The thunderstorm was ironed is semantically anomalous. We do not expect thunderstorms to be ironed – indeed it is hard to see what this could mean. (The thunderstorm was ironed produces an N400 deviation.)

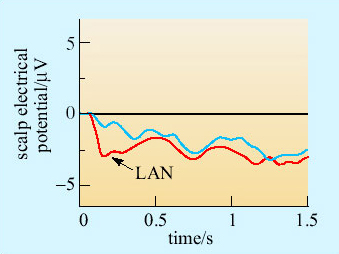

Sentences where the difficulty is syntactic provoke two kinds of deviation. An ungrammatical sentence like The shirt was on ironed produces a negative deflection over the left frontal lobe, often within a couple of hundred milliseconds of the word ironed (Figure 19). This deviation is called the LAN (left anterior negativity).

SAQ 16

Why do you think the LAN is observed specifically over the left frontal lobe?

Answer

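The left frontal lobe is the site of Broca's area, which we believe to have important syntactic functions. (Bear in mind, though, that ERPs give only a rough indication of where in the brain a signal is generated.)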

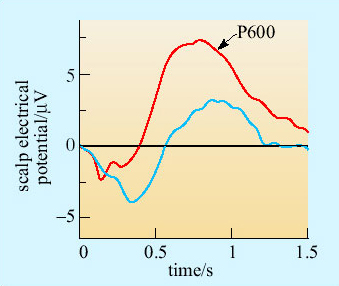

A second deviation is also produced by syntactic complexities, somewhat later and over more posterior regions of the scalp. This positive deflection is called the P600, and it typically peaks around 600 milliseconds after the key word (Figure 20). The P600 is produced by grammatical violations, and also by sentences that are syntactically tricky or ambiguous. Sentences like The shirt was on ironed produce a LAN and a P600. Other sentences, like The horse raced past the barn fell, produce a P600 but no LAN.

SAQ 17

What do you think the cause of the P600 might be, and how does this differ from the LAN?

Answer

Both the P600 and the LAN follow syntactic anomalies. The LAN is triggered by gross violations of syntax which are immediately obvious. In The shirt was on ironed there is no way that the string of words can ever be grammatical, since on must be followed by a noun phrase, and ironed is a verb. The horse raced past the barn fell is not ungrammatical: it means "the horse that was raced past the barn fell". There is no violation, and therefore no LAN is produced. However, it is a difficult sentence to integrate conceptually; one tends to start off down one track (taking raced as the main verb) and then, on reaching fell, has to switch interpretations. The P600 reflects this extra cognitive work: the search for an appropriate conceptual representation of the sentence.

Note that the LAN occurs earlier than the response to the semantics of the words (the N400), and that the P600 occurs after the initial syntactic and semantic responses. This has been taken as evidence for a three-stage account of sentence processing. First, there is an initial syntactic analysis of the sentence, mainly in or around Broca's area. This happens within a couple of hundred milliseconds and produces the LAN. It probably identifies the key lexical elements, whose individual meanings must then be processed.

Semantic processing of the key components of the sentence then follows. This happens further back in the brain and produces the N400. Finally, the structure and meaning of the sentence are established by integrating semantic and syntactic information. This is straightforward for simple sentences, but where the words or structure are ambiguous, it may mean revising the initial syntactic analysis or suppressing alternative meanings. This is probably the stage at which the unwanted senses of bank are suppressed in (19). It is the stage at which you decide whether a sentence like They are hunting dogs is a description of dogs or a description of what the hunters are doing. The extra work that may be required at this stage is reflected in the P600, which is produced by complex and ambiguous sentences.

Neat evidence for this three-stage model comes from the response to doubly anomalous sentences, like (20).

(20) The thunderstorm was in ironed.

SAQ 18

What ERP responses might you expect that (20) would produce?

Answer

You would expect a LAN, because the sentence is grossly ungrammatical. You would also expect an N400, because of the semantic anomaly of thunderstorm … ironed.

What is observed in response to (20) is in fact a LAN, but no N400. This suggests that the sentence gets blocked at the initial syntactic analysis stage and is not ‘passed on’ to the semantic system for meaning analysis.

Such evidence supports a stage-like and somewhat modular account of sentence processing. First, the phonology makes available a representation of the sentence. Then an initial syntactic analysis (in the left frontal lobe) assigns a basic structure to the sentence and passes the key words on for semantic processing. The semantic system then makes available their possible meanings. This all happens within half a second. Finally, there is a conceptual integration of syntax and semantics, starting at about 600 milliseconds. Note that the sentence itself must be kept available in a buffer or short-term memory all this time, in case the parsing proves wrong and re-analysis is necessary.
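The gating logic of this three-stage account can be summarised as a toy program. It is a sketch under heavy assumptions, not a processing model. The `Sentence` fields and the `predicted_erps` function are hypothetical, the difficulty flags are hand-coded rather than computed by any parser, and the timings appear only in comments. Its central claim is the one drawn from (20): when the initial syntactic analysis fails, nothing is passed on to the semantic stage, so no N400 is predicted. The rule that violations also yield a P600 comes from the earlier statement that the P600 is produced by grammatical violations. For (20) itself, the section reports only a LAN and the absence of an N400.

```python
from dataclasses import dataclass


@dataclass
class Sentence:
    """A sentence plus hand-coded (hypothetical) difficulty flags."""
    text: str
    syntax_violation: bool = False   # e.g. "on" followed directly by a verb
    semantic_anomaly: bool = False   # e.g. a thunderstorm being ironed
    needs_reanalysis: bool = False   # e.g. a garden-path sentence


def predicted_erps(sentence):
    """Return the ERP components a three-stage account would predict."""
    components = []

    # Stage 1 (within a couple of hundred ms): initial syntactic analysis.
    if sentence.syntax_violation:
        components.append("LAN")
    # Stage 2 (around 400 ms): semantic processing of the key words,
    # reached only if the initial syntactic analysis succeeded.
    elif sentence.semantic_anomaly:
        components.append("N400")

    # Stage 3 (from about 600 ms): integration of syntax and semantics.
    # Violations and structures that must be revised cost extra work here.
    if sentence.syntax_violation or sentence.needs_reanalysis:
        components.append("P600")

    return components


examples = [
    Sentence("The shirt was ironed."),
    Sentence("The thunderstorm was ironed.", semantic_anomaly=True),
    Sentence("The shirt was on ironed.", syntax_violation=True),
    Sentence("The horse raced past the barn fell.", needs_reanalysis=True),
    Sentence("The thunderstorm was in ironed.",
             syntax_violation=True, semantic_anomaly=True),
]

for s in examples:
    print(f"{s.text:<40} {predicted_erps(s)}")
```

The toy reproduces the patterns described in this section only because its flags were set to match them. It illustrates the structure of the argument, not evidence for it.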