2 Beware of the bubble!

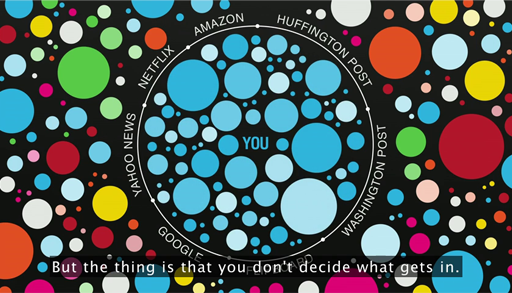

Not only does Google look for websites that match the search request you enter, it also looks for sites that match you. Based on your search history, location and any other data it holds about you, it tailors which results to display, so that eventually you might end up trapped in your own private ‘bubble’, which limits your horizons.

Similarly, Facebook’s ‘News Feed’ shows you ‘stories that … are influenced by your connections and activity on Facebook’.

Activity 3 The filter bubble

Watch this TED talk on the ‘filter bubble’ by Eli Pariser.

Many people see filter bubbles as a threat to democracy. This is because they may result in us only sharing ideas with like-minded people, so that we are not exposed to differing points of view. We simply end up having our existing opinions and beliefs continually reinforced.

In his farewell address, President Barack Obama spoke of the ‘retreat into our own bubbles, ... especially our social media feeds, surrounded by people who look like us and share the same political outlook and never challenge our assumptions ...’.

Activity 4 Reflecting

Having watched Eli Pariser’s talk, take a few moments to consider whether you are in a filter bubble.

Do you think you are getting a balanced information diet?