1. Getting Started: Readings

| Site: | OpenLearn Create |

| Course: | Design and Build Digital Assessments |

| Book: | 1. Getting Started: Readings |

| Date: | Tuesday, 3 March 2026, 7:15 AM |

1. Types of Assessment

Diagnostic – assessment of a learner’s knowledge and skills at the outset of a course and at any point during a course to guide teaching strategy.

It can also be used in open and distance learning and combined with self-assessment to indicate different options for study.

Self-Assessment – long used in open and distance learning. The student is asked a question or given a problem to solve and can then look up the correct answer to compare with their own work. This is intended to prompt reflection by the learner and help embed learning.

Formative – assessment that provides developmental feedback to learners on their current understanding of, and skills in, the subject.

Formative assessment can also be described as ‘just for learning’, since it leads to no final qualification; instead, it prompts learners to reflect on and adjust their own learning activities.

It can also help the teacher adapt their strategy in light of the results, so it can fulfil a diagnostic function as well.

Peer Assessment – assessment activities that students carry out on each other’s work. This can be a powerful engagement and learning technique. To assess each other, students must engage deeply with the criteria for a particular outcome, which improves their understanding of their own learning targets. Having to explain their assessment to their peers also deepens their own understanding, while receiving feedback from a peer in their own language provides another channel for learning.

Summative – the final assessment of a learner’s achievement, usually leading to a formal qualification or certification of a skill, also sometimes referred to as assessment of learning.

2. Levels of Assessment

Assessment of any kind can be described as low, medium or high stakes. A low-stakes assessment is usually diagnostic, self-, formative or peer assessment, with results recorded locally. A medium-stakes assessment is one whose results may be recorded locally and nationally but which is not ‘life changing’. A high-stakes assessment, however, is one whose outcomes are of high importance to both the examining centre and the candidates, affecting progression to subsequent roles and activities.

3. Principles of Assessment

This may seem a bit odd at first. Is asking what our principles are, and defining them, a philosophical detour? It does make good sense, though, to stand back a little and reflect when adopting new technologies. Otherwise there is a strong tendency to carry existing attitudes and practices over into the technology, with poor results. If your institution has defined values and strategies for teaching, look at them and consider how they might be enacted through e-assessment. In this section we look at some examples.

Assessment using projects – at City of Glasgow College

For instance, the City of Glasgow College has a teaching and learning strategy, which stresses the need to adopt a project-based teaching model; this in turn has major implications for assessment design and SQA verification procedures. The approach is described as:

“Here’s how the project model works

We need to make sure that our students develop the skills employers need. We also need to create a learning experience that mirrors the working environment as closely as possible. To do this, we’re adopting a project-based model.

The project brief is developed by lecturers, alongside input from employers, guaranteeing that the outputs are directly relevant to industry. The outputs could even be practical results that are applied in the workplace, support the work of a social enterprise, or contribute to the community.

Through integration, each project is designed to meet the outcomes of some of the constituent units of the course. In some cases, it is appropriate to include elements that involve cross-disciplinary working between different curriculum areas. The students’ work on the project is supported in a variety of ways. This includes workshops, team teaching, group work, independent study - including use of MyCity [the VLE] – work experience and other experiential learning, such as trips and visits. Students work collaboratively with their colleagues but also with lecturers, and even employers. The outputs are then assessed by peers, lecturers and employers.”

From: The City of Glasgow College Staff Induction Guide

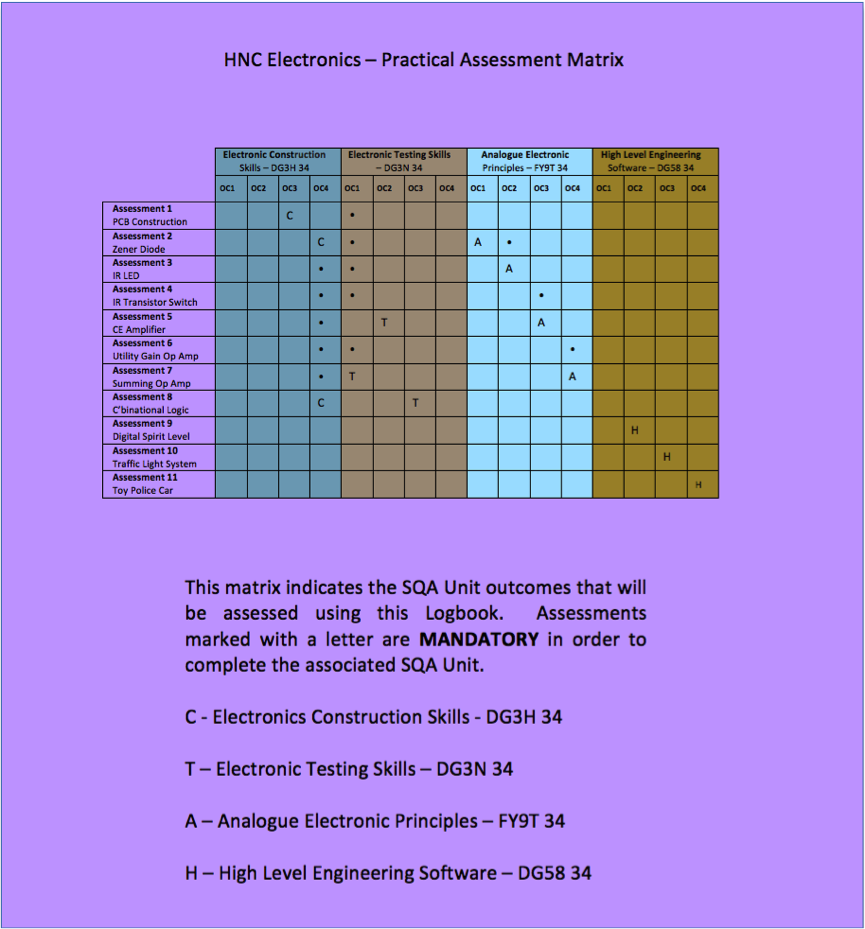

In several development projects at the college, this has involved negotiation and consultation with the SQA to propose assessment methods and instruments that would combine several individual units into a single project. This would need to be done whether or not e-assessment was involved. The graphical matrix captioned below represents the remapping of the individual SQA unit assessments across the project as a whole.

Caption: Assessments mapped across several units to support project-based teaching Photo: CIT-eA Project

The Scottish Curriculum for Excellence Principles

When designing assessments, we also need to think about the principles of the Scottish Curriculum for Excellence (CFE). Its general aim is to develop four key capacities in each young person, enabling them to be:

- a successful learner
- a confident individual
- a responsible citizen
- an effective contributor

The table below from Education Scotland [1] breaks down these capacities into attributes and capabilities.

Students entering the FE and HE sectors from 2015 onwards will have been educated under this system, and the government expects these sectors to engage with its aims in the design of their provision. So redesigning an assessment is also an opportunity to ‘design in’ elements of the CFE.

Principles of Assessment Design from the Scottish REAP Project

The ideas developed by the REAP project offer a broader view of assessment that is useful to refer to when redesigning your existing assessments. Below are the REAP principles of ‘good assessment design for the development of learner self-regulation’.

The first seven are about using assessment tasks to develop learner independence or learner self-regulation ("empowerment").

The final four principles are about using assessment tasks to promote time on task and productive learning ("engagement").

Balancing the "engagement" and "empowerment" principles is important in the early years of study in HE and FE.

Eleven general principles of good assessment design

"Empower"

- Engage students actively in identifying or formulating criteria
- Facilitate opportunities for self-assessment and reflection
- Deliver feedback that helps students self-correct
- Provide opportunities for feedback dialogue (peer and tutor-student)
- Encourage positive motivational beliefs and self-esteem
- Provide opportunities to apply what is learned in new tasks
- Yield information that teachers can use to help shape teaching

"Engage"

- Capture sufficient study time and effort in and out of class
- Distribute students’ effort evenly across topics and weeks
- Engage students in deep, not just shallow, learning activity
- Communicate clear and high expectations to students

Twelve principles of good formative assessment and feedback

Each principle is followed by questions to help you contextualise it:

- Help clarify what good performance is (goals, criteria, standards). To what extent do students in your course have opportunities to engage actively with goals, criteria and standards before, during and after an assessment task?
- Encourage ‘time and effort’ on challenging learning tasks. To what extent do your assessment tasks encourage regular study in and out of class, and deep rather than surface learning?
- Deliver high-quality feedback information that helps learners self-correct. What kind of teacher feedback do you provide, and in what ways does it help students self-assess and self-correct?
- Provide opportunities to act on feedback (to close any gap between current and desired performance). To what extent is feedback attended to and acted upon by students in your course, and if so, in what ways?
- Ensure that summative assessment has a positive impact on learning. To what extent are your summative and formative assessments aligned, and do they support the development of valued qualities, skills and understanding?
- Encourage interaction and dialogue around learning (peer and teacher-student). What opportunities are there for feedback dialogue (peer and/or tutor-student) around assessment tasks in your course?
- Facilitate the development of self-assessment and reflection in learning. To what extent are there formal opportunities for reflection, self-assessment or peer assessment in your course?
- Give choice in the topic, method, criteria, weighting or timing of assessments. To what extent do students have choice in the topics, methods, criteria, weighting and/or timing of learning and assessment tasks in your course?
- Involve students in decision-making about assessment policy and practice. To what extent are the students in your course kept informed or consulted about assessment decisions?
- Support the development of learning communities. To what extent do your assessments and feedback processes help support the development of learning communities?
- Encourage positive motivational beliefs and self-esteem. To what extent do your assessments and feedback processes activate your students’ motivation to learn and be successful?
- Provide information to teachers that can be used to help shape the teaching. To what extent do your assessments and feedback processes inform and shape your teaching?

[1] You can find out more about the Curriculum for Excellence at this weblink http://www.educationscotland.gov.uk/learningandteaching/thecurriculum/whatiscurriculumforexcellence/thepurposeofthecurriculum/index.asp

4. More than Marking

It’s useful to step back at this stage, before we get into the technology, and make sure we take a wider view of assessment as being about ‘more than just marking’. It is especially important to view assessment as a (varied) tool that can drive and support learning in a number of ways and not solely as a means of evaluating / measuring student knowledge and skills. This subtle but important distinction is part of moving teaching into the more ‘design intensive’ mode that is needed to make the best use of technology. In our project we found that introducing e-assessment into existing courses sparked quite a lot of wider course redesign on the part of the lecturers. Choosing how, when, where and what we assess can have a major impact on student learning and is a crucial part of good course design.

The move to project-based learning at City of Glasgow College has resulted in a major redesign of some courses in terms of what is taught and when. For instance, the redesign makes sure that theory is taught and assessed in practical contexts, whereas previously it had been taught in isolation, resulting in poorer student outcomes. Assessment thus comes to be seen as a tool for learning and not just as a means of measuring learning. This shift in perspective has big implications, and readers are directed to the REAP project website [1] for more guidance on assessment in general.

[1] http://www.reap.ac.uk/

5. The Assessment System Lifecycle

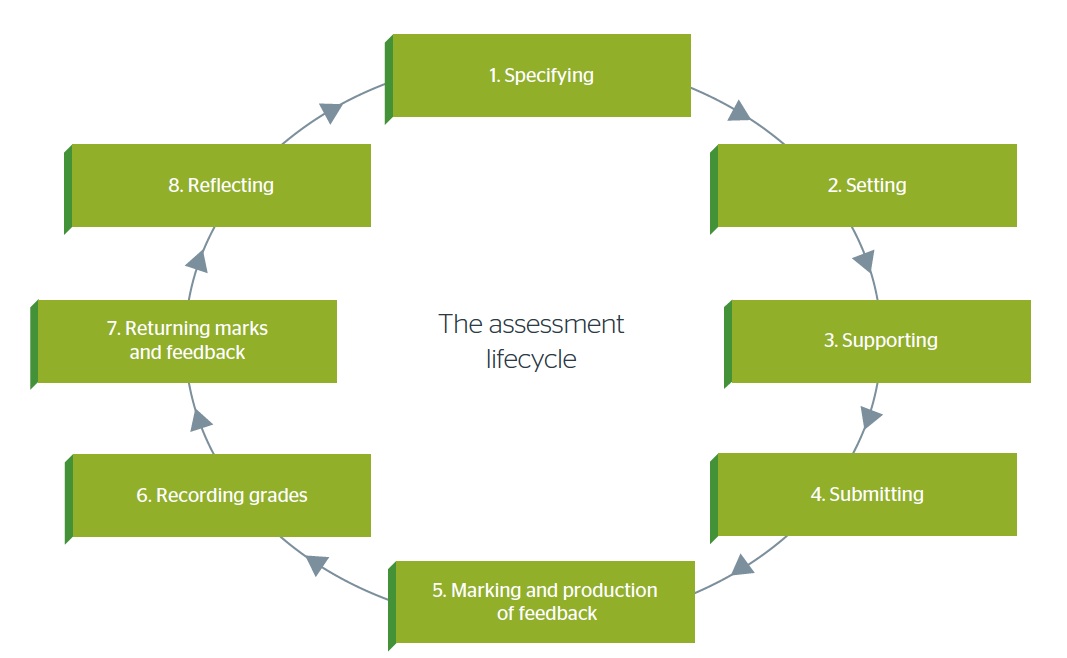

The concept of an ‘Assessment Lifecycle’ is useful and fits very well with the approach we take in this guide, which is to encourage a systematic way of looking at things. The image in this section provides a useful overview of the concept.

Caption: The Jisc Assessment Lifecycle, Jisc / Gill Ferrell (Image License BY). Based on Forsyth, R., Cullen, R., Ringan, N., & Stubbs, M. (2015). Supporting the development of assessment literacy of staff through institutional process change. London Review of Education, 13, 34–41.

The lifecycle model provides a useful means of mapping the processes involved and the potential for technologies to support them. It can be used to analyse a single course or an entire institution. A shared model like this can help when dealing with different groups in an institution[1]. By emphasising the connected nature of these activities, it helps each group understand how its work interacts with that of others. Below we provide brief summaries of each stage in the lifecycle to help you think about interpreting the model in your own situation.

Specifying – the key stage

Although colleges frequently design programmes of study, the qualifications on which these are based are generally specified by the SQA in consultation with subject experts. Similarly, the SQA specifies the assessment criteria, but colleges choose the assessment methods and instruments. So you might think that this part of the cycle is taken care of, but before we move on it is worth taking a closer look at this important stage.

Even where the assessment specifications appear to be ‘nailed down’ by an awarding body, bear in mind that they may still contain errors, contradictions and a lack of clarity (especially when a previous paper-based assessment is translated into a technological form), so it is essential to check them. This is crucial when adopting new modes of assessment, as in some cases the unit specifications may be quite dated and appear to exclude e-assessment through their use of language. The main thing to bear in mind when redesigning your assessments to use technology is this: as long as the original assessment criteria and methods are followed and the evidence generated matches them, it should not matter whether the mode of assessment and the evidence are digital. The SQA wants colleges to adopt e-assessment methods, as its foreword to this guide makes clear.

Because digital instruments and evidence might appear to differ from the original unit descriptor / specification, it is always sensible to record the changes (however small) as part of the Internal Verification (IV) process. Record them in the relevant college quality systems, together with the rationale for making them. Different colleges have different systems for doing this; many map the changes explicitly onto the unit descriptors, making clear where the changes are. We think it is good practice also to record the reasons for these changes and provide the ‘story’ behind them in the form of a simple narrative (we have produced some design templates for this, available from the project website). This should be stored with the rest of the quality control records for a particular unit so that future members of staff will be able to understand what is going on.

Providing this information is also crucial for the external component of the quality control process, External Verification (EV), where external subject experts and teachers ‘inspect the books’. The EV process checks that the unit specification has been adhered to (including assessments) and that the student work submitted is of a sufficient standard. It is in the colleges’ interests to make the external verifiers’ task as easy as possible.

The EV process is often still largely paper based. Copies of the unit specifications / descriptors are kept in folders, together with copies of the student work and records of the Internal Verification (IV) process that have been ‘stamped’ as accepted by the internal quality officers. When this process moves into the digital realm, colleges need clear procedures for where to store the digital equivalents of the paper folders. This includes simple but essential things like naming conventions for files and folders/directories, and ways of storing content and controlling access. Different methods and technologies are used for doing this (simple is usually best!); the main thing is that it is done. The quality function of the college is a good ally to cultivate in this process; we discuss this further in the Collaborative Frameworks section.
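To illustrate the kind of file-naming convention described above, the short Python sketch below builds a predictable storage path for one piece of student evidence. The folder layout (academic year / unit / cohort / file), the `evidence_path` function and the example values are all hypothetical. They are not an SQA or college standard, so adapt them to whatever your quality function agrees.

```python
import re
from pathlib import PurePosixPath


def _slug(text: str) -> str:
    """Lower-case the text and join its letter/digit runs with hyphens."""
    return "-".join(re.findall(r"[a-z0-9]+", text.lower()))


def evidence_path(academic_year: str, unit_code: str, cohort: str,
                  student_id: str, assessment: str, version: int,
                  extension: str = "pdf") -> PurePosixPath:
    """Build a predictable storage path for one piece of assessment evidence.

    Hypothetical convention, for illustration only:
    <year>/<unit>/<cohort>/<student>_<assessment>_v<NN>.<ext>
    """
    # Zero-padded version numbers keep drafts in order when sorted by name.
    filename = f"{_slug(student_id)}_{_slug(assessment)}_v{version:02d}.{extension}"
    return PurePosixPath(_slug(academic_year), _slug(unit_code), _slug(cohort), filename)
```

For example, `print(evidence_path("2024-25", "Unit 01", "HNC Computing A", "S1234567", "Project Report", 2))` prints `2024-25/unit-01/hnc-computing-a/s1234567_project-report_v02.pdf`. Lower-case, hyphenated names avoid problems when files move between systems that treat case or spaces differently.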

Setting the assessment

This is where the criteria, methods and instruments from the specification stage are used / interpreted in detail for a specific context, i.e. a college, a cohort of students, a department, lecturers etc. In our project, in moving from paper to electronic means of assessment, we found that this stage is where deep reflection can occur and creative solutions start to appear. It is therefore important to provide lecturers with support and time at this critical point. In practice this equates to the design stage of our toolkit. This is a good opportunity to think about the timing of assessment in courses and, if possible, to move some assessment to an earlier stage in the course rather than having it all bunched up at the end. Early formative / diagnostic e-assessments are a good idea, and objective / MCQ testing can provide rapid feedback to students. At this stage you also need to think about how the timing of your assessment plans fits into your students’ workload.

Changing the assessment technology from paper to electronic can be a kind of prompt to see things differently. We found that teachers often used the opportunity to fix or improve aspects of their courses that they were unhappy with.

Supporting

This part of the lifecycle is concerned with how you support the students while they are in the process of doing the assessment. As we explain in the Analysis section of the toolkit you need to think about the digital skills the students and staff [2] will need in order to complete the assessment using whatever systems the college uses. The Jisc EMA report makes some really useful observations about things to check at this stage:

- Do the students understand the type of assessment that they are being asked to do? Are they familiar with these methods? Ask them and make sure! Getting them to explain their understanding of the assessment can be a useful diagnostic technique; you could do this using a classroom voting system.
- Make sure you and your colleagues use the same terminology about the assessment with the students; in fact, agree a glossary of terms beforehand (small things make a big difference).
- You might need to put in place tutorials, seminars, workshops etc. that deal specifically with the new assessment methodology.
- If using objective / MCQ tests for summative assessment, it is essential to do a ‘trial run’ beforehand to familiarise your students with the technology and the college facilities. Doing so with a formative assessment is an efficient approach.
- If you want students to submit draft assignments for marking and feedback before the final submission, it is sensible to set up two clearly labelled and dated ‘Dropboxes’ on the college system. Then have the draft one ‘disappear’ to leave only the final one visible.

Submitting

The advantages of electronic online submission are listed in the section below, ‘Why change? Some benefits of e-assessment’. Submission is also a definite ‘pinch point’ where things can go wrong. The online test or ‘dropbox’ may not be set up correctly, or students may not know how to use the system or may be confused by it. There is a clear need to develop contingency plans here for system failure and human error. So have a clear ‘Plan B’ for emergencies and make sure that you, your colleagues and your students know what it is. Be especially clear about how students can let you and the system administrator know that something might be wrong. The plan may include:

- deadline extensions
- student email alerts
- system alerts to students (via the VLE or email etc.)
- a helpline for students (and making sure they know about it)
- a backup email address for emergency submission
- even allowing paper submissions if needed

Marking and Feedback

As the Jisc EMA report makes clear, this is where the usability of existing technical systems presents some challenges. This is why starting with pilot projects for low-stakes formative e-assessments makes a lot of sense. It allows you and your colleagues (and students) to understand the systems you are using and to work out simple, robust methods to get around some of these limitations. Then, with that foundation, you can undertake summative assessment.

In our project the use of online rubrics was a revelation for lecturers, and they all took to it immediately. They saw the benefits of greater marking consistency, clear feedback for students and a faster marking cycle overall. For similar reasons, they also really liked creating templates in the e-Portfolio system for students to complete.

Recording / Managing Grades

Many of the SQA units in qualifications taught in colleges are marked against criteria that lead to either a pass or a fail. However, many technical systems have a built-in presumption that marking will be in numbers or percentages, which are then used to assign grades of performance to students. This can be problematic, but with some thought it can be made to work in these systems.
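One way to make pass/fail units work in a gradebook that expects numbers is to agree a fixed numeric encoding and check for it when reading results back. The Python sketch below makes three assumptions. The gradebook stores scores from 0 to 100. A pass is stored as 100 and a fail as 0. A unit is passed only when every criterion is met. These values, that rule and the function names are illustrative, so check them against the unit specification and your own VLE settings.

```python
from typing import Mapping

# Hypothetical numeric values for storing a pass/fail outcome in a
# gradebook that only accepts numbers (assumed range 0-100).
PASS_SCORE = 100
FAIL_SCORE = 0


def outcome_from_criteria(criteria_met: Mapping[str, bool]) -> str:
    """Return 'Pass' only if every criterion is met (an assumed rule)."""
    if not criteria_met:
        raise ValueError("no criteria supplied")
    return "Pass" if all(criteria_met.values()) else "Fail"


def to_gradebook_score(outcome: str) -> int:
    """Encode a pass/fail outcome as a number the gradebook can store."""
    return {"Pass": PASS_SCORE, "Fail": FAIL_SCORE}[outcome]


def from_gradebook_score(score: float) -> str:
    """Decode a stored number back into pass/fail, rejecting anything else."""
    if score == PASS_SCORE:
        return "Pass"
    if score == FAIL_SCORE:
        return "Fail"
    raise ValueError(f"unexpected score {score!r} for a pass/fail unit")
```

Rejecting any other number on the way back out means an accidental percentage entered by hand (say 55) is flagged rather than silently treated as a result.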

The grading systems in VLEs and e-Portfolios can be difficult to use, often requiring a great deal of scrolling to view the correct data for a student. A common issue is that the college’s student record system is regarded as the definitive version of grading information for students. However, it is rarely linked to the VLE or e-Portfolio systems where online marking takes place. As a result, marks have to be transferred manually between systems, with the potential for error and delay. There is also the issue of lecturers keeping marking information outside the college systems because of skills / trust / access problems, again leading to the risk of error, delay and loss.
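Where marks must be copied by hand from the VLE or e-Portfolio into the student record system, a simple cross-check can catch transcription errors early. The Python sketch below assumes both systems can export results as student ID → result pairs (for example via a CSV download, which is itself an assumption about your systems). The `reconcile` function is hypothetical and only flags disagreements; it does not fix them.

```python
from typing import Dict, List, Tuple

MISSING = "<missing>"


def reconcile(vle: Dict[str, str], records: Dict[str, str]) -> List[Tuple[str, str, str]]:
    """Compare results exported from the VLE with the student record system.

    Returns (student_id, vle_value, record_value) for every student whose
    results disagree or who appears in only one of the two exports.
    """
    problems = []
    # Check the union of IDs so students missing from either side are caught.
    for student_id in sorted(set(vle) | set(records)):
        vle_value = vle.get(student_id, MISSING)
        record_value = records.get(student_id, MISSING)
        if vle_value != record_value:
            problems.append((student_id, vle_value, record_value))
    return problems
```

For example, with `vle = {"S001": "Pass", "S002": "Fail", "S003": "Pass"}` and `records = {"S001": "Pass", "S002": "Pass", "S004": "Fail"}`, the function flags S002 (values disagree), S003 (not yet in the record system) and S004 (no VLE result), and leaves S001 alone.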

Returning Marks and Feedback

Students need to know how to submit an e-assessment, but just as importantly they need to know how to receive and find their marks and feedback. Current systems do not make it clear to lecturers whether a student has received their marks and feedback. This is why it is essential to get both students and lecturers used to the system and to ways of working around its limitations. Students (and lecturers) need clear information about when and how marks and feedback will be returned.

Reflecting

Below we quote a useful example of institutional guidance on the Reflecting stage of the assessment lifecycle from Manchester Metropolitan University [3], which has been closely involved with Jisc in developing the lifecycle model. The change management process it describes maps onto the Internal Verification process used in Scottish FE colleges. The suggested annual review by unit and programme leaders is a particularly useful piece of advice. The guidance acknowledges the time and resource constraints that might get in the way of this. To make it happen, we would advise that it is formalised, as it is such an essential element in the maintenance of your e-assessment system.

“There are two parts to reflection on each assignment task: encouraging students to reflect on their own performance and make themselves a personal action plan for the future, and tutor reflection on the effectiveness of each part of the assessment cycle from setting to the return of work. It can be difficult to make time for either, with assessment usually coming at the end of a busy year, but it is worth making the effort.

If you wish to change any part of the assignment specification following review, then you will need to complete a minor modification form.

What Unit leaders need to do

Review the effectiveness of assignment tasks annually and report back to the programme team as part of Continuous Monitoring and Improvement

Encourage students to reflect on their previous assessment performance before beginning a similar assignment, even if in a different unit and at a different level.

What Programme leaders need to do

Review assessment across each level annually, using results and student and staff evaluations as a basis for discussion”.

[1] Research by Etienne Wenger and others into the management of knowledge and communities of practice within organisations identifies these kinds of tools as ‘boundary objects’ that help people to see how their work fits into the bigger picture.

[2] https://jisc.ac.uk/guides/developing-students-digital-literacy

[3] http://www.celt.mmu.ac.uk/assessment/lifecycle/8_reflecting.php

6. What is e-assessment?

Working with teaching staff in the project, we have been struck by the range of misconceptions that exist around the term ‘e-assessment’. It is often assumed to mean some kind of automated testing, usually some form of Multiple Choice Questions (MCQs). But it is really a much more inclusive and general term than that. In a section below we provide a handy conceptual model (the e-assessment ‘continuum’) to help you think about where you and your organisation might be in terms of adopting e-assessment. Part of the problem with terminology in this area, and with e-learning more widely, is that it is heavily laden with commercially driven hype [1]. This hype is intended to persuade people of the benefits of adopting (i.e. buying) technology, often with little or no evidence to back it up. We shall be ‘hype busting’ as we go along in this guide, to clear the way for more effective practice and, crucially, to widen our perspectives to enable more creative thinking.

Some Examples of e-Assessment

So, e-Assessment generally refers to the use of technology to deliver and manage assessment. It can be (and often is) very diverse due to a host of differing contextual factors such as access to the internet, location, situation of students, staff skills, college infrastructure, and money (of course!). Below we list just a few of the possibilities to help widen our view of what constitutes e-assessment:

- It can be used to deliver diagnostic, formative and summative assessments across a wide range of learning models: campus-based, ‘blended’ (i.e. a mixture of face-to-face and self-directed learning with technology) or fully distance learning.
- Assessments can include submitting an essay or assignment online via a VLE (Virtual Learning Environment), or even via email.
- It can be an online MCQ test, where students access the test and submit their answers to a pre-programmed ‘marking engine’.
- Assessments may take the form of self- and peer-assessment exercises, enabled by a specific technology (VLE, email, Twitter, Facebook etc.), in which students are required to assess each other's work on the basis of given criteria.

e-Assessment can be used across a range of subjects and is very popular in engineering, science, medical sciences and language disciplines.
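To make the idea of a pre-programmed ‘marking engine’ concrete, the Python sketch below marks a short MCQ test against an answer key. It also collects feedback for each question answered incorrectly, which is what makes rapid feedback to students possible. The `Question` structure, the `mark` function and the sample graphic-design questions are hypothetical; real VLE quiz engines have their own formats and options.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Question:
    prompt: str
    options: List[str]
    correct: int   # index of the correct option in `options`
    feedback: str  # shown to the student if they answer incorrectly


def mark(questions: List[Question], answers: Dict[int, int]) -> Tuple[int, List[str]]:
    """Score a student's answers and collect feedback.

    `answers` maps question index -> chosen option index; an unanswered
    question counts as incorrect.
    """
    score = 0
    feedback = []
    for i, question in enumerate(questions):
        if answers.get(i) == question.correct:
            score += 1
        else:
            feedback.append(f"Q{i + 1}: {question.feedback}")
    return score, feedback


questions = [
    Question("Which file format stores vector graphics?",
             ["JPEG", "SVG", "GIF"], correct=1,
             feedback="SVG stores shapes as vectors; JPEG and GIF store pixels."),
    Question("Which colour model is used for on-screen display?",
             ["CMYK", "Pantone", "RGB"], correct=2,
             feedback="Screens mix red, green and blue light (RGB)."),
]

score, notes = mark(questions, {0: 1, 1: 0})
print(f"Score: {score}/{len(questions)}")
for note in notes:
    print(note)
```

Running it prints `Score: 1/2` followed by the feedback for question 2. Writing the feedback alongside each question is what lets the engine return it immediately, without waiting for a lecturer to mark the test.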

In its broadest sense, e-assessment is the use of information technology for any assessment-related activity, and from this perspective MCQs are just one subset of many options. It can be used to assess both cognitive and practical abilities. For example, it can assess ‘explaining’ a concept or method in graphic design (cognitive), or ‘choosing and using’ the right tool to produce a desired result in graphic design (practical).

Using e-Portfolios for Assessment

A recent and important development in e-assessment has been the emergence of the e-portfolio. This uses technology to support and update the very old (and valuable) practice of students assembling a portfolio of their own work to provide tangible evidence of their achievements and to present for assessment. It is common in art education, for instance, but can be applied across all disciplines, and is very useful for supporting job applications and showing evidence of continuing professional development.

The essential difference between an e-Portfolio and a Virtual Learning Environment (VLE) is that the former is owned and controlled by the student, while the latter is owned and controlled by the teacher. So the e-Portfolio is a student-centred and student-controlled online space where the student can invite teachers (and other students) in to view content that they have created. In contrast, the VLE is a teacher-centric, college-controlled space where students and teachers come together to undertake programmes of learning.

[1] http://www.gartner.com/technology/research/methodologies/hype-cycle.jsp

7. The e-assessment continuum

A continuum describes a range of values that can lie between two ends or extremes. It is a useful idea for understanding, analysing, evaluating and sharing in relation to a given set of criteria. In our case we use the idea to describe the possible range of e-assessment methods and tools, graded by ease of use by teachers. Everyone’s continuum might look different (some may be narrow, some wide), so this is purely illustrative.

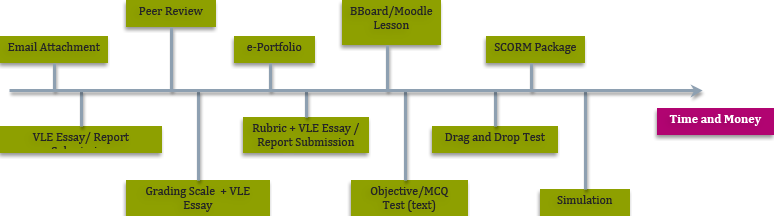

An E-Assessment Continuum

Caption: An e-assessment Continuum mapping methods and tools against the cost and time of setting them up and maintaining them

This is our project e-assessment continuum, shown above. In our case we use it to signify the difficulty of designing, developing and maintaining e-assessments using technology, plotted from easy on the left to hard on the right. Note that ‘easy’ and ‘hard’ here are determined by both relative and contextual factors. A little explanation will help. A lecturer may be really good, both technically and educationally, at creating Multiple Choice Questions (MCQs) that can be used in a college VLE. This would constitute a relative factor, i.e. the ability of the lecturer. However, the lecturer might have such a heavy workload that they can never make time to create an MCQ in the first place. That would be the contextual factor. Looking at things this way is also part of adopting a ‘systems’ view: seeing how different things and factors interact.

For our continuum we have in mind an ‘average’ lecturer in an ‘average’ college whose freedom of action is constrained by the kind of factors we have identified in the course of the CIT-eA project and are described in the rest of this guide.

8. Why change? Some benefits of e-assessment

There are a lot of good reasons for adopting e-assessment. Below are some examples that combine both traditional and innovative pedagogical approaches with the use of technology (this may also be useful as a prompt list in the design phase of your work). In the list below you can also see examples of assessment for learning, rather than just of learning (i.e. purely summative). In the design section of this toolkit you will find a list of typical e-assessment tools and some of their typical and potential uses. Once you start thinking about this, the list of advantages is practically endless:

Reduction in the use of paper for traditional assessment task such as essays and reports e.g. by going digital and using a simple VLE assignment submission ‘Dropbox’. This also brings potential advantages to help streamline and speed up assessment methods.

Students do not have to travel to the college to hand in the paper assignment (especially useful for those at work or at a distance)

Printing costs for students reduced

Automatic proof of submission

Work is safely stored and harder to lose

Students can receive electronic reminders about deadlines

Deadlines not governed by office hours or the working week

Not having to pick up carry and manage large piles of paper and folders

Increased speed, accuracy and consistency of marking

Being able to reuse common feedback and comments

Being able to make comments and feedback that are as long as needed (not limited by paper space) and clear of handwriting issues

Not having to decipher poor student handwriting

Students not having to decipher poor lecturer handwriting

Potential to add audio, video and graphical feedback

Potentially a big one for lecturers: the ability to work from home or other locations when setting up assessments, marking and providing feedback to students

Quicker, richer, better and more consistent student feedback on formative and summative assessments (critical for learning) e.g. by the use of a VLE and a rubric

Reducing marking workloads and coping with larger student cohorts e.g. by the use of a VLE and rubrics or by the use of objective / MCQ style testing

The ability for lecturers to collaborate virtually at a distance to assess students’ work and provide feedback, which is useful for inter-college collaborative courses and even for international collaborations.

Supporting deeper learning by asking students to create short explanations of key subject-area knowledge and skills for their peers, and getting them to mark each other’s work using the assessment criteria. The best explanations can then be harvested as future learning resources, e.g. by posting them to a discussion board where they can be discussed and marked.

Support deeper learning and course engagement and provide feedback on course design by getting the students to explain what they think the learning outcomes mean e.g. by posting to a discussion forum or blog. This gets the students to focus on the course and how they approach the assessments.

Prepare students for assessment by getting them to mark and provide feedback on previous student work (anonymised) and then reveal the actual marks and feedback e.g. by use of the VLE and discussion board and rubrics

Improves existing paper-based assessments that currently work poorly and disadvantage some students.

Evaluate knowledge and skills in areas that are expensive / dangerous to assess using current methods in labs / workshops / building sites / etc., e.g. via drag-and-drop interactive tests, scenarios, simulations etc.

Evaluate complex skills and practices e.g. by the use of an e-Portfolio that contains templates specifying the evidence that students have to generate to meet the outcomes

Provide immediate, ‘real-time’ feedback, e.g. by the use of objective / MCQ style testing and / or simulations

Support for collaborative learning e.g. by using an online discussion forum and getting students to peer assess / critique each other’s work or by joint authoring of reports and presentations that are submitted online

Assessing complex skills like problem-solving, decision-making and hypothesis testing, which are more authentic to future work experiences, e.g. by groups working through questions on case studies in a discussion forum, using simulations etc.

Providing richer activities (authentic work based scenarios) that can lead to improved student engagement and potentially improved student performance e.g. by the use of case studies and linked objective / MCQ testing or simulations or game playing

Provide more engaging assessments and encourage the development of observation and analysis skills, e.g. by placing short video clips / case studies in the VLE that do not include the conclusion and asking students on a discussion board ‘what happened next and why?’

Increasing flexibility in the approach, format or timing of an assessment, without time or location constraints.

Assessment on demand, as is the case with many professional and vocational qualifications, where individuals may be fast-tracked depending on their results

Integrating formative and summative judgments by making both assessment and instruction simultaneous e.g. by using objective / MCQ style testing and / or simulations that include rich feedback that can include short video clips from teachers / experts

9. The virtues of paper - a sideways look

Before we leave the ‘paper economy’ of education behind in our exploration of e-assessment, it is worth reflecting on why paper is still so prevalent in our education systems. This section therefore presents a little ‘devil’s advocate’ exercise to, hopefully, provoke some critical thinking on your part and encourage a healthy degree of scepticism.

We do not anticipate the complete disappearance of paper; we think there will be a ‘mixed economy’ of paper and digital for the foreseeable future, in our colleges and elsewhere. So this is a good point to remind ourselves of ‘the virtues of paper’ and why it persists. We see three main reasons:

Current systems (the complete cycle) are built around paper

It’s very simple to use

It’s very resilient

Together, these make a powerful case for the status quo: paper has none of the systemic dependencies that we have identified for e-assessment. Paper and pen have few technical problems. You don’t have to worry about having the right software version installed or which web browser works best. Nor do you have to worry about which data format to use to move information between different administration and management information systems. You need very little infrastructure: just rooms, desks, chairs and adequate light. So there are no worries about having the right number of computers, the right software and web browsers, sufficient staff and student skills, adequate internet access, skilled support staff on hand, and so on.

It’s not just in education that paper retains a stronghold; some of the most technologically sophisticated organisations in the world still make extensive use of paper:

From Flickr: US Pacific Fleet (License CC BY-NC 2.0)

Caption: SAN DIEGO (Jan. 15, 2014) First Class Petty officers take the E7 advancement examination in the wardroom of Wasp-class amphibious assault ship USS Essex (LHD 2). The exam, which tests rating and basic military knowledge, will be taken by approximately 17,000 E6's throughout the fleet this cycle. (U.S. Navy photo by Mass Communication Specialist 2nd Class Christopher B. Janik/Released)