Printable page generated Tuesday, 3 February 2026, 10:23 PM

Session 6 The monitoring and evaluation of Living Labs

In this sixth session of the course we explain how we went about monitoring and evaluating our Living Labs. First, watch the following video on some key features of our approach to monitoring and evaluation, and then do the reflective activity below.

Transcript

KEVIN COLLINS:

In this session, we look at the approach we use to monitor and evaluate our Living Labs. This approach flowed from our wish to learn in the Living Labs, to learn between the Living Labs, and learn beyond the Living Labs.

There are different contexts and innovatory services involved, so we needed to have a flexible and adaptable approach, where we could share reflections and ideas using a common conceptual framework.

In particular, we regularly reflected on three central questions, known as the three Es: Efficacy - has each Living Lab achieved its purpose? Efficiency - has each Living Lab used resources well? Effectiveness - have the Living Labs contributed to their wider purpose within AgriLink?

The answers to these questions at any point in the Living Labs' timelines help monitor and evaluate progress and shape the subsequent focus and activities of the Living Labs.

Reflective Activity 12

What do you understand by monitoring and reflexive monitoring? Write your thoughts in the box below before looking at our answer.

Answer

Our answer uses the practice abstract on Reflexive monitoring for learning about Living Labs written by Kevin Collins of The Open University:

Monitoring is a process to assess and evaluate an activity, usually while it is underway. It is often done by an ‘independent’ monitor, such that the monitoring is ‘removed’ from the experience and understanding of those being monitored. In contrast, reflexive monitoring is undertaken by those directly involved in the situation of interest to gain insight into their own learning and to use this to design improvements during the activity. AgriLink has used reflexive monitoring to learn about the roles and practices of the six Living Labs established to promote innovation in agricultural advisory systems.

The primary mechanism for reflexive monitoring is the appointment of a dedicated monitor in each Living Lab. The monitor works with the Living Lab facilitator to establish and develop reflexive monitoring of its work, focus and activities. The monitor compiles quarterly and annual monitoring reports to document and review changes to the purpose, work and direction of the Living Labs, problems and opportunities encountered, and key learning points. A central AgriLink monitoring team reviews the annual reports and synthesises learning. Subsequent discussion between Living Lab monitors and AgriLink colleagues provides further opportunity to monitor learning and reflect on the next steps for each Living Lab. This includes a process where monitors engage in peer reviews of other Living Labs and, in so doing, reflect on their own Living Lab and ways in which its work could be enhanced.

There are many tools and techniques which can be used to promote reflexive learning, such as the three Es criteria of efficacy, efficiency, effectiveness (see other AgriLink PAs); systems diagrams; structured inquiries during field visits; and co-researching.

Let us look at reflexive monitoring in a bit more detail.

Reflexive monitoring

Reflexive monitoring is one of the key principles of AgriLink’s Living Labs. It combines the concepts of reflexivity and monitoring. Reflexivity is a ‘second order’ process in which the observer sees her/himself as a part of the situation rather than apart from it (Ison, 2010; Fook, 2002). A reflexive process is one of critical self-awareness and thinking about thinking.

Figure 6.1 shows the main idea of reflexivity. When applied to the Living Labs, ‘practitioners’ might be researchers and other participants engaged in a critically reflective process about what they are doing and their underlying assumptions. Living Lab monitors had a key role in the reflexive monitoring of our Living Labs.

Equally, we have been clear about the framework of ideas we have used, as well as the methods and tools employed. Regular reporting on monitoring and evaluation provided opportunities and a structure for this reflexivity.

You will recall from Session 2 that the aim in the development of new innovation support services is to better connect research and farmer-based innovations, while appreciating the diversity of farmers’ micro-AKIS. Achieving this requires a reflexive stance on the side of both researchers and practitioners, to critically look at their own practices, their views and their ways of doing things.

The Living Labs drew upon action research traditions that include reflexive monitoring as an integral part of their process, in particular the approaches of Reflexive Monitoring in Action developed at Wageningen University and Systemic Inquiry developed at The Open University (see Box 7.1 below). Both these approaches draw on a range of other traditions of theory and practice and both are framed as learning approaches to change.

Box 7.1 Reflexive Monitoring in Action and Systemic Inquiry

Reflexive Monitoring in Action (RMA) is an interactive methodology to encourage reflection and learning within groups of diverse actors that seek to contribute to system change in order to deal with complex problems. This approach builds on the assumption that recurrent collective reflection on a current system of interest (barriers as well as opportunities) helps to stimulate collective learning and to design and adapt targeted systemic interventions (Van Mierlo et al., 2010).

Systemic inquiry (SI) is a key form of practice for situations that are best understood as interdependent, complex, uncertain and possibly conflictual. Reflexive monitoring is an integral part of SI, as is facilitation. Doing systemic inquiry with others is a particular means of facilitating movement towards social learning, which is the sort of ‘concerted’ action associated with everyone working well together to address an issue of concern, making their contributions in a harmonious way.

The overall inquiry (system) is monitored, measures of performance articulated against acceptable criteria and control action taken. Measures of performance are not imposed from the outside. Iteration and concurrent action in different stages are common (Ison, 2010).

Systemic evaluation

Like monitoring, evaluation can be formal, at particular stages of a process, and/or informal, ‘as you go’. Evaluation can be qualitative and/or quantitative and is driven by a need to develop knowledge (e.g. about learning or the effectiveness of an intervention), and/or by a need for accountability (e.g. regarding how resources have been used and whether a project has achieved what it set out to do). Evaluation can apply to outputs, outcomes and processes.

Evaluation of a process, product or service is often portrayed as a systematic determination of merit, worth and significance against stated objectives, using set criteria. This systematic approach tends to be a ‘first order’ process focusing on the subject in hand rather than a second order process that also takes a wider systemic view. In AgriLink, we purposefully combined reflexive monitoring with ‘systemic evaluation’ meaning evaluation that is informed by systems thinking, i.e. a process that involves engaging with multiple perspectives, recognising and understanding inter-relationships, and reflecting on boundary judgements.

Systemic evaluation is often about making a judgement about whether something is on track or not in a way that takes account of its context.

Monitoring and evaluation as an iterative process of interventions and learning

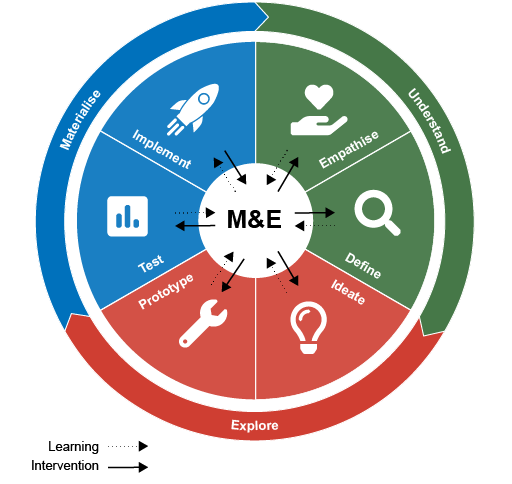

Monitoring and evaluation at the Living Lab level was also integrated with our use of design thinking, which we set out as a cyclic process (Figure 6.2). This involved interventions and learning moving among the different stages of the design thinking process on an iterative basis, as shown by the green and blue arrows in Figure 6.2.

There are many possible tools and techniques that can be used for different purposes for monitoring and evaluation within a Living Lab, and some have already been discussed. For example, some are suitable for the activities of data gathering (both quantitative and qualitative), some for data analysis, some for thinking, for action, for communication, for prediction, for involving people in the Living Lab process, and many more.

The AgriLink Living Lab teams considered their Living Labs as ‘systems of interest’ using systems thinking and systems diagrams, recognising that they have purposes, boundaries and environments that are not given but negotiated on an ongoing basis.

Reflexive monitoring is a learning process related to a system’s purpose and to intended interventions. In order to do this kind of monitoring, ‘performance measures’ were needed for each Living Lab system of interest.

One way of developing performance measures was to use a technique from systemic inquiry (and soft systems methodology – see Checkland and Scholes, 1999), whereby Living Labs considered how they were getting on at different levels using a series of performance measures that begin with ‘E’:

Will your Living Lab system of interest do what it’s meant to? (efficacy)

Will it use resources (including time, energy and enthusiasm) well in doing it? (efficiency)

Will it contribute to our higher level purpose(s)? (effectiveness)

From this starting point, monitors had to develop some indicators and questions of their own:

| | Indicators | Questions |
| Efficacy | | |
| Efficiency | | |
| Effectiveness | | |

Questions associated with the characteristics of each Living Lab were used to consider their functioning in relation to co-creation, active user involvement, real-life setting, multi-stakeholder participation and a multi-method approach, but it was important to consider the whole process, not just the parts.

Indeed, the three ‘E’ questions were also applied at the level of the whole Living Lab, as exemplified here:

| The efficacy of the innovation support service | To what extent is the developed innovation support service being applied and really supporting innovation and learning on the core issue? |
| The efficiency of the Living Lab process | How well is the Living Lab being run in terms of our use of available resources? |
| The effectiveness of the Living Lab | To what extent is the Living Lab successful in facilitating learning for the development of improved innovation support services? |
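For readers who keep their monitoring notes electronically, the three ‘E’ questions above could be held in a simple structured template. The following is a minimal sketch only, not a tool used in AgriLink: the names `Criterion`, `MonitoringEntry`, `blank_template` and `unanswered` are hypothetical, and the only content taken from this session is the wording of the three questions.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Criterion(Enum):
    """The three Es used as performance measures."""
    EFFICACY = "efficacy"
    EFFICIENCY = "efficiency"
    EFFECTIVENESS = "effectiveness"


@dataclass
class MonitoringEntry:
    """One row of a (hypothetical) monitoring template."""
    criterion: Criterion
    question: str
    indicators: list[str] = field(default_factory=list)  # chosen by the monitor
    reflection: str = ""  # free-text answer written by the monitor


def blank_template() -> list[MonitoringEntry]:
    """Return an empty template holding the three whole-Living-Lab questions."""
    return [
        MonitoringEntry(
            Criterion.EFFICACY,
            "To what extent is the developed innovation support service being "
            "applied and really supporting innovation and learning on the core issue?",
        ),
        MonitoringEntry(
            Criterion.EFFICIENCY,
            "How well is the Living Lab being run in terms of our use of "
            "available resources?",
        ),
        MonitoringEntry(
            Criterion.EFFECTIVENESS,
            "To what extent is the Living Lab successful in facilitating learning "
            "for the development of improved innovation support services?",
        ),
    ]


def unanswered(entries: list[MonitoringEntry]) -> list[Criterion]:
    """List the criteria that still have no written reflection."""
    return [e.criterion for e in entries if not e.reflection.strip()]


if __name__ == "__main__":
    report = blank_template()
    report[0].reflection = "Placeholder text for the monitor's own reflection."
    for criterion in unanswered(report):
        print("Still to reflect on:", criterion.value)
```

A template like this only records questions and answers. The indicators and the reflections themselves still have to come from the monitor and the Living Lab participants, and the questions may need to change as the Living Lab develops.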

The process of developing questions and indicators, including using the ENoLL characteristics and the three Es, was meant to support monitoring and evaluation, not constrain it. Individual Living Labs may have had some different characteristics besides those identified by ENoLL.

Over time, monitoring questions needed to be adapted in the light of the aim of an individual Living Lab, its outcomes and ever-changing context. A key challenge is deciding what is a minimum set of questions to monitor in order to get a good impression of what is going on and to harvest what is there to learn, including what might also be shared with others.

Reflective Activity 13

This course has put much emphasis on working with others when setting up and running a Living Lab.

From what we have covered so far and from your own experiences, what aspects of working with others might affect setting up and running a Living Lab, which need to be monitored and evaluated?

Answer

There are many possible things that could be unhelpful, but here is an evaluation of one set of factors delaying co-creation and progress of a Living Lab that resulted from reflexive monitoring as reported in AgriLink Practice Abstract 50 by Gunn-Turid Kvam and Egil Petter Straete from Ruralis in Norway:

Establishing a Living Lab (LL) brings actors with different knowledge, views and experience into a co-creation process for solving complex issues. The goal of the Norwegian LL is to develop a new advisory service for cooperation between farmers on crop rotation and the main actors are advisors, farmers and researchers.

Three main dialogues are evident in the LL. The first is between advisors and farmers, where advisors contacted two groups of farmers with experience in crop rotation. These are two pilots of the LL, and the aim is that advisors learn from experience in working with these farmers. The second dialogue is between researchers and farmers, where researchers have contacted farmers joining the pilots and other farmers with experience in the field. During personal and focus group interviews, researchers gained knowledge about conditions for cooperation and discussed elements of a new service. The third dialogue is between advisors and researchers in project meetings to share knowledge and experience from the dialogue with the farmers, to reflect on these and discuss input to a new advisory service.

Different conditions have delayed the co-creational nature of the work. The Meta project that crop rotation is a part of has recently been reorganised, involving new ownership, a new project leader and a reduction of budget and activities. Other conditions, such as lack of knowledge and experience in working in a LL and the advisors’ lack of prioritising, have influenced progress.

For researchers, it has been challenging getting involved in the project because of constant changes of project conditions and people involved. It takes time to get to know each other, develop reciprocity, openness and trust, which is decisive for the co-creation of a successful LL.

Running Living Labs is not as easy as it might seem from what I have said so far.

In the final two sessions of this course, I will look in more detail at what did and what did not work with the six AgriLink Living Labs.

Summary of Session 6

Living Labs are increasingly used as a concept and process to co-research with stakeholders in context.

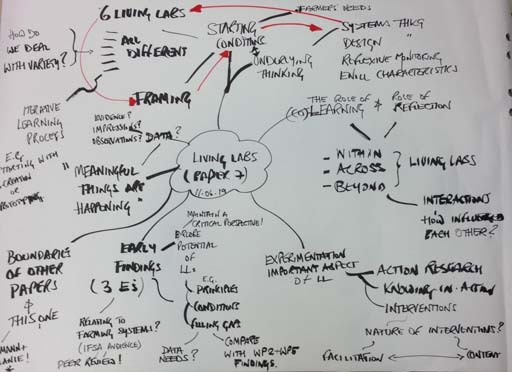

In AgriLink, the aim has been to use six Living Labs involving researchers, farmers and public and private advisors to learn about, monitor, evaluate and promote innovation in agricultural advisory and support systems. But working in context-relevant ways means that each Living Lab is unique in its form, focus, stakeholders, composition, methods, practices and outputs. This uniqueness presents problems when monitoring and evaluating Living Labs, especially in determining meaningful indicators and measures of progress which are generalisable but also capture and reflect diversity and context.

Rather than focusing on developing lists of individual indicators, we have drawn on systems ideas and concepts to understand Living Labs as learning systems. In particular we have used the established three Es for monitoring and evaluating systems: efficacy, efficiency and effectiveness.

Each criterion is expressed as a question:

| Efficacy – has the Living Lab achieved its purpose (as defined by the stakeholders)? |

| Efficiency – has the Living Lab used resources well (including time, energy and enthusiasm)? |

| Effectiveness – has the Living Lab contributed to its wider purpose within AgriLink? |

Used in our quarterly and annual monitoring and evaluation reports, the three Es allowed each of the Living Lab monitors to document context-specific answers and also monitor and evaluate learning about the aims, processes and activities of their and other Living Labs.

This use of the three Es also provided the wider AgriLink consortium with an understanding of the potential for Living Labs as learning systems for innovative practice in agricultural advisory services.

References for Session 6

Bootcamp Bootleg D.School, (2010) Modes of design thinking. D.School, University of Stanford. Available at: https://dschool.stanford.edu/s/METHODCARDS-v3-slim.pdf (Accessed: 2 April 2018).

Checkland, P. and Scholes, P. (1999) ‘Soft Systems Methodology in Action: Including a 30-Year Retrospective’, Journal of the Operational Research Society, 51(5):648.

Fook, J. (2002) Social Work: Critical Theory and Practice. London, Sage.

Ison, R. (2010) Systems Practice: How to Act in a Climate-Change World. London, Springer.

Van Mierlo, B., Arkesteijn, M. and Leeuwis, C. (2010) ‘Enhancing the reflexivity of system innovation projects with system analyses’, American Journal of Evaluation, 31(2): 143–61.
