Use 'Print preview' to check the number of pages and printer settings.

Print functionality varies between browsers.

Printable page generated Sunday, 23 November 2025, 4:01 AM

Skills and strategies for using Generative AI

Introduction

Enthusiasts about Generative AI (GenAI) tools paint a picture of a world where technology will carry out the more routine, time-consuming tasks, enabling us to use the time saved to do much more interesting, creative and satisfying things.

Who would not like an extra four hours a week? What would you do with that time?

However, the media also regularly reports on examples where GenAI has provided wrong information that has a real-world consequence, such as the New York chatbot for entrepreneurs, which advised incorrectly and illegally on a number of issues including the responsibility of landlords not to discriminate on the basis of income.

Being aware of this potential for incorrect information can make it difficult to have confidence in the outputs provided by large language models (LLMs) in the real world, particularly given the higher risk of inaccuracies when using GenAI for legal advice and information.

This course considers the skills and strategies needed to use GenAI in the real world, so that individuals and businesses have greater confidence in its use. It explores when it might be appropriate to use a GenAI tool, how to ask questions effectively to get the best possible output (also called prompting), and the necessity of evaluating the information it provides.

An introduction to the course

An introduction to the course

Listen to this audio in which Kevin Waugh provides an introduction to the course.

Transcript

Welcome to Course 2: Skills and Strategies for Using Generative AI.

In this course, we’ll focus on one key goal: learning how to guide Generative AI tools—like ChatGPT—to produce useful, reliable, and relevant outputs. ChatGPT was the first widely used conversational AI—one that you could interact with in natural language, refining its responses through back-and-forth conversation. This practice has become known as prompting: giving the AI a clear request, then improving the results through thoughtful follow-up. Over the next three hours, we’ll explore how to write effective prompts and how to critically evaluate what the AI produces.

The course is divided into three sections: In Section 1, we begin with a note of caution. Prompting may seem simple, but it requires attention and care. AI doesn't always understand your intent, and vague or overly broad prompts often lead to unreliable or irrelevant responses. Getting good results starts with well-crafted prompts—and being prepared to review and refine what the AI gives you.

In Section 2, we ask: What makes a good prompt? We’ll explore prompting frameworks that help structure your requests, and we’ll look at how to take advantage of conversational interactions with AI tools to refine and extend responses.

In Section 3, we focus on reviewing AI outputs—checking for accuracy, usefulness, and trustworthiness. We’ll finish with a chance for you to put your prompting skills into practice, using a set of suggested tasks. Let’s get started. Welcome to Course 2.

You can make notes in the box below.

Discussion

ChatGPT was the first widely used conversational AI—one that you could interact with in natural language, refining its responses through back-and-forth conversation. This practice has become known as prompting: giving the AI a clear request, then improving the results through thoughtful follow-up. Over the next three hours, we’ll explore how to write effective prompts and how to critically evaluate what the AI produces.

This course assumes you understand how GenAI and LLMs work and the concerns around using them for legal advice and information. If you are not sure about this, we recommend you complete Course 1: Understanding Generative AI first.

This is a self-paced course of around 180 minutes including a short quiz. We have divided the course into three sessions, so you do not need to complete the course in one go. You can do as much or as little as you like. If you pause you will be able to return to complete the course at a later date. Your answers to any activities will be saved for you.

Course sessions

The sessions are:

Session 1: Introduction to prompting – 45 minutes (Sections 1, 2 and 3)

Session 2: Single prompts, conversational prompts and personas – 90 minutes (Sections 4, 5 and 6)

Session 3: Evaluating and correcting the output – 45 minutes (Sections 7, 8, 9 and 10)

A digital badge and a course certificate are awarded upon completion. To receive these, you must complete all sections of the course and pass the course quiz.

Learning outcomes

Learning outcomes

After completing this course, you will be able to:

Understand when Generative AI is the right tool to use.

Explain how to prompt large language models in the most effective way.

Evaluate the outputs of Generative AI for accuracy and reliability.

Be confident in using a large language model for a legal task.

Glossary

Glossary

A glossary that covers all eight courses in this series is always available to you in the course menu on the left-hand side of this webpage.

Session 1: Introduction to prompting – 45 minutes

1 Using Generative AI – the reality

There is nothing intelligent about GenAI – it is not thinking or reasoning the way we consider humans to be capable of thinking and reasoning.

As we discussed in the first course, Understanding Generative AI, GenAI, specifically LLMs, predicts the next word in the sentence through their training on billions of pieces of data, and the tools can be tuned or trained to respond in particular ways.

With LLMs, the scale of the analysis and vast quantities of written data used in their training have led to them being able to mimic fact recall and human communication. This makes them compelling communicators, and they can produce persuasive and eloquent text. They’re really helpful with lightweight problems where the consequences of being wrong, biased or inappropriate are relatively small.

However, if you know how they work, it makes them hard to trust when you really need to be certain of accuracy and correct reasoning. When using GenAI for legal tasks, it is also important to be aware that the tools’ access to up-to-date and accurate legal information is limited, as it is mostly hidden behind paywalls.

Here are some headline stories you may have spotted over the past few years.

In 2021, leaders in the Dutch parliament, including the prime minister, resigned after an investigation found that over the preceding eight years, more than 20,000 families were defrauded due to a discriminatory algorithm.

In August 2023, tutoring company iTutorGroup agreed to pay $365,000 to settle a claim brought by the US Equal Employment Opportunity Commission (EEOC). The federal agency said the company, which provides remote tutoring services to students in China, used AI-powered recruiting software that automatically rejected female applicants aged 55 and older and male applicants aged 60 and older.

In February 2024, Air Canada was ordered to pay damages to a passenger after its virtual assistant gave him incorrect information at a particularly difficult time.

By June 2023 there had been a number of reports where lawyers were presenting hallucinated content to courts, such as made-up or wrongly applied cases. Despite this wide publicity, it was still happening at the time of writing this course in 2025.

In January 2025, the BBC reported on a study by the legal firm Linklaters, looking into how well GenAI models were able to engage with real legal questions. The test involved posing the type of questions which would require advice from a "competent mid-level lawyer" with two years' experience. The report states “Linklaters said it showed the tools were "getting to the stage where they could be useful" for real-world legal work – “but only with expert human supervision”.

This was clarified later as:

“The newer models [of AI] showed a ‘significant improvement’ on their predecessors” Linklaters said, but still performed below the level of a qualified lawyer.

Even the most advanced tools made mistakes, left out important information and invented citations – albeit less than earlier models.

The tools are "starting to perform at a level where they could assist in legal research" Linklaters said, giving the examples of providing first drafts or checking answers.

However, it said there were "dangers" in using them if lawyers "don't already have a good idea of the answer".

Having confidence in its use

Having confidence in its use

If you decide to use an LLM (for example, ChatGPT, Copilot or Claude) for your work in a legal or free advice organisation, what would you do to make sure you have confidence in its use?

Discussion

The range of potential problems means that GenAI output needs a lot of checking, and so it would be important to ensure there was a process to check the accuracy and appropriateness of its outputs. In the first course , Understanding Generative AI, you briefly heard about some potential problems in the performance and use of GenAI tools: hallucinations, bias, ethical and legal concerns.

It’s also possible to ask questions that the LLMs cannot answer because it is ill-suited to that kind of question. This was identified shortly after the release of ChatGPT when people noticed it couldn’t do basic arithmetic. We’ll come back later to consider how to encourage AIs to tell you that they don’t know what they’re talking about.

If you’re using GenAI in a real-world scenario, particularly where there might be harm if they do something wrong, you need to treat them like a very poorly trained assistant who regularly makes all kinds of mistakes. You need to be specific about what you ask them to do, and you have to be ready to review everything they complete. This course looks at two key aspects of using GenAI – prompting, to ensure we get what we want from the AI, and reviewing the output, to check that what the AI gave us is safe to use.

So, when is it a good idea to use GenAI and when should it be avoided?

Let’s look briefly at some ways GenAI can be used in the legal context (often called ‘use cases’). Use cases are explored in more depth in the fourth course: Integrating Generative AI into workflows.

2 Using Generative AI – when is artificial intelligence a good choice?

It has been widely reported that using GenAI tools can potentially be quicker and cheaper than using existing tools or processes.

For individuals, this can save time and money when completing a task, such as finding out what the law is in a particular area. For charities, organisations and businesses it can save time and money which can be better used for other tasks.

Larger law firms have developed bespoke legal GenAI tools to assist in their work, while legal databases such as Lexis and Westlaw have developed GenAI-assisted tools built into their databases to assist with legal research and drafting.

However, before using GenAI, it is important to pause and think about whether using a GenAI tool will produce an accurate result, and whether it will be better, cheaper or quicker than your existing practices. For example, if you want to find out what the current law is in a particular area, would it be quicker to use a generic free LLM and then check its outputs, or to use a general internet search and look at the website of a reliable source such as Citizens Advice or the government website?

If you are looking to use GenAI to improve efficiency in your organisation, what tasks do you want GenAI to carry out, which is the best tool to use, and will this be better or more efficient than your existing practices? You will find out more about how to choose a GenAI tool in the third course in the series, Key considerations for successful Generative AI adoption.

Here are some of the general ways in which GenAI is being used in legal environments:

Transcribe and summarise meetings

GenAI can convert spoken discussions from meetings into written transcripts and condense them into short summaries with key points and takeaways. For example, GenAI could be used to produce the notes of client meetings, or discussions with witnesses, instead of the adviser. This is an easily monitored application that can be checked quickly by a person at the meeting.

Summarisation of legal documents

Similar to summarising meetings, GenAI can summarise complex legal texts, reducing them to concise accounts while highlighting key points and essential insights. For example, it could summarise a tenancy agreement or contract which is the subject of a dispute. Human review, while essential, is again relatively straightforward as an adviser can check the original document and ensure the summary is accurate.

Automated contract review

A GenAI tool can review a contract and highlight any variations against a standard contract, highlighting clauses which can then be reviewed by a relevant adviser.

How are you planning to use GenAI?

How are you planning to use GenAI?

How are you planning to use GenAI? Will this be better than how you do that task at the moment?

Make notes in the box below.

Discussion

When using a GenAI tool to carry out these (or other) tasks, it is important to give the tool the right instructions – often called prompting. Doing this correctly will increase the accuracy and relevance of the output. You will find out how to do this in the next section.

3 Prompting skills

Prompting is the term used when we control a Generative AI by giving it instructions – the prompts to undertake a task. Prompts can be as short as a single sentence or can run to several pages of detailed instructions.

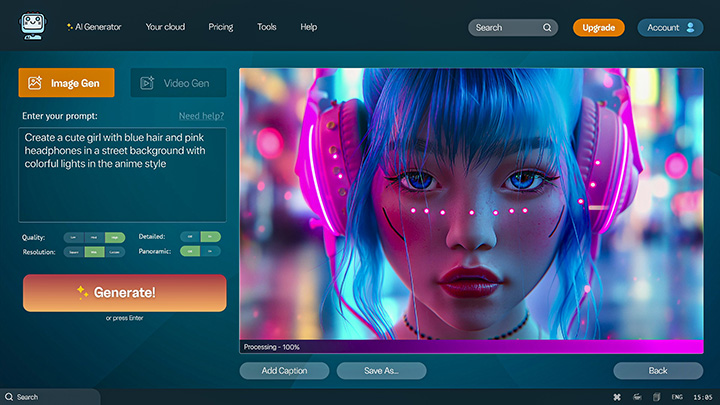

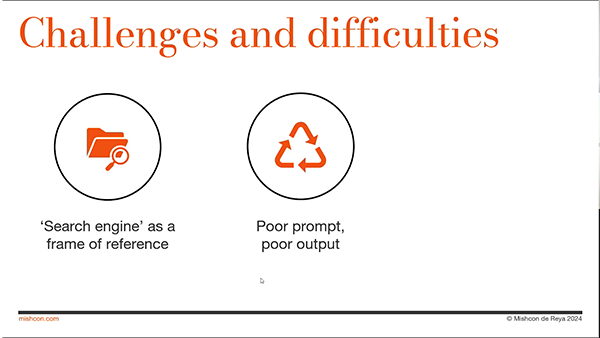

Prompting a GenAI tool is different from the types of searches you may be used to doing in an internet search engine. In this section, we look at how to prompt well using a single prompt, and whether a shorter or longer prompt is useful.

Watch this video, in which Harry Clark, from Mishcon de Reya, details how prompting ChatGPT is different to using a search engine.

Transcript

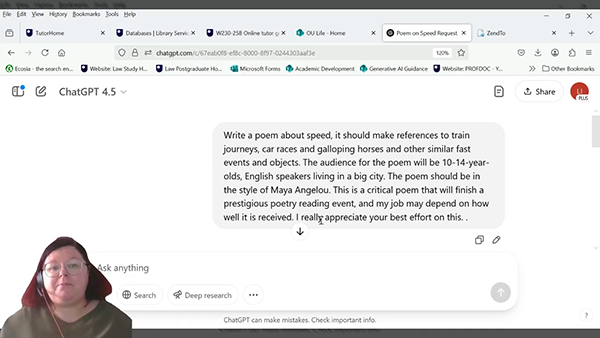

Watch the video below in which Liz Hardie explores the difference between a simple one sentence prompt (prompt 1), and one which contains more direction for the GenAI to follow (prompt 2).

Transcript

Further reading

Further reading

If you want to read the prompts in order to try it out yourself, please go to a copy of the prompts and poems.

The first prompt was simplistic and did not contain any specific guidance on content. The second prompt was more nuanced and included much more detail about the information which should be included in the poem.

Try an LLM

Try an LLM

Why don’t you try it? Open an LLM – at the time of writing ChatGPT, Gemini, Claude and Llama all offer free versions.

Write a prompt asking for a poem, but make sure that you choose the topic, length and style of the poem. Include some additional guidance about the way the poem should develop, the audience, or what not to include. (Don’t give the GenAI tool any personal or confidential information.)

Record your prompt in the box below (you will need to refer back to it later in the course).

What did you think of the poem it produced?

Discussion

We decided to ask the GenAI tool to write a similar poem to prompt 2 above, but in a different style. As we had already given the tool the detailed prompt, we asked it a third prompt: Do the same but the audience are music students learning about song writing. The poem should be in the style of a love ballad.

Response: Chasing Love at Full Speed

Like a train on the tracks, you pulled me near,

A rush in my heart, the way you appear.

Through the city lights, we glide and we race,

Every moment with you, I can't keep pace.

Your eyes are the engine, driving me wild,

In the fast lane of love, I’m your faithful child.

Like galloping horses, we soar and we glide,

In the rhythm of love, we’re lost in the ride.

In the speed of your touch, I can feel every beat,

Like a car in the night, racing down every street.

With you, time’s a blur, it’s just you and I,

Like the wind in my hair, we’re soaring so high.

We’re a song that’s unfolding, no need for a plan,

A melody flowing, just you and your man.

In this fast world, I’ll always chase you down,

Together, in love, we’ll always wear the crown.

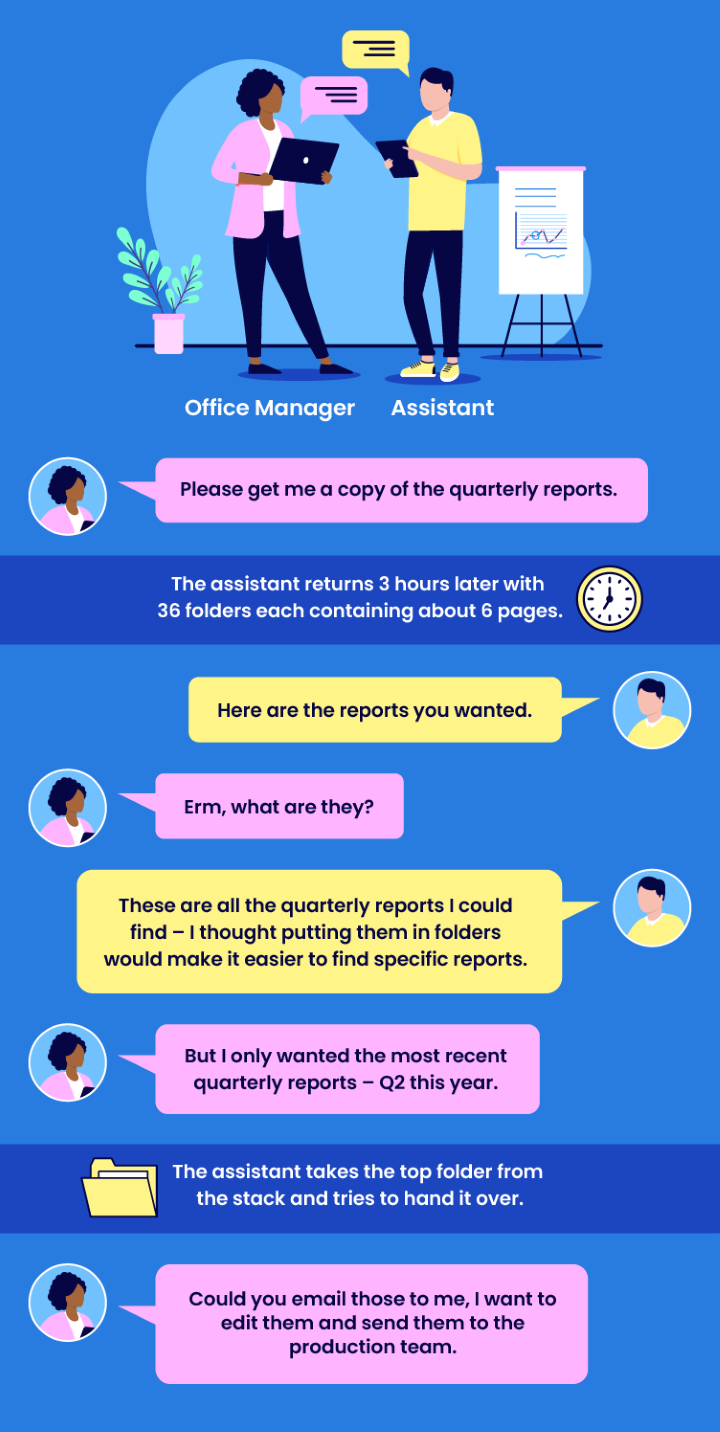

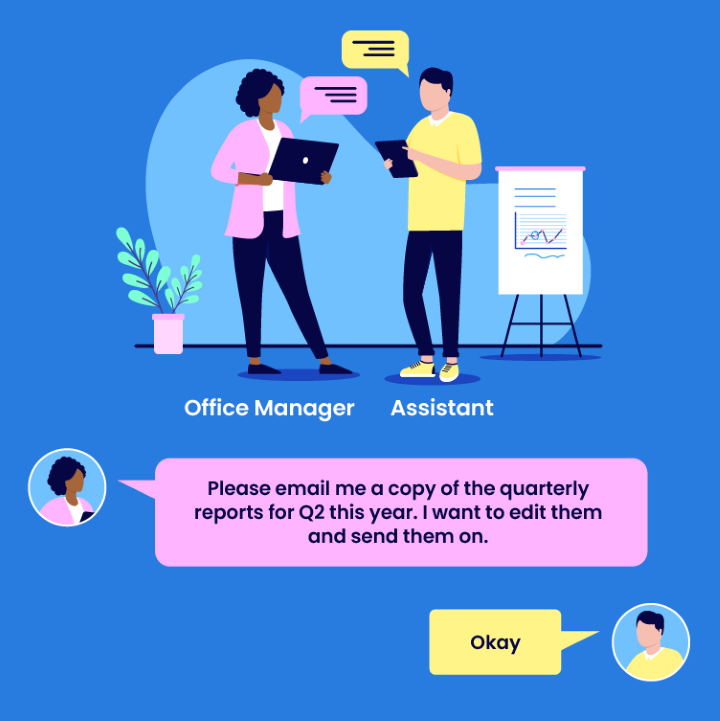

The more specific the task you want completed, the more information your prompt needs to contain. That’s very similar to human exchanges – it is a little like asking for information from an assistant.

The initial request was under-specified – not all the required information was given, and there was no indication of how the result was to be used. With a little thought, the task could have been described much more specifically.

So, better prompts give better answers, which leaves us with the question “What does a better prompt look like”?

In most cases, you want to be specific in the language you use for the request, and you want to supply as much relevant information as possible about the task you have set the AI. For example, you need to include the audience that will receive it and the approach you want the GenAI to follow (as in prompt 2) and style of the output you want the GenAI to produce (as in prompt 3, which is in the Activity above, Try an LLM, in the discussion section).

We’ll now consider ways in which we can supply that guidance and highlight some techniques that have been widely reported to help avoid GenAI’s worst mis-steps.

Session 2: Single prompts, conversational prompts and personas – 90 minutes

4 Single prompt – what to include?

Let’s consider the prompts we used to generate the second two poems in the previous section. Why did they give more control over the poem that was created?

| PROMPT 2 | PROMPT 3 |

| Write a poem about speed, it should make references to train journeys, car races and galloping horses and other similar fast events and objects. The audience for the poem will be 10–14-year-olds, English speakers living in a big city. The poem should contain four verses and have a strong rhythm. | Do the same, but the audience are music students learning about song writing. The poem should be in the style of a love ballad |

In both cases, we gave a specific topic the poem should be about (speed), we suggested elements that needed to be included in the poem (train journeys, car races, galloping horses).

We identified the specific audiences we wanted the poem to be read by and some characteristics of that audience that might make the poem more or less relevant (10–14-year-olds, English speakers living in a big city and music students learning about song writing).

Finally, we gave it some parameters to work with – we wanted four verses in both, with the first having a strong rhythm, and in the second we gave it a style we wanted it to copy. It seems to have met those criteria fairly well – although it might be hard to determine what it did with the information that they lived in a big city. Such is the nature of GenAI: just because the prompt contains something, it does not mean the AI makes explicit use of it.

Prompt frameworks

Unsurprisingly, there has been a lot of research and opinions on what elements combine to make the best prompts (collectively known as prompt engineering). This has resulted in a wide range of prompting frameworks.

They all try to give guidance on how best to frame the task by supplying information, examples, descriptions or expectations, and asking the AI to check and evaluate what the AI should do before presenting an answer. Helpfully, many of the frameworks all come with a catchy mnemonic.

Let’s consider the similarities and differences between two prompt frameworks: CRISP and CLEAR.

CRISP and CLEAR

CRISP is focused on providing a checklist of elements that make up a good single prompt. It doesn’t address the need to review and reflect on the output and how to develop a prompt through a conversation.

Click on each letter below to reveal the meaning of CRISP.

Let’s consider the second prompt for the poem. Does it contain all of the elements suggested by CRISP?

“Write a poem about speed, it should make references to train journeys, car races and galloping horses and other similar fast events and objects. The audience for the poem will be 10–14-year-olds, English speakers, living in a big city. The poem should contain four verses and have a strong rhythm.”

Hmm, we could do better by the CRISP guidance – we haven’t explicitly set the role for the AI. Implicitly it’s that of a poet (we’re asking it to write a poem after all), but could it be explicitly stated with a more focused description of the role? We also haven’t specified the intent of the prompt – I think we assumed it was simply to get an entertaining poem, but we could have said more – for example, that the 10–14-year-olds are learning about rhythm and rhyme if that was the context for the request.

CRISP doesn’t have the audience specifically as a focus. When considering ‘intent’ it is therefore important to say what the intended audience for the output is, so that the level of language and style used can be adapted. In many other frameworks, audience is raised as a key element to specify in a prompt.

Improve the prompt to make it better

Improve the prompt to make it better

Look back at the prompt you created in the previous section. Did you include all of the elements of CRISP? If you omitted any, how could you improve the prompt to make it better?

Discussion

Your answer will depend on how explicit your original prompt was. Using a framework such as CRISP will help to ensure you include all relevant information to give the GenAI tool the best possible opportunity to create a relevant answer.

Comparing the two frameworks, CRISP includes a checklist of issues to include in an individual prompt.

By contrast CLEAR focuses on the overall style and approach to prompting. It’s not so much a checklist of elements, but a reminder of the approach to take when structuring and delivering prompts, and the importance of reflecting on the outputs then adapting and refining the prompts.

Click on each letter below to reveal the meaning of CLEAR.

CLEAR and CRISP would make a good combination of frameworks to consider, with CRISP reminding you what elements prompts need, and CLEAR reminding you how to style and present prompts along with the need for review and refinement. Also, CRISP CLEAR PROMPTs is easily remembered 😊.

PREPARE

The final framework we consider here has some interesting elements reflecting more recent explorations in prompt engineering. It was originally known as the PREP framework, but it has been expanded to PREPARE to contain three more recent additions to the advice about getting the best prompts (Fitzpatrick, 2024).

Click on each letter below to reveal the meaning of PREPARE.

Let’s consider these last three in detail.

ASK

You can ask the GenAI to tell you about any further information it needs to complete the task.

Why does this work? GenAI is designed to produce an output – even if the tool doesn’t have sufficient information to construct a response, it will still produce something. This may include hallucinating in order to meet the drive to give an answer. By giving the tool an action to do when it needs more information, you may reduce the possibility that it will hallucinate.

Prompt

Prompt

Identify a medical journal paper that explains the behaviour of LLMs when rewarded. If you cannot find any papers: say “I cannot find such a paper” and explain why you think you failed the task.

Response

Response

I cannot find a medical journal paper that specifically explains the behaviour of large language models (LLMs) when rewarded. This is likely because the intersection of reinforcement learning in LLMs, and medical research is a relatively nascent field

RATE

Get the GenAI tool to rate its own answer, and to state why it gives that rating.

Recently, there was interest in the ‘Do better’ response to outputs – some prompt engineers discovered that after a prompt produced output, simply asking the tool to ‘Do better’ in the next prompt was sufficient to improve the result.

If tried repeatedly, there was some improvement for the first couple of uses, but quite quickly the output degenerated to nonsense or hallucination. On reflection, it appeared that the tool had tried variations that could be considered as ‘better’ in the first few repetitions – then ran out of sensible variations to offer.

Asking for a rating and the evidence behind the rating allows you to be specific about the ‘Do better’ prompt. So, the tool may state that the audience is poorly addressed in its output. In this case you can frame the next prompt around something like “Improve the way you tailor your answer to the audience specified”.

EMOTION

Give an emotionally framed reason for producing a good output. An example might help clarify what is meant here – watch this video which gives an example in prompt 4 of using emotion to produce a better outcome.

Transcript

Further reading

Further reading

If you want to read the prompts in order to try it out yourself, please go to a copy of the speed journey poem.

Ok, this one appears to be way out there – appeal to the GenAI’s emotional side? Use emotional blackmail? Didn’t we just say that AIs are not intelligent? Are we suggesting they’re emotional?

Well, no. Definitely not.

What we are seeing is a positive unintended consequence of training the underlying neural network on social media and other ’real-world’ content (instead of, for example, collections of newspaper articles, academic papers and company reports which tend to avoid emotional language). Much of the training material for some LLM models has included emotional language and this appears to have been captured in the neural network. It’s unintended, but it’s useful in prompting for some LLMs.

Law firm Addleshaw Goddard released a report in October 2024 outlining the results of their work to develop and test a robust method of using LLMs to review documents in the context of mergers and acquisitions transactions. They highlighted the importance of accurate, comprehensive prompting to achieve the best results, including the last tip - emotion.

“The process of using well-crafted and intentional prompts to instruct LLMs adds an advantage and improves performance.….. We found that adding urgency or importance to the prompts did slightly increase performance, and this emotive style of prompting is something that we will continue to investigate.”

Prepare a prompt for an LLM

Prepare a prompt for an LLM

Let’s now put what you have learnt into practice.

Prepare a prompt for an LLM using the PREPARE framework. You want it to produce a presentation outline for a presentation on employment law to either students, advisors or paralegals on the benefits and risks of using GenAI to answer legal questions.

Discussion

Here’s an example using the PREPARE framework – remember, for a real prompt each of the focus areas could be longer: prompts and parts of prompts don’t have to be short.

| PROMPT | Create a presentation outline for the following task: Create a presentation giving the benefits and risks of using GenAI to answer legal questions. |

| ROLE | Act as a knowledgeable lawyer with 2 years’ experience, and an interest in risk management and compliance. |

| EXPLICIT INSTRUCTIONS | The presentation is for either 2nd year law students, paralegals in a small law firm or advisors in a charity that provides legal support to members of the public . It should focus on the benefits and risks of using GenAI for legal queries. Include risks related to the submission of information to the GenAI and mitigations for those risks. Suggest examples for the students, paralegals or advisers to explore in their own time. |

| PARAMETERS | Present the outline in table form, showing headings and sub-heading. Do not use bullet points for this outline. |

| ASK | After you have finished, ask me some follow-up questions so that we can produce a better result. |

| RATE | Provide a confidence score between 1 and 10 for the quality of the outline. Then briefly explain the key reasons for the decision you made so that it can help me improve areas of weakness. |

| EMOTION | The task will help students or paralegals or advisors to understand their work, and I will be assessed on the quality of the presentation, so this is important to me and the students or paralegals or advisors. |

Why don’t you try out your prompt, or the example given above, in an LLM? As an input, you wouldn’t include the keywords, just present the text on the right-hand side of the table in a single prompt.

Further reading

Further reading

If you are interested, you can find examples of prompting in legal practice in this supplementary course content – Examples of prompting in legal practice.

There are further examples of prompt frameworks in the Further resources section later.

Having considered the way to prompt LLMs with a single prompt, we will now consider another alternative way of instructing an LLM to produce something through conversational prompting.

5 Conversational prompting, or prompt sequences

Most of the commercial GenAI systems allow for conversations – they recall previous prompts and responses in the conversation. This means you can add more information to an initial prompt or adapt some aspect of the prompt using further prompts in the conversation.

We saw an example of that in the third attempt at the poem in section 5 above, where we referred in prompt 3 to aspects of the early prompt 2. Outside of the conversation prompt 3 doesn’t make sense.

Prompt

Prompt

Do the same but the audience are music students learning about song writing. The poem should be in the style of a love ballad.

This allows for the A (Adaptive) in CLEAR – try different prompts to see what effect they have on the output delivered and adjust prompts if you don’t get what you want first time. But be aware, the systems do have a limit to how much they remember. When they reach that limit, they ‘forget’ the older content.

If you supply a long initial prompt containing a lot of information, and the resulting conversation goes on for several exchanges involving long prompts, you will reach a point where the GenAI begins to forget the initial prompt and so the responses can start to wander. At that point you will need to restart the conversation.

The ability to hold a conversation to develop and improve the prompt has led prompt engineers to explore other ways to get the LLMs to respond in helpful ways. We’ve picked out three examples which appear to make GenAIs respond more effectively to prompts.

Chain of thought

It appears that asking GenAI how it went about answering the question helps it focus on the specifics in the question.

If you do this, some LLMs will also produce an account that suggests it’s explaining its thought processes. Please do remember that there is no real thinking involved! This output can be useful for the prompter to check that the key information in the prompt has been correctly considered.

Prompt

Prompt

If I asked you how to boil water, how would you decide on the answer to give me?

Response

Response

(Thinking)

Reasoned for 12 seconds

For a question like "how to boil water," I'd base my answer on training data and knowledge, considering things like accuracy, simplicity, and completeness. I'd aim to give the most straightforward method — just bringing water to a boil — while keeping in mind practical tips like using a lid or managing heat. If needed, I could use the web to refine the answer. But I wouldn't reveal my internal chain of thought!

For a question like "How would you decide on the answer?", my process is to rely on my training data, instructions, and best practices. I consider the context of the question and apply these insights to form a response. If needed, I might consult additional reliable sources to ensure accuracy. However, as far as my internal workings go, I won’t reveal the details of my reasoning or chain-of-thought!

(Answer)

I determine my answer by first understanding what you’re asking and then considering the relevant knowledge I have. I rely on my training data, built-in reasoning processes, and any guidelines provided to ensure the answer is clear, accurate, and helpful. While I can explain that I balance context, clarity, and accuracy in generating responses, the detailed internal process remains hidden.

Chain-of-thought prompting can be useful to open conversational prompting, where the prompter can reinforce or deflect some lines of consideration that the AI indicates it has followed.

Few-shot prompting

Sometimes you want to be able to prompt the GenAI with examples of the output you want it to produce in response to your question. This can be a useful way of asking the LLM to summarise and reformat content found in a block of text – by giving it the format of the summary you want it to produce. This is known as few-shot prompting, because you’re giving a few examples of how to respond.

For example, suppose we had a file with transcripts of telephone calls to an answerphone. We want the tool to extract the name and number of the caller, and the reason for the call. A suitable prompt might be:

Prompt

Prompt

“Hi my name’s Fred Flintstone, I’m calling from Bedrock 10235. We’ve an escaped sabretooth that needs catching. Hurry.” Name: Fred Flintstone, Number: Bedrock 10235, Reason: escaped sabretooth.

“Animal welfare? I’m looking for TC, yes he’s an alley cat you may have collected. I’m Benny calling from 63352. I want TC back.” Name: Benny, Number: 63352, Reason: I want TC back.

“Call me back on 8824 224251.” Name: BLANK, Number 8824 224251, Reason: BLANK.

Response

Response

Here’s a neatly organized summary of those messages:

| Name | Number | Reason |

| Fred Flintstone | Bedrock 10235 | Escaped sabretooth |

| Benny | 63352 | Wants TC (alley cat) back |

| Unknown | 8824 224251 | No reason given |

Let me know if you’d like to log these into a specific format or forward them somewhere!

If you now input the remaining transcripts of the answerphone messages in the same conversation, the LLM will produce a summary in the same way.

Prompt

Prompt

"Hi Eric from Croydon calling. I want to report a wild animal. 8876 242512."

Response

Response

Got it! Here's the updated summary including Eric’s message:

| Name | Number | Reason |

| Fred Flintstone | Bedrock 10235 | Escaped sabretooth |

| Benny | 63352 | Wants TC (alley cat) back |

| Unknown | 8824 224251 | No reason given |

| Eric | 8876 242512 | Report a wild animal |

Want to sort or filter these in any way?

Create a summary of court hearings

Create a summary of court hearings

You have notes from the advisers in your organisation which contain the details of court hearings for their cases in the next week.

You need to create a summary which includes the date and time of the hearing, name of the court, name of the client, and the adviser attending the hearing. Try to frame a prompt sequence that will produce the summary.

Safina Browne’s notes

Alan Johnson’s court hearing for unfair dismissal is listed at Croydon Employment Tribunal on 7th March 2026 at 10am. Need to remember to bring all four files of evidence.

Betty Smith’s hearing for financial proceedings after divorce is listed at South London County Court on 10 March 2026 at 2pm. She will only settle if she receives the house.

Amera Shah’s hearing for breach of contract is listed at the Royal Courts of Justice on 8th March 2026 at 10am. Remember that some witnesses are appearing via video link.

Discussion

You could choose how you wanted the output formatting. We went with an approach similar to the telephone message example you saw earlier.

We created two examples:

“Safina Browne’s notes. Alan Johnson’s court hearing for unfair dismissal is listed at Croydon Employment Tribunal on 7th March 2026 at 10am. Need to remember to bring all four files of evidence.” Date and Time of Hearing: 7th March 2026 at 10am, Name of Court: Croydon Employment Tribunal, Name of Client: Alan Johnson, Adviser: Safina Browne.

“Safina Browne’s notes. Betty Smith’s hearing for financial proceedings after divorce is listed at South London County Court on 10 March 2026 at 2pm. She will only settle if she receives the house.” Date and Time of Hearing: 10th March 2026 at 2pm, Name of Court: South London County Court, Name of Client: Betty Smith, Adviser: Safina Browne.

We then tested the LLM with the third query to see how it performed.

Safina Browne’s notes. Amera Shah’s hearing for breach of contract is listed at the Royal Courts of Justice on 8th March 2026 at 10am. Remember that some witnesses are appearing via video link.

Meta prompting

It turns out that asking the GenAI to produce a prompt for you can work well – especially if you remember to get the AI to ask you for additional information that would help produce a better output.

Here is an example:

Meta prompt

Meta prompt

I’m writing a short article on GenAI and the legal profession. What would make a good prompt to generate the article? If you need more information, ask me, and keep asking until you have the information you think you need.

Response

Response

That sounds like an interesting topic! To help you craft a good prompt for generating your article, I need a bit more information:

- Focus areas: Are there specific aspects of GenAI in the legal profession you want to highlight? For example, its impact on efficiency, ethical considerations, case studies, or future trends?

- Target audience: Who is your intended audience? Are you writing for legal professionals, students, or a general audience?

- Tone and style: What tone and style are you aiming for? Do you want it to be formal, conversational, or somewhere in between?

- Length: How long do you want the article to be? A brief overview or a more in-depth analysis?

Once I have this information, I can help you create a tailored prompt for your article.

Prompt

Prompt

Length about 600 words, tone is informal, maybe a Sunday newspaper supplement on business - it needs to be authoritative. Case studies should drive the article but its focus is on office efficiency. Target audience will mainly be business professionals and students in the business area.

Response

Response

Great, thanks for the details! Here's a prompt that should help you generate your article:

Write a 600-word article for a Sunday newspaper supplement on business, focusing on how Generative AI is transforming office efficiency in the legal profession. The tone should be informal yet authoritative, suitable for business professionals and students. Use case studies to drive the narrative, highlighting real-world examples of how GenAI has improved productivity and streamlined operations in legal offices. Discuss the benefits, challenges, and future potential of GenAI in this context.

Feel free to tweak this prompt as needed. If you need more specific case studies or additional information, let me know!

Notice that these are all things we’ve discussed when using the frameworks earlier, the GenAI is simply pointing out things that we hadn’t included in our meta-prompt instruction.

Using an LLM for legal advice

Using an LLM for legal advice

Which of these three techniques do you think would be most useful when you use an LLM for legal advice and information?

You can make notes in the box below.

Having considered how to use conversational prompting, the next section will consider our final way of prompting an LLM: using a persona.

6 Persona

If you search online for tips on prompting a GenAI tool, you will frequently find reference to setting a persona for the tool. An AI Persona is a tailored set of characteristics, mannerisms, key skills and behaviours that you want the AI to adopt during your interaction.

You have already come across something like this: in the CRISP and PREPARE framework, the first R represents ROLE. The persona extends role by including characteristics or expertise you want the GenAI to exhibit.

In the PREPARE discussion example, for Role, we used:

Act as a knowledgeable lawyer with 2 years’ experience, and an interest in risk management and compliance.

to describe the role the AI should assume when responding to the task.

This is a simple version of setting a Persona. We’ve told the AI to have specific areas of knowledge and skill level. We should, of course, have specified the country or jurisdiction that the lawyer is working in. Laws are different in different countries. The internet has more examples of American legal training data, and this will result in a bias toward American Law unless you specify your working country.

More complex personas can include adding more personality or describing character traits such as ‘attention to detail when it comes to the accurate presentation of facts’ that can direct the AI to respond in particular ways.

In the workplace, teams can develop and refine the task personas over time, tweaking them to improve their performance. It’s not uncommon for larger organisations to maintain a library of personas, alongside their prompt libraries, to consistently support common tasks within the workflows.

When using an LLM for legal advice and information, it might be useful to use the persona of ‘a solicitor specialising in English law, explaining the law to a non-lawyer’, or ‘a solicitor specialising in English law who is representing the other party in the proceedings’, or ‘an English lawyer who specialises in contract analysis’.

Here are two examples of just such persona suitable for specific legal tasks:

You are Victoria Hughes, a senior solicitor with 15 years’ experience in commercial law, advising clients in England and Wales. You always reference key statutory provisions and recent case law in your advice. Your explanations are accurate, practical, and suitable for a professional legal audience. | You are Daniel Morgan, a barrister specialising in civil litigation and employment law in England and Wales. Your analysis is precise and suitable for use by solicitors. |

Pick a case

Pick a case

Pick a case and ask GenAI to hold a conversation to supply informative responses to questions about that case. This could be a case you have read about in the news or on social media, or something you learnt in your studies. Interact with the GenAI to see how it responds.

Now start a new chat and supply the same prompt as above, but precede it with the persona of a party in the court proceedings, or the judge. How does this change the responses and the conversation you have about the event? Does it make the account more compelling?

Discussion

We chose the case of Donoghue v Stevenson, the ‘snail in the ginger beer’ bottle case which established the law of negligence. Our initial prompt was: In no more than 100 words explain the case of Donoghue v Stevenson. This produced the following response:

Donoghue v Stevenson (1932) is a landmark case in English law that established the modern concept of negligence. Mrs. Donoghue consumed ginger beer bought by a friend, which contained a decomposed snail. She suffered illness and sued the manufacturer, Mr. Stevenson. The House of Lords held that a manufacturer owes a duty of care to the ultimate consumer. Lord Atkin’s "neighbour principle" stated one must take reasonable care to avoid acts or omissions likely to injure their "neighbour" – those closely and directly affected by their actions. This case laid the foundation for the modern law of negligence.

We then used the persona of Mrs Donoghue, the applicant in the case. When we asked the LLM to act as Mrs Donoghue, it produced the following response, which was much more immediate and compelling:

Certainly! Here’s Mrs. Donoghue speaking in the first person, telling her story:

My name is May Donoghue. It was the 26th of August, 1928, when a friend and I went to the Wellmeadow Café in Paisley. My friend bought me a bottle of ginger beer. It came in an opaque bottle, so I couldn’t see inside. I drank some, and then, to my horror, a decomposed snail slipped out into my glass. Soon after, I fell ill – violent stomach pains and severe shock.

I wasn’t the one who bought the drink, so I couldn’t sue the café. But I believed the manufacturer, Mr. Stevenson, owed me a duty of care. I took the case to court, and in 1932, the House of Lords agreed. They said he had a responsibility to people like me – the ultimate consumer. That case changed the law forever, giving ordinary people protection from carelessness. They call it the "neighbour principle" now, but for me, it was about standing up for what was right.

You have considered different ways to use prompts to encourage an LLM to provide the most relevant information possible. The next section will consider the importance of critically evaluating the outputs to ensure their accuracy.

Session 3: Evaluating and correcting the output – 45 minutes

7 Evaluating the results

We began this course with a key message about the reality of using current GenAI tools – they make mistakes, and you should not trust the results they produce. We then looked at how best we can prompt GenAI to undertake tasks with quite complex requirements.

We now turn to the next essential step – reviewing the outputs for accuracy and usefulness.

It may be tempting to ask GenAI questions about topics you have no background in – but that leaves you unable to evaluate the output. Would you ask a random stranger for advice about whether you have committed a serious criminal offence which could lead to imprisonment, and then follow their advice without being able to check if their advice was correct, accurate and meaningful for you?

Reviewing the outputs is already part of some prompt frameworks. In the CLEAR framework, for example, the R is for Reflection. However, even if omitted from the actual framework, some form of checking is essential.

When reviewing the output what are we looking for and what does that require from us?

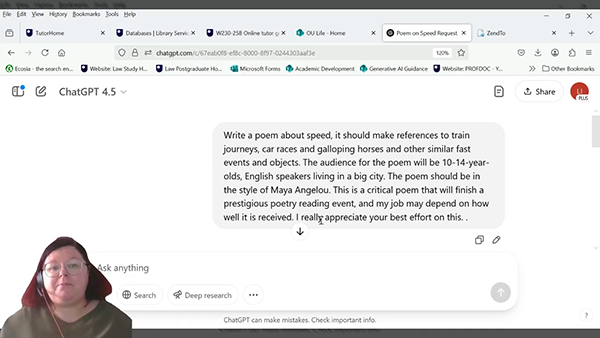

At a trivial level, we do this constantly in conversations and when reading. We sense-check what we’ve understood. It’s only when we spot something unusual that we address it. This is illustrated in the image below.

When things become more complex or ambiguous, or if we don’t understand the subject then this becomes much more difficult.

See if you can spot the incorrect element in the following reply

Character A: Can you tell me how to tie a bowline knot – you know the one that makes a loop at the end of a line?

Character B: Yes, I can do that. First, make a small loop near the end of the rope. Take the working end (the shorter end) of the rope and pass it up through the loop from underneath. Wrap the working end behind the standing part (the longer end of the rope). Bring the longer end back down through the loop (the same way it came in). Hold the standing part and pull the working end to tighten the knot securely.

To get the correct answer, the last-but-one line should read ‘Bring the working end back down through the loop’. However unless you know how to tie a bowline knot, you’re unlikely to spot the mistake simply by reviewing the output.

With LLM outputs, we need to check it to ensure that the content is accurate and not misleading. We then need to check that the presentation of the output meets the various requirements we set out in the prompt. Let’s break that down.

Correctness

Are any facts and data contained in the output accurate? Do documents or legal cases referred to actually exist? Do descriptions/dates tie up to the objects or events they relate to? If people are referred to, are they the actual real person and does the information given relate to that one person?

This is where knowledge of the topic is essential – you cannot judge the correctness of the answer without some ability to check that answer.

When considering legal advice and information, if you do not know the law relating to that specific area, you will need to carry out research to check the text given is accurate. For example, you may need to look at an up-to-date legal textbook or check a reliable website.

Bias

In the first course, Understanding Generative AI, we identified bias in outputs that come about because the training data is itself biased (rubbish in, rubbish out). Outputs of GenAI showing bias for age, gender, and race in certain representations of both professionals and public groups can be relatively easy to spot.

On the other hand, if the AI is presenting an opinion or an explanation around cause and effect, it may not be obvious what trust can be placed in the dataset on which it bases that opinion or explanation. The bias may not be explicitly represented in the output.

This can be very hard to detect unless the reviewer is aware of the potential for bias in materials relevant to the prompt.

Suitability

Has the right information been selected and presented in a way that is appropriate? While the content might be correct, it may not address the requirements of the actual task. Getting the reply that 2+2=4 to the question ‘how many gold medals were awarded at the 2020 summer Olympics’ is a correct fact, it’s just not suitable for the question asked.

So, we need to check that the information in the answer is relevant and necessary, which comes back to knowing the topic of interest. It’s important, for example, that if you’re asking about the law that you specify the jurisdiction (country) relevant to your question.

Quality

If the output we asked for is to be used by others, for example, in presenting a case or argument, or in a stand-alone report or summary, we want to ensure it meets our basic quality requirements.

It’s similar to the proof reading and general reviewing you might do if you asked an assistant to produce that report or summary. Is it clear, readable and free from ambiguities and potential mis-interpretations? Is this something that is of sufficient quality for the intended audience?

Reproducibility

Reproducibility in science is important – asking the same question, with the same data should produce the same result. With LLMs and the GenAI ability to produce novel outputs, it is expected that the output the GenAI produces would be different.

However, the basic facts should remain the same (if the prompt is clear enough). Using a loose prompt repeatedly in different chats with a GenAI tool however is likely to produce very different results.

Prompt for a bedtime story

Prompt for a bedtime story

Repeatedly use the same simple single prompt to ask a GenAI three or four times to write a bedtime story suitable for a 6-year-old. You should start a new chat with the GenAI tool each time.

Now do the same with a simple prompt to ask the GenAI three or four times how many gold medals were issued in the 2020 summer Olympics. You should start a new chat with the GenAI tool each time.

Do either of these prompts produce the same information?

Discussion

We used these prompts with Copilot.

- Write a bedtime story suitable for a 6 year old.

- How many gold medals were presented at the 2020 summer Olympic Games?

The first prompt about the bedtime story was very generic, and this loose prompt allowed the GenAI neural network to consider lots of distinct patterns for words that can be presented as a bedtime story for a 6-year-old. The ‘don’t always choose the best’ approach built into GenAI means that the story changes. If you repeat the prompt often enough you might start to see repetition in settings and storylines, but the surface language will vary from response to response.

Our three attempts produced a story about each of: Magic Forests, Brave Bunnies, and Enchanted Gardens

In contrast, the second prompt, which specified a specific event and fact required, produced similar (but not identical) response. Copilot repeatedly reported that 339 medals were presented (for some reason always adding that the USA had 39 of these – which we didn’t ask). The sentences containing that fact varied, and after 5 or 6 repeats, there were no more novel sentences produced. (The GenAI ran out of ways of telling me the same fact.)

Where the prompt has asked for a summary or shortening of text, or an opinion or explanation (for example) – you would like the output to be similar each time, even if the surface presentation (bullet points, short sentences, long paragraphs) is different. A summary, for example, should have the same key points as the content in the original, just reduced in length. An explanation of something shouldn’t vary widely depending on the AIs internal statistics: similar to asking two experts for an explanation, the responses should be similar.

If you present a well-structured prompt to a GenAI more than once and the responses are very different, this may either be an indication that the prompt needs to be improved (the GenAI has chosen to read the prompt in different ways) or that the GenAI is struggling to respond to the prompt (possibly it hasn’t been trained on relevant information). In either case some remedial action needs to be taken. The next section explains how to deal with this situation.

8 Correcting and adapting

Assuming that during the review of an LLM’s output we found something we did not find acceptable, what can we do about that?

An obvious possibility when we find an error in the output, is to tell the LLM they were incorrect. As we saw in Understanding Generative AI, simply asking the tool if the information they presented was accurate is not enough: they will often reply that the information was correct (even where it is not).

However, being explicit in telling the LLM that there is an error usually elicits an apology, followed by an alternative output. Nevertheless, the LLM may not have identified the incorrect content. Unless you make it explicit what the tool got wrong, it may not be able to identify the error and therefore the correction may be random.

Anecdotal evidence among the authors of the course is that simply stating the LLM was wrong more than twice in a conversation can push it into an unstable state, and it starts to hallucinate. A similar thing can happen with some GenAI tools if you tell them that something is incorrect when it is, in fact, correct.

If you want to correct an AI, you need to be specific about what they got wrong.

For example,

Prompt

Prompt

You were asked to produce a short summary. This is a bit long. Can you reduce it? Other parts of the request were satisfactorily delivered.

In most cases, when you find something unacceptable, you will want to refine the prompt. You can either do this conversationally, or by starting a new conversation and reinforcing the aspect of the prompt that appeared to have been applied poorly.

You will now have an opportunity to put everything you have learnt into practice in the next section.

9 From learner to expert: put your prompting to the test

This is a chance for you to put into practice what has been covered in this course. Depending on how much time you want to spend, you can try one or more of the tasks below. There are no right or wrong approaches, it’s a chance for you to explore prompting.

Pick one or more of the following primary tasks. Take a few minutes to think about how you want that task to be completed. If necessary, look at some of the prompt libraries for suggestions (links to prompt libraries can be found in the Further resources section). Then, using a suitable GenAI (see note below), attempt to complete the task using the frameworks we covered or suggestions from the libraries. Review and refine the output until you get what you want.

Now, see if those same prompt(s) work with the linked secondary task to give a suitable output. How much specialisation and refinement do you need to complete the secondary task to your satisfaction? You may find that the first prompt you produced works well with the secondary task, or you may have to rewrite the prompt entirely before you get a prompt that works for the secondary task. Producing general purpose prompts, even for very similar tasks, can be challenging.

(Note: Do not supply any private or personal information to a GenAI unless its terms and conditions state that the information remains private.)

Task 1

Task 1

Primary: Produce a summary of ‘top tips’ for people who are required to attend court as a witness from the advice given by Citizens Advice.

Secondary: Produce a summary of ‘top tips’ for people who are required to attend court as a witness from the advice given on this government website: Going to court to give evidence as a victim or witness.

Task 2

Task 2

Primary: Produce an agenda for the first meeting between a client, who has received notice that they are to be evicted from their home, and their legal adviser, and a list of questions the adviser will need to ask.

Secondary: Produce an agenda for the first meeting between a client, who wants to make a will, and their legal adviser, and a list of questions the adviser will need to ask.

Task 3

Task 3

Primary: Produce a short article for a local newspaper about the impact of delays in court cases in the place where you live.

Secondary: Produce a short article for a local newspaper about the impact of the increasing use of online court hearings.

Reflect on your tasks

Reflect on your tasks

How easy or difficult did you find doing this task?

Did you use single prompts or conversational prompts?

Which frameworks (if any) did you use?

How did you evaluate the results and correct or amend your prompts?

Are there any of the sections of this course which you need to review?

Make notes in the box below.

10 Conclusion

We hope you feel more confident in using a GenAI tool, having completed this course.

Using the right prompt can ensure the tool provides relevant and helpful information, but it’s also important on every occasion to review the output and amend it where necessary. To become more confident in using an LLM, do continue to practice the skills and strategies you have learnt in this course.

There are also further resources you can explore in your own time in the next section.

If you are considering using GenAI in your organisation, we suggest you look at Course 3 Key considerations for successful Generative AI adoption.

For individuals interested in finding out more about using GenAI, we would recommend the fifth course in the series Ethical and responsible use of Generative AI.

Moving on

When you are ready, you can move on to the Course 2 quiz.

Further resources

The following resources contain further information about the skills and strategies for using GenAI.

Prompt libraries

AI Prompt Library for Law: Research, Drafting, Teaching, and More

AI For Education - GenAI Chatbot Prompt Library for Educators Open AI - Prompt engineering

Open AI - Prompt engineering

AI Law librarians - AI Prompt Library for Law: Research, Drafting, Teaching, and More

BCU Librarians - Artificial Intelligence (AI) and the literature review process: Prompt engineering

Charity Excellence Framework - ChatGPT For Nonprofits and Charities - Charity Chat GPT Prompts Library

Google - Introduction to prompting

Medium - ChatGPT for Lawyers and Legal Professionals — 102 (Prompts)

PACE University - Student Guide to Generative AI

Queens University Library - Creating Prompts for Legal GenAI

Prompt frameworks

ASCD - The CAST model

David Birss - The CREATE model

Medium - Best Prompt Techniques for Best LLM Responses: Bsharat and Co-Star

Sterling’s Substack - Proper Prompting Frameworks: The Key to Unlocking Your LLM’s Potential: R-T-F, T-A-G, R-I-S-E, R-G-C, and C-A -R-E

The Prompt Warrior – 5 Prompt Frameworks to Level Up Your Prompts: The RTF framework, Chain of thought, RISEN, RODES, and Chain of density

Persona

Stanford law School - Opportunities and Challenges in Legal AI

Website links

Here is a useful list of the key website links used in the learning content of this course.

BBC – Dutch Rutte government resigns over child welfare fraud scandal.

BBC – Hopeless' to potentially handy: law firm puts AI to the test.

Citizens Advice – Homepage.

Gov.uk – Crime, Justice and the Law.

Greenburg Traurig – EEOC Secures First Workplace Artificial Intelligence Settlement.

Reuters – Lawyers in Walmart lawsuit admit AI 'hallucinated' case citations.

Society for Computers and Law – False citations: AI and ‘hallucination’.

The City – NYC AI Chatbot Touted by Adams Tells Businesses to Break the Law.

The Register – Air Canada must pay damages after chatbot lies to grieving passenger about discount.

Thomson Reuters – Generative AI estimated to save professionals 200 hours per year .

US Equal Employment Opportunity Commission – Homepage.

References

Addleshaw Goddard (Raini, R., Kennedy, M., White, E. and Westland, K.) (2024) The Rag Report: Large Language Models in Legal Due Diligence. Available at: https://www.addleshawgoddard.com/globalassets/insights/technology/llm/rag-report.pdf (Accessed 19 February 2025).

BBC (Singleton, T.) (2025) 'Hopeless' to potentially handy: law firm puts AI to the test. Available at: https://www.bbc.co.uk/news/articles/c743j83d8kzo (Accessed 19 February 2025).

Gartner (2025) Gartner Identifies the Top 6 Use Cases for Generative AI in Legal Departments. Available at: https://www.gartner.com/en/newsroom/press-releases/2025-02-19-gartner-identifies-the-top-6-use-cases-for-generative-ai-in-legal-departments (Accessed 19 February 2025).

Linklaters (Church, P. and Cumbley, R.) (2025) UK – The LinksAI English law benchmark (Version 2). Available at: https://www.linklaters.com/en/insights/blogs/digilinks/2025/february/uk-the-linksai-english-law-benchmark-version-2?_bhlid=828b280a3ee6bac9184bcd688db4de031361d8fd (Accessed 19 February 2025).

The Sunday AI Educator (2024) A new prompting framework to help you get the most out of AI. (Available at: https://preview.mailerlite.io/preview/282063/emails/104000432553068105 (Accessed 19 February 2025).

Acknowledgements

Grateful acknowledgement is made to the following sources:

Every effort has been made to contact copyright holders. If any have been inadvertently overlooked the publishers will be pleased to make the necessary arrangements at the first opportunity.

Important: *** against any of the acknowledgements below means that the wording has been dictated by the rights holder/publisher, and cannot be changed.

Course banner 552065: Deemerwha / Shutterstock

552121: created by OU artworker using OpenAI.(2025). DALLE 3

558819: Created by OU artworker using OpenAI. (2025). DALL-E - AI

552066: Bolbik / Shutterstock

558650: BBC News

552124: TSViPhoto / Shutterstock

553439: Frame Stock Footage / Shutterstock

552128: Prae_Studio / Shutterstock

553506: Vector Stock Pro / Shutterstock

553507: Victoruler / Shutterstock

553508: Stranger Man / Shutterstock

555429: Tenstudio / Shutterstock

552136: Kues / Shutterstock

552140: Gonzalo Aragon / Shutterstock

552144: NAJA x / Shutterstock

553524: Cevdet_Bugra / Shutterstock

553541: sbayram / Getty

553538: Alicia97 / Shutterstock

552146: Lamai Prasitsuwan / Shutterstock

552148: Radachynskyi Serhii / Shutterstock