
Printable page generated Sunday, 23 November 2025, 2:17 AM

Key considerations for successful Generative AI adoption

1 Introduction

Generative AI (GenAI) has the potential to transform organisations and the way we work. It is built into many everyday tools, such as email, word-processing software and web browsers, so it is already affecting how people carry out their tasks. As the technology continues to evolve, organisations must not only keep pace with innovation but also actively prepare for an AI-driven future.

This preparation requires a strategic approach to adoption, integration and long-term readiness – one that allows organisations to harness the opportunities of GenAI while managing its risks effectively.

This course offers a practical guide to understanding those opportunities and risks, and to planning for successful GenAI adoption. This is a high-level overview which, while not intended to cover every aspect in depth, aims to highlight key questions for organisations to consider and discuss as they explore the adoption of GenAI. Please note that it does not constitute legal advice. Throughout, you will find links to related courses within this programme, as well as optional deep-dive sections that provide more in-depth exploration of key topics.

The first step is to demystify GenAI. For an organisation to make informed decisions, everyone – from leadership to frontline staff – needs a basic understanding of what GenAI is, what it can do, and where its limitations lie. This shared understanding is essential for meaningful conversations about its value and its risks.

An introduction to the course


Listen to this audio clip in which Francine Ryan provides an introduction to the course.


Discussion

Organisations must actively prepare for an AI-driven future, taking a strategic approach to adoption, integration and readiness that allows them to harness the opportunities of GenAI while managing its risks effectively.

This is a self-paced course of around 120 minutes including a short quiz.

A digital badge and a course certificate are awarded upon completion. To receive these, you must complete all sections of the course and pass the course quiz.

Learning outcomes


After completing this course, you will be able to:

Explain the key elements of an effective GenAI strategy.

Understand the risks associated with the adoption of GenAI.

Assess organisational readiness for adopting GenAI.

Glossary


A glossary that covers all eight courses in this series is always available to you in the course menu on the left-hand side of this webpage.

Watch this video of Richard Nicholas, from Browne Jacobson LLP, in which he discusses the importance of involving everyone in the organisation in the conversation about GenAI.


2 Strategy

Before considering how GenAI might be used in your organisation, it is essential to first develop a solid understanding of the technology – how it works, and the potential impact it may have on your sector.

Begin by reflecting on your organisation’s goals, the challenges you are aiming to address, and where GenAI might add genuine value. Any new technology should align with your overall organisational strategy to ensure you can maximise its benefits and avoid problems. While the fourth course, Use cases for Generative AI, explores specific use cases in greater detail, this course offers an overview of key considerations to guide your thinking.

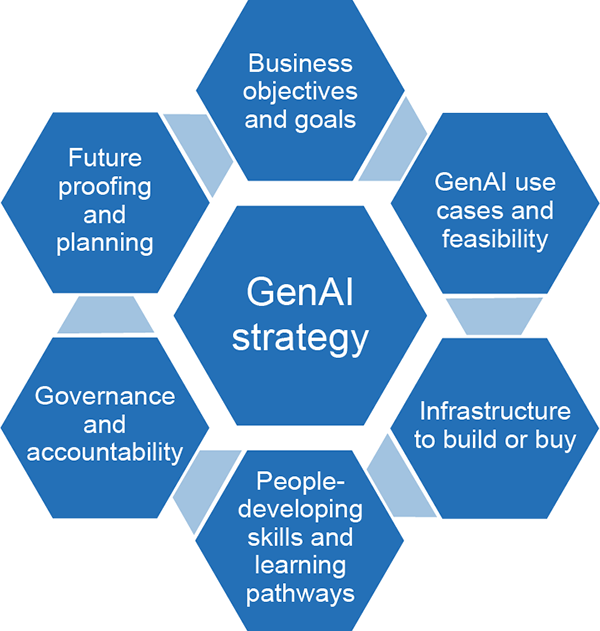

Understanding both the strengths and limitations of GenAI is critical. A structured and thoughtful approach to implementation helps ensure that the technology is embedded in a way that is sustainable, ethical and effective. Your GenAI strategy should be integrated with, and support, your existing business strategy. Below we outline the key elements to consider when developing a GenAI strategy for your organisation.

3 Objectives and use cases

To decide what use cases there may be for GenAI, it is important to determine your objectives and think about what success looks like for your organisation.

If, for example, your objective is to enhance productivity, what metrics will you use to decide whether GenAI makes your organisation more productive? Be mindful of unintended consequences: productivity may rise while staff morale drops, so it is important to take a holistic perspective on technology adoption.

Once you understand your objectives, map GenAI capabilities to them and identify clear use cases. For example, the London Stock Exchange Group wanted to improve productivity and provide more consistent answers to customer queries, so it is using AI to help its customer service team answer questions more quickly and consistently (World Economic Forum, 2025).

In the eighth course, Preparing for tomorrow: horizon scanning and AI literacy, we discuss the Gartner Hype Cycle. Although there is a lot of discussion around GenAI, the World Economic Forum (2025) reports that AI adoption is still in its early stages, with many organisations only starting to explore AI or running single use cases. We are still in the experimentation phase, and while the technology is developing rapidly and offers significant potential, organisations should avoid feeling pressured to adopt GenAI tools too quickly. Rushing into implementation without proper research, planning and consideration of the risks can lead to unintended consequences, whether legal, ethical or operational.

Instead, organisations should take a measured approach: explore and experiment, but prioritise understanding how GenAI fits within their specific context. Careful planning and informed decision-making are essential to ensure that the technology delivers value and aligns with organisational goals. Be aware of potential negative impacts that might signal that AI is not an appropriate solution, such as the loss of expertise, reduced human interaction or over-dependence on technology.

If GenAI is an appropriate solution to the problem you have identified, consider whether to buy an off-the-shelf solution, build a GenAI application in-house, or outsource development or partner with a technology company to develop a bespoke solution. It is important to speak to vendors and to carry out research and due diligence.

As part of the process, you will need stakeholder engagement, bringing together all parts of the organisation (operations, IT, HR and so on) to consider and plan the implementation of GenAI. You might form a working group to consider how the tool will align with your strategy and existing policies, and you may want to involve users of your service or include the public voice in your decisions.

In the fourth course, Use cases for Generative AI, we will consider how to include the public voice in AI decision-making. You could explore working with other organisations to share knowledge, resources and buying power.

Further reading


The Government has published guidance on using AI in the public sector: A guide to using artificial intelligence in the public sector

Charity Excellence offers a Free Charity AI Ready Programme.

4 Model selection and customisation

As organisations begin to engage with GenAI, it is helpful to distinguish between ‘everyday’ AI tools (those integrated into existing software) and more specialist AI tools designed for specific tasks. For many, starting with everyday tools offers a practical first step before considering the adoption of more advanced or bespoke AI solutions.

Pre-trained models are more cost-effective and quicker to implement, but may be less useful for specific use cases. Some models, such as Google Gemini or Microsoft Copilot, might be free as part of your existing IT package; others are likely to be subscription-based. If you are a charity, you may be able to access nonprofit offers: for example, both Microsoft and Google provide technology grants and discounts to nonprofit organisations.

Once you have considered model selection, you should consider consequence scanning, a method that allows you to explore the following three questions about your solution:

- What are the intended and unintended consequences of this product or feature?

- What are the positive consequences we want to focus on?

- What are the consequences we want to mitigate?

These questions provide an opportunity to share insights, raise concerns and have structured conversations about potential impacts. You should document the answers and assign clear ownership for any follow-up actions. These outputs can then be integrated into your planning. If you want to learn more about how to carry out consequence scanning, you can read this guide.
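Documenting the answers can be as simple as a shared spreadsheet. As a minimal sketch, assuming a hypothetical log format (the `Question`, `Answer` and `actions_without_owner` names are illustrative and not part of any consequence-scanning toolkit), the Python below records answers to the three questions and flags any follow-up action that does not yet have an owner.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Question(Enum):
    """The three consequence-scanning questions."""
    CONSEQUENCES = "What are the intended and unintended consequences?"
    FOCUS = "What are the positive consequences we want to focus on?"
    MITIGATE = "What are the consequences we want to mitigate?"


@dataclass
class Answer:
    question: Question
    description: str
    action: Optional[str] = None  # follow-up action agreed in the session, if any
    owner: Optional[str] = None   # person responsible for that action (hypothetical field)


def actions_without_owner(log: list[Answer]) -> list[str]:
    """List answers that have a follow-up action but nobody assigned to it."""
    return [a.description for a in log if a.action and not a.owner]


if __name__ == "__main__":
    # Invented example entries for illustration only
    log = [
        Answer(Question.FOCUS, "Faster first drafts of advice letters",
               action="Measure drafting time during the pilot", owner="Operations lead"),
        Answer(Question.MITIGATE, "Staff paste client details into prompts",
               action="Add data rules to the GenAI policy"),
        Answer(Question.CONSEQUENCES, "Fewer routine queries reach caseworkers"),
    ]
    print(actions_without_owner(log))
```

The example data is invented. The design choice is simply to make ownership an explicit field, so that gaps are easy to spot before the outputs feed into your planning.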

In the fourth course, Use cases for Generative AI, there are examples of purpose-built and custom-built models that have been developed for the sector, either in-house or in partnership with technology companies. Custom-built and purpose-built models can be tailored to a business or organisation, but they are more resource-intensive and require technical expertise in model training and prompt engineering.

Further reading


If you are interested, you can learn more about open and closed source AI by reading this article: What do we mean by open-source AI?

Before deciding on any tool, ensure that you trial and demo it first to determine its effectiveness and relevance to your purpose. It is essential to carefully read and fully understand the contractual terms associated with any GenAI tool or service.

Terms and conditions for GenAI providers can typically be found on their official websites, often linked in the footer or during the sign-up process. It's crucial to review these terms to understand the scope of services, any restrictions on use, and the legal implications of using the GenAI tools.

These terms typically cover critical issues such as:

- Data rights.

- Intellectual property ownership.

- Acceptable use policies.

- Geographical location of data storage.

- Liability clauses.

If you are a charity or non-profit organisation, consider pro bono legal advice to review the terms and conditions before making any commitments. A law firm can explain the terms and conditions in plain English, ensuring that you are fully aware of the implications and potential risks involved in using the technology. Taking this step can protect your organisation and the people you support. LawWorks brokers legal advice to small not-for-profit organisations on a wide range of legal issues.

The sustainability of the technology is also an important consideration – you need to think about not only the initial costs but also the ongoing costs of using the tool. The adoption of GenAI needs to be part of long-term planning and you need a clear understanding of what the exit plan is for any tool that you acquire.

5 Data management

If you are building a tool in-house or creating a bespoke solution in partnership with a technology company, you need to consider data quality, preparation and use.

You need to think carefully about the data you use to train a model. Do you have data management systems? Are you using anonymised data? Including confidential information or personal data in these tools can have serious consequences.

If you are processing personal data, you must comply with GDPR requirements. This includes having a lawful basis for processing (such as consent), ensuring data minimisation and implementing appropriate security measures.

Ensuring data security is essential when incorporating AI in line with data protection regulations. Implementing both technical and organisational measures, including access controls, encryption, and data backup and recovery processes, is vital to reducing potential risks and maintaining data protection standards.

Research by the cyber security firm Lasso found that 13% of employee-submitted prompts included security and/or compliance risks (13% of GenAI Prompts Leak Sensitive Data – Lasso Research). This points to the need for clear internal policies and staff training if an organisation decides to use GenAI, which is discussed further in section 8 of this course.

Whatever tool you are using (off the shelf, in-house or bespoke), you need to consider data protection and evaluate the tool for compliance with GDPR and other data protection laws. The sixth course, Navigating risk management, explores risk management, and the seventh course, Understanding legal regulation and compliance, explores legal regulation in more depth. The Law Society has produced guidance on GDPR for solicitors.

6 Deployment and integration

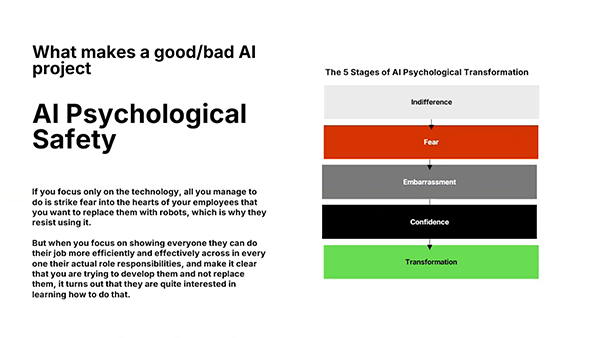

Whatever tool you choose (off the shelf, in-house or bespoke), staff will need training on how to use and prompt it, along with a clear explanation of how it benefits them and their work.

There needs to be a data management plan that clearly defines what data can be input into the tool, taking account of both legal and ethical constraints. There should also be a process for checking and verifying outputs for accuracy and reliability, and for identifying any potential liability.
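A data management plan is mainly a policy and training matter, but some organisations may also want a simple technical safeguard. The Python below is a hypothetical, deliberately simple sketch that flags two kinds of personal data (email addresses and UK National Insurance numbers) in a prompt before it is sent to a GenAI tool. The `BLOCKED_PATTERNS` and `screen_prompt` names and both patterns are illustrative assumptions; they will miss many forms of personal and confidential data and are no substitute for a clear policy.

```python
import re

# Hypothetical, deliberately incomplete patterns for illustration only.
# Real personal data takes many more forms (names, addresses, case details).
BLOCKED_PATTERNS = {
    "email address": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Simplified National Insurance number shape: 2 letters, 6 digits, final letter A-D
    "National Insurance number": re.compile(
        r"\b[A-Z]{2}\s?\d{2}\s?\d{2}\s?\d{2}\s?[A-D]\b", re.IGNORECASE
    ),
}


def screen_prompt(prompt: str) -> list[str]:
    """Return the labels of any blocked data types found in the prompt."""
    return [label for label, pattern in BLOCKED_PATTERNS.items() if pattern.search(prompt)]


if __name__ == "__main__":
    print(screen_prompt("Summarise the email from jane.doe@example.com"))
    print(screen_prompt("Draft a newsletter introduction"))
```

A non-empty result would prompt the user to remove the flagged details before continuing; what happens next (warning, blocking, logging) is a decision for your own policy.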

When introducing any new technology, it is essential to adopt an incremental rollout strategy. Start small – with pilot projects – to evaluate the tool’s effectiveness before committing to a large-scale implementation. Pilots help surface important insights, but it’s also important to assess whether a demo or pilot model can produce reliable results at scale. Some tools perform well in controlled settings, only for quality to deteriorate once deployed more broadly.

For example, research by Magesh et al. (2024) found that LexisNexis’s Lexis+ AI answered 65% of legal queries accurately, while Westlaw’s AI-Assisted tool achieved a 42% accuracy rate. Both are custom-built tools designed for legal research. These findings highlight the importance of defining acceptable accuracy thresholds for GenAI tools in your own organisation and putting review mechanisms in place to regularly assess their outputs.
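Defining an acceptable accuracy threshold implies measuring accuracy on a sample of outputs that a person has checked. The minimal Python sketch below assumes a hypothetical review log in which a reviewer marks each answer as correct or incorrect; the names `ReviewedOutput`, `accuracy` and `meets_threshold`, and the 90% threshold in the example, are illustrative assumptions rather than a recommended standard.

```python
from dataclasses import dataclass


@dataclass
class ReviewedOutput:
    query_id: str
    correct: bool  # judged by a human reviewer against a trusted source


def accuracy(sample: list[ReviewedOutput]) -> float:
    """Share of reviewed outputs that the reviewer marked as correct."""
    if not sample:
        raise ValueError("The review sample is empty")
    return sum(item.correct for item in sample) / len(sample)


def meets_threshold(sample: list[ReviewedOutput], threshold: float) -> bool:
    """True if accuracy on the sample reaches the organisation's agreed threshold."""
    return accuracy(sample) >= threshold


if __name__ == "__main__":
    # 13 of 20 answers judged correct, compared with a hypothetical 90% threshold
    sample = [ReviewedOutput(f"q{i}", i < 13) for i in range(20)]
    print(f"Accuracy: {accuracy(sample):.0%}")  # prints "Accuracy: 65%"
    print("Meets threshold:", meets_threshold(sample, threshold=0.9))
```

A small sample gives only a rough estimate, so a fuller review process would also consider sample size and the kinds of errors found, not just the headline percentage.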

As adoption increases, so too can the risk of complacency, where users begin to rely too heavily on GenAI outputs without applying critical oversight. Ongoing training and regular checks are vital to maintain high standards and ethical use. It is also crucial to think about integration: how the GenAI tool will fit into your existing systems and workflows. Seamless integration can enhance productivity; poor integration can create friction and confusion.

Finally, consider transparency with clients. Clients should be informed when and how GenAI tools are being used in your work. Clear communication builds trust and supports ethical implementation. Ultimately, both the tool and its usage must be continually monitored and evaluated. A strong implementation strategy includes not only testing and deployment, but also long-term oversight to ensure the technology remains effective, accurate and aligned with your organisation’s goals.

7 Monitoring and evaluation

GenAI tools need to be continuously monitored to ensure their use remains relevant, reliable and aligned with the organisation’s strategy. Feedback channels should be in place so that users can report on the tool’s performance, response quality, ethical compliance and overall user satisfaction.

There should be a lifecycle management process in place: an integrated system of people, tools and processes that supervises the tool or model from initial planning through development, testing, deployment and maintenance to eventual decommissioning.

To learn more about application lifecycle management, read this article and watch this video.

8 Risk management

Watch this video of Richard Nicholas, from Browne Jacobson LLP, in which he discusses the importance of governance, policy and training.


There are legal and regulatory compliance requirements for using GenAI, including data privacy and cyber security (these are covered in more detail in the sixth course, Navigating risk management, and the seventh course, Understanding legal regulation and compliance). NIST is the National Institute of Standards and Technology at the U.S. Department of Commerce.

The NIST Cybersecurity Framework helps businesses of all sizes better understand, manage, and reduce their cybersecurity risk and protect their networks and data – Understanding the NIST cybersecurity framework | Federal Trade Commission (this is applicable to organisations in the UK).

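It is a straightforward framework that is easy to incorporate into your business, organised around these five core functions (version 2.0 of the framework, published in 2024, adds a sixth function, Govern):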

- Identify

- Protect

- Detect

- Respond

- Recover

You should speak to your insurers to check that GenAI tools are covered under existing policies and understand how your insurance deals with the risks of tools producing deteriorating outputs. You should understand what systems and processes your insurers require in order for the organisation to use GenAI.

Key insurance considerations in relation to GenAI providers’ terms and conditions include checking that the provider offers sufficient liability coverage and indemnity provisions. This is essential for protecting the organisation if the AI generates incorrect results or recommendations. Review any indemnities provided to assess whether they protect against infringement claims brought by third parties. This is important because GenAI models can occasionally produce outputs that unintentionally infringe existing IP rights.

You should also check whether the insurance includes coverage for data breaches or privacy violations resulting from using the AI services. This is important given the sensitive data that may be processed by GenAI systems. If there is no coverage for data breaches, other insurance policies such as cyber insurance should be considered.

You need to consider business continuity: create a plan to address a system failure or service outage, and develop an incident response plan (IRP). Simulating real-world scenarios in a controlled environment will support the development of an IRP.

It is essential to have a GenAI policy that covers ethical considerations, monitoring of outputs for potential ethical and/or bias issues, and responsible AI guidelines:

- Law firms that have access to Practical Law can access guidance and a template for creating a ‘Generative AI in the workplace policy (UK)’. LexisNexis also provides a template.

- Socitm (Society for innovation, technology and modernisation) has produced a sample GenAI policy that organisations can customise:

- Charity Excellence has an Example UK Nonprofit AI Policy Template.

- This article includes links to GenAI policy examples and guidance on how to develop a policy:

A GenAI policy should cover the following components:

Purpose and scope: define the goals of implementing GenAI, and outline how it will be used and monitored.

State who in the organisation the policy applies to and specify the areas in which GenAI will be used, for example administrative functions and document drafting.

Usage guidelines: outline permissible uses and applications of GenAI.

Ethical considerations: address potential biases in AI outputs, ensure AI-generated content is clearly identified and that the use of AI is transparent to clients and stakeholders, and establish accountability mechanisms for AI-generated decisions and actions.

Compliance and legal standards: identify the legal and compliance obligations in relation to laws at the local, national and international levels, with special consideration given to areas such as copyright, data protection and the prevention of misinformation.

Data privacy and security: create safeguards to protect the data input into any GenAI technology, addressing data collection, storage and sharing. Make clear any prohibited activities, such as entering private or personal information into a GenAI platform.

Operational guidelines: include any training available to staff to effectively use the technology, outline how GenAI will be integrated into existing systems and establish procedures for ongoing monitoring and evaluation of AI performance and impact.

Risk management: outline the risk management framework and consider conducting regular risk assessments to identify and mitigate the potential risks associated with AI.

Review and update: include a provision for regular reviews, stating that the GenAI policy may be updated from time to time to reflect technological advancements and changes in the regulatory landscape.

The sixth course, Navigating risk management, looks at the importance of mitigating risk by having policies in place to address bias, misinformation or harm caused by outputs.

Further reading


If you are interested, you can learn more on these websites:

9 Future-proofing

The adoption of GenAI requires an ongoing strategy. GenAI is evolving rapidly, so organisations need agile strategies that can adapt to new tools, regulatory changes and market demands.

There are likely to be skills gaps, which can be bridged by upskilling staff or by partnering with other organisations or external providers.

Further reading


The Law Society has produced a guide that sets out the process from exploration to use and review of GenAI tools.

Microsoft has produced a quick guide to implementing AI in your business: The quick-start guide to implementing AI in your business.

The Government has produced a Generative AI framework for UK Government which is a detailed guide to building and implementing GenAI tools.

10 Bringing it all together

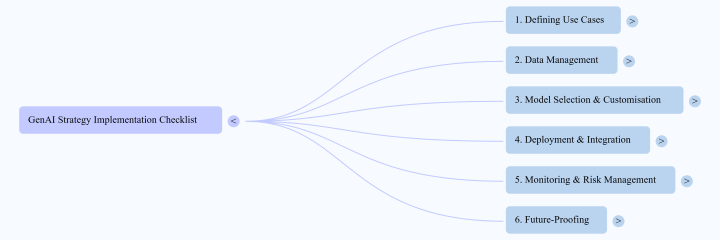

This is a checklist of some of the questions you might consider before you decide to implement GenAI into your organisation.

You can view a non-interactive version of the checklist.

You can download a PDF version of the checklist.

11 Developing a GenAI strategy

The following activity is designed as an initial starting point for developing your organisation’s GenAI strategy. The research and planning you undertake here will form the foundation of your approach, but it’s important to recognise that AI technologies – and their implications – are evolving rapidly.

As such:

- Your strategy should be considered a working document, open to regular review and revision as new opportunities, risks, and regulations emerge.

- Ongoing research, stakeholder consultation, and evaluation will be essential to ensure your strategy remains relevant, ethical, and effective.

- This activity is intended to stimulate reflection and forward thinking, helping you and your organisation take the first steps in navigating the adoption of GenAI.

How to develop a GenAI strategy for your organisation


Part 1: Research – understanding GenAI in your sector

Before creating a strategy, it’s important to understand how GenAI is currently affecting your sector.

Use the prompts below to conduct research and collect insights.

| Focus area | Guiding prompts | Your notes / research findings |
| --- | --- | --- |
| Current GenAI trends | What are the emerging GenAI applications in your sector? (E.g. document automation, triaging, case summaries.) | |
| Case studies | Are there examples of organisations in your sector successfully using GenAI? What are they doing? | |
| Challenges and risks | What concerns are being raised? (E.g. accuracy, bias, data security, ethics, regulatory compliance.) | |
| Opportunities | What potential benefits could GenAI bring to your sector? (E.g. efficiency, creativity, cost reduction.) | |
| Competitor activity | How are your competitors or peer organisations adopting or experimenting with GenAI? | |
| Regulations and policies | What are the legal, ethical or regulatory frameworks relevant to GenAI use in your sector? | |

To do this, you might want to look at industry reports from trusted sources (e.g. McKinsey, Gartner, Deloitte), explore professional associations or think tanks relevant to your field, and examine news articles, case studies or white papers about AI use in your sector.

In the eighth course, Preparing for tomorrow: horizon scanning and AI literacy, there is more discussion of different sources for researching AI, and you may want to come back to this activity after you have completed that course.

You can download the sector scan template

Part 2: Drafting your GenAI strategy

Once you have completed your research, watch this video.

Now use the following GenAI strategy template to draft a high-level strategy for your organisation.

| Section | Guiding prompts | Your response |
| --- | --- | --- |
| Vision and objectives | What do you want to achieve with GenAI? How does this align with your organisation's mission/values? | |
| Priority areas for use | What processes or services could benefit from GenAI? | |
| Ethical and legal considerations | How will you address ethical concerns? (E.g. transparency, fairness, data privacy.) Are there compliance issues to consider? | |
| Risk management | What are the risks? (E.g. hallucinations, IP issues.) How will you mitigate them? | |
| Skills and training | What new skills or training will staff need to work effectively with GenAI tools? | |
| Governance and oversight | Who will oversee GenAI use? How will decisions about AI implementation and evaluation be made? | |
| Measuring success | How will you measure the effectiveness and impact of GenAI in your organisation? (E.g. KPIs, ROI.) | |
| Next steps | What are the immediate actions? (E.g. pilot projects, policy development, vendor assessment.) | |

You can download the generative AI strategy template

Discussion

There is a lot to consider in this activity, but you might want to reflect on the biggest opportunity you discovered or the risks that concern you most. These questions will help you think about how ready your organisation is to adopt GenAI and what support or resources would make implementation easier.

This activity is intended to stimulate reflection and forward thinking, helping you and your organisation take the first steps in navigating the adoption of GenAI. You may want to come back to this activity after you have completed the eighth course, Preparing for tomorrow: horizon scanning and AI literacy.

12 Conclusion

This course has provided an overview of the considerations that will support you in thinking about adopting and implementing GenAI in your organisation.

In the next course, Use cases for Generative AI, you will explore some examples of use cases in the advice and legal sector.

Moving on

When you are ready, you can move on to the Course 3 quiz.

Further resources

AI Strategy: Process to develop an AI strategy - Cloud Adoption Framework | Microsoft Learn.

ChatGPT Release Notes: OpenAI Help Center.

Consequence Scanning – an agile practice for responsible innovators.

Consequence Scanning Agile Event Manual – TechTransformed.

Guide to Generative AI: Law Society of Scotland.

GOV.UK: Generative AI Framework Report.

Human-Centred Artificial Intelligence: Stanford University AI Index.

Integrating Generative AI Into Business Strategy: Dr. George Westerman.

The Law Society: Generative AI Essentials.

Microsoft: Guide to implementing AI.

Public voices in AI: Ada Lovelace Institute.

Research: Greater Manchester People’s Panel for AI: Manchester Metropolitan University.

Responsible AI Toolkit - GOV.UK.

Small Business Cybersecurity Corner: NIST.

Tech Horizons Report 2024: ICO.

Artificial Intelligence Playbook for the UK Government

World Economic Forum: AI in Action Report 2025.

World Economic Forum: Leveraging Generative AI for Job Augmentation and Workforce Productivity.

World Economic Forum: Generative AI: Navigating Intellectual Property.

References

Magesh, V., Surani, F., Dahl, M., Suzgun, M., Manning, C.D. and Ho, D.E. (2024) ‘Hallucination‐Free? Assessing the Reliability of Leading AI Legal Research Tools’, Journal of Empirical Legal Studies. Available at: https://onlinelibrary.wiley.com/doi/pdf/10.1111/jels.12413 (Accessed 9 May 2025).

World Economic Forum (2025) AI in Action: Beyond Experimentation to Transform Industry. Available at: https://reports.weforum.org/docs/WEF_AI_in_Action_Beyond_Experimentation_to_Transform_Industry_2025.pdf (Accessed 9 May 2025).

Acknowledgements

Grateful acknowledgement is made to the following sources:

Every effort has been made to contact copyright holders. If any have been inadvertently overlooked the publishers will be pleased to make the necessary arrangements at the first opportunity.

Important: *** against any of the acknowledgements below means that the wording has been dictated by the rights holder/publisher, and cannot be changed.

Course banner 552239: Alexander Limbach / shutterstock

552244: kalhh / Pixabay

552245: geralt / pixabay

552246: TheDigitalArtist- / pixabay

558977: NotebookLM

555638: Created by OU artworker using OpenAI (2025) ChatGPT 4