Printable page generated Friday, 27 February 2026, 4:54 PM

Use cases for Generative AI

1 Introduction

This course explores use cases for GenAI – depending on your time commitments, you may choose to study the course in separate parts, as shown below.

Course sessions

The sessions are:

Session 1: Introduces general use cases for GenAI – 90 minutes (Sections 1, 2, 3 and 4).

Session 2: Focuses on how AI solutions are being applied within the advice and legal sectors – 90 minutes (Sections 5, 6, 7 and 8).

You can work through both sessions in order or, depending on your role and interests, you might prefer to focus on just one.

This is a self-paced course of around 150–180 minutes including a short quiz.

A digital badge and a course certificate are awarded upon completion. To receive these, you must complete all sections of the course and pass the course quiz.

An introduction to the course

Listen to this audio clip in which Francine Ryan provides an introduction to the course.

Discussion

LLMs present opportunities to enhance productivity and streamline repetitive tasks, as well as freeing up capacity and allowing teams to focus on higher-value work. But they can also produce errors, their outputs require human oversight, and these tools could lead to de-skilling within organisations. As such, AI's integration must be approached thoughtfully and strategically.

Learning outcomes

After completing this course, you will be able to:

Identify common use cases for GenAI and reflect on how these applications might be relevant to your own work or organisation.

Evaluate how AI technologies are being implemented within the advice and legal sectors.

Understand and apply a strategic framework for the adoption of AI tools.

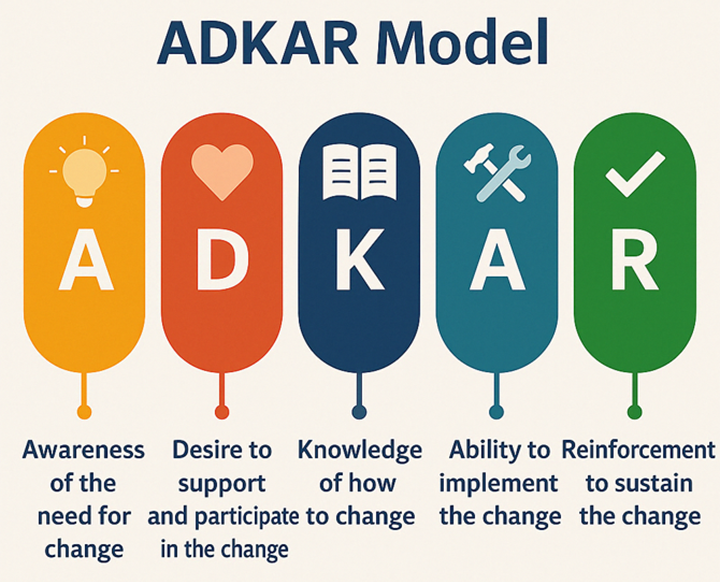

Understand the ADKAR model of change management and how to apply it in your organisation.

Glossary

A glossary that covers all eight courses in this series is always available to you in the course menu on the left-hand side of this webpage.

Session 1: Introduces general use cases for GenAI – 90 minutes

2 AI personas

Slack’s Workforce Lab has conducted research exploring employees' attitudes toward AI, referring to these differing perspectives as ‘AI personas’. In the second course, Skills and strategies for using Generative AI, we used the term ‘AI personas’ to describe the roles or characters we ask AI to adopt when responding to prompts. Here the term is used differently: it reflects how individuals perceive, relate to and engage with AI, based on their personal attitudes and experiences.

Slack suggests there are five AI personas:

- The Maximalist

- The Underground

- The Rebel

- The Superfan

- The Observer

The research from Slack suggests that people have mixed feelings about AI. Organisations considering integrating AI tools should be aware of and address different attitudes towards AI.

What AI persona are you?

Discussion

You may wish to reflect on your own AI persona and consider how it shapes your views and assumptions about AI. Exploring the other personas can also offer valuable insights – what perspectives might your colleagues identify with, and how do these differ from your own? Personas are a useful tool for understanding the diverse ways people engage with AI. Do they help surface underlying values, concerns and expectations?

3 General use cases for GenAI

In the first course, Understanding Generative AI, you learnt about GenAI technology and how general-purpose public large language models (LLMs) such as Claude, ChatGPT and Microsoft Copilot can generate human-like text, engage in conversations, create images, write computer code and answer questions.

LLMs can be used to streamline workflows and assist with tasks that might help us become more productive and efficient. Keeping track of the number of GenAI products being released is challenging – at the time of writing, ChatGPT was still the most popular, but there are other models emerging.

As organisations begin to explore GenAI tools it is important to distinguish between ‘everyday’ AI tools (ChatGPT, Claude, etc.) and more specialist AI tools. Starting with the ‘everyday’ tools offers a practical first step before considering the adoption of more advanced or bespoke AI solutions.

This course does not recommend any specific GenAI tools. Instead, it aims to raise awareness of the wide range of tools available and to emphasise the importance of selecting the most appropriate one for your particular task and context.

In this section of the course we explore general use cases for LLMs.

Further reading

If you want to find out more about the most popular AI-powered tools in 2025, you can read The Top 100 Gen AI Consumer Apps – 4th Edition | Andreessen Horowitz.

AI is transforming the world of work, are we ready for it?

Watch this video ‘AI is transforming the world of work, are we ready for it?’ from the Financial Times (2024).

Reflect on how organisations experiment and invest when things are changing at such a rapid pace.

Discussion

The film highlights that AI might be the biggest change to the workplace in our lifetime, but it also identifies a lot of hype and concern. Organisations should think carefully about their needs, recognise the imperfections and limitations of the technology, and critically assess where it might be appropriate in their business.

Before exploring the types of tasks that free and paid-for general-purpose public LLMs – such as Microsoft Copilot, DeepSeek or Gemini – can support, it is important to emphasise the need for responsible and ethical use of GenAI tools. It is also important to understand that publicly available GenAI tools (which can be either free or subscription-based) differ from corporate versions, particularly in their data usage policies.

As discussed in the other courses in this series, when using any LLM it is advisable to:

- Read the terms and conditions.

- Be aware of the legal implications of AI-generated content.

- Understand the rules on data protection, intellectual property and copyright.

- Avoid inputting any sensitive personal or organisational information into standard public versions of LLMs.

Organisations should establish clear guidelines and policies outlining what information can be shared with LLMs and which tasks are appropriate for their use. Human oversight and critical thinking remain essential.

Over-reliance on LLMs can lead to de-skilling so it is important to maintain a balanced approach. LLMs should be viewed as collaborative tools that assist, rather than replace, human skills and expertise.

General use cases for LLMs

If LLMs are used ethically and responsibly, several general applications might be beneficial:

- Language processing – real-time translation, summarisation, information extraction.

- Content creation – creative writing, text creation.

- Conversational AI – virtual assistants, customer service chatbots, learning assistants.

- Professional tools – code generation, legal document analysis, academic research.

- Creative applications – music lyrics, poetry, scriptwriting.

- Media applications – video creation, audio creation.

- Code generation – writing code, detecting errors, debugging code.

Prompt

To sum up the potential use cases for LLMs we asked the free version of Claude 3.7 Sonnet to:

‘Write a concise 100-word paragraph highlighting the key use cases for Large Language Models in modern business environments. Focus on practical examples that deliver measurable value. Maintain a professional tone for executives.’

This is what it said:

Response

‘Large Language Models are revolutionizing how organisations operate across domains. In customer service, AI chatbots handle inquiries 24/7, reducing wait times and increasing satisfaction. Content teams use LLMs to draft blogs, reports, and marketing copy in seconds rather than hours. HR departments streamline recruitment by automatically screening resumes and generating personalized communications. Software developers accelerate coding with AI-powered assistants that suggest solutions and explain complex algorithms. Legal teams extract key provisions from contracts and identify potential risks automatically. Research departments synthesize findings from thousands of documents instantly. When implemented thoughtfully, LLMs boost productivity, enhance decision-making, and create competitive advantage through automation of knowledge work.’

How would you rate the answer? Claude produced this answer within seconds, much quicker than most of us could write a similar answer. It is slightly over the word count at 104 words, but it does provide a summary of use cases for LLMs in a professional tone.
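A word limit like the one in this prompt is easy to check mechanically. The following is a minimal Python sketch, assuming that a 'word' is simply any run of characters separated by whitespace (so 'decision-making' and '24/7' each count as one word). The function names `count_words` and `over_limit_by` are illustrative and do not come from any particular tool.

```python
def count_words(text: str) -> int:
    """Count whitespace-separated tokens in text."""
    return len(text.split())


def over_limit_by(text: str, limit: int) -> int:
    """Return how many words text exceeds limit by (0 if within the limit)."""
    return max(0, count_words(text) - limit)


sample = "When implemented thoughtfully, LLMs boost productivity."
print(count_words(sample))       # 6
print(over_limit_by(sample, 5))  # 1
```

Different tools count words slightly differently (for example, around hyphens), so treat a result that is close to the limit as approximate.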

In the second course, Skills and strategies for using Generative AI, you learnt about prompting frameworks and prompt libraries. Using that knowledge, you could consider using an LLM for:

Content creation

- Document drafting, first drafts of reports, papers and case studies.

- Generating meeting agendas and detailed minutes and actions.

- Drafting internal communications, such as newsletters, marketing materials, website content, blogs and policy documents.

- Composing emails and summarising long email threads to extract key points and decisions.

- Simplifying content or generating content in a specific tone or style.

- Proofreading and suggesting improvements to the text.

- Generating response templates for common questions or enquiries.

- Creating presentations, slide decks, and content based on specific topics and audiences.

- Generating speaker notes with talking points and transitions.

- Suggesting data visualisation approaches for complex information.

Research assistance

- Summarising lengthy research papers and reports into actionable insights.

- Extracting relevant data points from multiple sources.

Examples of tools

- Perplexity is a search engine that uses LLMs to answer queries, drawing on sources from the web and citing links alongside the text response.

- Elicit is an AI research assistant that researchers can use to support literature reviews; it searches academic papers from the Semantic Scholar corpus.

- Paid versions of LLMs like ChatGPT now offer a ‘deep research’ function where the LLM browses the web, reads multiple sources and extracts relevant information. It then analyses that information to provide a report with citations and explanations. For more information on deep research, you can read this blog The Differences between Deep Research, Deep Research, and Deep Research.

- NotebookLM is a personalised AI assistant designed to help users better understand and interact with their own documents. It allows you to upload sources (like Google Docs, PDFs or copied text), and then ask questions, generate summaries, or brainstorm ideas based on that content. Its key features include source-grounded responses: it only answers based on the documents you upload. It can produce auto-generated summaries, glossaries and FAQs, and it can help you compare and synthesise across different documents. It can also generate an AI-powered audio conversation in which two virtual hosts discuss the content you have uploaded; the conversation is automatically generated from your notes, documents or research materials.

Visual and audio support

- Image generation: AI systems such as DALL-E or Midjourney can create images based on textual descriptions.

- Audio content: podcast script creation, creating descriptive narration for visual content to improve accessibility, interview question preparation and music composition.

- Video content: script creation, storyboarding assistance, YouTube titles and descriptions, content ideation and video editing support.

- Video post-production: automatic caption generation, content summarisation, thumbnail text generation, chapter marking and content tagging.

Creative assistance

- Idea generation: product ideas, new service offerings.

- Brainstorming: marketing strategies, event planning.

- Overcoming creative blocks: fresh perspectives or re-framing problems.

- Role-playing: using different personas to offer different viewpoints.

- Feedback and refining: reviewing your work, giving constructive feedback, edits and suggestions.

- Questioner: ask the LLM to suggest questions for you to consider on a topic.

Productivity

- Meeting summaries or transcripts.

- Training programmes: researching and creating training.

- Creating internal FAQs and checklists.

- Translation of documents.

- Redaction of documents.

- Integrating GenAI into existing tools such as Outlook, or adding GenAI tools as browser extensions, for example in Chrome.

Different ways you can use GenAI tools

Listen to this audio clip in which Francine Ryan discusses the different ways you can use GenAI tools.

Think about one way you might use a GenAI tool in your work or in your organisation and how it might help you.

Discussion

There may be lots of ways in which you can use an LLM in your work or in your organisation. However you use an LLM, you need to understand the legal and privacy implications as well as how to prompt it, guide it and think critically about its outputs.

We asked ChatGPT 4 (April 2025) for some suggestions of which LLMs should be used for different tasks. You can see below what is suggested.

You can check for updates and improvements to LLMs through their release notes – read the latest updates to ChatGPT and Google Gemini. The technology is moving fast, and you should always do your research into the tools that you think are appropriate for you or your organisation. This is explored further in the eighth course, Preparing for tomorrow: horizon scanning and AI literacy.

Further reading

If you are interested, you can learn more about this topic from a table of different AI tools which gives a short description of how each of them works.

Using LLMs for different tasks

In the second course, Skills and strategies for using Generative AI, you learnt about CLEAR and CRISP for prompting – with the overarching prompting principles being:

- Clarity: Use straightforward, unambiguous language.

- Specificity: Narrow the topic or task to focus the response.

- Context: Include relevant background (e.g. jurisdiction, timeframe, audience).

- Purpose: State what the output is for (e.g. training, publication, client communication).

- Format: Specify the desired structure (e.g. bullet points, summary, list).
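One way to make these principles a habit is to treat each as a field you fill in before writing the prompt. The following is a minimal Python sketch of that idea. The `PromptSpec` class, the `build_prompt` function and the example wording are all hypothetical; they are not part of the CLEAR or CRISP frameworks or of any LLM tool.

```python
from dataclasses import dataclass


@dataclass
class PromptSpec:
    """Hypothetical container for the principles listed above."""
    task: str      # Clarity and specificity: one narrow, plainly worded task
    context: str   # Context: background such as jurisdiction or audience
    purpose: str   # Purpose: what the output is for
    fmt: str       # Format: the structure you want back


def build_prompt(spec: PromptSpec) -> str:
    """Join the non-empty parts into a single labelled prompt string."""
    parts = [
        ("Task", spec.task),
        ("Context", spec.context),
        ("Purpose", spec.purpose),
        ("Format", spec.fmt),
    ]
    return "\n".join(
        f"{label}: {value.strip()}" for label, value in parts if value.strip()
    )


spec = PromptSpec(
    task="Summarise the pasted housing advice leaflet in plain English.",
    context="The readers are tenants in England.",
    purpose="The summary will be used in client communication.",
    fmt="Five bullet points.",
)
print(build_prompt(spec))
```

An empty field is simply left out of the prompt, which makes it easy to see at a glance which principle you have not yet addressed.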

LLMs for different tasks

Choose one or two of the following options. Copy the prompt into an LLM tool and generate a response. You may want to change your prompt using one of the prompt frameworks you learnt about in the second course, run the query again and compare the different outputs.

Option 1: Draft a professional email

Prompt to use: I am a [Job title] working for [organisation]. Write a professional email to a client, thanking them for attending your recent webinar on [topic] and offering them a follow-up meeting. Keep it professional and concise.

Option 2: Brainstorm a new product or service idea

Prompt to use: I am a [Job title] working for [organisation]. Brainstorm three creative new product or service ideas for a small [insert industry] business and create a table that I could include as part of a presentation.

You could customise the prompt, for example, brainstorm three creative new service ideas for an advice charity that is focused on supporting clients with family law advice.

Option 3: Generate social media post ideas

Prompt to use: I work for [organisation] as a [Job title]. Generate three catchy social media post ideas to promote an upcoming charity run event to raise funds for [Project]. Make the posts engaging and include a call to action.

Option 4: Create a LinkedIn post

Prompt to use: I am a [Job title] and I gave a presentation on [Topic] to [Organisation]. Write a LinkedIn post about my recent presentation. Thank the audience for attending, highlight [key takeaway], and invite people to connect or ask questions. Keep the tone professional but approachable.
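If you keep prompts like these in a prompt library, the square-bracket placeholders can be filled in programmatically. The following is a minimal Python sketch using the Option 1 prompt. The function name `fill_placeholders` and the example values are illustrative assumptions, and the sketch deliberately raises an error if a placeholder is left unfilled, so that a prompt containing '[topic]' is never sent by accident.

```python
import re

# Template text taken from Option 1 above; square brackets mark placeholders.
TEMPLATE = (
    "I am a [Job title] working for [organisation]. Write a professional email "
    "to a client, thanking them for attending your recent webinar on [topic] and "
    "offering them a follow-up meeting. Keep it professional and concise."
)


def fill_placeholders(template: str, values: dict) -> str:
    """Replace each [placeholder] with its value; fail if one is missing."""
    def substitute(match):
        name = match.group(1)
        if name not in values:
            raise KeyError(f"No value supplied for placeholder [{name}]")
        return values[name]

    return re.sub(r"\[([^\]]+)\]", substitute, template)


# Hypothetical example values.
prompt = fill_placeholders(
    TEMPLATE,
    {
        "Job title": "caseworker",
        "organisation": "a local advice charity",
        "topic": "energy bills",
    },
)
print(prompt)
```

Remember that anything you substitute into a prompt is sent to the LLM, so the earlier guidance about not entering sensitive personal or organisational information still applies.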

Reflection questions

Consider the quality and usefulness of the General use cases for GenAI that you've read about in this section.

| No. | Question | Your response |
| --- | --- | --- |
| 1 | How relevant were the ideas or suggestions? Rate 1 (poor) to 5 (very good) | |
| 2 | How creative or original did you find them? Rate 1 (poor) to 5 (very good) | |
| 3 | Would these ideas be practical or feasible in a real-world setting? Rate 1 (not really) to 5 (very usable) | |
| 4 | How much refinement would they need before you could use them? None / Minimal / Moderate / Significant | |
| 5 | How helpful was this for saving time or sparking new thinking? Not helpful / Slightly helpful / Very helpful | |
| 6 | What did the LLM do well in terms of brainstorming? | |
| 7 | Were there any ideas you found surprising or insightful? | |
| 8 | Would you use an LLM for brainstorming in your work? Why or why not? | |
| 9 | If you modified the prompt, how did that change the results? | |

Discussion

It is useful to rate the quality and usefulness of these exercises, and of any task you ask an LLM to complete, to ensure that it is helping you to be more productive and efficient.

4 Tips and tricks

Quality varies by prompt

The quality of the response depends on the clarity and detail of your prompt. As you learnt in the second course, Skills and strategies for using Generative AI, the more specific you are about tone, audience or purpose, the better the output is likely to be.

When crafting prompts it is essential to ensure they are straightforward and unambiguous. Avoid using vague language and strive to be as precise as possible. Clear prompts help the AI understand exactly what is being asked, leading to more accurate responses. Narrowing down the topic is crucial to avoid broad or general answers. Specify the exact information or type of response you are seeking. This focus helps the AI provide more relevant and detailed answers, tailored to your needs.

Providing relevant background information is key to guiding the AI. Context helps the AI understand the nuances of the query and consider the different aspects of the situation, which leads to more accurate, comprehensive and insightful responses.

Well-crafted prompts also save time by reducing the need for follow-up questions or clarifications. By being clear and specific from the outset, you minimise the chances of miscommunication and streamline the interaction.

Checking and editing is still essential

LLMs provide a good starting point, but the response still needs to be checked, edited and refined. This could be adding personal insight, refining tone or adjusting phrasing – your voice is still key. Remember you are responsible for the content you produce and ensuring that it aligns with your values and/or the values of your organisation.

Time-saving benefits

Using GenAI tools can help save time, particularly during the early stages of drafting or brainstorming. Even if the output requires refinement, starting with a first draft gives you something to edit. However, it’s important to remain cautious about over-reliance on these tools. Always critically evaluate whether the verification and editing process truly results in time saved. If we are going to use these tools regularly, we need to ensure they provide real efficiency gains.

Surprising creativity and sometimes not!

LLMs can offer different perspectives, but the content can be generic or miss the deeper nuance. We should think of LLMs as assistants, not replacements.

Think about how you refine your prompts and experiment with adding more context:

- Who’s the audience?

- What’s the goal of the piece?

- What tone or style do you want?

- Is there a prompt framework or prompt from a prompt library that could help you?

Iterate and experiment

Ask the LLM to try again or rewrite in a different style. Play around with instructions like:

- Try tone descriptors, such as ‘make it more friendly but professional’.

- Use a storytelling approach.

- Focus on benefits instead of features.

- Specify the length of the output.

- Use action verbs such as ‘draft’ or ‘create’.

Use it for brainstorming, not just writing

LLMs can help you get unstuck, whether you are generating ideas for content, problem-solving or exploring different perspectives.

Be aware of its limitations

Remember that LLMs don’t have judgment, expertise, or lived experience. Fact-checking and ensuring alignment with your voice and values are essential. In the fifth course, Ethical and responsible use of Generative AI, you will learn more about responsible and ethical use of GenAI.

Useful tasks

LLMs can be useful for tasks such as proofreading. The box below contains an example of a prompt from Write with AI (Cole and Bush, 2025) that can be used to ask an LLM to proofread a document. It is a very detailed prompt that asks the LLM to review your document line by line and suggest changes. Compare this to the prompts above and consider how much more detail is included.

Prompt

You are a perfectionist, meticulous proofreader GPT and an editor with a keen eye for detail and a mastery of the English language. Your goal is to thoroughly review and revise the provided draft text and suggest edits to improve clarity, flow, grammar, and overall quality of the document. Use English United Kingdom.

Follow this process to proofread and edit the draft text:

Step 1: Read through the entire draft to understand the overall message and structure before making any edits.

Step 2: Perform a detailed line-by-line edit, watching for:

- Spelling, grammar and punctuation errors.

- Awkward phrasing or sentence structure.

- Redundant or unnecessary words and phrases.

- Incorrect or inconsistent formatting.

- Factual inaccuracies or unsupported claims.

Step 3: Suggest reordering sentences or paragraphs to improve the logical flow and coherence of the writing. Use transition words and phrases to link ideas.

Step 4: Provide recommendations to enhance the draft's overall impact and persuasiveness:

- Strengthen word choice by replacing weak or vague terms with more powerful language.

- Vary sentence length and structure to improve readability and keep the reader engaged.

- Ensure the main points are clearly stated and well-supported.

- Maintain a consistent voice and tone aligned with the purpose and intended audience.

For any major revisions, provide a brief rationale to help the author understand your thought process and learn for the future. Preserve the original author's voice and intent. Avoid making edits that change the core meaning.

Be respectful and constructive with feedback. The goal is to help the author improve, not to criticise.

Prioritise edits that have the greatest impact on clarity and persuasiveness of the writing.

Output format:

Summary: [Provide a quick 2–3 sentence summary of the key points and overall message of the draft text]

Mistakes/Errors: [List out all the mistakes and errors you observed in the draft text, including spelling, grammar, punctuation, formatting, factual inaccuracies, awkward phrasing, etc.]

Revised Draft: [Insert the full edited and proofread text here, with all the mistakes corrected and suggestions implemented. Preserve as much of the original formatting as possible.]

Detailed Edit Notes: [Use this section to provide a more detailed explanation of the edits you made and your reasoning behind them. Reference specific line numbers where these are helpful. Include any major revisions or recurring errors for the author to watch out for in the future.]

The value of this prompt is that it is using an LLM as an assistant, not as a replacement. If you treat the AI like a human rather than as a tool you can get a better response.

This prompt is encouraging the user to learn and gain a better understanding of the structure of language. This might be an example of where an LLM could add value to an individual or an organisation. You might want to try this prompt and see how effective it is. You could also start your own prompt library where you keep a record of your prompts for the different tasks you use an LLM for. Prompts can be both written and audio.

On YouTube there are lots of videos about creating effective prompts for the types of tasks that might be appropriate for a public LLM and the second course, Skills and strategies for using Generative AI, provided links to prompt libraries in the ‘Further reading’ section.

Session 2: Focuses on how AI solutions are being applied within the advice and legal sectors – 90 minutes

5 GenAI use cases in the advice sector

In this section we explore some examples of how AI solutions are being applied in the advice sector.

These typically make use of custom or purpose-built models rather than more general-purpose public LLMs. Although the models have been developed for a particular organisation, the tasks they are doing are transferable to other sectors.

Different types of models

Read this article Overview: General Purpose LLMs vs Purpose-Built LLMs vs Custom LLMs to learn more about different types of models.

Now match each of the following terms with its correct definition.

Two lists follow. Match one item from the first list with one item from the second. Each item can only be matched once. There are three items in each list.

1. A general-use language model like ChatGPT or Gemini that is open to the public, often trained on internet-scale data and not tailored to a specific user.

2. A language model designed for a specific task or industry, such as legal, medical, or customer service applications.

3. A language model developed in-house or by a vendor specifically for an organisation’s needs, trained on its own private data.

Match each of the previous list items with an item from the following list:

a. Purpose Built LLM

b. Custom LLM

c. General Purpose LLM

- 1 = c

- 2 = a

- 3 = b

Answer

General Purpose LLM = A general-use language model like ChatGPT or Gemini that is open to the public, often trained on internet-scale data and not tailored to a specific user.

Purpose Built LLM = A language model designed for a specific task or industry, such as legal, medical, or customer service applications.

Custom LLM = A language model developed in-house or by a vendor specifically for an organisation’s needs, trained on its own private data.

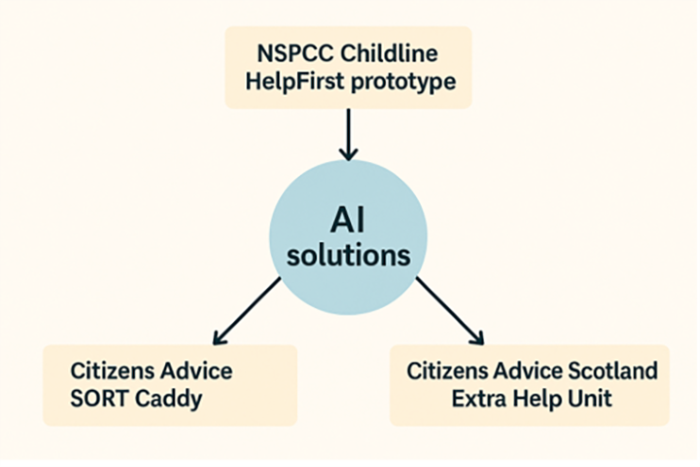

Please note: The AI-generated image above is not correct. The arrow at the top should be pointing upwards, not downwards. This is why you should always check your AI output and why the ‘human in the loop’ is important.

Case Study: NSPCC Childline HelpFirst prototype

HelpFirst worked with the NSPCC to produce AI-generated summaries. It is an example of the potential of a custom-made AI model to help organisations with routine tasks that can free up time to prioritise core delivery. Although this solution was developed in a voluntary organisation, it could be relevant for other sectors.

Approach taken to implement an AI solution

Read this article How AI could help Childline counsellors spend more time talking to children and young people and summarise the key messages that underline the approach taken to implementing the AI solution.

Discussion

There might be several points you took away from this case study including:

- The importance of involving stakeholders in designing the solution.

- Customised solutions often perform better than general tools.

- The ‘human in the loop’ is critical: the output must be reviewed by a human. The aim is for the AI solution to support the work of the human, not replace it.

Citizens Advice SORT Caddy

Watch this video by Stuart Pearson from Citizens Advice – Stockport, Oldham, Rochdale and Trafford (SORT).

Stuart is Head of Innovation at Citizens Advice SORT, and his team have developed Caddy, an AI copilot used by advisers to provide answers to questions that are validated and signed off by a supervisor. Caddy is an LLM, but it has been custom-built by in-house technologists at Citizens Advice SORT and draws on content from trusted websites. It produces AI-generated advice based on existing documentation directly within the messaging interface (Google Chat, Microsoft Teams, etc.).

Some key points about the Caddy use case:

- Caddy answers are regulated by ‘human in the loop’ validation: answers are presented to the public only after they have been approved by a supervisor.

- The responsibility for ensuring the answer to the question is correct lies with the supervisor, not Caddy.

- The purpose of Caddy is to support Citizens Advice to improve its processes in order to help more people and is an example of AI implementation for public good.
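The ‘human in the loop’ pattern described in these points can be pictured as a gate between an AI-generated draft and the person who eventually receives it. The following is a minimal Python sketch of that pattern only. It is not how Caddy is actually built, and the class, method and supervisor names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DraftAnswer:
    """An AI-generated draft that waits for supervisor sign-off."""
    question: str
    draft_text: str
    approved_by: Optional[str] = None  # name of the supervisor, once approved

    def approve(self, supervisor: str) -> None:
        """Record that a named supervisor has checked and accepted the draft."""
        self.approved_by = supervisor

    def release(self) -> str:
        """Return the text for use with the public, but only after approval."""
        if self.approved_by is None:
            raise PermissionError("Draft has not been approved by a supervisor")
        return self.draft_text


draft = DraftAnswer("Can my landlord raise my rent?", "Draft answer text goes here.")
draft.approve("supervisor_1")
print(draft.release())
```

Recording who approved each answer reflects the point above that responsibility for correctness sits with the supervisor rather than with the AI tool.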

Citizens Advice Scotland

Citizens Advice Scotland Extra Help Unit provides support to vulnerable domestic consumers and micro-businesses across the UK with their energy complaints. They embarked on a technology challenge via CivTech Scotland to explore ‘How can technology help quickly identify and prioritise support for the people in the most vulnerable situations’.

Watch this video which explains the work of the Extra Help Unit and what a tool could mean to the organisation: CivTech Challenge 8.1 — Identifying and prioritising support — CivTech (8:07 minutes). You can choose to watch the entire video or view the segment from the beginning up to 5:16.

The Extra Help Unit partnered with HelpFirst to develop a tool that supports the triaging and prioritisation of cases by highlighting those most at risk. The tool is integrated within the Case Management System and uses an LLM to scan all notes and emails that come into the service. It works in real time, updating the case profile within 30 seconds: it adds a score to each case and flags any risk factors. The scores are:

- S – Immediate Safeguarding.

- P1 – Priority 1.

- P2 – Priority 2.

- U – Urgent.

- C – Complaint (no risk).

| Score | Risk | Definition |
| --- | --- | --- |
| S | Safeguarding risk | Is the client at risk of self-harm? For instance, do they mention suicidal thoughts or ideation? Do they imply they might do physical damage to themselves or to property? Do they reference wanting to ‘end it all’ or say it’s ‘not worth living’? |
| P1 | Off-supply risk (high) | Indicates that the client is currently off-supply, meaning there is a lack of electricity or gas supply in the property. This may be indicated by phrases such as ‘off-supply’, ‘disconnection’, or explicit mentions like ‘Is Consumer Off Supply: Y’ or ‘On/off supply: Off-supply’. |
| P2 | Off-supply risk (medium) | Indicates that the client is at risk of being off-supply soon. This includes scenarios where the client is on emergency credit (EMCR); is in friendly credit; has less than £10 positive credit on their meter; or lacks access to electricity or gas for part of the day. These scenarios might be mentioned in the text as ‘customer is on emergency credit’, ‘£3.70 EMCR on the electric’, or ‘£6 reg credit’. |
| U | Urgency risk | Does the text mention ‘warrant’ or ‘legal proceedings’? Does the text state the term ‘urgent’ in the notes? Does the text refer to a date on which supply will be cut off if the consumer does not pay an amount of money or carry out a task for the supplier? Are bailiffs due to attend? |
| C | No risk detected | If no risk is detected, the case is scored ‘C’, indicating a standard complaint. |
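To make the definitions above concrete, here is a minimal sketch of how the example phrases could map to scores. It is not how the HelpFirst tool works: the tool uses an LLM, whereas this sketch uses simple keyword matching, and the `RULES` list, the `score_case` function and the order in which rules are checked are all assumptions made for illustration.

```python
# Illustrative only: keyword rules standing in for the LLM's judgement.
# The phrases come from the definitions table; the rule order is an assumption.
RULES = [
    ("S", ["end it all", "not worth living", "suicidal"]),
    ("P1", ["off-supply", "off supply: y", "disconnection"]),
    ("P2", ["emergency credit", "emcr", "friendly credit"]),
    ("U", ["warrant", "legal proceedings", "urgent", "bailiff"]),
]


def score_case(text: str) -> str:
    """Return the first score whose phrases appear in the text, else 'C'."""
    lowered = text.lower()
    for score, phrases in RULES:
        if any(phrase in lowered for phrase in phrases):
            return score
    return "C"


print(score_case("Customer is on emergency credit, £3.70 EMCR on the electric"))
```

Keyword rules like these miss context (for example, ‘no longer off-supply’ would still match), which is one reason a tool of this kind might rely on an LLM, with human oversight, rather than fixed phrases.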

These are the risk factors that the LLM flags.

| Risk factor | Definition |
| --- | --- |
| Age | Is the client or a member of their household over state pension age (66)? |
| Dependents | Are there any children (i.e. people aged between 5 and 18) in the premises? Do they care for someone with disabilities? |
| Equipment | Does the client have medical equipment that relies on gas/electricity? This might include the following: power wheelchairs or mobility devices, ventilators, oxygen concentrators, infusion pumps, intravenous or feeding equipment, chairlifts, stairlifts, communication devices, nebulisers, CPAP (continuous positive airway pressure), BiPAP (bilevel positive airway pressure), suction pumps, dialysis machines, hoists, medical-related showering/bathing need, need to wash clothes (washing machine), electrolarynx, hospital bed. Medication that must be kept in the fridge such as insulin, eye drops, antibiotic liquids, etc. |
| Under 5 | Is there a baby present in the premises? Do they mention children under the age of 5? |
| Health | Is there evidence that the client’s mental health is poor (e.g. depression, schizophrenia)? Is there evidence of physical health needs? This may include any physical health conditions or disabilities mentioned. |
| Safeguarding | Is there an active safeguarding issue? Please only output risk present if the case directly mentions domestic violence or states the words ‘active safeguarding issue’ or ‘safeguarding: y’. |
| Immediate safeguarding | Is the client at risk of self-harm? For instance, do they mention suicidal thoughts or ideation? Do they imply they might do physical damage to themselves or to property? Do they reference wanting to ‘end it all’ or say it’s ‘not worth living’? |

The scores appear on the dashboard and on the list of cases, which helps the service to prioritise and triage cases.
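As a rough illustration of what prioritising by score rather than by date could look like, the sketch below sorts a list of cases. The `Case` class, the `triage_order` function and the sample cases are hypothetical, and the ranking (S first, then P1, P2, U and C) is an assumption based on the order in which the scores are listed above.

```python
from dataclasses import dataclass
from datetime import date

# Assumption: a lower rank is handled first, following the order the scores are listed in.
SCORE_RANK = {"S": 0, "P1": 1, "P2": 2, "U": 3, "C": 4}


@dataclass
class Case:
    reference: str  # hypothetical case identifier
    received: date
    score: str


def triage_order(cases: list[Case]) -> list[Case]:
    """Order by score rank first, then oldest first within the same score."""
    return sorted(cases, key=lambda c: (SCORE_RANK[c.score], c.received))


cases = [
    Case("A", date(2025, 3, 1), "C"),
    Case("B", date(2025, 3, 3), "P1"),
    Case("C", date(2025, 3, 2), "S"),
    Case("D", date(2025, 3, 1), "P1"),
]
print([c.reference for c in triage_order(cases)])  # ['C', 'D', 'B', 'A']
```

Sorting by date alone would put case A first, even though it carries no detected risk.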

It is important to stress that the normal processes used by the Extra Help Unit have not changed; the AI tool is only an aid.

Benefits seen by CAS include:

- Customers who are most in need can be triaged more quickly, and those working in the service now have the detail to target cases by priority rather than by date order, which was not possible before.

- Managers can use the scores to allocate cases, and the tool also highlights P2 and urgent cases at the list level, giving the service much greater insight into the cases.

- The AI is returning over 80% accuracy on most scores and alerts.

- The tool has helped the team get a better understanding of the cases they are receiving. It supports them to identify the most critical cases, which has helped to improve the mental wellbeing of staff.
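The course does not say how the accuracy figure was measured. One plausible approach, sketched below under that assumption, is to compare the tool's score with a human reviewer's score for a sample of cases and report the share that match for each score. The function name and the sample data are hypothetical and are not real Extra Help Unit figures.

```python
from collections import defaultdict


def accuracy_by_score(pairs):
    """pairs: iterable of (human_score, tool_score).

    Returns, for each human-assigned score, the share of cases
    where the tool gave the same score.
    """
    totals = defaultdict(int)
    correct = defaultdict(int)
    for human, tool in pairs:
        totals[human] += 1
        if human == tool:
            correct[human] += 1
    return {score: correct[score] / totals[score] for score in totals}


# Hypothetical review sample for illustration only.
sample = [("P1", "P1"), ("P1", "P2"), ("P1", "P1"), ("P1", "P1"), ("C", "C")]
print(accuracy_by_score(sample))  # {'P1': 0.75, 'C': 1.0}
```

Breaking the figure down by score matters because a missed ‘S’ case is far more serious than a missed ‘C’ case.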

AI has given the Extra Help Unit a safety net that they didn’t have before that protects consumers and staff.

Further reading

For more information you can read: Embracing AI: How charities can benefit | Citizens Advice Scotland

Implementing AI tools

There is a lot of interest within the voluntary and advice sector in how GenAI can support organisations to help more people. However, it is essential to think carefully before integrating a tool: if implementation goes wrong, there can be significant reputational risks and a risk of harm to service users.

Citizens Advice SORT had the technical capability within its innovation team to build a custom model inhouse. Citizens Advice Scotland and the NSPCC worked with HelpFirst to build their solutions. Wyser is another company that is working with advice and public sector organisations to integrate AI solutions, and you can read some of the case studies on their website.

Reputational risk when implementing AI tools

Watch this video from Stuart Pearson where he discusses the importance of organisations being aware of reputational risk when implementing AI tools.

What principles does Stuart identify as important when thinking about implementing AI tools?

Discussion

Stuart discusses the importance of organisations being aware of reputational risk and thinking about how AI can assist, not replace people. Organisations should consider their existing practices, and ensure they establish guard rails. They should involve stakeholders, act with transparency, and make sure there is human contact and oversight.

Citizens Advice SORT has adopted an approach to integrating AI tools that aligns with the strategies of Citizens Advice Scotland and the NSPCC. These organisations have placed strong emphasis on the responsible use of AI, ensuring that the technology supports and does not undermine the core work and values of the organisation.

People’s Panel for Artificial Intelligence

Advice and voluntary organisations that are considering adopting AI solutions might want to think about how they involve their communities in their decision-making.

The People’s Panel for Artificial Intelligence (PPfAI) is a project led by Dr Annabel Latham and Professor Keeley Crockett at Manchester Metropolitan University and funded by The Alan Turing Institute. It brings together a diverse group of citizens to learn about, discuss and share their views around the use of data and artificial intelligence in people’s everyday lives. The Panel explores both the positive and negative consequences of new research ideas, products and/or services presented to them by businesses and researchers, and they give their opinions and feedback.

The Panel is designed to give a voice to citizens in communities and empower them to have the confidence to question the use of technology.

Through a 2022 Public Engagement Grant from The Alan Turing Institute, they set up the Greater Manchester People’s Panel on AI. A follow-on project funded by the Manchester City Council Digital Strategy Team (2024) expanded the panel, and Citizens Advice SORT presented Caddy to it in July 2024.

Watch this video from Stuart Pearson where he talks about the Citizens Advice SORT presentation to the Greater Manchester People’s Panel on Caddy in July 2024.

The panel raised questions and conducted consequence scanning based on the project presentation and then provided feedback. Consequence scanning is a method where people discuss openly and provide their opinions on the intended/unintended consequences, potential harms or disadvantages of the technology being created.

The panel fed back with questions and comments on:

- Who are the stakeholders?

- What are the intended consequences of using this technology?

- What are the unintended consequences of using this technology?

- What are the positive consequences of using the technology that the presenter focused on?

- What are the consequences of using this technology that the presenter wants to mitigate?

These are important questions that all organisations should consider when implementing new technologies. Engaging with the People’s Panel offers a valuable opportunity to involve citizens in their communities, helping to ensure that the adoption of AI solutions is informed, inclusive and transparent.

Further reading

If you are interested, you can learn more about another project – UKRI RAi: Public Voices in AI – which also aims to ensure that public voices are front and centre in artificial intelligence research, development and policy.

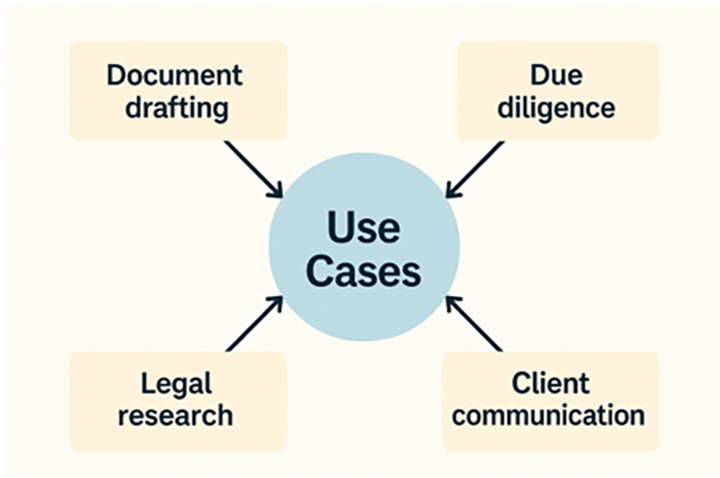

6 GenAI use cases in the legal sector

AI within law firms is not new. Large law firms have been using AI for due diligence, document management and contract review for a while, but GenAI has created new opportunities, which may enhance efficiency, reduce costs and allow lawyers to focus on higher-value tasks.

According to Bloomberg Law (2025), GenAI tools are supporting lawyers in legal practice with tasks such as the following.

| Application area | Description |
| --- | --- |
| Drafting correspondence | Assisting with client emails, letters and internal communications. |
| Legal research | Retrieving and summarising case law, statute, and legal commentary. |
| Summarising | Condensing legal documents, judgments and contracts. |
| Retrieving documents | Analysing legal documents for key terms, risks, consistency and data extraction. |
| Due diligence | Scanning and summarising large volumes of material during transactions. |
| Discovery | Identifying relevant documents in litigation. |
| Negotiating contracts | Suggesting edits and alternatives during contract negotiation and clause comparisons. |
| Preparing case papers | Assembling and drafting case bundles, skeleton arguments and briefings. |
| Estate planning | Assisting in drafting wills and trusts. |

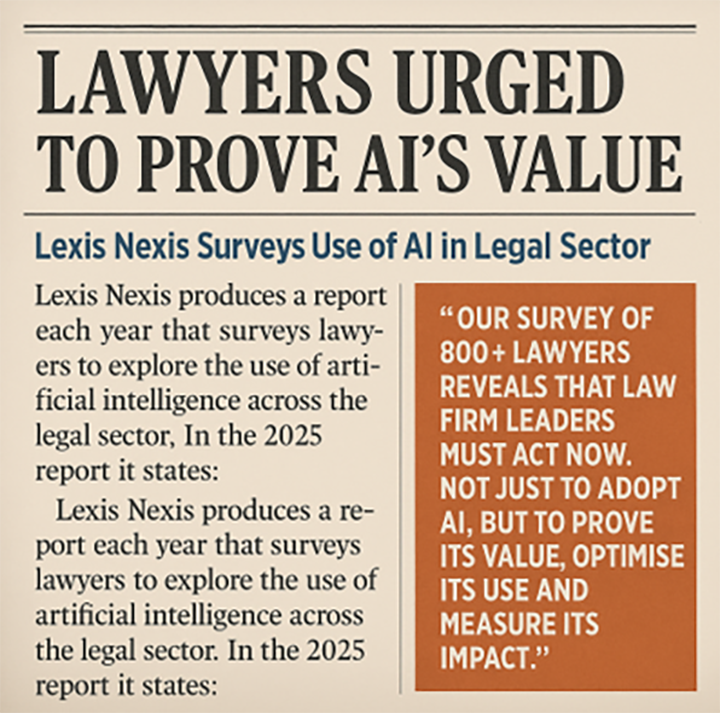

In addition, Smokeball (2025) says that time tracking, client intake, legal calendaring, knowledge asset search and workflow, matter management, and invoicing and billing are activities that could be supported by GenAI tools. LexisNexis produces an annual report, based on a survey of lawyers, that explores the use of AI across the legal sector. The 2025 report states:

‘Our survey of 800+ lawyers reveals that law firm leaders must act now. Not just to adopt AI, but to prove its value, optimise its use and measure its impact.

Without clear AI success metrics, firms risk wasted investment, inefficiency and even an exodus of skilled professionals.’

The report is based on 807 responses from the UK. This is a relatively small sample when set against Solicitors Regulation Authority (SRA) data, which recorded 9,178 law firms in February 2025 (SRA, 2025).

The report’s ‘must act now’ message suggests that law firms should act urgently, but the quotes within the report suggest a more pragmatic approach, acknowledging AI’s potential value whilst emphasising the need for careful implementation and clear success criteria.

Large law firms are adopting GenAI tools: Mishcon de Reya has developed deReyAI, an inhouse tool, and is combining it with Legora; Simmons and Simmons uses Percy; Linklaters has Laila; and some law firms are now using Harvey AI. Harvey AI will be discussed in more detail later in the section.

However, as Laura Hodgson, AI Lead at Linklaters, highlights in the LexisNexis (2025) report: ‘Law firms are yet to publish hard data on the efficiency gained through using new AI tools because getting tools to a point of adoption where real value can be measured has been a challenge’.

The Legal Trends for Mid-Sized Law Firms, published by Clio (2025) in the US, found that mid-sized firms were using AI-powered legal research platforms, generic non-legal AI tools, document drafting or automation tools, eDiscovery solutions and predictive legal analytics. Mid-sized law firms were more likely than smaller firms to be using these solutions. Time saving and efficiency were considered to be the biggest drivers for AI adoption.

Other research from the US (Smokeball, 2025) states that firms are cautious about AI adoption because of cost, training and ethical concerns. They also note that smaller firms are behind because of cost and integration barriers.

Research from Macfadyen and Bish (2025) on behalf of the Bar Standards Board has looked at the use of technology to support legal work at the bar. The research found that some barristers were using ‘everyday’ AI tools such as Microsoft Copilot and ChatGPT. Adoption of technology at the bar was mixed, but barristers could see the benefits of further adoption. The research also highlighted some of the barriers, which included the fragmented structure of the bar and the independent nature of practice.

While these new tools may offer benefits, it is important that firms approach any investment with caution, ensuring they have a clear understanding of how the tool will add value and establishing success metrics from the outset.

Legal use cases

Document drafting

AI can assist with drafting legal documents using predefined templates and input data. With the correct prompts, AI can draft legal briefs, contracts, waivers and memos as a starting point, which a human is then able to review and amend. This streamlines the drafting process. Reviewing and checking the information provided by AI can also help to develop legal drafting skills and consolidate understanding.

Most importantly, these documents must be created with human input and guidance; otherwise the risk of incorrect information is significant. AI may include prohibited provisions or incorrect contract clauses. For example, AI may create a contract which has a clause to exclude liability for death or personal injury as a result of negligence – which is prohibited under the Unfair Contract Terms Act 1977.

Due diligence

AI can automate the review of large volumes of documents, identifying potential risks, issues or any information which stands out. AI tools are also able to generate due diligence reports, summarising findings and highlighting the key points.

In the ‘Further reading’ section later in this course, there is a link to a research paper, The RAG Report: Large Language Models in Legal Due Diligence (Addleshaw Goddard, 2024), which explores the development of an inhouse LLM for mergers and acquisitions transaction due diligence and commercial contract review.

GenAI can make mistakes, so the onus is still on the human providing the prompt to check that the summary is accurate and complete.

Legal research

Listen to this audio clip in which Henry Sturm talks about legal research tools.

GenAI has the potential to revolutionise legal research by automating the analysis of vast amounts of case law and legal precedents. This technology enables users to find relevant information quickly, save time by summarising lengthy legal documents, and make complex cases more accessible and easier to understand. However, it is important to remember that LLMs can hallucinate and make mistakes. Damien Charlotin has created a website with a database that seeks to track the use of AI-hallucinated cases in legal decisions. If you are using LLMs, you are responsible for checking the output.

Client communication

AI powered chatbots can play a vital role in client communication by answering common legal questions and providing initial guidance. They can provide instant responses and are available 24/7 so can offer immediate assistance to clients.

Some law firms have integrated them into their website to explain legal processes and firm services to clients in accessible language, automating responses to FAQs about legal timelines, document requirements, and case updates. They can provide consistent, branded responses while escalating complex matters to human lawyers.

Here are some examples of tools.

Harvey AI

Harvey AI is an advanced artificial intelligence platform specifically designed to enhance the efficiency and accuracy of legal professionals. By leveraging the power of machine learning and natural language processing, Harvey AI assists with a wide range of legal tasks, including legal research, contract analysis, document drafting and regulatory compliance. By automating these time-consuming processes, Harvey AI enables lawyers to focus on higher-value activities such as strategy development and client interactions.

The platform uses LLMs to assist lawyers, law firms and corporate legal departments. It provides tools for legal professionals to draft documents, analyse contracts, conduct legal research and generate insights from case law quickly and efficiently. Developed by a team of AI researchers and legal experts, Harvey AI integrates technologies like OpenAI’s GPT-based models to understand complex legal queries and provide relevant, reliable and actionable responses. The AI aims to enhance decision-making, reduce manual workloads and improve accuracy in legal documentation.

Harvey AI operates through advanced machine learning algorithms and natural language processing capabilities. It processes vast amounts of legal texts, case law, regulations and statutory materials. By understanding and interpreting legal queries in plain language, Harvey AI allows lawyers to interact with it naturally. Using deep learning, Harvey AI refines its accuracy by learning from past cases, user interactions and historical legal data. It can quickly scan through legal databases to provide relevant case law and citations, assist in drafting contracts and legal agreements, and review legal documentation for inconsistencies or potential risks. Additionally, Harvey AI helps firms stay compliant with legal regulations by analysing policies and detecting any areas of non-compliance.

Several prominent UK law firms have adopted Harvey AI. These firms use Harvey AI to perform tasks such as due diligence, litigation support and compliance analysis, significantly improving productivity and reducing manual workloads.

AI Matter Assistant (Archie)

Archie automates routine legal tasks. It has been designed to support law firms with summarising documents, drafting letters or emails, answering matter-specific questions and conflict checking and risk analysis. It has been created by a US company as part of its document management system.

LegalMation

LegalMation is a US company that brings together lawyers and technologists to design solutions for law firms. It has a series of tools that use AI to support litigation and dispute resolution and is being used by law firms, corporate legal departments and insurance companies. To learn more about this tool watch this short video.

Automated contract review

There is a proliferation of AI contract review tools, such as Kira Systems and Luminance, that can analyse contracts to identify key clauses, risks and compliance issues. These tools use machine learning algorithms to quickly process large volumes of documents, saving time and reducing human error. Kira Systems can extract and analyse clauses from contracts, providing insights into potential risks and obligations. Consequently, these tools can increase efficiency, accuracy and consistency in document review. Other examples include Juro, Spellbook and Lexion, and this article suggests the nine best AI contract review tools.

Predictive analytics

AI tools like Lex Machina and Premonition can analyse historical case data to predict litigation outcomes. These tools use advanced algorithms to identify patterns and trends, helping lawyers make informed decisions. Lex Machina provides data-driven insights into case outcomes, judge behaviour, and opposing counsel strategies – improving decision-making, strategic planning, and risk assessment.

AI in judicial decision-making

The University of Ulster is researching the role of AI in judicial decision-making. The research will develop ethical guidelines and policy recommendations about the adoption of AI across the courts system.

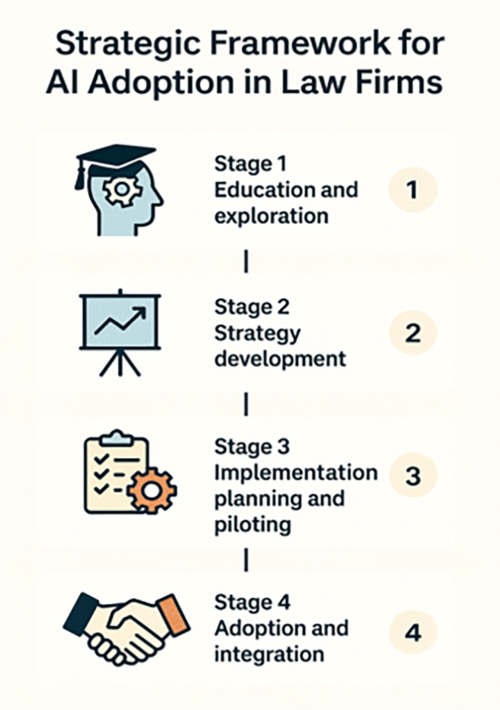

For organisations considering the adoption of GenAI tools, the final sections of this course explore a practical framework for AI adoption, along with processes to support effective change management.

Strategic framework for AI adoption in law firms

GenAI tools can be applied to both legal and non-legal tasks. When considering which tools to adopt:

- Engage with different vendors.

- Conduct thorough due diligence to evaluate risks and capabilities.

- Develop a clear implementation plan that aligns with the firm’s overall strategy and professional obligations.

The third course, Key considerations for successful Generative AI adoption, explored how to create a GenAI strategy. Below is a framework that organisations can adapt to guide their thinking around AI adoption.

| Objective | Key activities | Success criteria |
| --- | --- | --- |
| Learning about GenAI, experimenting, and understanding capabilities. | Organise educational workshops for staff on GenAI fundamentals. Create cross-functional working groups with both legal and technical staff. Allocate dedicated time for hands-on exploration of GenAI tools. Review industry case studies of AI implementation in legal settings. Speak to vendors. Identify internal champions and sceptics to ensure balanced perspectives. Document initial impressions, concerns, and potential opportunities. | Knowledge assessment scores pre/post training. Percentage of staff participating in AI workshops. Quality of internal discussions and questions raised. Initial inventory of potential use cases identified. Initial inventory of potential vendors. |
| Creating a tailored approach that aligns with firm priorities. | Identify and prioritise specific use cases. Map legal and regulatory requirements for AI adoption. Create preliminary resource allocation plan. | Completion of comprehensive use case analysis. Documentation of legal/regulatory compliance framework. Establishment of governance structure. Sign off and approval of strategic direction. Clear definition of ‘minimum viable success’ for initial implementation. |
| Targeted testing of AI solutions in controlled environments. | Select specific tools for pilot testing based on strategy. Design pilot projects. Establish robust measurement frameworks. Develop feedback mechanisms. | Completion of planned pilots within timeline. Quality of data collected from pilot projects. Specific improvements identified in workflow efficiency. Documented Return On Investment (ROI) calculations for each use case. |
| Scaling successful implementations and embedding into firm operations. | Select tools and processes for firm-wide deployment based on pilot results. Develop comprehensive training programs. Create integration plans for existing systems. Establish continuous improvement mechanisms. Develop client communication approach. | Adoption rates across practice areas. Efficiency gains (time/cost). Client feedback on AI-assisted work. Staff satisfaction with AI tools. Impact on recruitment and retention. Revenue implications (cost savings and/or new service offerings). Competitive positioning enhancement. |
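The framework lists documented ROI calculations among its success criteria but does not prescribe a formula. A common definition is the benefit minus the cost, divided by the cost. The sketch below applies that definition to a pilot; the function name and all of the figures are hypothetical and are included only to show the arithmetic.

```python
def roi(benefit: float, cost: float) -> float:
    """Return on investment as a fraction: (benefit - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


# Hypothetical pilot figures for illustration only.
hours_saved = 120      # staff hours saved during the pilot
hourly_rate = 50.0     # assumed value of one staff hour
pilot_cost = 4000.0    # assumed licence and training cost

print(f"{roi(hours_saved * hourly_rate, pilot_cost):.0%}")  # 50%
```

In practice, the hard part is agreeing how to measure the benefit, which is why the framework asks for measurement approaches to be established during the pilot stage.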

Best practice throughout all stages

Click on each label below to read more.

7 Change management

An organisation’s decision to implement AI tools should be embedded within its broader strategic plan.

However, even with a sound strategy, technology projects often fail because the tools are not effectively adopted by users within the organisation. Change is inherently difficult, particularly when it involves adopting new technologies. Without effective change management processes in place, organisations may encounter a range of issues, including staff resistance, reduced productivity and, ultimately, failure to successfully implement the technology.

Change management processes can help to:

- Minimise resistance: staff should be informed and involved in the change process and understand and see the benefits of the new technology.

- Focus on people: staff should be part of the change process to ensure their engagement and adoption of the new technology.

- Transition processes: change management processes should ensure that the transition to the new technology is planned, ordered and managed to minimise disruption and risk.

Change management models

There are different change management models. One example is the ADKAR Model, a five-step model developed by Jeff Hiatt in 2003 (Angtyan, 2019):

- (A) Awareness of the need for change.

- (D) Desire to support and participate in the change.

- (K) Knowledge of how to change.

- (A) Ability to implement the change.

- (R) Reinforcement to sustain the change.

This model emphasises individual change, aiming to support each person in the organisation through the transition process. One of its key advantages is that it provides managers with a clear, outcome-focused framework, shifting the emphasis from simply completing tasks to achieving meaningful change.

As organisations move toward implementing GenAI solutions, effective change management will be essential. A thoughtful, well-planned approach is critical to maximising adoption and ensuring long-term success. If you want to explore other change models, you can read about the top ten change management models.

Next steps

To move forward, organisations should:

- Set and clearly communicate goals.

- Build a cross-functional team.

- Develop a well-defined strategy.

- Create a detailed implementation plan.

- Plan internal and external communications.

- Establish mechanisms for continuous improvement.

Throughout this course, we have explored the importance of having strategies, frameworks, and models to guide the adoption of GenAI tools.

While time has only allowed us to provide an overview, our goal is to raise awareness and help organisations think about the questions they need to ask. This includes identifying potential use cases, understanding the implications of implementation, and planning thoughtfully for roll-out and adoption.

By taking a structured and reflective approach, organisations can lay the foundation for successful and responsible use of GenAI solutions. To learn more about change management you can enrol on the free Open University course, which explores managing and leading change.

8 Conclusion

This course has explored potential use cases for GenAI, and we hope it has provided some insights into how AI tools might be adopted.

The second course, Skills and strategies for using Generative AI, explored skills and strategies for GenAI. When it comes to thinking about use cases, the importance of playing with tools cannot be overstated.

It is essential to encourage people in your organisation to play with the tools to develop skills. Playing with these tools allows individuals to explore their capabilities, understand their limitations, and discover innovative ways to leverage them. This hands-on approach fosters creativity and problem-solving skills.

Although GenAI has the potential to transform the workplace, it is important to remember that skills like critical thinking, empathy, creativity, and collaboration are becoming increasingly valuable because AI cannot replicate them (Randa, 2024).

Rather than viewing AI as a replacement for humans, the better model is augmented intelligence – AI that enhances rather than substitutes human input. The World Economic Forum (2025) stresses that uniquely human skills must be developed in tandem with technological adoption to avoid skill gaps and displacement.

If AI takes over routine cognitive work, the competitive edge will shift toward emotional intelligence, communication, and ethical reasoning. This shift is discussed in further detail in the eighth course, Preparing for tomorrow: horizon scanning and AI literacy.

In the next course, you will explore Responsible and Ethical use of Generative AI.

Moving on

When you are ready, you can move on to the Course 4 quiz.

Website links

AI Hallucination Cases Database – Damien Charlotin

AI is transforming the world of work, are we ready for it? | FT Working It

CivTech Challenge 8.1 — Identifying and prioritising support — CivTech

Consequence Scanning – an agile practice for responsible innovators – doteveryone

Contract Review – Powered by AI

Embracing AI: How charities like us can benefit | The Herald

Generative AI: Beyond The Theory Webinar Series | Addleshaw Goddard LLP

Generative AI for legal professionals: Its growing potential and top use cases

How to train and configure GenAI tools | ICAEW

Introduction to AI Assurance

People’s feelings about AI: an evidence review

People's Panel for Artificial Intelligence

Percy – Our unique Generative AI tool, purpose-built in house

The Rag Report: Large Language Models in Legal Due Diligence

Refreshed AI Guidance published version – website version

Report on AI and the public good

Report on a survey of AI researchers

Research: Greater Manchester People’s Panel for AI

Responsible AI Toolkit – GOV.UK

Tech Horizons report 2024 | ICO

The tipping point: Measuring the success of AI in legal services

Tackling a new legal landscape with AI

References

Addleshaw Goddard (2024) The RAG Report: Large Language Models in Due Diligence. Available at: https://www.addleshawgoddard.com/globalassets/insights/technology/llm/rag-report.pdf (Accessed 14 April 2025).

Angtyan, H. (2019) ‘ADKAR Model in Change Management’, International Review of Business and Management Research, 8(2), pp. 179–182. Available at: https://www.irmbrjournal.com/papers/1560753273.pdf (Accessed 16 April 2025).

Bloomberg Law (2025) AI for Legal Professionals. Available at: https://pro.bloomberglaw.com/insights/technology/ai-in-legal-practice-explained/#legal-ai-tools-and-technology (Accessed 14 April 2025).

Clio (2025) Legal Trends for Mid-Sized Law Firms. Available at: https://www.clio.com/wp-content/uploads/2025/01/2025-MM-Legal-Trends-for-Mid-Sized-Law-Firms.pdf (Accessed 14 April 2025).

Cole, N. and Bush, D. (2025) Write with AI. Available at: https://writewithai.substack.com/ (Accessed 16 April 2025).

Financial Times (2024) AI is transforming the world of work, are we ready for it? | FT Working It, 2 December. Available at: https://www.youtube.com/watch?v=hQX_wIW9Nh0 (Accessed 17 June 2025).

Harvey AI (n.d.) Professional Class AI. Available at: https://www.harveyai.com (Accessed 17 June 2025).

Litera Kira (n.d.) Contract Reviews Powered by AI. Available at: https://www.litera.com/products/kira (Accessed 17 June 2025).

LexisNexis (2025) The tipping point: Measuring the success of AI in legal services. Available at: https://www.lexisnexis.co.uk/insights/measuring-the-success-of-ai-across-the-law/index.html?utm_source=All%20Subscribers&utm_medium=email&utm_content=Main+CTA&utm_campaign=603983_EM2+-+Generative+AI+H1+2025_RET_ACA (Accessed 14 April 2025).

Macfadyen, J. and Bish, D. (2025) Technology at the Bar Research: Report for the Bar Standards Board. Available at: https://www.barstandardsboard.org.uk/static/370d1003-9533-4316-87ba75f68a41c357/Tech-at-the-Bar-2025.pdf (Accessed 22 May 2025).

Randa, K. (2024) ‘The human edge: Why soft skills matter more than ever in the AI era’, Fast Company, (29 August). Available at: https://www.fastcompany.com/91178670/the-human-edge-why-soft-skills-matter-more-than-ever-in-the-ai-era (Accessed 31 March 2025).

Smokeball (2025) State of Law: Adapting for resilience and growth in a tech-driven age. Available at: https://go.smokeball.com/2025_state_of_law_report?utm_campaign=sol_download&utm_medium=email&utm_source=content (Accessed 14 April 2025).

Solicitors Regulation Authority (SRA) (2025) Breakdown of solicitor firms. Available at: https://www.sra.org.uk/sra/research-publications/regulated-community-statistics/data/solicitor_firms/ (Accessed 14 April 2025).

World Economic Forum (2025) Elevating uniquely human skills in the age of AI. Available at: https://www.weforum.org/stories/2025/01/elevating-uniquely-human-skills-in-the-age-of-ai/ (Accessed 31 March 2025).

Acknowledgement

Grateful acknowledgement is made to the following sources:

Every effort has been made to contact copyright holders. If any have been inadvertently overlooked the publishers will be pleased to make the necessary arrangements at the first opportunity.

Important: *** against any of the acknowledgements below means that the wording has been dictated by the rights holder/publisher, and cannot be changed.

Course banner 557600: Creativa Images / Shutterstock

557951: Created by OU artworker using OpenAI. (2025). ChatGPT 4

557959: Created by OU artworker using OpenAI. (2025). ChatGPT 4

557995: Created by OU artworker using OpenAI. (2025). ChatGPT 4

558046: Created by OU artworker using OpenAI. (2025). ChatGPT

558058: Created by OU artworker using OpenAI. (2025). ChatGPT

558125: Created by OU artworker using OpenAI. (2025). ChatGPT 4

558132: Created by OU artworker using OpenAI. (2025). ChatGPT

558260: Created by OU artworker using OpenAI. (2025). ChatGPT 4

558273: Created by OU artworker using OpenAI. (2025). ChatGPT

558245: Created by OU artworker using OpenAI. (2025). ChatGPT

558355: Created by OU artworker using OpenAI. (2025). ChatGPT

558363: Created by OU artworker using OpenAI. (2025). ChatGPT