Printable page generated Sunday, 23 November 2025, 7:03 AM

Navigating risk management

Introduction

Use of AI tools and Generative AI (GenAI) tools in particular is rapidly increasing, with GenAI capabilities added to everyday technologies and software, as well as bespoke tools. This does not mean that the use of such tools is problem free, particularly for organisations.

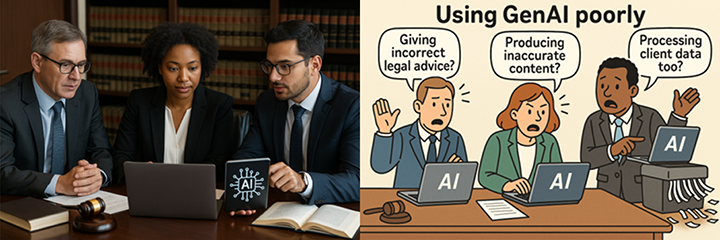

In May 2023, New York lawyer Steven A Schwartz cited a number of entirely fictitious legal cases in legal documents, having relied on GenAI (ChatGPT) to supplement his legal research. Six of the seven cases referred to were entirely bogus. When required to explain his actions, Schwartz indicated that he was “unaware that [ChatGPT] content could be false” (Armstrong, 2023).

This example may make you laugh or groan at the actions of legal professionals against the backdrop of GenAI (mis)use. However, although it is a simple, small-scale example, it illustrates some of the very real risks that arise with the use of GenAI tools in organisations – and how the use of such tools should be managed.

This course will explore the considerations and concerns surrounding the use of Generative AI tools in organisations. Particular areas of concern arise with issues such as data protection, professional accountability, and the integrity of client-facing services.

It considers some of the interplay between humans and non-humans and discusses some of the main risks associated with the use of GenAI, including considerations for managing and mitigating the risks posed by the growing use of GenAI tools.

It also assumes that you have some understanding of how GenAI and Large Language Models (LLMs) work. If you are not sure about this, or would find a refresher useful, we recommend you start with the first course in the series, Understanding Generative AI.

This is a self-paced course of around 180 minutes including a short quiz. We have divided the course into sections, so you do not need to complete the course in one go. You can do as much or as little as you like. If you pause you will be able to return to complete the course at a later date. Your answers to any activities will be saved for you.

Course sessions

The sessions are:

Session 1: GenAI: To use or not to use? – 20 minutes (Section 1)

Session 2: GenAI: A risky business? – 120 minutes (Sections 2 to 11)

Session 3: Mitigating AI risks – 40 minutes (Sections 12 onwards)

A digital badge and a course certificate are awarded upon completion. To receive these, you must complete all sections of the course and pass the course quiz.

Learning outcomes

After completing this course, you will be able to:

Explain the key risks posed by using GenAI within your organisation.

Identify ways to mitigate the key risks of using GenAI.

Understand the importance of assessing and interrogating GenAI models before using them in your organisation.

Glossary

A glossary that covers all eight courses in this series is always available to you in the course menu on the left-hand side of this webpage.

Session 1: GenAI: To use or not to use? – 20 minutes

1 Key areas of responsibility

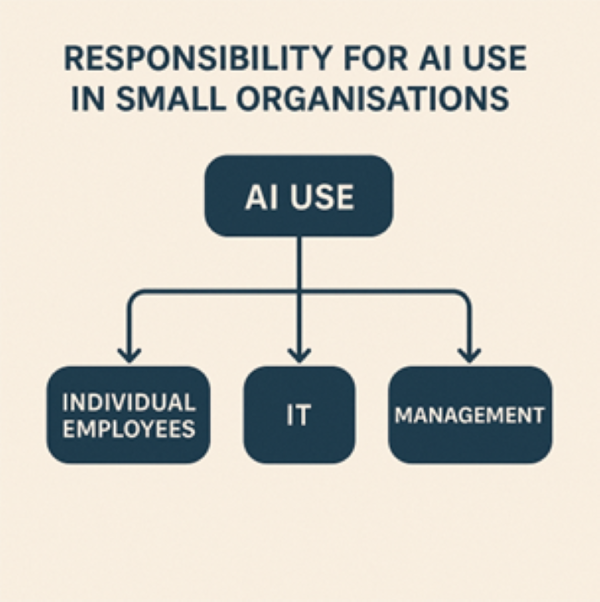

Effective and ethical use of AI in small organisations requires clear assignment of responsibilities across four core groups. The distribution of these roles ensures accountability, governance, and appropriate risk mitigation.

AI use (central activity):

Implementation, oversight, and outcome of AI tools and systems lie at the heart of operational responsibility.

This includes both the selection of AI tools and their ongoing use.

Individual employees:

Responsible for understanding acceptable uses of AI within their role.

Expected to follow organisational AI guidelines and data handling protocols.

Required to flag inappropriate use or unexpected AI behaviours.

IT function:

Ensures technical security, compliance, and infrastructure readiness for AI deployment.

Maintains and monitors AI systems to ensure reliability and ethical performance.

Provides support for integrating AI into business processes securely.

Management:

Defines organisational policy and strategic direction for AI use.

Oversees risk management, regulatory compliance, and ethical considerations.

Approves use cases and ensures alignment with business objectives.

Before using Generative AI effectively, it is important to understand what tools are available and how accessible they are to your organisation. This means checking whether the right technology and software are already in place and making sure staff can actually use them.

It’s also a good idea to begin with internal projects. This gives your team a chance to learn how to use AI safely and effectively in a low-risk setting before applying it to client-facing or public services. Investing in the right GenAI tools – those that match your goals and workflows – is a smart first move.

Transparency is essential when using GenAI (Gartner, 2024). It is important to always be clear when content or decisions involve AI. People should know when they’re seeing or using something that’s been created by a machine.

Which tools or processes are in place?

From your knowledge of your organisation, think about which tools or processes are in place.

Make a note of:

a) Any GenAI tools that you know are currently used.

b) Any processes or tasks that could benefit from GenAI tools.

c) Areas where you think GenAI may be used in future.

Discussion

There are a number of different areas within organisations where GenAI tools may be beneficial or may be used to assist with efficiency.

Where GenAI tools are being deployed, it is important to understand the risk level, the training and knowledge needs of those using the GenAI tools, and the potential risk mitigation steps taken to avoid errors, inaccuracies, and a lack of transparency. This course considers these in more detail.

As technology and AI developments are rapid, you may also identify additional risks or areas of risk that have emerged or become prominent since this course content was developed.

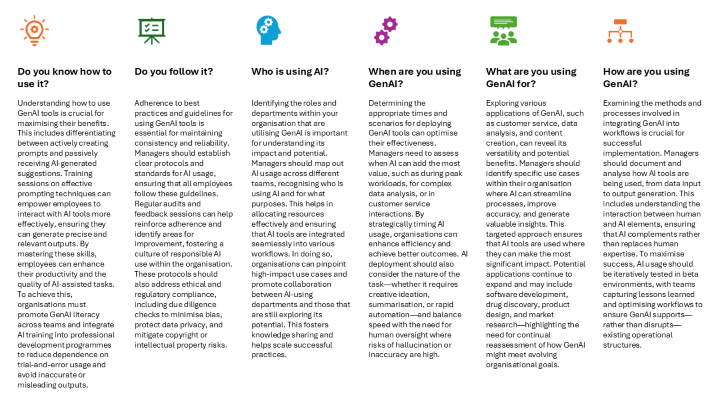

There are a number of key questions to ask when considering if, or how, an organisation should be using AI.

Each of these key questions is considered in the infographic below.

As you have learnt about the different practical questions surrounding when and why you are using GenAI as an organisation, hopefully you have already started to think about why it is important to be aware of these issues when using GenAI.

As well as the possibility of individual harms to employees, clients, or others due to inaccurate, misleading, or incorrect outputs, law firms and other organisations can also be harmed by inappropriate use of GenAI.

Your organisation against a backdrop of using GenAI

Thinking about the key questions you have just explored, take the time to consider – for your organisation – the answers to these:

Do you know how to use it?

Do you follow it?

Who is using AI?

When are you using GenAI?

What are you using GenAI for?

How are you using GenAI?

Discussion

This activity has asked you to consider the position of your organisation against a backdrop of using GenAI, and to think about the wider context to using GenAI.

The next session now uses that context to explore some of the risks that can arise when using GenAI.

Session 2: GenAI: A risky business? – 120 minutes

2 AI risks

GenAI is becoming much more integrated into decision-making processes across organisations and sectors. As its use spreads rapidly, the risks associated with it are also increasing.

This session explores some of the main legal, ethical, and operational challenges that arise when AI systems produce inaccurate, biased, or opaque outcomes. It also highlights why accuracy and reliability are essential, how data governance and privacy frameworks apply, and what organisations should do to ensure AI is deployed responsibly.

What might some of the risks be?

Thinking about GenAI, what do you think some of the key risks might be?

Make a note of these below.

Discussion

This part of the course considers issues such as intellectual property infringement, algorithmic bias, and professional accountability – particularly in regulated sectors – and offers some considerations of what is needed to manage GenAI risks effectively, ensuring not just technical performance, but also public trust, legal compliance, and ethical alignment.

To ensure appropriate governance, managers must assess how extensively their teams are utilising GenAI tools – and define clear boundaries between automated processes and human judgment.

This evaluation should explore three key considerations.

Task criticality

Determine whether GenAI is being applied in high-risk or sensitive domains such as healthcare, legal advice, or regulatory reporting. In these cases, robust human oversight must be mandatory.

Frequency of use

Review whether GenAI is embedded in routine workflows or reserved for occasional, project-specific applications. This will inform appropriate oversight and resource allocation.

Level of autonomy

Clarify the role GenAI plays: is it generating final outputs independently, or is it functioning as a support tool offering content suggestions for human validation?

This evaluation process will help to identify where GenAI is being used, or is planned to be used, within an organisation. Once this information has been identified, the risks can be considered in more detail.

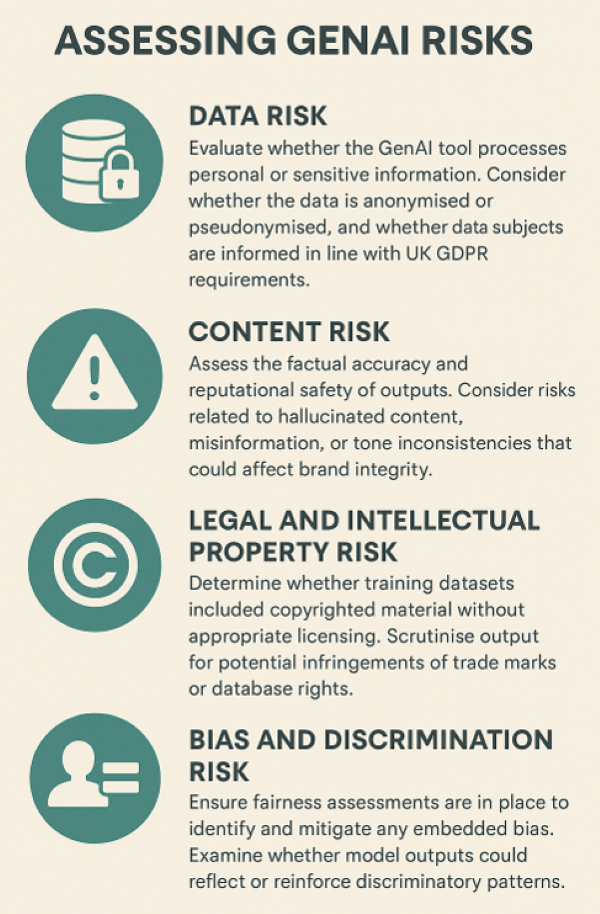

GenAI introduces a spectrum of emerging and complex risks, particularly in areas where data, ethics, and legality intersect. These risks encompass privacy breaches, algorithmic bias, misinformation, copyright infringement, cybersecurity threats, and potential reputational harm.

To mitigate these challenges, organisations are advised to undertake a comprehensive risk assessment for each GenAI use case. This process should be led by managers and informed by multidisciplinary stakeholders, including legal counsel, IT professionals, compliance officers, and ethics advisors.

Utilising a risk matrix – categorising risks by both likelihood and impact – can support effective decision-making and guide proportionate mitigation measures. It can also help to determine the key areas of risk.
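As a rough illustration of how a risk matrix can turn likelihood and impact into a priority order, the sketch below scores each risk as likelihood multiplied by impact. The 1 to 5 scales, the band cut-offs, the example risks, and the names `Risk`, `rating`, and `prioritise` are all assumptions made for this example; the course does not prescribe a particular scoring scheme.

```python
from dataclasses import dataclass


# Hypothetical 1-5 scales; an organisation would agree its own definitions.
@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain)
    impact: int      # 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple multiplicative score, a common convention rather than a rule.
        return self.likelihood * self.impact


def rating(risk: Risk) -> str:
    """Map a score to a band; the cut-offs are illustrative assumptions."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 6:
        return "medium"
    return "low"


def prioritise(risks: list[Risk]) -> list[Risk]:
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Made-up example entries for one GenAI use case.
risks = [
    Risk("Hallucinated legal citation", likelihood=4, impact=5),
    Risk("Copyright infringement in outputs", likelihood=2, impact=4),
    Risk("Minor formatting errors", likelihood=3, impact=1),
]

for risk in prioritise(risks):
    print(f"{risk.name}: score {risk.score} ({rating(risk)})")
```

Ranking by score is only a starting point for discussion: a low-likelihood risk with severe impact may still deserve attention ahead of its position in the list.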

As highlighted in the UK Government’s AI Opportunities Action Plan (2025), there is a growing imperative for organisations to implement robust risk governance frameworks. Proactive, structured oversight of AI systems will be essential to ensure ethical compliance and operational resilience for all organisations.

3 Accuracy and reliability

Ensuring that AI systems deliver accurate and reliable outputs is essential in fields that impact people’s rights and wellbeing – like healthcare, finance, and law.

Accuracy measures how closely an AI system’s outputs align with the correct or intended results. Reliability refers to the system’s ability to maintain this accuracy consistently across different scenarios and over time.

As GenAI adoption increases, and with increasing adoption of Large Language Models (LLMs), hallucinations – confident but incorrect outputs – have emerged as a particular concern affecting accuracy, reliability, and trust.

Generative AI, particularly LLMs, is prone to these 'hallucinations'. These errors can erode trust, harm individuals, and undermine the credibility of services. In legal and medical settings, professionals remain accountable for AI-supported decisions. Regulatory bodies like the Solicitors Regulation Authority emphasise that AI cannot replace the need for competent, human judgment (Solicitors Regulation Authority, 2022).

To mitigate risks, organisations can invest in rigorous testing and monitoring. Benchmarking against industry and human standards, ensuring dataset diversity, using clear indicators of AI confidence, and training staff to critically evaluate AI outputs are all vital.

Real-time monitoring, feedback loops, and periodic retraining can help prevent performance drift and maintain operational standards. This should be an ongoing process rather than a one-off exercise. Emerging best practice suggests that AI dashboards, performance metrics, and automated anomaly alerts are becoming more commonplace (Evidently AI, 2025).
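One way to picture ongoing monitoring is a rolling check of how often human reviewers judge recent AI outputs to be correct, compared with the accuracy measured when the tool was first validated. The sketch below is a minimal example under stated assumptions: the class name `AccuracyMonitor`, the window size, the baseline, and the tolerance are all hypothetical, and it is not taken from any particular monitoring product.

```python
from collections import deque
from typing import Optional


class AccuracyMonitor:
    """Tracks reviewer verdicts on recent AI outputs and flags drift."""

    def __init__(self, baseline: float, window: int = 50, tolerance: float = 0.05):
        self.baseline = baseline    # accuracy measured at validation time
        self.tolerance = tolerance  # permitted drop before raising an alert
        self.results = deque(maxlen=window)  # keeps only the latest verdicts

    def record(self, was_correct: bool) -> None:
        """Store one human verdict on an AI output."""
        self.results.append(was_correct)

    def rolling_accuracy(self) -> Optional[float]:
        """Share of correct outputs in the current window, if any exist."""
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def drift_detected(self) -> bool:
        # Wait for a full window so a few early errors do not trigger an alert.
        if len(self.results) < self.results.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


# Illustrative run: eight correct outputs followed by two incorrect ones.
monitor = AccuracyMonitor(baseline=0.95, window=10, tolerance=0.05)
for verdict in [True] * 8 + [False] * 2:
    monitor.record(verdict)

print(monitor.rolling_accuracy(), monitor.drift_detected())
```

In practice the verdicts would come from the feedback loops described above, and an alert would prompt investigation or retraining rather than an automatic response.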

It is important to have rigorous processes in place for testing, validation, and monitoring across the AI lifecycle, particularly with the rapid evolution of GenAI.

4 Reverse engineering

Reverse engineering in AI allows experts to dissect how a system makes decisions, especially when dealing with opaque 'black box' models. This process can support accountability and fairness by revealing whether systems operate without discrimination and within legal boundaries.

What is Reverse Engineering?

Reverse engineering is the process of analysing and deconstructing an existing AI system. This process helps to understand how an AI system works, and helps us to understand the decision-making processes used in particular AI models.

Reverse engineering is important when it comes to dealing with opaque systems – so-called ‘black box’ models – as it can help to examine potentially biased or discriminatory outputs (Information Commissioner's Office and The Alan Turing Institute, 2022).

Reverse engineering is important in high-risk fields such as policing, hiring, and healthcare. However, it can raise concerns over intellectual property rights and confidentiality, especially where AI systems are protected under trade secret laws. UK law generally permits reverse engineering of legally acquired products unless restricted by contract.

From an ethical perspective, reverse engineering is crucial for identifying flaws that could affect public safety or violate rights. It allows regulators and researchers to simulate vulnerabilities and verify that systems behave as intended. Current UK and EU policy discussions are exploring how to balance innovation with transparency through regulated access to AI models for scrutiny and conformity assessments.

It is becoming increasingly important within Europe to ensure that reverse engineering can be facilitated to ensure that there is compliance with regulatory requirements introduced under the EU AI Act (European Parliament, 2023) for example.

5 Data and data transfers

AI systems rely on vast amounts of data, much of which includes personal and sensitive information that is subject to specific legal protections under UK data protection law, including the UK General Data Protection Regulation (UK GDPR) and the Data Protection Act 2018. This presents significant legal and ethical challenges.

Under the UK GDPR and the Data Protection Act 2018, organisations must ensure that personal data is processed lawfully, fairly, and transparently. This includes honouring principles such as purpose limitation and data minimisation.

However, many GenAI models are trained on internet-scraped data without clear user consent, raising concerns about the legal basis for processing and potential violations of privacy. Furthermore, inferential capabilities of AI can generate sensitive information about individuals from seemingly harmless inputs, escalating privacy risks.

For instance, training LLMs using data scraped from the internet raises substantive concerns over non-compliance with data protection obligations. Care should be taken when using or training GenAI or LLMs given these issues.

Cross-border data transfers complicate compliance further. When data is sent to countries without adequate protections, organisations must use safeguards like Standard Contractual Clauses (SCCs). Yet these are often insufficient given global enforcement asymmetries.

Experts increasingly recommend collective governance strategies (Lynskey, 2021) – such as public registers of training datasets – to improve transparency and accountability in AI data practices.

Reflection

Think about the first three risks posed by GenAI. Are any of the first three concerns addressed in your organisation’s use of GenAI? Which, if any, of these require strengthening to ensure that your organisation is mitigating the risks of GenAI?

Make a note below of any potential mitigations your organisation could adopt.

6 Privacy

Privacy is at the heart of ethical and legal concerns surrounding AI. It is one of the most serious concerns, and while privacy considerations are separate from data and data transfers, they are connected.

The UK GDPR protects individuals from unfair automated decision-making and guarantees rights such as the ability to object or request human intervention. This is particularly critical in applications like legal support, recruitment, and credit scoring, and in other contexts where automated decision-making may be used.

Moreover, the Human Rights Act 1998 guarantees a right to privacy, and protection for a private life under Article 8. However, AI systems can pose direct threats to such rights, especially those that perform surveillance, profiling, or predictive analysis and do so without individuals’ knowledge. In such situations, the lack of knowledge may violate this right. Professionals using AI, particularly in law or healthcare, must adopt strong safeguards such as anonymisation, Data Protection Impact Assessments, and robust vendor contracts to ensure compliance.

Solicitors, for instance, must align their AI practices with Solicitors Regulation Authority obligations, maintaining client confidentiality and transparency. The ethical use of AI must preserve trust and protect sensitive data through technical and organisational measures.

Organisations should be particularly mindful of the need for strict contractual controls with third-party AI vendors. Where organisations are working within regulated professions, clear guidance should be followed to avoid regulatory breaches (Solicitors Regulation Authority, 2023).

7 Client confidentiality

Using GenAI in professional services requires heightened attention to client confidentiality. Legal professionals, for instance, must ensure that no client data – however anonymised – is processed by AI systems without thorough oversight. Terms of service for AI tools often state whether providers retain or use input data, and unclear terms should prompt firms to seek written assurances (The Law Society, 2023).

Confidentiality obligations for solicitors, for example under the Solicitors Regulation Authority guidelines (SRA, 2019), persist regardless of how advanced the tools are. Firms may need to obtain explicit client consent before entering their data into GenAI systems, particularly where sensitive legal or personal information is concerned. Failure to do so can lead to potential regulatory breaches, or to non-compliance with data protection regulations. Even seemingly generic data can carry implications when combined with other inputs or used in profiling.

Organisations must document data flows, ensure compliance with data minimisation principles, and regularly audit vendor practices. The responsible use of GenAI is not just about legal compliance but maintaining professional integrity and client trust. It is important for organisations to take proactive measures to reduce the potential for any misuse of data, and to limit unauthorised disclosures wherever possible.

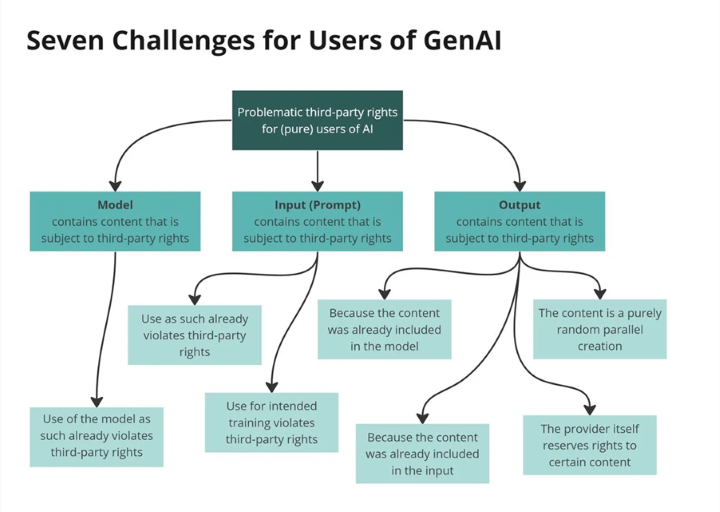

8 Intellectual property rights

A key legal risk with using GenAI tools is that the datasets used to train the AI models often use and contain copyright material. For example, the internet scraping of online content to train GenAI models can be regarded as an infringing act under UK law.

Current UK copyright law, based on the Copyright, Designs and Patents Act 1988 (CDPA), is ill-equipped to handle the challenges posed by GenAI.

AI developers often use copyrighted content in training datasets without permission, raising infringement concerns. Section 17(2) prohibits reproducing substantial parts of protected works without consent, an issue at the core of ongoing cases like Getty Images v Stability AI [2025] EWHC 38 (Ch).

Authorship under UK law for computer-generated works also remains ambiguous – and this applies to AI-generated outcomes. Section 9(3) of the CDPA 1988 assigns authorship to whoever made the arrangements for creation, but determining this in AI contexts – programmer, prompter, or developer – is complex. The originality requirement further complicates matters, especially for content merely recombined by GenAI.

The UK Government is continuing to explore broadening the exception for text and data mining to support AI research. However, creative industries have raised concerns about the lack of technical safeguards for opting out. Broader exceptions could fuel innovation but risk weakening IP protections without enforceable transparency requirements.

Further reading

If you are interested, you can learn more by reading A really risky business.

9 Ethical considerations and bias

Ethical deployment of GenAI involves ensuring fairness, transparency, accountability, and inclusiveness. AI's impact on employment is a major concern, as automation may displace lower-skilled roles while disproportionately benefiting those with digital expertise. This poses the risk of deepening socio-economic divides (Gmyrek et al., 2023).

Algorithmic bias occurs when AI systems reproduce and amplify discriminatory patterns found in training data. This is not a technical glitch – it reflects societal inequities embedded in data collection and labelling. Meanwhile, bias in training data can lead to discriminatory outcomes (Pump Court Chambers, 2023), particularly in recruitment, finance, or law enforcement. Ethical AI development requires diverse datasets, ongoing auditing, and human oversight. Organisations should also consider workforce and employee ethics – offering reskilling, protections, and equitable access to AI benefits.

Addressing bias requires using representative training datasets, identifying proxy variables that encode discrimination, and conducting fairness audits. Human reviewers must oversee automated decisions, particularly in sensitive domains. Inclusive design practices also help anticipate harms and build trust.
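To make the idea of a fairness audit more concrete, the sketch below compares how often an AI-supported process selects people from different groups. It is a simplified illustration only: the function names, the made-up shortlisting data, and the 0.8 review threshold are assumptions for this example, and the threshold is not a UK legal test.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs -> {group: rate}."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so nothing to compare
    return min(rates.values()) / highest


# Made-up shortlisting outcomes: group A 5 of 10 selected, group B 2 of 10.
decisions = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 2 + [("B", False)] * 8
)

rates = selection_rates(decisions)
ratio = disparity_ratio(rates)

REVIEW_THRESHOLD = 0.8  # illustrative assumption, to be set by the organisation
needs_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 2), needs_review)
```

A low ratio does not prove discrimination on its own, but it signals where human reviewers should look more closely, for example at proxy variables in the underlying data.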

Inclusive design – engaging a wide range of stakeholders in AI development – ensures systems reflect diverse perspectives and societal values. Ethical AI also demands anticipatory governance – evaluating impacts before deployment, particularly in dual-use technologies like facial recognition that could threaten privacy and fundamental freedoms.

10 Responsibility and accountability

As GenAI becomes more integrated into decision-making, questions of accountability become critical.

When AI systems make mistakes – misdiagnosing a patient, generating biased decisions, or producing defamatory content – determining who is responsible is complex. However, this is exactly why it is so important to have clear lines of accountability – human accountability in the deployment of AI models (Floridi et al., 2018).

The legal position in the UK is still evolving to clarify liability. However, gaps remain. Ethical frameworks stress that developers, deployers, and end users must share responsibility.

Professionals, particularly in regulated sectors, cannot defer blame to algorithms. They remain accountable for ensuring outputs are fair, lawful, and appropriate.

Maintaining human oversight – the 'human-in-the-loop' – is essential to uphold autonomy, particularly when decisions have significant personal or legal consequences. Internal governance structures, documentation, and regular audits are critical tools for supporting accountability.

11 Security

AI systems face growing security threats, from data breaches to adversarial attacks. To mitigate these risks, organisations must adopt comprehensive security measures including:

Firewalls and Intrusion Detection Systems (IDS)

These act as a first line of defence by monitoring network activity and alerting teams to suspicious or unauthorised access attempts.

Data encryption

Protects sensitive data – such as customer information, intellectual property, or algorithmic models – both at rest and in transit (National Cyber Security Centre, 2020).

Regular security audits and penetration testing

Conducting frequent evaluations helps to identify system vulnerabilities and address them before exploitation can occur.

Access controls and authentication protocols

Implementing multi-factor authentication (MFA), least-privilege access, and strong identity verification helps reduce the risk of internal and external breaches (Srinivasan and Lee, 2022).

Risk and mitigation

Now that you have learnt about some of the key risks that can arise in the use of GenAI by organisations, look at the table below which matches the risk to the appropriate mitigation step.

| Risk | Mitigation |
|---|---|
| Inaccurate and unreliable data. | Accuracy and reliability checks are required. |
| AI is a ‘black box’ and it is hard to understand the decision-making processes. | Reverse engineering is required to deconstruct the model. |
| AI is heavily dependent on data collection and processing that is not always data protection compliant. | Data protection compliance requires protection for personal and sensitive data. |
| AI data collection and use is often cross border and pays no attention to jurisdictional limits. | Cross-border data transfers require safeguards, including the use of standard contractual clauses. |
| There is little transparency in the algorithmic decision-making adopted by AI systems. | Within regulated professions there are obligations to maintain confidentiality; therefore, as a minimum, data protection impact assessments (DPIAs) are required. |
| AI systems can be vulnerable to cyber threats. | Key practices and comprehensive security frameworks are required to safeguard AI models and the data they use. |
| AI systems are not readily transparent, and are increasingly used for automated decision-making, reflecting biases in the training data which can be prejudicial. | Clear accountability is required where AI systems are used to make decisions. This requires – for regulated professions – human ownership of decisions. |

Having thought in depth about the potential risks that can arise with the use of GenAI, the next section explores managing GenAI tools and the considerations to be made when organisations consider the use of GenAI.

Session 3: Mitigating AI risks – 40 minutes

12 Human oversight

GenAI technologies are increasingly embedded in organisational operations, bringing both opportunities and complex challenges. To ensure responsible use, it is vital to manage associated risks – ethical, legal, and operational – through robust oversight, clear frameworks, and adaptive planning.

Based on what you have learnt about the risks and challenges posed by the use of GenAI in organisations, it is important to take appropriate steps to mitigate those risks. This will assist with regulatory compliance, and prevent regulatory breaches, particularly in regulated professions.

Human oversight is a foundational safeguard in the responsible deployment of GenAI. While these systems excel at automating tasks, they lack contextual judgment, ethical reasoning, and an understanding of nuanced social or legal implications.

Organisations should:

Define specific thresholds where human intervention is mandatory (e.g., legal, financial, or medical decisions).

Establish protocols for manual review, ethical audits, and expert escalation.

Maintain oversight as a continuous process – through regular review of outputs, decision documentation, and cross-functional review panels.
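The points above can be sketched as a simple release gate: outputs in designated domains cannot be released without a named human reviewer, and every release leaves a record. This is a minimal sketch under stated assumptions; the domain list, the `Draft` class, and the `release` function are hypothetical names chosen for illustration, not part of any regulator's guidance.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

# Hypothetical set of domains where this sketch makes human sign-off mandatory.
MANDATORY_REVIEW_DOMAINS = {"legal", "financial", "medical"}


@dataclass
class Draft:
    domain: str  # e.g. "legal" or "marketing"
    text: str    # the GenAI-produced content


def requires_human_review(draft: Draft) -> bool:
    """True when the draft falls in a domain that needs human sign-off."""
    return draft.domain in MANDATORY_REVIEW_DOMAINS


def release(draft: Draft, reviewer: Optional[str] = None) -> dict:
    """Block gated drafts without a reviewer; return a decision record."""
    if requires_human_review(draft) and not reviewer:
        raise PermissionError(f"{draft.domain} output needs a named human reviewer")
    return {
        "domain": draft.domain,
        "reviewed_by": reviewer,
        "released_at": datetime.now(timezone.utc).isoformat(),
    }


# Illustrative use: a legal draft is released only with a reviewer named.
record = release(Draft("legal", "Draft advice letter"), reviewer="A. Solicitor")
print(record["domain"], record["reviewed_by"])
```

Keeping the returned records supports the decision documentation and regular review mentioned above, because each release can be traced to the person who approved it.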

Importantly, regulatory bodies such as the Solicitors Regulation Authority (SRA) and the Information Commissioner’s Office (ICO) have reinforced that AI use does not negate human accountability. Professionals remain responsible for the outcomes of GenAI-supported decisions.

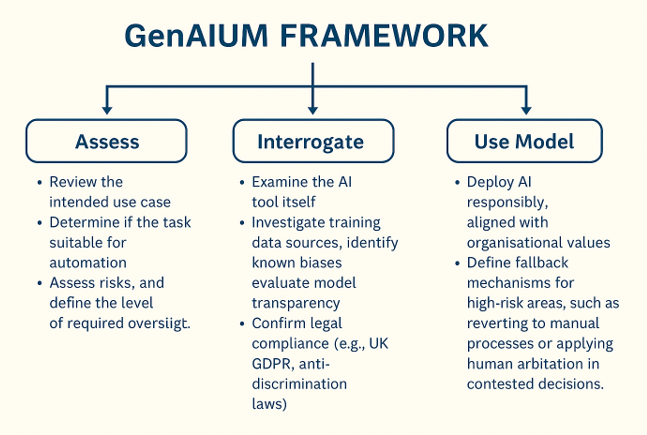

13 The GenAIUM framework

To guide ethical and effective AI integration, organisations can adopt the GenAIUM framework. This framework is designed to offer a quick start guide to considering the potential for using GenAI within organisations.

The GenAIUM Framework has three strands to it – assessment, interrogation and use.

The Organisation for Economic Co-operation and Development (OECD, 2023) underscores that risk management must span the entire AI lifecycle to ensure trustworthy systems.

14 Developing a risk management plan

A formal, evolving risk management plan is central to responsible GenAI use.

It should include the following.

Risk identification

Highlight risks such as hallucinated outputs, algorithmic bias, IP infringement, data privacy violations, and system failure.

Mitigation strategies

Implement targeted measures:

Use filters and prompt engineering to reduce hallucination.

Audit for bias and diversify training datasets.

Introduce encryption and monitoring for cybersecurity resilience.

Contingency measures

Prepare responses to adverse outcomes (e.g., system suspension, manual override, or legacy process fallback).

Establish regular review cycles informed by incidents, feedback, and regulatory changes.

Actions that help to mitigate risks

You have learnt about some of the risks in this course. The risks presented by GenAI use in organisations include:

Accuracy and reliability.

Privacy.

Client confidentiality.

Intellectual property rights.

Ethics and bias.

Security.

Responsibility.

You have also learnt about some of the proactive steps that can be introduced to mitigate some of these risks.

Identify at least three key actions that can help to mitigate some of these risks and list them below.

Discussion

You should have been able to identify three key actions from the following list:

Implement use policies. Establish clear guidelines for appropriate and inappropriate use of GenAI tools within the organisation.

Conduct risk assessments. Regularly assess potential risks like data leakage, IP exposure, bias, and misinformation from GenAI outputs.

Train staff on responsible use. Provide basic training on prompt design, verifying GenAI outputs, and ethical considerations.

Restrict sensitive data input. Introduce controls to prevent confidential or personal data from being fed into GenAI systems.

Set up review and oversight processes. Mandate human review for high-stakes content generated by AI (e.g., legal, medical, or HR communications).
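As an illustration of restricting sensitive data input, the sketch below replaces obvious email addresses and phone numbers with placeholders before text is passed to a GenAI tool. The two patterns and the `redact` function are assumptions made for this example; they catch only simple cases and are no substitute for proper data protection controls or human checks.

```python
import re

# Illustrative patterns only; real personal data takes many more forms.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b0\d{4} ?\d{6}\b"),  # e.g. 01234 567890
}


def redact(text):
    """Replace matches with [LABEL] and count how many of each were found."""
    counts = {}
    for label, pattern in PATTERNS.items():
        text, found = pattern.subn(f"[{label}]", text)
        counts[label] = found
    return text, counts


# Made-up prompt containing contact details that should not leave the firm.
prompt = "Contact jane.doe@example.com or 01234 567890 about the claim."
safe_prompt, counts = redact(prompt)
print(safe_prompt)
print(counts)
```

The counts could feed into the risk assessments described above, for instance by showing how often staff attempt to enter personal data into GenAI tools.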

Reflection

15 Conclusion

Having studied this course, you now know more about the risks posed by GenAI use and the challenges posed by using it in an ad hoc manner, or in a way that is not structured.

This course has outlined some of the ways in which individuals and organisations can identify risks and mitigate them, including the importance of ensuring that there is a ‘human in the loop’, that appropriate mechanisms are in place to determine how, when, and why GenAI is being used, and that appropriate guidance is available.

If you are considering using GenAI in your organisation, we suggest you look at the fifth course, Ethical and responsible use of Generative AI, which considers the ethical uses and considerations of AI tools.

For individuals responsible for managing organisations and who want to know more about the compliance aspects of using GenAI, we recommend the seventh course, Understanding legal regulation and compliance.

For individuals interested in finding out more about using GenAI, we recommend the eighth course, Preparing for tomorrow: horizon scanning and AI literacy, which considers the future of GenAI.

Further reading


Want to know more?

Research into, and understanding of, the risks posed by GenAI are ongoing. The legal and regulatory framework is complex and evolving, and updated legal provisions may have been enacted since these course materials were developed.

If you want to explore any of the risks covered in this course in more depth, you may wish to read A really risky business.

Moving on

When you are ready, you can move on to the Course 6 quiz.


References

Armstrong, K. (2023) 'ChatGPT: US lawyer admits using AI for case research', BBC News. Available at: https://www.bbc.co.uk/news/world-us-canada-65735769.amp (Accessed 25 February 2025).

Department for Science, Innovation and Technology (DSIT) (2025) AI Opportunities Action Plan. Available at: AI Opportunities Action Plan - GOV.UK (Accessed 17 March 2025).

European Parliament (2023) EU AI Act: first regulation on artificial intelligence. Available at: https://www.europarl.europa.eu/topics/en/article/20230601STO93804/eu-ai-act-first-regulation-on-artificial-intelligence (Accessed 17 March 2025).

Evidently AI (2025) Model monitoring for ML in production: a comprehensive guide. Available at: https://www.evidentlyai.com/ml-in-production/model-monitoring (Accessed 16 March 2025).

Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., Valcke, P. and Vayena, E. (2018) 'AI4People – An Ethical Framework for a Good AI Society: Opportunities, Risks, Principles, and Recommendations', Minds and Machines, 28(4), pp. 689–707. Available at: https://doi.org/10.1007/s11023-018-9482-5 (Accessed 17 March 2025).

Gartner (2024) Generative AI. Available at: https://www.gartner.com/en/topics/generative-ai (Accessed 15 March 2025).

Gmyrek, P., Berg, J. and Bescond, D. (2023) 'Generative AI and jobs: A global analysis of potential effects on job quantity and quality', ILO Working Paper 96. Available at: https://www.ilo.org/sites/default/files/wcmsp5/groups/public/---dgreports/---inst/documents/publication/wcms_890761.pdf (Accessed 17 March 2025).

Information Commissioner's Office and The Alan Turing Institute (2022) Explaining decisions made with AI. Available at: https://ico.org.uk/media/for-organisations/uk-gdpr-guidance-and-resources/artificial-intelligence/explaining-decisions-made-with-artificial-intelligence-1-0.pdf (Accessed 17 March 2025).

The Law Society (2023) Generative AI – the essentials. Available at: https://www.lawsociety.org.uk/topics/ai-and-lawtech/generative-ai-the-essentials (Accessed 17 March 2025).

Lynskey, O. (2021) 'Cross-Border Data Transfers & Innovation', Global Data Alliance. Available at: https://globaldataalliance.org/wp-content/uploads/2021/07/04012021cbdtinnovation.pdf (Accessed 21 March 2025).

National Cyber Security Centre (2020) Annual Review 2020. Available at: https://www.ncsc.gov.uk/files/Annual-Review-2020.pdf (Accessed 17 March 2025).

Organisation for Economic Co-operation and Development (2023) Advancing Accountability in AI: Governing and Managing Risks Throughout the Lifecycle for Trustworthy AI. Available at: https://www.oecd.org/publications/advancing-accountability-in-ai-2448f04b-en.htm (Accessed 19 March 2025).

Pump Court Chambers (2023) The Interplay between the Equality Act 2010 and the use of AI in recruitment. Available at: https://www.pumpcourtchambers.com/2023/06/19/the-interplay-between-the-equality-act-2010-and-the-use-of-ai-in-recruitment/ (Accessed 17 March 2025).

Solicitors Regulation Authority (2019) SRA Principles. Available at: https://www.sra.org.uk/solicitors/standards-regulations/principles/ (Accessed 17 March 2025).

Solicitors Regulation Authority (2022) Compliance tips for solicitors regarding the use of AI and technology. Available at: https://www.sra.org.uk/solicitors/resources/innovate/compliance-tips-for-solicitors/ (Accessed 18 March 2025).

Solicitors Regulation Authority (2023) Confidentiality and client information. Available at: https://www.sra.org.uk/solicitors/guidance/confidentiality-client-information/ (Accessed 17 March 2025).

Srinivasan, S. and Lee, J. (2022) 'AI Empowered DevSecOps Security for Next Generation Development', Journal of Cybersecurity, 9(2). Available at: https://orbit.dtu.dk/en/publications/ai-empowered-devsecops-security-fornext-generation-development (Accessed 16 March 2025).

Acknowledgements

Grateful acknowledgement is made to the following sources:

Every effort has been made to contact copyright holders. If any have been inadvertently overlooked the publishers will be pleased to make the necessary arrangements at the first opportunity.

Important: *** against any of the acknowledgements below means that the wording has been dictated by the rights holder/publisher, and cannot be changed.

Course banner 560564: Microvone | Dreamstime.com

560594: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560597: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560599: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560607: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560609: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560613: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560616: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560625: Anyaberkut | Dreamstime.com

560632: Vischer https://www.vischer.com/en/knowledge/blog/part-14-copyright-and-ai-how-to-protect-yourself-in-practice/ This file is licensed under the Creative Commons Attribution-No Derivatives Licence https://creativecommons.org/licenses/by-nd/4.0/deed.en

560634: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560646: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560649: Created by OU artworker using OpenAI. (2025). ChatGPT version o4