Printable page generated Sunday, 23 November 2025, 1:37 AM

Understanding legal regulation and compliance

Introduction

This course explores the considerations and concerns surrounding the use of Generative AI tools in organisations. Particular care needs to be taken at three distinct points when considering the use of GenAI tools:

- Before using GenAI tools.

- While GenAI tools are being used.

- After using GenAI tools.

This course serves as an introduction for managers and leaders who want to understand these points, and the steps to take before deploying any GenAI tools within an organisation. It outlines the key considerations and highlights some areas of legal compliance that must be addressed before any use of GenAI tools.

This course assumes that you have some understanding of how GenAI and Large Language Models (LLMs) work. If you are not sure about this, or would find a refresher useful, we recommend you start with the first course in the series, Understanding Generative AI.

It also assumes that you have an understanding of the risks and concerns surrounding the use of GenAI in organisations. If you are not sure about this, or would like to explore this, we recommend that you complete – or revisit – the sixth course, Navigating risk management.

It further assumes that you have, or are working on, an organisational GenAI policy. If you are not sure about this, we recommend that you complete – or revisit – the third course, Key considerations for successful Generative AI adoption.

This is a self-paced course of around 180 minutes including a short quiz. We have divided the course into sections, so you do not need to complete the course in one go. You can do as much or as little as you like. If you pause you will be able to return to complete the course at a later date. Your answers to any activities will be saved for you.

Course sessions

The sessions are:

Session 1: Regulating and implementing – 60 minutes (Sections 1 to 4)

Session 2: Terms and conditions of GenAI providers – 60 minutes (Sections 5 to 7)

Session 3: Time for a GenAI policy? – 60 minutes (Sections 8 onwards)

A digital badge and a course certificate are awarded upon completion. To receive these, you must complete all sections of the course and pass the course quiz.

Learning outcomes

After completing this course, you will be able to:

- Identify the key considerations before, during and after using GenAI tools within your organisation.

- Explain the various regulatory frameworks applicable to GenAI providers that may mitigate some of the risks of GenAI tools.

- Understand the importance of developing, managing, and updating GenAI use policies in your organisations, and what these policies should cover.

Glossary

A glossary that covers all eight courses in this series is always available to you in the course menu on the left-hand side of this webpage.

Session 1: Regulating and implementing – 60 minutes

1 Regulating AI in the UK

The United Kingdom does not have a single, comprehensive law specifically regulating artificial intelligence (AI). While this may be criticised as slow and unhelpful, the UK approach to regulating AI and GenAI is still in development.

The regulatory focus in the UK falls on a decentralised, pro-innovation approach that applies existing legal frameworks and empowers sector-specific regulators to oversee the use of AI technologies. This approach (Department for Science, Innovation and Technology, 2023) advocates for a flexible framework guided by five core principles:

- Safety

- Transparency

- Fairness

- Accountability

- Contestability

Rather than creating a standalone AI regulator or codified AI law akin to the European Union’s AI Act, the UK strategy relies on contextual oversight, where bodies such as the Information Commissioner’s Office (ICO), the Financial Conduct Authority (FCA), and the Competition and Markets Authority (CMA) apply existing laws to manage AI-related risks.

A significant legal pillar affecting AI deployment is the Data Protection Act 2018, which sits alongside and supplements the UK General Data Protection Regulation (UK GDPR). Together, this legislation governs the collection, processing, and storage of personal data, including by AI systems. Notably, Article 22 of the UK GDPR gives individuals the right not to be subject to a decision based solely on automated processing that has legal or similarly significant effects. This provision is particularly relevant for AI systems used in recruitment, credit scoring, and other high-impact scenarios (Information Commissioner's Office, n.d.).

The Equality Act 2010 is another cornerstone of the current regulatory framework in the UK, especially when addressing concerns that arise over algorithmic bias, and the replication of that bias through GenAI outputs. If an AI system discriminates – either directly or indirectly – against individuals based on protected characteristics such as race, gender, or disability, it may violate anti-discrimination provisions. This risk is especially pronounced in sectors like employment, finance, and law enforcement, where biased training data or poorly designed algorithms can entrench systemic inequalities.

AI technologies must also comply with broader human rights obligations under the Human Rights Act 1998, particularly Article 8 (right to privacy) and Article 14 (protection from discrimination). These obligations bind public sector deployments of AI and require transparency, proportionality, and justification for any intrusion on fundamental rights. Much like the Human Rights Act provisions, elements of the Consumer Protection from Unfair Trading Regulations 2008 and Competition Act 1998 can also apply where AI is used, especially if AI is used to manipulate consumer choices, personalise pricing unfairly, or engage in anti-competitive behaviour.

Intellectual property law is another area of growing concern, particularly in relation to the ownership of AI-generated works and the status of AI as an inventor (Thaler v Comptroller-General of Patents, Designs and Trademarks, 2023). The UK Intellectual Property Office has clarified that, under current law, AI cannot be recognised as an inventor for patent purposes. While copyright may apply to AI-generated content if a human authorship link exists, the legal status remains unsettled, particularly where content is produced autonomously by generative models.

UK legal provisions and the GenAI challenges

Match the UK legal provisions to the GenAI challenges.

Two lists follow. Match one item from the first list with one item from the second. Each item can only be matched once. There are 6 items in each list.

1. Data retention, user consent, personal data processing

2. Risk classification, prohibited uses, transparency obligations

3. Handling of special category data in AI input and output

4. Ownership of AI-generated content, reuse, and attribution

5. Transparency and fairness of AI-driven outputs to consumers

6. Bias and discrimination risks in AI systems

Match each of the previous list items with an item from the following list:

a. Consumer Protection from Unfair Trading Regs

b. UK GDPR (General Data Protection Regulation)

c. EU AI Act (Proposed)

d. Copyright, Designs and Patents Act 1988 (UK)

e. Equality Act 2010 (UK)

f. UK Data Protection Act 2018

Answers:

- 1 = b

- 2 = c

- 3 = f

- 4 = d

- 5 = a

- 6 = e

Discussion

The UK approach to regulating AI has not – yet – materialised in the form of bespoke legislation. However, that does not mean that there is no law applicable to AI and GenAI tools. There are lots of potential areas of law that can regulate uses – and abuses – of AI tools.

2 Before implementing GenAI tools

Before trialling, using, or adopting any GenAI tools, it is important to develop an understanding of what GenAI is and how it works. It is equally important to develop an appreciation of how GenAI could assist you and your organisation, and add value (Thomson Reuters, 2024). Understanding how GenAI can be of benefit is essential before implementing these tools into your workflows, tasks, and processes.

GenAI tools can be helpful in automating repetitive tasks or enhancing customer service. They can also – subject to a number of caveats – be helpful in generating content, though this can be fraught with dangers that include inaccuracies, hallucinations, and false information.

Tasks and processes

Some of the most common uses of GenAI tools in organisations are to help with repetitive tasks.

This can include things such as automated meeting notes, or more complex tasks such as creating visual content. It can also assist with other aspects such as analysing data to determine trends. Not all of the potential uses will be suited to every organisation. It is important for each organisation and industry to determine what is most suitable for their particular needs (Calls9, 2025).

It is also important to remember that determining if and when GenAI tools should be used is a process in itself. The fact that GenAI can be used does not necessarily mean that using it is the correct or desirable choice.

An assessment of which employees should be permitted or motivated to use GenAI is just one of the considerations an organisation needs to make (Practical Law, 2024). This assessment should be made before, during, and after using GenAI tools.

There are lots of things to think about before using GenAI. We explore some of the risks and challenges of GenAI tools in the sixth course, Navigating risk management. These include data protection, security, privacy concerns, intellectual property ownership, accuracy and reliability of GenAI outputs, consent to use and share data, and potential GenAI biases (Brown and Jones, 2021).

A huge amount of data is required to operate GenAI tools. This necessitates robust, specific, and reliable security measures to protect against any misuse of data or data breaches. This is particularly important for using GenAI tools because of the potential for algorithmic biases to manifest when using data for training GenAI tools.

Wherever there may be biases within the training data, GenAI models and tools are likely to replicate these in the outputs. This includes the replication of historical, cultural, and social factors that lead to bias and/or prejudice. It is important to be aware of the potential for these to be replicated in any GenAI outputs.

Planning to make GenAI tools available

As a leader, you are planning to make GenAI tools available to your team next week.

Think about the following two questions:

- What is one thing it could radically improve in your organisation and its work?

- What is one thing that could go wrong if used carelessly?

Now identify:

- One potential use of GenAI in your area.

- One potential risk of using GenAI.

Make a note of the potential use and potential risk that you have identified in the box below.

Discussion

There are a number of real-world examples that you may have identified as offering potential uses. These may include, for example, social media content creation, scheduling, HR processes, or data analysis. Wherever there are potential opportunities for using GenAI, you should be able to identify the potential pitfalls. These can include data breaches, misuse of personal data, lack of data security, replication of biases, or even a lack of human oversight.

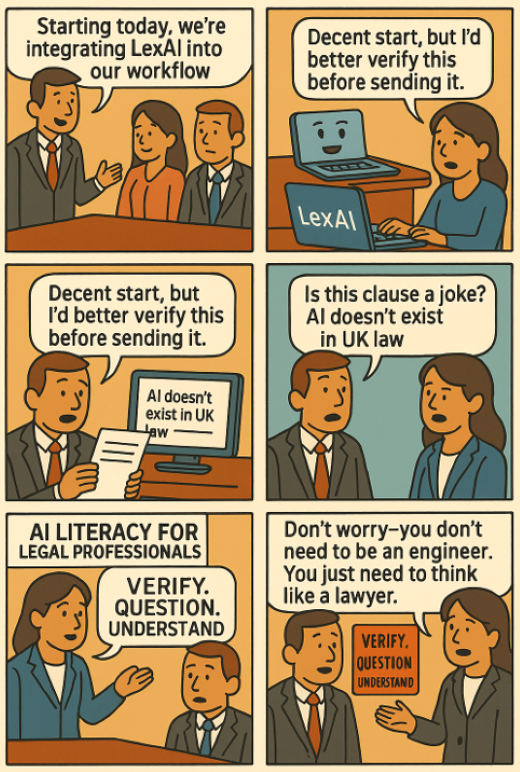

3 Implementing GenAI tools

Training on appropriate prompt techniques for the specific tools your organisation is implementing is a vital initial step before widely implementing GenAI. This will help your organisation to effectively generate outputs that are precise and most relevant for the required tasks (Saifan, 2024).

GenAI literacy is an essential element of any implementation process. Training should include aspects such as risks and limitations (Saifan, 2024) of GenAI tools (including copyright, hallucinations, data use, data protection, quality and reliability of GenAI outputs, prompt engineering) and should explicitly address the areas listed within a GenAI policy (this is covered further in Session 3 of this course).

It is essential to continually monitor the performance and impact of GenAI tools to evaluate their effectiveness and identify any necessary adjustments. Maintaining transparency throughout the use of GenAI is equally important. This includes documenting how and when GenAI is applied, as well as clearly disclosing when content has been generated by AI.

According to guidance from the University of Saskatchewan, best practices for transparency include explicitly citing the use of GenAI tools, specifying details such as the software name, version, and the date on which the output was generated (Moss, 2025).
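
As an illustration only, the transparency details mentioned above (software name, version, and date of generation) could be captured in a simple usage record. The sketch below is a minimal Python example under stated assumptions: the class name `GenAIUsageRecord`, its fields, and the tool name "ExampleGPT" are all hypothetical and do not come from the cited guidance or from any real product.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class GenAIUsageRecord:
    """One entry in a hypothetical GenAI usage log (illustrative only)."""

    tool_name: str       # name of the GenAI software used
    tool_version: str    # version of the tool or model
    generated_on: date   # date on which the output was generated
    purpose: str         # what the output was used for
    reviewed_by: str     # the person who checked the output

    def citation(self) -> str:
        """Return a short disclosure line for AI-assisted content."""
        return (
            f"Generated with {self.tool_name} (version {self.tool_version}) "
            f"on {self.generated_on.isoformat()}; reviewed by {self.reviewed_by}."
        )


# Hypothetical example entry; "ExampleGPT" is not a real tool.
record = GenAIUsageRecord(
    tool_name="ExampleGPT",
    tool_version="1.0",
    generated_on=date(2025, 5, 25),
    purpose="First draft of meeting summary",
    reviewed_by="A. Manager",
)
print(record.citation())
```

Keeping such records in one place can support both disclosure (the citation line) and later monitoring, because each entry shows which tool and version produced a given output and who reviewed it.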

To explore the practical considerations of adopting GenAI tools further, we recommend the third course, Key considerations for successful Generative AI adoption.

4 After implementing GenAI tools

Organisations should regularly update GenAI policies to stay compliant and ethical.

Gathering feedback from employees and stakeholders can highlight the impact of GenAI tools and areas for improvement. This organisation-specific knowledge is essential for monitoring and improving any GenAI policies that your organisation adopts.

Encouraging reporting and open reflection within your organisation on what is, or is not, working will improve both use and understanding as implementation unfolds.

It will also be essential to assess, monitor, and evaluate the ways in which GenAI tools are being used and are assisting your organisation. This knowledge can also support the updating, monitoring and evolution of GenAI risk assessments and continuing professional development needs.

Evaluating how GenAI is being used and implemented will also help your organisation to stay competitive within its industry while remaining legally compliant (Dhar, 2025).

Important stages in the adoption of GenAI tools

a. The availability of a trendy AI brand

b. The potential for immediate cost savings

c. Whether the task is repetitive, rule-based, or data-heavy

d. If the AI tool has colourful graphics

The correct answer is c.

Comment

Organisations should evaluate the nature of the task to determine suitability for GenAI, ensuring it aligns with their organisational needs, processes and workflows.

a. Continuously verifying AI outputs with a human review

b. Relying solely on AI output to save time

c. Allowing junior staff to operate it without oversight

d. Letting the GenAI tool make final decisions on legal matters

The correct answer is a.

Comment

Human-in-the-loop oversight is critical to ensure ethical, accurate, and compliant use of AI-generated content.

a. Turning off all user access

b. Erasing the tool’s usage logs for privacy

c. Assuming it needs no further evaluation

d. Monitoring outcomes and documenting use cases

The correct answer is d.

Comment

Ongoing performance monitoring and transparent documentation can help identify risks, support compliance, and ensure continuous improvement.

Session 2: Terms and conditions of GenAI providers – 60 minutes

5 Finding and reading terms and conditions

As organisations adopt GenAI technologies to drive innovation and automate tasks, it becomes increasingly important to understand the legal frameworks that underpin their use.

Central to this are the terms and conditions (T&Cs) issued by GenAI providers. These contractual documents define user rights, provider responsibilities, and the legal boundaries of tool usage. You can usually find the terms and conditions for GenAI providers on their official websites. They are often linked at the bottom of the homepage or during the account sign-up process.

Key sections to look out for include user responsibilities, how your data may be used, ownership of content, liability limitations, and rules around acceptable use (Koley Jessen, 2024). Reviewing these terms carefully is essential to understand what the service offers, any limits on how you can use it, and the legal risks you might be accepting if you are planning to use the tool within your organisation, or to feed it with data from your organisation.

6 Regulatory considerations with GenAI terms and conditions

Organisations and managers need to be aware of various regulatory frameworks for GenAI providers. Many providers use third-party models and technologies, so compliance with third-party terms is crucial.

For instance, Google’s Generative AI Additional Terms of Service restrict the use of its services to develop machine learning models. Understanding who owns the intellectual property (IP) rights in both inputs and outputs is essential. Typically, users retain IP rights, but there are exceptions.

Managers should check for standard indemnities from users to providers, especially in free subscriptions. It is important to ensure that any GenAI use complies with data protection regulations (including the General Data Protection Regulation), and to understand how data is processed, stored, and protected (Koley Jessen, 2024).

Some of these regulatory considerations are explored in more detail below.

Data usage

A critical area addressed in the terms and conditions of GenAI tools is data usage. Many GenAI tools depend on substantial amounts of user input – such as text prompts or uploaded documents – to function effectively and improve over time.

Providers like OpenAI and Google often reserve the right to retain, log, or utilise input data to refine their models unless the user opts out or subscribes to an enterprise-tier plan that prohibits training on user data.

For instance, OpenAI specifies that unless users are using a business account, data entered may be used for model training and enhancement. This raises significant concerns for organisations handling confidential or personal data, especially under legal frameworks like the UK GDPR.

GenAI models are trained using vast amounts of data, which often includes personal data. This raises significant privacy concerns under the GDPR because GenAI can generate incorrect information, known as ‘hallucinations’, which poses particular challenges if personal data is involved and is compounded by the lack of transparency in GenAI models (Lolfing, 2023b). Managers must ensure that the processing of personal data by GenAI systems complies with GDPR requirements (Lolfing, 2023a). This means taking steps to obtain full and informed consent, ensure data minimisation, and implement appropriate security measures.

Organisations should implement technical and organisational strategies to ensure data security when using GenAI tools. This includes implementing access controls, encryption, data backups and recovery processes (Lolfing, 2023b).
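
One small technical measure that supports data minimisation is removing obvious personal identifiers from text before it is entered into a GenAI tool. The Python sketch below is illustrative only and rests on stated assumptions: the function name `redact_emails`, the placeholder text, and the simple email pattern are hypothetical choices, and pattern-based redaction of this kind catches only one type of identifier. It is not, on its own, sufficient for data protection compliance.

```python
import re

# A deliberately simple pattern for email addresses (an assumption for
# illustration; it will not catch every valid address format).
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(prompt: str, placeholder: str = "[REDACTED EMAIL]") -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL_PATTERN.sub(placeholder, prompt)


# Hypothetical prompt that a member of staff might be about to submit.
draft = "Summarise the complaint from jane.doe@example.com about late delivery."
print(redact_emails(draft))
```

A step like this would normally sit alongside, not replace, the access controls, encryption, and staff training described in this course, since names, addresses, and other personal data would still pass through unchanged.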

Intellectual property

Another essential consideration is intellectual property (IP). Users often assume that they fully own the outputs generated by GenAI. However, providers frequently indicate that the generated content may be non-exclusive and not necessarily subject to copyright protections. Many GenAI outputs may be derived from public datasets or resemble outputs provided to other users, thereby limiting exclusivity. It is imperative that users carefully review ownership clauses to comprehend whether, and how, content may be reused or commercialised.

Liability and disclaimers

Liability disclaimers are commonly included in GenAI T&Cs. Most providers stipulate that they do not guarantee the accuracy or reliability of outputs and disclaim responsibility for any harm caused by reliance on the tool. The Law Society of England and Wales emphasises that lawyers must remain accountable for the content they submit or rely on, even when AI is involved in its creation. This underscores the necessity for professional oversight and thorough verification when utilising GenAI, especially in regulated industries.

Acceptable use policies

Acceptable use policies are also prominent in provider terms. These clauses typically prohibit the use of the tool for unlawful purposes, including the creation of harmful, deceptive, or discriminatory content. Violations of these clauses may result in account termination and/or reputational damage. It is crucial that organisations understand not only what the tools can do but also what they are permitted to do within legal and ethical boundaries.

Model changes

GenAI T&Cs often include provisions for model changes, versioning, and service continuity. Providers may update their models or restrict access at any time, potentially affecting consistency or reproducibility. This dynamic nature of service delivery necessitates close attention to version controls and documentation, particularly when GenAI is integrated into operational or legal workflows.

Insurance

It is important to assess whether a GenAI provider offers adequate liability coverage and indemnity provisions. This is crucial for safeguarding the organisation in case the GenAI produces incorrect results. It is essential to review any indemnity clauses to determine whether they provide protection against infringement claims made by third parties (Talladoros, 2024), especially since GenAI models can sometimes generate outputs that inadvertently infringe upon existing intellectual property rights.

Managers should also examine if the insurance includes coverage for data breaches or privacy violations resulting from the use of GenAI tools. Given the sensitive nature of data processed by GenAI systems, this aspect is particularly important. If there is no coverage for data breaches, other insurance policies, such as cyber insurance, should be considered (O'Brien, 2024).

In certain instances, GenAI providers may require users to maintain specific types of insurance, such as professional liability or cyber insurance (Koley Jessen, 2024), to mitigate potential financial losses from claims related to the use of GenAI services.

Whether appropriate cover is in place, or needs to be obtained, should be assessed alongside a review of any exclusions in the insurance policy that deny coverage for specific scenarios, such as incidents arising from misuse of the GenAI systems.

7 Acceptable use policies of GenAI tools and providers

GenAI provider standard terms and conditions can be lengthy documents.

While these should be reviewed fully before any GenAI tools are used within organisations, there are some key aspects that require particular attention. Within these, Acceptable Use Policies (AUPs) specify permissible and prohibited uses of GenAI tools to prevent misuse, protect intellectual property, and ensure data security. They require users to comply with laws, maintain confidentiality, and avoid harmful activities.

AUPs typically include:

- Prohibited uses: Restrictions on using GenAI outputs, such as not training other AI models.

- Sensitive information: Restrictions on sharing passwords or personally identifiable information.

- User conduct: Users must engage lawfully and respectfully, avoiding illegal activities and harmful purposes.

- Misuse of AI outputs: Restrictions on manipulating AI outputs to misrepresent facts or deceive audiences.

AUPs also cover compliance with laws, data protection, privacy regulations, and security (Kostadinov, 2014). Managers should review AUPs, ensure organisational awareness, and include these provisions in training sessions if necessary.

The following checklist highlights some of the core clauses within AUPs that need reviewing by managers and/or organisations considering GenAI tool use.

- Data usage

  - Does the provider retain your input data (e.g. prompts, uploads)?

  - Is your data used for future training or improvement of the model?

- Confidentiality

  - Can you opt out of data retention?

  - Are there risks if the content includes sensitive or client-specific information?

- Intellectual property

  - Who owns the GenAI output?

  - Can you use the content commercially or modify it freely?

  - Can you share it without attribution? Or is there an attribution requirement?

  - If there is no attribution requirement, is the content subject to a licence arrangement?

- GenAI use restrictions

  - Are there any prohibited activities (e.g. use in legal, medical, or financial advice)?

  - Does the provider restrict high-risk applications?

- Model reliability

  - Are you expected to verify and supervise the content?

  - Is it made clear that the provider is not liable for inaccurate or harmful outputs?

- Limitation of liability

  - Does the GenAI provider disclaim, limit, or exclude responsibility for errors?

  - Are you (the user) solely responsible for how the content is used?

- GenAI service and model updates

  - Can the provider change the model or withdraw features with little notice?

  - How might this affect your workflows?

- Transparency clauses

  - Are you required to disclose when GenAI has been used in content creation?

  - Are version numbers and dates of GenAI outputs important for compliance?

Why it is important to read key contractual clauses

Discussion

Reading terms and conditions of GenAI providers is important, particularly if you are considering using GenAI tools for the work of your organisation, or to support some of the work of your organisation.

Session 3: Time for a GenAI policy? – 60 minutes

8 Designing a GenAI use policy

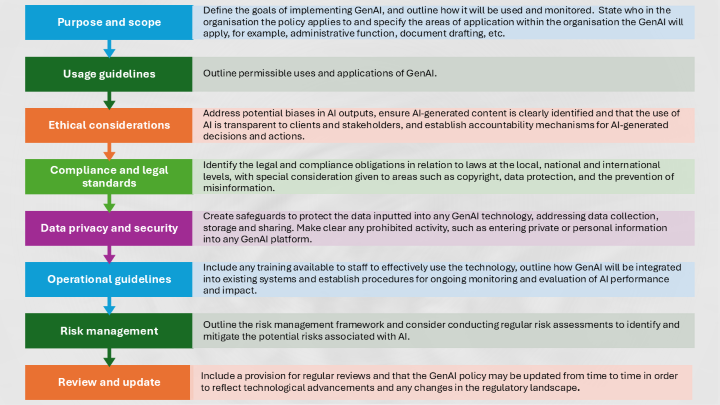

Part of any organisation-wide implementation should include designing a GenAI use policy for your organisation.

Having a GenAI policy can help to leverage the effectiveness of these tools, outline what is and is not acceptable use within your organisation, and minimise potential risks. The adoption of a policy could directly address accountability concerns within your organisation.

What should a GenAI Policy include?

Thinking about your organisation, its needs, and potential opportunities for GenAI tools, what do you think a GenAI policy should include?

Make a note of at least five areas that the policy should cover.

A comprehensive GenAI policy will outline straightforward directions for its application. A well-rounded policy will regulate how your employees use GenAI within their work, help to prevent violations and/or legal compliance issues, assist with protecting sensitive data and will address ethical concerns (Verduyn, 2025).

Having a detailed policy will provide a point of reference for anyone working with or within your organisation who is using or expected to be using GenAI tools. It will also outline the organisation’s position in respect of GenAI tools, how to use them, when to use them, and the strategies for managing their risks.

GenAI policies should include details that cover the following aspects:

- Your protocols for data protection.

- Definitions of how sensitive data should be handled and processed within GenAI tools.

- Guidelines for data encryption.

- Guidelines for data anonymisation.

- Data access controls to manage and mitigate potential breaches of data protection and privacy regulations (ISACA, 2023).

- Legal compliance procedures for GDPR requirements.

- Procedures to validate and verify GenAI outputs (Dietzen, 2024).

- Strategies and details for identifying and mitigating risks with GenAI adoption within the organisation (ISACA, 2023). This may include:

  - Scenario planning.

  - Contingency measures.

  - Periodic risk assessments.

- Identification of a manager in the organisation with ownership of, and responsibility for, the GenAI Policy.

- Specific details of approved GenAI tools.

- Terms of use of the GenAI tools adopted by the organisation.

- Professional development and/or training needs for those using approved GenAI tools.

- Periodic audit details.
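
Some organisations may find it useful to hold parts of a policy, such as the named owner, the list of approved tools, and the training requirement, in a structured form that can be checked automatically. The Python sketch below is one possible illustration under stated assumptions: the class `GenAIPolicy`, its fields, and the tool names are hypothetical, and a real policy would cover far more than this.

```python
from dataclasses import dataclass, field


@dataclass
class GenAIPolicy:
    """A hypothetical, heavily simplified representation of a GenAI policy."""

    owner: str                                            # manager responsible for the policy
    approved_tools: set[str] = field(default_factory=set) # tools approved for use
    training_required: bool = True                        # must users complete training first?

    def may_use(self, tool: str, has_completed_training: bool) -> bool:
        """Return True only if the tool is approved and any training requirement is met."""
        if tool not in self.approved_tools:
            return False
        return has_completed_training or not self.training_required


# Hypothetical policy; "ExampleGPT" and "OtherTool" are not real products.
policy = GenAIPolicy(owner="Head of Operations", approved_tools={"ExampleGPT"})

print(policy.may_use("ExampleGPT", has_completed_training=True))   # approved and trained
print(policy.may_use("ExampleGPT", has_completed_training=False))  # approved, not trained
print(policy.may_use("OtherTool", has_completed_training=True))    # not an approved tool
```

The design choice here is simply that the written policy remains the authoritative document, while a structured summary of it makes periodic audits easier because approvals and training status can be checked consistently.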

A policy should include specific details relating to the professional development and training needs of those who use, or are expected to use, GenAI tools. An understanding of how to use GenAI tools is required in order to use them safely, responsibly, and ethically, and to maximise their benefits to the organisation.

9 Training on AI prompt techniques

Training on appropriate prompt techniques for the specific tools your organisation is implementing is a vital initial step before widely implementing GenAI. This will help your organisation to effectively generate outputs that are precise and most relevant for the required tasks (Saifan, 2024).

GenAI literacy is an essential element of any implementation process. Training should include aspects such as risks and limitations (Saifan, 2024) of GenAI tools (including copyright, hallucinations, data use, data protection, quality and reliability of GenAI outputs, prompt engineering) and should explicitly address the areas listed within your organisation’s GenAI Policy.

You can download a PDF version of this infographic as a takeaway.

10 Conclusion

Having studied this course, you now know more about the considerations that need to be made before adopting and implementing GenAI tools within an organisation.

This course has outlined some of the things that individuals and organisations need to think about to mitigate and manage risks posed by GenAI adoption, while supporting efficiency and responsible use. This includes assessing what GenAI is being used for, and why, and monitoring its usage as part of a comprehensive GenAI policy.

Having appropriate policies in place that detail GenAI use within an organisation is particularly important given the fragmented legal and regulatory landscape within the UK at present.

If you are considering using GenAI in your organisation, we suggest you look at the fifth course, Ethical and responsible use of Generative AI, which considers the ethical uses and considerations of AI tools.

For individuals responsible for managing organisations and who want to know more about the risks and concerns posed by aspects of GenAI tools and their use in organisations, we recommend the sixth course, Navigating risk management.

For individuals interested in finding out more about using GenAI, we would recommend the eighth course, Preparing for tomorrow: horizon scanning and AI literacy, which considers the future of GenAI.

Further reading

Want to know more?

Research and understanding of the risks and mitigations posed by GenAI is ongoing. The legal and regulatory framework is complex and evolving, and updated legal provisions may have been enacted since these course materials were developed.

If you want to look further into any of the risks covered in this course, you may wish to explore resources that can help you to draft GenAI policies.

Have a look at the Orrick GenAI Policy Builder or the OECD Working Paper on Initial Policy Considerations for generative artificial intelligence.

Moving on

When you are ready, you can move on to the Course 7 quiz.

References

Brown, J and Jones, A (2021) 'Artificial Intelligence: Promises, Risks, and Regulation', Harvard Kennedy School. Available at: https://www.hks.harvard.edu/sites/default/files/2024-12/24_Barroso_Digital_v3.pdf (Accessed 25 May 2025).

Calls9 (2025) Explore Generative AI use across 10 industries. Available at: https://www.calls9.com/blogs/generative-ai-use-cases-across-10-industries (Accessed 25 May 2025).

Department for Science, Innovation and Technology (2023) 'A Pro-Innovation Approach to AI Regulation', UK Government. Available at: https://www.gov.uk/government/publications/ai-regulation-a-pro-innovation-approach (Accessed 25 May 2025).

Dhar, J (2025) 'GenAI Best Practices Are Starting to Emerge', Forbes. Available at: https://www.forbes.com/sites/juliadhar/2025/03/19/genai-best-practices-are-starting-to-emerge/ (Accessed 25 May 2025).

Dietzen, L (2024) 'Why your organisation needs to craft a comprehensive GenAI policy now', Today's General Counsel. Available at: https://todaysgeneralcounsel.com/why-your-organization-needs-to-craft-a-comprehensive-genai-policy-now/ (Accessed 25 May 2025).

Information Commissioner's Office (n.d.) Rights related to automated decision making including profiling. Available at: https://ico.org.uk/for-organisations/uk-gdpr-guidance-and-resources/individual-rights/individual-rights/rights-related-to-automated-decision-making-including-profiling/ (Accessed 25 May 2025).

ISACA (2023) Considerations for a Generative AI Policy. Available at: https://www.isaca.org/-/media/files/isacadp/project/isaca/resources/ebooks/considerations-for-a-generative-ai-policy-1023.pdf (Accessed 25 May 2025).

Koley Jessen (2024) 6 Contract Considerations for Generative Artificial Intelligence Providers. Available at: https://www.koleyjessen.com/insights/publications/contract-considerations-generative-ai-providers (Accessed 25 May 2025).

Kostadinov, D (2014) 'The essentials of an acceptable use policy', Infosec. Available at: https://www.infosecinstitute.com/resources/management-compliance-auditing/essentials-acceptable-use-policy/ (Accessed 25 May 2025).

Lolfing, N (2023a) 'Generative AI and GDPR Part 1: Privacy considerations for implementing GenAI use cases into organizations', Bird & Bird. Available at: https://www.twobirds.com/en/insights/2023/global/generative-ai-and-gdpr-part-1-privacy-considerations (Accessed 25 May 2025).

Lolfing, N (2023b) 'Generative AI and GDPR Part 2: Privacy considerations for implementing GenAI use cases into organizations', Bird & Bird. Available at: https://www.twobirds.com/en/insights/2023/global/generative-ai-and-gdpr-part-2-privacy-considerations (Accessed 25 May 2025).

Moss, G (2025) 'Being Transparent with the AI Use: How to Cite, Disclose, and Document', University of Saskatchewan. Available at: https://teaching.usask.ca/articles/2025-03-03-being-transparent-with-ai-use-how-to-cite-disclose-and-document.php (Accessed 25 May 2025).

O'Brien, K (2024) 'Cyber Insurance and AI: Are you fully covered and Secure?', Compass IT Compliance. Available at: https://www.compassitc.com/blog/cyber-insurance-ai-are-you-fully-covered-and-secure (Accessed 25 May 2025).

Practical Law (2024) AI in the workplace. Available at: https://uk.practicallaw.thomsonreuters.com/Document/Ieb1b49a4256d11ee8921fbef1a541940/View/FullText.html?transitionType=SearchItem&contextData=(sc.Search)#co_anchor_a366708 (Accessed 25 May 2025).

Saifan, D (2024) Upskilling the Workforce: How can businesses leverage GenAI's vast potential and efficiency? Available at: https://www.pwc.com/m1/en/media-centre/articles/upskilling-the-workforce-how-can-businesses-leverage-genais.html (Accessed 25 May 2025).

Talladoros, M (2024) 'GenAI Platforms: their standard terms and conditions', Taylor Wessing. Available at: https://www.taylorwessing.com/en/insights-and-events/insights/2024/06/aq-genai-platforms (Accessed 25 May 2025).

Thaler v Comptroller-General of Patents, Designs and Trademarks (2023). Available at: https://www.supremecourt.uk/cases/uksc-2021-0201 (Accessed 25 May 2025).

Thomson Reuters (2024) 7 questions to consider before using GenAI in your work. Available at: https://www.thomsonreuters.com/en/insights/articles/7-questions-to-consider-before-using-genai-in-your-work (Accessed 25 May 2025).

Verduyn, M (2025) 'How to develop a Generative AI (ChatGPT) Policy + Free Template', Academy to Innovate HR. Available at: https://www.aihr.com/blog/generative-ai-policy/ (Accessed 25 May 2025).

Acknowledgements

Grateful acknowledgement is made to the following sources:

Every effort has been made to contact copyright holders. If any have been inadvertently overlooked the publishers will be pleased to make the necessary arrangements at the first opportunity.

Important: *** against any of the acknowledgements below means that the wording has been dictated by the rights holder/publisher, and cannot be changed.

Course banner 560835: Summit Art Creations / Shutterstock

560837: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560840: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560842: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

560843: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

561018: Created by OU artworker using OpenAI. (2025). ChatGPT version o4

561020: Created by OU artworker using OpenAI. (2025). ChatGPT version o4