Study Session 15 Monitoring and Evaluation

Introduction

Monitoring and evaluation (M&E) is a project management technique that is an integral part of any programme cycle. It includes the gathering and analysis of information, and the reporting of processes and outputs. In this study session, you will learn how M&E can be used to assess progress made in sanitation and waste management.

Any M&E system needs to ensure that the programme implementation is carried out as planned and is achieving the aims and objectives to an acceptable quality, and in the planned time period. The system should also provide assurance that sustainability and management issues are being addressed and that supporting organisations such as local community groups are in place and functioning.

The World Bank (2004) summarises the advantages of M&E as ‘better means for learning from past experience, improving service delivery, planning and allocating resources and demonstrating results as part of accountability to key stakeholders’.

Learning Outcomes for Study Session 15

When you have studied this session, you should be able to:

15.1 Define and use correctly each of the terms printed in bold. (SAQs 15.1, 15.2 and 15.4)

15.2 Explain the difference between monitoring and evaluation. (SAQ 15.2)

15.3 Describe the purpose of M&E and explain why it is important. (SAQ 15.3)

15.4 Identify the data and methods that can be used to monitor and evaluate the performance of urban sanitation and waste management schemes. (SAQ 15.4)

15.1 Introduction to monitoring and evaluation

Monitoring and evaluation are critically important aspects of planning and management of any programme. Monitoring is the systematic and continuous assessment of the progress of a piece of work over time, in order to check that things are going to plan. Evaluation is an assessment of the value or worth of a programme or intervention and the extent to which the stated objectives have been achieved. Evaluation is not continuous and usually takes place periodically through the course of the programme or after completion. Together, monitoring and evaluation are a set of processes designed to measure the achievements and progress of a programme. The two terms are closely connected and are frequently combined with the result that the abbreviation M&E is widely used.

A town health office is interested in finding out how many families practise solid waste sorting and reuse at household level. Is this monitoring or evaluation?

This is monitoring because it is an on-going activity concerned only with counting the number of something, in this case how many families were sorting their waste.

15.1.1 What is M&E?

Programmes, projects and other interventions can be described in five stages, as shown in Figure 15.1. The inputs, on the left, are the resources (funding, equipment, personnel) and activities that are undertaken. The results, on the right, are the outputs, outcomes and impacts (see Box 15.1).

An effective M&E system measures the inputs, outputs, outcomes and impacts resulting from implementation of a programme. To provide useful knowledge these results need to be compared with the situation before the programme started, which requires baseline data. Baseline data gives information about the situation at the start of an intervention (the baseline position) and provides a point of comparison against which future data, collected as part of a monitoring process, can be compared. Progress can be evaluated by comparing the two.
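The comparison with baseline data can be sketched as a short calculation. The example below is a minimal illustration in Python; the function name, the indicator and all figures are hypothetical and are not taken from any survey.

```python
def change_from_baseline(baseline_value, current_value):
    """Return the absolute and relative change of an indicator since the baseline."""
    absolute_change = current_value - baseline_value
    # Relative change is undefined when the baseline value is zero.
    if baseline_value == 0:
        relative_change = None
    else:
        relative_change = absolute_change / baseline_value * 100
    return absolute_change, relative_change


# Hypothetical indicator: number of households with access to improved sanitation.
baseline_households = 400  # counted at the start of the programme
current_households = 550   # counted in a later monitoring survey

absolute, relative = change_from_baseline(baseline_households, current_households)
print(f"Change since baseline: {absolute} households ({relative:.1f}%)")
```

Without the baseline figure, the later count of 550 households could not be interpreted as progress or decline.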

Box 15.1 Outputs, outcomes and impacts

There are several words used in M&E that can be confused. They sound similar but have important differences in their meaning.

- Outputs – the things produced by the project/programme/intervention. In sanitation and waste management, examples could include tangible products like new latrines or waste transfer stations or could be events and activities like running a training workshop for composting workers.

- Outcomes – the effects of the intervention, usually in the short to medium term. Some examples, following those above, could be the number of people in a kebele who now have access to improved sanitation or the number of people attending the training workshop.

- Impacts – long-term effects and consequences. Examples could be a fall in the incidence of diarrhoeal disease or a reduction in the amount of waste sent to landfill.

It is very important to plan monitoring activities during the earliest stages of project development. They should be integrated into project activities and not added on as an afterthought. Monitoring requires regular and timely feedback in the form of reports from implementers to project managers so they can keep track of progress. These reports provide information about activities and what has been achieved in terms of outputs. They also include financial reports that give information on budgets and expenditure. With this information, managers can assess progress and plan the next steps for their project.

15.1.2 Why is M&E so important?

A well-managed M&E system will allow stakeholders to:

- Track progress: M&E assesses inputs (expenditure), outputs and outcomes, which enables managers to track progress towards achieving specific objectives.

- Measure impact: M&E reduces guesswork and possible bias in reporting results by asking questions such as: What is the impact of the programme? Are the expected benefits being realised? Is sanitation improving? Are waste recovery rates increasing?

- Increase accountability: M&E can provide the basis for accountability if the information gathered by the M&E process is reported and shared with users and other stakeholders at all levels.

- Inform decision making: M&E provides evidence about the successes and failures of current and past projects that planners and managers need to make decisions about future projects. It should also encourage reflection on lessons learned in which managers ask themselves questions like ‘what worked well in this project?’ and ‘what can we do better next time?’.

- Encourage investment: a credible M&E system builds trust and confidence from government and donors, which will increase the possibilities of further investment.

- Build capacity: a sound M&E system supports community participation and responsibility. It encourages the user communities to look regularly at how well their sanitation and waste schemes are working, what changes need to take place in sanitation and waste behaviours, what health benefits are resulting and what more needs to be done. It enables a community to build its own capacity, recognise its own successes and record them regularly.

Reporting on monitoring activity is essential because otherwise the information cannot be used. It is no use collecting data and then filing it away without sharing it. As noted above, one of the reasons for undertaking M&E is to inform decision makers and enable lessons to be learned. For that benefit to be realised, decision makers need to be provided with the information in a timely way.

15.2 Monitoring in practice

As you have seen, a key part of monitoring is the gathering of data.

15.2.1 Data types

Data can be classified into two types. Factual information based on measurement is called quantitative data. Information collected about opinions and views is called qualitative data.

Suggest examples of quantitative and qualitative data that could be collected about open defecation in a kebele.

Collecting data about the change in the proportion of people practising open defecation is an example of quantitative data. An example of qualitative data could be assessing people’s views about the reduction of open defecation. You may have thought of other examples.

If you look back to Figure 5.1 in Study Session 5, you will find an example of quantitative monitoring data. The WHO/UNICEF Joint Monitoring Programme data for sanitation coverage is compiled from monitoring programmes in countries all over the world.

15.2.2 Key features of monitoring

Monitoring is a continuous or periodic review of project implementation focusing on inputs, activity work schedules and outputs. It should be designed to provide constant feedback to ensure effective (the extent to which the purpose has been achieved or is expected to be achieved) and efficient (to what degree the outputs achieved are derived from well organised use of resources) project performance. Monitoring should allow the timely identification and correction of deviations in a programme. It should provide early warning or the opportunity to remedy undesirable situations before damage occurs or gets worse.

Monitoring consists of three related activities, which are:

- Collection of data and information: for example, to monitor the proper use of sanitation facilities, data can be collected from various sources such as regular household visits and observation of the amount and frequency of faecal matter outside the latrine. Information can be gathered from community questionnaires and reports from focus groups.

- Information analysis: data should be analysed in terms of place, time and who was surveyed in order to summarise the results. If the data suggests there are problems, appropriate management action needs to be taken.

- Action based on monitoring feedback: if, for example, after analysing monitoring data on household waste management, you find most households do not separate their wastes into organic and non-organic types, there are three types of action you can take:

- Corrective actions – you could provide practical demonstrations to show people how to separate household wastes and how to store them properly.

- Positive reinforcement – if some households practise the required behaviour and are separating their waste they can be used as a good example to others.

- Preventive actions that will stop the problem arising in future – for example, arranging an education and promotion programme for the community. This could encourage behaviour change by showing the impact of non-compliance and the benefits to the wider community and the environment if wastes are separated at source. Peer pressure can be an important factor in communities. Highlighting the negative impact of non-compliance to individuals or communities can be a useful approach.
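The three monitoring activities described above (collecting data, analysing it by place, and acting on the results) can be sketched in a few lines of Python. This is only an illustration: the visit records, the 50% target and the rule linking a result to a type of action are all assumptions made for the example.

```python
from collections import defaultdict

# Hypothetical household visit records: (kebele, household separates its waste?)
visits = [
    ("Kebele 01", True), ("Kebele 01", False), ("Kebele 01", False), ("Kebele 01", False),
    ("Kebele 02", True), ("Kebele 02", True), ("Kebele 02", True), ("Kebele 02", False),
]

TARGET_PERCENT = 50  # assumed target for households separating waste


def summarise_by_place(records):
    """Return the percentage of visited households separating waste in each place."""
    counts = defaultdict(lambda: [0, 0])  # place -> [households separating, households visited]
    for place, separates in records:
        counts[place][1] += 1
        if separates:
            counts[place][0] += 1
    return {place: separating / visited * 100
            for place, (separating, visited) in counts.items()}


def suggest_action(percent, target=TARGET_PERCENT):
    """Map a monitoring result to a type of follow-up action (illustrative rule only)."""
    if percent < target:
        return "corrective action, e.g. practical demonstrations"
    return "positive reinforcement, e.g. use as a good example to others"


for place, percent in summarise_by_place(visits).items():
    print(f"{place}: {percent:.0f}% separating waste -> {suggest_action(percent)}")
```

In practice, the choice of action would depend on local judgement and not on a single threshold.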

Monitoring should be a continuous process of regularly and systematically reviewing achievements, performance and progress towards the planned objectives of a programme. This will require a schedule for monitoring activities that should be prepared at the start and reviewed regularly. For example, a typical schedule for monitoring at the Woreda Health Office level would be part of an annual plan and might include:

- monthly field visits

- monthly, quarterly, annual and biannual reports

- annual and biannual review meetings.

15.3 Indicators

An effective monitoring programme needs precise and specific measures that can be used to assess progress towards achieving the intended goals. These are called indicators. An indicator is something that can be seen, measured or counted and that provides evidence of progress towards a target. Some examples of basic monitoring indicators for urban sanitation and waste management are:

- number of households with unimproved latrines

- number of schools with improved latrines

- number of people using communal latrines

- number of people using public latrines

- number of public toilets constructed

- number of Health Extension Workers trained in solid waste recycling and reuse

- number of institutions with improved VIP latrines and handwashing facilities

- number of households with access to improved sanitation facilities

- number of schools with access to adequate sanitation

- number of community members who received education and information on the safe handling and disposal of wastes

- number of community members trained on safe handling and disposal of wastes

- number of community meetings held on safe handling and disposal of wastes.

These are all examples of indicators that could be used to monitor progress towards specific programme targets.

15.3.1 Key performance indicators

The terms ‘performance indicator’ and key performance indicator (KPI) are often used by organisations to describe measures of their performance, especially in relation to the service they provide and how well they have met their strategic and operational goals. KPIs can be measures of inputs, processes, outputs, outcomes or impacts for programmes or strategies. When supported by good data collection, analysis and reporting, they enable progress to be tracked, achievements to be demonstrated and corrective action to be taken. Participation of key stakeholders in defining KPIs is important because they are then more likely to understand the KPIs and use them to inform management decisions.

KPIs can be used for:

- setting performance targets and assessing progress toward achieving them

- identifying problems to allow corrective action to be taken

- indicating whether an in-depth evaluation is really needed.

Sometimes too many indicators may be defined without accessible and reliable data sources. This can make the evaluation costly and impractical. There is often a trade-off between picking the optimal or desired indicators and having to accept indicators which can be measured using existing data.

The Ethiopian WaSH M&E Framework and Manual (FDRE, n.d.) uses the following KPIs for sanitation and hygiene:

- number and percentage of health facilities with water and latrines with water

- percentage of households with a functioning latrine meeting minimum standards

- percentage of households with a functioning handwashing facility

- percentage of people washing hands after defecation.

The percentages are calculated from the data collected during monitoring surveys. For example:

$$
\text{Percentage of households with a functioning latrine} = \frac{\text{Number of households with a functioning latrine}}{\text{Total number of households}} \times 100
$$
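The percentage calculation above can be worked through in a few lines of Python. The function name and the survey figures are hypothetical and are used only to illustrate the arithmetic.

```python
def percentage_with_functioning_latrine(households_with_latrine, total_households):
    """Percentage of households with a functioning latrine."""
    if total_households <= 0:
        raise ValueError("total_households must be greater than zero")
    return households_with_latrine / total_households * 100


# Hypothetical survey result: 180 of the 240 households surveyed have a functioning latrine.
print(percentage_with_functioning_latrine(180, 240))  # 75.0
```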

The advantage of KPIs is that they provide an effective means to measure progress toward objectives. They can also make it easier to make comparisons. For example, different approaches to a common problem can be compared to find out which approach works best, or results from the same intervention in a number of districts can be compared to find out what other factors affected the outcomes. It is important for KPIs to be carefully defined so they can be applied consistently by different organisations (Jones, 2015). For example, the definition of ‘functioning latrine meeting minimum standards’ in the KPIs listed above, should specify exactly what the minimum standards are. Without precise definitions, survey data could be collected and interpreted by different people in different ways which would make comparisons meaningless and useful analysis and evaluation impossible.
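To see why precise definitions matter, the sketch below applies two different readings of ‘functioning latrine meeting minimum standards’ to the same survey records. The records, field names and criteria are invented for illustration; they are not the minimum standards defined in the national framework.

```python
# Hypothetical survey records; field names and criteria are illustrative only.
households = [
    {"id": 1, "latrine_in_use": True,  "has_slab": True,  "has_superstructure": True},
    {"id": 2, "latrine_in_use": True,  "has_slab": False, "has_superstructure": True},
    {"id": 3, "latrine_in_use": True,  "has_slab": True,  "has_superstructure": False},
    {"id": 4, "latrine_in_use": False, "has_slab": True,  "has_superstructure": True},
]


def loose_definition(household):
    """One surveyor's reading: any latrine that is in use counts."""
    return household["latrine_in_use"]


def strict_definition(household):
    """Another surveyor's reading: in use, with a slab and a superstructure."""
    return (household["latrine_in_use"]
            and household["has_slab"]
            and household["has_superstructure"])


def kpi_percentage(records, meets_standard):
    """Percentage of records that satisfy the given definition."""
    meeting = sum(1 for record in records if meets_standard(record))
    return meeting / len(records) * 100


print(kpi_percentage(households, loose_definition))   # 75.0
print(kpi_percentage(households, strict_definition))  # 25.0
```

The same four households give 75% under one reading and 25% under the other, so results from the two surveyors could not be meaningfully compared.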

15.4 Evaluation

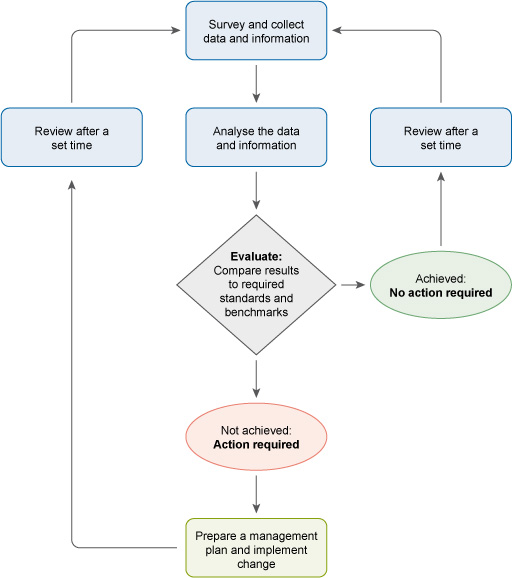

Evaluation should answer why and how programmes have succeeded or failed and allow desired changes to be planned for improvements in implementation. It is an activity that should allow space to reflect upon and judge the worth (value) of what is or has been done. Evaluation can be seen as a cyclical process as shown in Figure 15.2. You should read this diagram by starting at the top and then following the arrows down the middle. From the top, data are collected, then analysed and then evaluated. If the aims of the programme are being achieved (the arrow to the right), no action is required but results should be reviewed and feed into the next evaluation if there is one. However, if the aims were not achieved (the arrow pointing down), action is required in the form of review of the management plan and changes to the way the programme is implemented. This will then require further evaluation in due course (the arrow going back up to the top) to assess the success of the revised programme.

Unlike monitoring, which is a regular activity, evaluation is conducted only when there are evaluation questions that need to be answered. There is no fixed schedule for this but it may happen:

- mid-term

- at the end of a project

- three to five years after the project is completed (to assess the total impact).

Evaluation questions may arise from monitoring data or any other observations that lack in-depth information to explain observed levels of performance or effectiveness. In such cases, evaluation provides useful data on how and why programmes succeed or fail.

Evaluation as an activity may be related to processes, outcomes or impacts of a programme.

Process evaluation, as the name suggests, looks at process questions and can give insight into whether the project is on track or not, and why. At the end of a project, it would involve a review of all the project processes, from start to finish. Process evaluation aims to explain why things have happened in the way they have and answers questions such as:

- Was the intervention implemented according to expectations and the agreed management plan?

- Did the intervention meet the required standards of quantity and quality?

- Can the reasons for the success or failure of the intervention be assessed?

Outcome evaluation is the assessment of what the intervention has achieved. For example, if the intended outcome was to reduce open defecation in a target population, evaluation questions could be:

- Is there a change in the level of open defecation by the target population? And to what extent?

- What contribution did the project make to the observed level of change?

- Was the change that was attributable to the project adequate to meet project goals?

In most cases, outcomes or impacts are influenced by more than one factor and by other changes in a situation. For this reason, an outcome evaluation needs to be designed so that it is possible to estimate the difference between the current outcome level and the level that would have been expected if the intervention had not been in place.
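One common way of making this estimate, which is not described in this study session, is to compare the change in the target population with the change over the same period in a similar population that did not receive the intervention. This is often called a difference-in-differences comparison. The sketch below assumes that such a comparison area exists and that it would have changed in the same way as the target area without the intervention; all figures are hypothetical.

```python
def difference_in_differences(target_before, target_after,
                              comparison_before, comparison_after):
    """Estimate the change attributable to the intervention.

    The change in the comparison area stands in for what would have
    happened in the target area without the intervention.
    """
    change_in_target = target_after - target_before
    change_in_comparison = comparison_after - comparison_before
    return change_in_target - change_in_comparison


# Hypothetical percentages of people practising open defecation.
estimate = difference_in_differences(
    target_before=40, target_after=15,
    comparison_before=38, comparison_after=30,
)
print(estimate)  # -17
```

Here open defecation fell by 25 percentage points in the target area, but 8 of those points also occurred in the comparison area, so only a fall of 17 percentage points is attributed to the intervention.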

Impact evaluation is the systematic identification of the effects (positive or negative and intended or not) on individuals, households or communities caused through implementation of a programme or project (The World Bank, 2004). Impact evaluations can vary in scale. They may be large surveys of target populations that use baseline data and then a follow-up survey to compare before and after. They could also be small-scale rapid assessments where estimates of impact could be obtained from a combination of group interviews, focus groups, case studies and available secondary data.

Impact evaluation can be used to:

- measure impacts of a programme and distinguish these from the influence of other external factors

- inform management decisions on whether to modify, expand or abolish programmes

- learn lessons for improving the design and management of future activities

- compare the effectiveness of alternative interventions

- help to clarify whether costs for a programme or activity are justified.

The advantages of impact evaluation are that it provides estimates of the magnitude of outcomes and analyses the impacts for different demographic groups, households or communities over time. It should show the extent of the difference that a programme is making and allow plans for improvement to be made. However, it needs competent managers and some approaches can be very expensive and time-consuming.

One year after a hygiene promotion programme has ended, the Regional Health Bureau is interested to see if child health has improved in the woredas where the programme was implemented. Is this monitoring or evaluation?

This is evaluation because it is concerned with the impacts of a particular programme. However, monitoring is also involved because the data collection required for the evaluation would probably have come from regular monitoring reports.

15.5 Tools for monitoring and evaluation

There is a wide range of tools available which can be used to generate information for monitoring and evaluation purposes.

Think back to Study Session 3 and list the main methods that can be used to gather data from communities and individuals about their access to and use of sanitation and waste management services.

The main methods are:

- interviews with individuals and households

- observation in public places and in homes

- community discussions (Figure 15.3)

- focus groups (Figure 15.4)

- questionnaires that can be completed by large numbers of people or used to structure interviews.

Large-scale monitoring programmes can generate enormous amounts of data. Collating the data and organising it in a way that is meaningful for evaluation or other purposes is a significant task. This is the purpose of a management information system (MIS). An MIS is a computer-based system that provides tools for collecting, organising and presenting information so that it is useful for managers and other stakeholders.

In Ethiopia, there are two national monitoring systems that are relevant to urban sanitation and waste management. The Health Management Information System/Monitoring and Evaluation (HMIS/M&E) is used to record data from routine services and administrative records across all woredas and all health facilities throughout the country (MoH, 2008; Hirpa et al., 2010).

In the WASH sector, the National WASH Inventory (NWI) is a country-wide monitoring programme that was initiated in 2010/2011. Its purpose is to provide a single comprehensive set of baseline data about water, sanitation and hygiene provision for the whole country. The early phases of data collection used paper-based surveys and questionnaires but later phases have moved to collecting data using smartphones (as long as there is service), which is much quicker and more efficient. The WASH MIS has been developed to collect monitoring data and to enable production of reports from national to woreda levels.

There is a lack of coordination between the HMIS and WASH MIS and this is recognised as a problem. In addition, at present, there is greater emphasis on water supply than there is on sanitation and hygiene, and currently there is no national monitoring of solid waste management. Recent developments such as the One WASH National Programme, which you read about in Study Session 1, are signs of the move towards more collaborative and integrated working in the sector which will bring many benefits.

Summary of Study Session 15

In Study Session 15, you have learned that:

- Monitoring and evaluation (M&E) activities are a key management component of interventions and programmes. Monitoring is systematic and continuous assessment. Evaluation is an assessment of the value of a programme and the extent to which its objectives have been achieved.

- Baseline data should be collected at the start of a programme so that it can be compared with data collected later.

- M&E is intended to track progress, measure impact, increase accountability, inform decision making, encourage investment and build capacity.

- The main features of monitoring activities are collecting data, analysing information and acting on that information.

- Effective monitoring needs careful identification and definition of an appropriate number of measurable indicators.

- Evaluation is a cyclical process that should be regularly reviewed and repeated. It can be undertaken with respect to processes, outcomes or impacts.

- There are several tools for data collection for monitoring including surveys, observation, interviews, community discussions and focus groups.

Self-Assessment Questions (SAQs) for Study Session 15

Now that you have completed this study session, you can assess how well you have achieved the Learning Outcomes by answering these questions.

SAQ 15.1 (tests Learning Outcome 15.1)

Match the following words to their correct definitions.

1. quantitative data

2. impacts

3. process evaluation

4. baseline data

5. qualitative data

6. outcomes

7. indicator

8. impact evaluation

9. outputs

Match each of the previous list items with an item from the following list:

a. identifying the effects on individuals, households or communities caused through implementation of a programme or project

b. a way of determining if a programme is on track to meet its aims

c. the things produced or objectives achieved by a project or programme

d. something that can be seen, measured or counted, providing evidence of progress towards a target

e. data collected at the start of an intervention to provide a point of comparison against which future data can be compared

f. effects of an intervention, usually in the short to medium term

g. the long-term effects of a project or programme

h. measurable factual data

i. information collected about views and opinions

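Answer

- 1 (quantitative data) = h

- 2 (impacts) = g

- 3 (process evaluation) = b

- 4 (baseline data) = e

- 5 (qualitative data) = i

- 6 (outcomes) = f

- 7 (indicator) = d

- 8 (impact evaluation) = a

- 9 (outputs) = c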

SAQ 15.2 (tests Learning Outcomes 15.1 and 15.2)

Which of the following statements are false? In each case explain why it is incorrect.

- A. Collecting data is an important part of monitoring.

- B. Monitoring is an activity that should be done occasionally during a project’s lifetime.

- C. Monitoring will help you decide if any corrective action is needed.

- D. Evaluation can answer questions about project process, outcomes and impacts.

- E. Evaluation should always be left to the end of a project so that final outcomes are known.

Answer

B is false. Monitoring should be a continuous process, not just an occasional one.

E is false. Evaluation could, and probably should, be done at the end of a project but it is also important to evaluate at other times.

SAQ 15.3 (tests Learning Outcome 15.3)

Give three reasons for incorporating plans for M&E during the early stages of a project’s development.

Answer

Three possible reasons for incorporating plans for M&E during the early stages of a project’s development are:

- so that progress can be checked at key stages of the project to ensure that plans are being followed, budgets spent appropriately and targets on track to be met

- so that the impacts of the project can be assessed to find out if the project has been effective and provided value for money

- to identify any problems or failures and learn from them so that the next project does not make the same mistakes.

You may have thought of other reasons.

SAQ 15.4 (tests Learning Outcomes 15.1 and 15.4)

Explain why an indicator based on quantitative data will be more useful than an indicator based on qualitative data. Use examples of indicators in your answer and describe how you might measure them.

Answer

For an indicator to be a useful tool for assessing a situation, it has to be something that can be seen, counted or measured. It also should be precisely defined. Quantitative data is factual information based on measurement so that would meet the requirements for an indicator. For example, the number of people using a latrine could be counted by observation on a small scale or, on a larger scale, could be measured by asking people about their habits using a questionnaire or in interviews. For the survey method, it would be important to specify exactly what type of latrine was being used.

Qualitative data would be much harder to use as an indicator because it is not so easy to measure. For example, you could gather qualitative data about the reasons why people did or did not use the latrine. This could be useful information but would not be a helpful indicator because it would not produce simple numerical answers.