Introducing engineering


Printable page generated Saturday, 20 April 2024, 4:02 AM


Introduction

Today's engineering society has developed step by step. Engineers have always worked within a context of scientific knowledge, available skills and tools, and set rules that govern their behaviour. The purpose of this course is to introduce you to some of the basics of engineering and to explore how engineering is done. Of course, you will first need to understand what engineering is.

You probably have your own ideas regarding what is meant by the term 'engineering', depending on your interests and background. Your ideas may be shaped by experiences you have had in the workplace or maybe by the different ways engineering is represented in the media. As you progress through this course, you will start to get a fuller understanding of exactly what is meant by the term 'engineering'.

For the moment, it is sufficient to consider engineering as being about problem solving. If you have ever put up some shelves, or exercised your DIY skills to make something useful from scratch, then you have probably done some engineering along with some element of design. If you tackled the problem yourself from scratch, without being provided with a starting point, then in general terms you have had to create a design to meet your purpose, organise your resources and fabricate your product. This type of approach is essentially the same as that taken by professional engineers; that is why we would consider shelf assembly to be engineering. However, professional engineers have to be able to handle projects of greater scale and complexity. To do this efficiently and effectively requires a wider range of skills, developed through education, training and experience.

Skills such as management, financial planning, mathematical aptitude and business strategy all fall within the scope of the professional engineer. This course does not attempt to teach these skills, but within it you will find pointers towards them and their significance in the engineering context.

This OpenLearn course provides a sample of level 1 study in Engineering: http://www.open.ac.uk/courses/find/engineering

Learning outcomes

After studying this course, you should be able to:

- understand the characteristics of ‘engineering’ and the role engineers have played in shaping engineering up to the present and into the future

- understand a range of principles in science, mathematics and engineering in order to make well-founded decisions as part of a design process

- have an appreciation of the design decision-making process when developing new products

- recognise the effects on the conduct of engineering relating to issues such as patents, standards and risk

- understand the use of appropriate candidate/potential materials and processes for the manufacture of a given artefact.

1 Engineering beginnings

1.1 What is engineering?

At seven o'clock this morning my manufactured alarm radio awoke me in my manufactured bed. I went to my bathroom, with its manufactured fittings, showered with manufactured shower gel, dressed in my manufactured clothes and came down the constructed stairs to eat my manufactured breakfast. My selectively bred (but otherwise unmanufactured) cat greeted me, and I opened a manufactured can of manufactured food for her. Outside, in the old tree in my garden (all organised, hybridised and fertilised by human intervention) a blackbird called his territory: the first wild thing of the day.

Look around yourself. Recognise just how much the material environment we live in is of our own making; it has been engineered. Even to provide a simple container of shower gel has required, if you think about it, interactions among lots of people engaged in an enormous range of activities.

Activity 1 (exploratory)

The soap used in a shower gel is usually made by reacting a fat (often a vegetable oil) with an alkali (a generic name for a type of chemical).

In three minutes, write down as many things/people/activities as you can think of that are involved in getting a shower gel from raw materials to the container in your bathroom.

Answer

- supply of vegetable oil, which requires farmers to grow oil seed

- oil processing plant

- harvester for crop

- plough to cultivate land

- fertiliser and pesticides

- roads to transport to and from farm

- bricks/cement to build factory

- people to make all these things

- fuel for tractors, trucks, etc.

- steel industry, which implies mining

- alkali industry

- soap-making plant

- shower gel production plant

- plastic container for shower gel

- printing for container – inks, dyes

- cardboard boxes to pack shower gel containers for transport to shops

- forestry for wood pulp for packaging

- electricity supplies for factory machines.

My three minutes are up and I've not got the shower gel to my home yet!

That simple example is sufficient to remind you of the great breadth of 'economic activity' that supports our modern industrialised lifestyle. Perhaps because we have been born into this 'civilisation', rather than chosen it, the depth of our dependence on its intricate, interlocking systems of supply is not so obvious. We expect our food to appear in shops, water to be on tap, sewage to disappear without thought or effort, power to come from a socket in the wall, communication to need but the touch of a few buttons. We expect to be provided with shelter from the weather, to be entertained, transported, kept in health and defended.

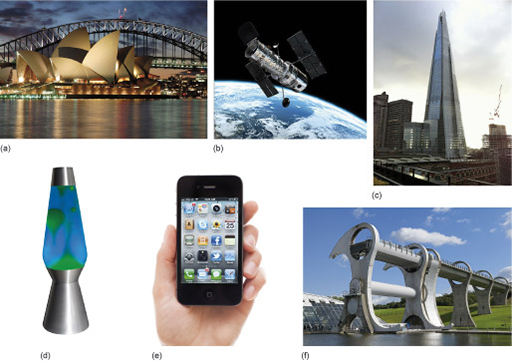

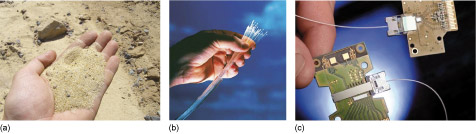

It is engineering that puts all this in place. All the material things – from containers of shower gel to satellites – that enable us to live our everyday life are the products of engineering (Figure 1).

Figure 1 shows an array of 6 photographs of engineered objects that could be considered as being fashionably attractive or impressive. Viewing left to right, top to bottom. The Sydney Opera House is shown lit up at night with the harbour bridge behind. The lighting accentuates the distinctive form of the large precast concrete roofs of the Opera House, generally referred to as "shells", each composed of sections of a sphere of about 75 metres radius. Next, the Hubble space telescope is in low earth orbit and comprises a cylindrical metallic housing, with two large solar panels on opposite sides and two radio antennae on the other two sides. The Hubble telescope has instruments designed to observe in the near ultraviolet, visible, and near infrared wavelengths. Picture C, The Shard, is the tallest building in the European Union being about 310 metres high with 72 habitable floors. It has a pyramidal shape and is covered in glass. Next, the lava lamp is a decorative artefact dating from the 1960s. It consists of a clear glass vessel containing a clear liquid and blobs of coloured wax. In the base there is an incandescent light bulb shining into the liquid which heats the liquid causing the wax blobs to rise and fall. The pictured Smartphone has more advanced computing capability and connectivity than a feature phone. Although small enough to be held in the hand, it can have a number of other functions as well as verbal communication e.g. playing music, taking pictures and video, and helping us find our way around. Instead of a key pad, it uses touch screen technology, which has been further developed for visually impaired and blind users. Finally, the Falkirk Wheel boat lift has two tanks, each containing about 360,000 litres of water, which are pivoted about a common central axis and the form of the rotating structure is inspired by the double-headed Celtic axe. 
When a boat enters one of the tanks, the total weight of the tank and its contents remains the same, because the boat displaces its own weight of water (known as Archimedes' principle). Because the structure is so well balanced, it uses very little energy to raise and lower boats between two canals that are about 30 metres apart in height.
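The balancing argument for the Falkirk Wheel can be checked with a few lines of arithmetic. The sketch below is only an illustration: it assumes fresh water at about 1 kg per litre and a tank that stays filled to the same level, so that the displaced water flows out as the boat enters. The function name and the 15-tonne boat are hypothetical; only the 360,000 litre figure comes from the description above.

```python
WATER_DENSITY_KG_PER_L = 1.0  # assumption: fresh water, roughly 1 kg per litre


def tank_load_kg(tank_capacity_l, boat_mass_kg=0.0):
    """Total mass carried by a tank that is kept filled to the same level.

    A floating boat displaces its own mass of water, which leaves the tank
    as the boat enters, so the total load should not change.
    """
    displaced_water_l = boat_mass_kg / WATER_DENSITY_KG_PER_L
    if displaced_water_l > tank_capacity_l:
        raise ValueError("boat is too heavy to float in this tank")
    water_left_kg = (tank_capacity_l - displaced_water_l) * WATER_DENSITY_KG_PER_L
    return water_left_kg + boat_mass_kg


empty_tank = tank_load_kg(360_000)
tank_with_boat = tank_load_kg(360_000, boat_mass_kg=15_000)  # hypothetical boat
print(empty_tank, tank_with_boat)
```

Both calls give the same load, which is why the two tanks stay balanced whether or not they are carrying boats.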

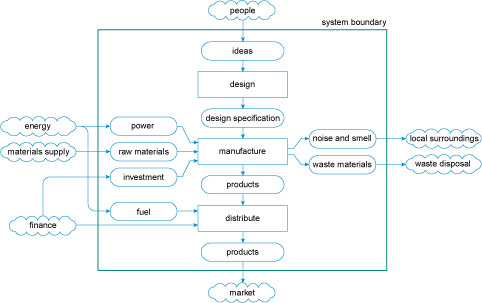

People have recognised a need for some function to be achieved (e.g. de-greased and cleansed skin), thought out or discovered how it can be done (soap in the form of a shower gel), designed, set up and run the means for making the thing (raw materials, process equipment, energy source, labour and finance) and delivered it to the consumer. You can see that this nutshell description of what engineering involves already identifies or implies quite complex interactions: many different people could be engaged in different bits of the scheme. There are so many things to be decided, such as the following.

- How is the function or 'need' identified?

- Who invents solutions?

- How is the decision made to accept a particular solution?

- How is it decided to set up a production facility?

- Who designs that production facility; what production level; what resources?

- Who builds it, runs it, pays for it?

- How is the product costed; will it succeed in the market?

Different societies at different times have different answers to these questions. In a capitalist society you'll find a host of professions getting in on the act: market researchers, bankers, lawyers, steel erectors, bricklayers and joiners, machinery sales people … and engineers. So who or what are engineers and what do they do?

Ask this question of people in the street and you are likely to get many different answers. Some may think of building things, such as bridges or skyscrapers; some may think of repairing things like cars or televisions. Others may relate the word to common phrases or job titles, such as genetic engineering, software engineering or civil engineering. Yet another may tell you that engineers run factories.

If you ask 'What is engineering?' of professional engineers you'll also get many different answers. Their responses will depend on their particular backgrounds, experiences and jobs of the moment. Some will say that engineering is about making things, or developing things. Others will answer in more general terms, saying that it is about generating profit for a company or wealth for a country, or about improving the quality of life.

Clearly engineers are responsible in several ways for making some of those decisions we listed for the would-be shower gel manufacturer. Engineers handle the material aspects of the business. They will know (or be able to work out) how to design a factory suited to making the shower gel, what plant (industrial-scale equipment and its housings) is required, which raw materials to buy, how to work out what power supplies will be needed, and so on. They may be able to calculate how much all this will cost, but they probably will not control the decision of whether to invest that much in the venture. Nor will they be asked to plan the advertising campaign.

The Oxford English Dictionary defines engineering as:

The branch of science and technology concerned with the development and modification of engines (in various senses), machines, structures, or other complicated systems and processes using specialized knowledge or skills, typically for public or commercial use; the profession of an engineer. Frequently with distinguishing word: chemical, civil, electrical, mechanical, military engineering, etc.

There is a lot wrapped up in this dictionary definition, reflecting the fact that engineering draws on many different skills and covers a large variety of specialisms. The following section explores the definition through some real examples of engineering.

1.2 Some case studies

The dictionary definition of engineering given in the previous section can carry us quite a distance in seeing what engineers do. Some examples will illustrate the point. But which to choose? Anything out of our whole history of manipulating the environment to fit our needs will do. For a fuller discussion I have picked four examples: the Pont du Gard, a beautiful Roman bridge in the south of France; a disposable ballpoint pen; muskets; and the plant for making the chemical ammonia. This set will enlighten us concerning varied aspects of engineers' practice. Apart from their intrinsic interest, the four case studies demonstrate the distinction between one-off engineering solutions and mass-produced solutions.

1.2.1 The Pont du Gard: one of a kind

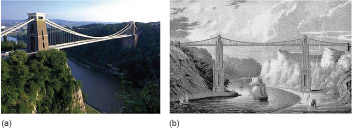

Bridging ditches, dips in the land, streams, rivers and roads is one obvious engineering task. Many of us will cross several bridges during a normal day, and there's sure to be one not far from where you live. You will be aware that there are many different designs of bridge, from little more than a beam across a gap to elegant suspension bridges.

For the makers of early bridges, such as the Pont du Gard, constructed around two millennia ago (Figure 2), the problem was that they were limited to materials like wood and stone. Metals, although in use for tools, armour and weapons, couldn't be produced in sufficient quantity or quality for bridge building until the nineteenth century.

Figure 2 is a photograph of the Pont du Gard revealing that the bridge spans a river valley and is made up of three tiers of arches. The bottom tier contains at least 6 arches, each 25 metres wide and one spans the river. The middle tier is made of at least 9 arches. They are the same size as the ones below, but more are required to cross the valley at that height. The top tier is made of about 30 much smaller arches which support the aqueduct. The bridge was designed to carry water and is not much wider than a footbridge. The bridge is 50 metres high, estimated to weigh 50,000 tonnes and uses 20,000 cubic metres of soft yellow limestone blocks, taken from a nearby quarry that borders the river.

Wood doesn't last too well, so let's turn our attention to stone. One of the problems with stone is that it is brittle: it is easily broken by an impact, and will tend to break rather than just deform. Stone is an example of a ceramic material (see Ceramics below). The pottery mugs you have at home are also ceramic, and they break easily if dropped onto a hard surface; a metal saucepan, on the other hand, would not break, though it might end up with a dent – metals tend to be tough.

When using stone, the trick is to ensure that it is used so that it is being compressed (see Compression and tension below). Think about the bricks used to make a house: they are stacked one on the other, so that each brick is compressed by those above it. This works fine. However, building a bridge is different from building a wall. It's impossible to make a stone bridge that is entirely compressed. So a way is required to minimise the areas that are in tension. The solution in the case of the Pont du Gard was to use arches: curved columns of stone which are compressed by the span of the bridge above them.

Ceramics

The term 'ceramic' covers a wide range of materials that are typically strong, hard and brittle. The minerals that make up rocks such as granite, sandstone and slate come into this category, as well as traditional pottery made from clay (the word ceramic comes from the Greek keramikos, meaning 'pottery') and manufactured rock-like construction materials including brick, cement and concrete.

Most ceramics have crystalline structures: they can withstand high temperatures and are resistant to chemical attack, which makes them useful for a wide range of applications. Glass, which also comes into this category, is unusual in having a relatively low melting point and a more random structure, but this gives it advantages in ease of processing and transparency. More recently a large number of advanced ceramics have been developed for a variety of specialist engineering applications.

Compression and tension

In construction particularly, and many other areas of engineering, the forces acting on the materials are critical to whether or not a structure will be safe. Materials have limits of strength which must not be exceeded.

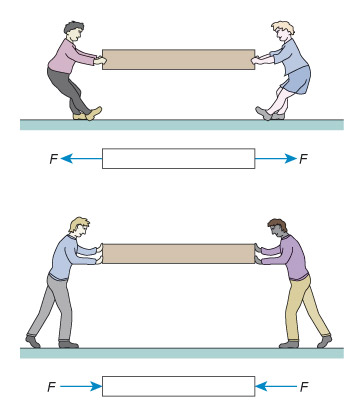

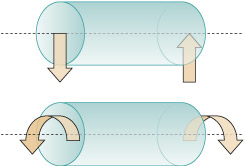

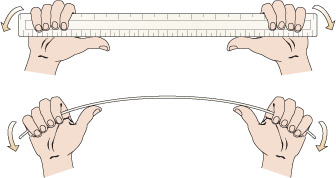

Forces are either compressive or tensile. You can think of compression as a 'squeezing' force, and tension as a 'pulling' force (see Figure 3). Modern structural materials (mainly metals) can withstand both tensile and compressive forces. Stone and similar ceramics are fine in compression, but their strength in tension is much lower.

Figure 3 consists of a pair of illustrations, one above the other, and each illustration consists of a cartoon and below it a diagram. All four images contain a rectangle which is approximately 8 times longer than its height. The rectangle is being used to represent any solid object.

In the top illustration, the cartoon shows two people attempting to pull the rectangle away from each other, rather like a tug of war. The rectangle is being put under tension. In the diagram below this cartoon, each person is replaced by an arrow pointing away from the rectangle. Each arrow has a letter F next to it. The letter denotes force and so the arrows are being used to show the force that the people are creating. The two arrows are pointing away from each other, indicating that they are forces that are creating tension in the rectangle.

In the bottom illustration, the cartoon shows two people attempting to push the rectangle towards each other. The rectangle is being put under compression. In the diagram below this cartoon, each person is replaced by an arrow, this time pointing towards the rectangle. Each arrow has a letter F next to it. Once again, the letter denotes force and so the arrows are being used to show the force that the people are creating. In this case, the two arrows are pointing towards each other, indicating that they are forces that are creating compression in the rectangle.

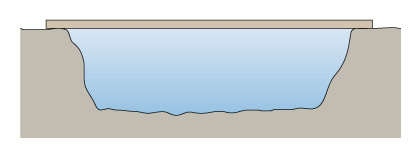

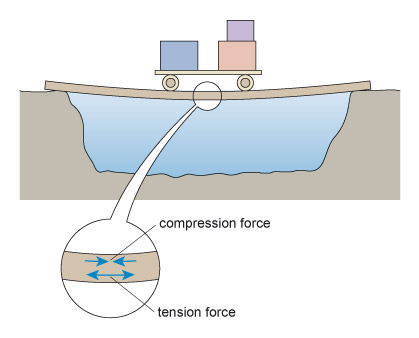

The simplest sort of bridge would be a slab over a ditch (Figure 4). When there is something on the bridge, it will bend – even if only very slightly. Bending puts tension onto the bottom of the slab, and compresses the top (Figure 5). So in building stone bridges, this tensile force must be minimised to below the level at which the slab would crack. This can be done either by making the slab shorter between its supports, or thicker thereby reducing the bending. Look how the design for the Pont du Gard has plenty of supports along its length, so that none of the stones will bend unduly. Forces due to bending crop up a lot in engineering: the simple act of walking across a room will bend the floorboards that support you.

Figure 4 is a simplified side view drawing of a slab spanning a ditch. Imagine that you are standing in the ditch, which is wider than it is deep. A line is used to represent the ground level. Reading from left to right, the line starts off straight and horizontal. It then drops suddenly and becomes slightly irregular. The line now represents the steep side of the ditch. The line then becomes horizontal again but is still irregular. The line now represents the bottom of the ditch, which is roughly horizontal. The line then suddenly rises and now represents the other steep side of the ditch. When the line reaches the same level at which it started on the left-hand side of the drawing, it becomes straight and horizontal again. The slab is depicted by a long narrow rectangle spanning the distance between the two sides of the ditch. Since the drawing is a side view, you know that the slab is longer than the ditch is wide, but no exact dimensions are given.

Figure 5 is a drawing of the slab in Figure 4 but now there is a loaded cart halfway across the slab and the slab has deformed into a curve under the influence of the weight of the cart. The slab is sagging under the weight of the cart. Included in the illustration is a magnified view of the slab under the cart. In this magnified view, two pairs of arrows have been drawn on the slab. In the upper half of the slab the arrows are pointing towards each other indicating the presence of compression forces and that the top half of the slab is in compression. In the lower half of the slab the arrows are shown pointing away from each other indicating the presence of tension forces and that the bottom half of the slab is in tension.
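The effect of span and thickness on the tension in the bottom of the slab can be put into rough numbers using standard beam theory, which this course does not derive. The sketch below assumes a rectangular slab resting freely on a support at each end, carrying a single load at mid-span, and it ignores the slab's own weight. The function name and the example figures (a 10 kN load on a 3 m span) are illustrative and do not describe any real bridge.

```python
def max_bending_stress(load_n, span_m, width_m, depth_m):
    """Peak tensile stress (in pascals) at the bottom of a rectangular slab.

    Assumes simple supports at both ends and one point load at mid-span.
    """
    bending_moment = load_n * span_m / 4          # N m, largest at mid-span
    section_modulus = width_m * depth_m ** 2 / 6  # m^3, rectangular section
    return bending_moment / section_modulus


# Hypothetical slab: 10 kN cart, 3 m span, 1 m wide, 0.2 m thick
base = max_bending_stress(10_000, span_m=3.0, width_m=1.0, depth_m=0.2)
shorter = max_bending_stress(10_000, span_m=1.5, width_m=1.0, depth_m=0.2)
thicker = max_bending_stress(10_000, span_m=3.0, width_m=1.0, depth_m=0.4)

print(f"base slab:        {base / 1e6:.2f} MPa")
print(f"half the span:    {shorter / base:.2f} of the base stress")
print(f"twice as thick:   {thicker / base:.2f} of the base stress")
```

Under these assumptions, halving the distance between supports halves the tensile stress, while doubling the thickness cuts it to a quarter. Either change helps keep the stone below the level at which it would crack.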

Unlike most bridges, the Pont du Gard was not designed to carry people or animals, although tourists with a good head for heights can walk across it. It was built under the patronage of the Roman Emperor Agrippa in 18 BC to carry water to Nîmes in southern France. The water channel is nearly 50 m above the river, and traverses a distance of 270 m. The channel is nearly 2 m² in cross-sectional area and was lined with cement to make it waterproof. The arches, however, were all constructed of accurately cut stones using no cement at all.

With the Emperor backing the construction of the bridge, the financial side of the enterprise was presumably not a concern. But consider the necessary organisation of materials and labour. First it had to be decided exactly what was to be built. The design had to be worked out in meticulous detail so that the water channel would be at the correct height with the correct fall, so that the bridge would fit the site, and so that the stones could be cut to fit together to form the arches. The very existence of the bridge is proof that the Roman engineers had accurate methods of measuring and could transfer their calculations in instructions to the artisans (see Measuring sizes – length below). A quarry had to be established and means of bringing stone to the site provided: appropriate roads and carts. Workshops for preparing the stones to prescribed measures would most likely have been on site so that flexibility could be maintained during construction. Then there was a need for plenty of timber and skilled carpenters. Arches are built on a 'centring', which is a timber scaffold in the shape of the arch. Only when all the stones are in place for the whole curve of the arch can the centring be removed; the stones drop slightly and the arch becomes stable under its own weight. You will also realise that some heavy weights, both stones and timber, had to be shifted – which called for ropes, pulleys, levers …. And day by day the project had to be supervised, or kept on track, by an engineer who understood both what to do and how to do it. Evidently this engineer did it rather well: the result is still standing over 2000 years later.

Measuring sizes – length

The metric system was defined in France in the 1790s, following the French Revolution, to bring order to a confusion of vague and inaccurate standards. In fact the existing units were well defined – officially – but petty corruption and fraudulent trade made a new standard necessary.

The SI unit of length is the metre. The original intention was for it to be simply related to the size of the Earth. The distance from the North Pole to the equator along the meridian through Paris was to be ten million metres. This distance could be measured by astronomical methods using the official standards of the existing units. The result of this measurement was transferred onto a bar, made of an alloy which does not corrode and is very stable, as two scribed lines, now defined as one metre apart. The bar became the standard from which copies could be made for distribution.

To give you a rough idea of a metre, it is a big walking step – actually quite an exaggeratedly big step unless you are tall. Of course you can measure it more accurately using a ruler or a tape measure.

In practice we have need of both bigger and smaller units of length for measurement, so the metre is multiplied or divided by factors of ten. There are special names for these, and a selection is shown in Tables 1 and 2.

Table 1 Multiples of the metre

| Name | Factor | Symbol |
|---|---|---|
| *deca*metre | × 10 | dam |
| *hecto*metre | × 100 | hm |
| *kilo*metre | × 1000 | km |
| *mega*metre | × 1 000 000 | Mm |
| *giga*metre | × 1 000 000 000 | Gm |

Of these only the kilometre is in common use.

The prefixes shown in italic in the left column are common to all metric units to describe the multiplying factor to be applied to the base unit. By agreement, the Système International (SI) of units recognises factors going up or down in steps of one thousand, although below a thousand factors of ten are also recognised. Thus all the factors shown in Tables 1 and 2 are recognised by the Système International. Although in the UK we still tend to use the mile when discussing large distances, the standard measure in most other countries is the km. Other units such as the mm, µm and nm find extensive use in engineering measurement, as you will see.

Table 2 Fractions of the metre

| Name | Factor | Symbol |
|---|---|---|
| *deci*metre | × 1/10 | dm |
| *centi*metre | × 1/100 | cm |
| *milli*metre | × 1/1000 | mm |
| *micro*metre | × 1/1 000 000 | μm |
| *nano*metre | × 1/1 000 000 000 | nm |

To get some idea of scale, kilometres are useful for measuring place-to-place distances (e.g. London to Paris is 340 km). By definition of the metre it is 40 Mm around the Earth. It's about 400 Mm to the Moon, and 150 Gm to the Sun. On the small side, a UK 2 pence coin is about 2 mm thick. A micrometre (also colloquially called a micron) is almost as small as can be seen with a good optical microscope (a human hair is about 100 microns in diameter), and a nanometre is taking us towards the size of atoms. Nanotechnology is a rapidly growing field of engineering which deals with very tiny structures – from simple carbon nanotubes to complex protein-based molecular motors.
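Converting between prefixed units is just a matter of multiplying by the right power of ten. As a minimal sketch, the helper below turns a length with a metric prefix into metres; the function and dictionary names are made up for illustration, and exact fractions are used so that comparisons are not upset by rounding.

```python
from fractions import Fraction

# Power of ten for each prefix in Tables 1 and 2 ("" means plain metres).
PREFIX_EXPONENT = {
    "giga": 9, "mega": 6, "kilo": 3, "hecto": 2, "deca": 1, "": 0,
    "deci": -1, "centi": -2, "milli": -3, "micro": -6, "nano": -9,
}


def to_metres(value, prefix=""):
    """Convert a length given with a metric prefix into metres, exactly."""
    return Fraction(str(value)) * Fraction(10) ** PREFIX_EXPONENT[prefix]


# London to Paris, 340 km, expressed in metres
print(to_metres(340, "kilo"))

# How many hair widths (100 micrometres) fit in a 2 mm coin thickness?
print(to_metres(2, "milli") / to_metres(100, "micro"))

# Pole to equator is ten million metres, so once round the Earth is 40 Mm
print(4 * to_metres(10_000_000) == to_metres(40, "mega"))
```

Converting everything to the base unit first, as here, is usually the safest way to compare two lengths quoted with different prefixes.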

Activity 2 (example)

Which is the larger distance in each of the following pairs?

- a. 2000 mm or 1.8 m

- b. 1 mm or 1 km

Answer

- a. 2000 mm is 2.000 m, which is larger than 1.8 m.

- b. 1 mm is 1/1000 m whereas 1 km is 1000 m, so the kilometre is larger.

Activity 3 (self-assessment)

- a. Which is the larger distance in each of the following pairs?

- i. 50 cm or 0.45 m

- ii. 100 μm or 0.1 mm

- b. In a photograph taken using a camera attachment with a particular microscope, features that are really 2 μm wide appear as 2 cm wide. What is the magnification? In other words, by what factor does the lens multiply the 2 μm object size in order to provide the 2 cm image size?

Answer

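a.

- i. 50 cm is 0.5 m, which is larger than 0.45 m.

- ii. 100 μm and 0.1 mm are the same: 100 μm is $100 \times 10^{-6}$ m, or $10^{-4}$ m, and 0.1 mm is $0.1 \times 10^{-3}$ m $= 10^{-4}$ m.

- b. The magnification is the image size divided by the object size, with both expressed in the same unit:

$$\text{magnification} = \frac{2\ \text{cm}}{2\ \mu\text{m}} = \frac{2 \times 10^{-2}\ \text{m}}{2 \times 10^{-6}\ \text{m}} = 10^{4}$$

so the image is 10 000 times larger than the object.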

1.2.2 Disposable pens and mass production

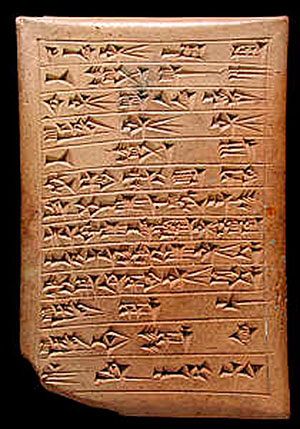

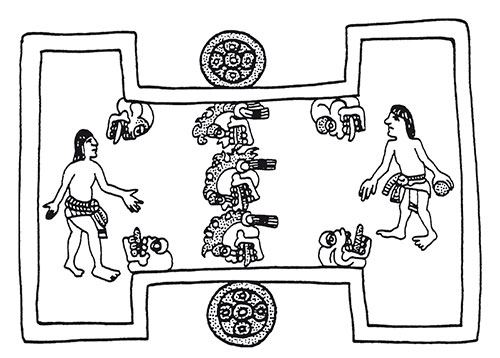

The idea of writing – setting language into a visible and permanent form through a set of symbols – is young relative to the age of our species, only a few thousand years. Among its earliest forms was the cuneiform script. The writing was a series of wedge-shaped (that's what cuneiform means) indentations in tablets of damp clay. When fired, the clay became solid, making a permanent record (Figure 6). Tonnes of these have been excavated by archaeologists and deciphered to reveal the records of the bureaucracy of the Sumerian and other Mesopotamian civilisations.

Figure 6 is a photograph of a rectangular clay tablet with rows of symbols. The width of the tablet is about two-thirds of its height. The symbols are combinations of small wedge shapes and lines. The rows of symbols are separated by long horizontal lines and everything (symbols and lines) is contained in a rectangle. The symbols, lines and rectangle are formed by indentations in the surface. They were actually created when the clay was soft using a blunt reed as a stylus, like using a small pointed stick to write in plasticine.

The Egyptians invented paper – the word derives from 'papyrus', the reed of the Nile which they used for paper making – so inks were needed in order to make a mark on the paper, with a 'pen' to carry the ink onto the paper in just the right quantity for legibility. In China the pen was a bamboo stick, shredded at one end to make a brush. Thanks to the skill of some brush users, calligraphy became an art form. Another simple pen was the quill, just a large feather cut across the stem at an angle and split to form a nib.

But now, as I scribble with a ballpoint pen, engineering has come onto the writing scene. Pens are manufactured and sold rather than home-made. My ballpoint pen is so cheap that when it is empty of ink I shall throw it away and get a new one – an example of how developments in engineering have led to the possibility of products that have a short life and are then discarded. As issues of sustainability grow in importance our priorities may change to make disposable items a rarity, but for now the interesting engineering question is how to make the pen so cheaply that we don't mind throwing it away.

Again, it starts with design. Obviously the thing must be designed to function as a pen, but the cost of its materials and its manufacturing process must be carefully thought about in order to get its cost very low. How is it done?

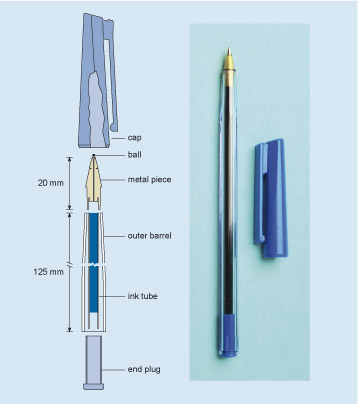

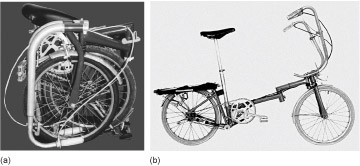

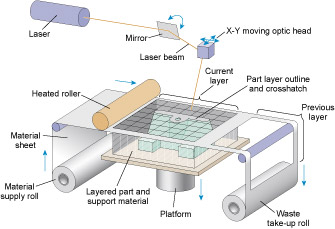

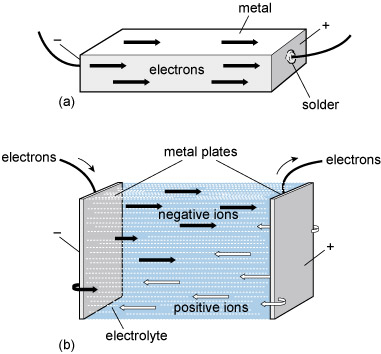

The right-hand part of Figure 7 is my pen; the left-hand part is a longitudinal section (see Engineering drawings below). It is not a unique design, and you will know that there are many similar designs in use. Indeed, it is one of the interesting features of design that many solutions can be conceived within the boundaries of a specification. What all such designs have in common is the use of a ball to transfer ink to the paper as the ball rolls across the page.

Figure 7 consists of a schematic drawing and a photograph. Both images are representations of the same ballpoint pen with the top removed and placed with it. The photograph of the pen is there to help the viewer interpret the drawing, so we should concentrate on the drawing. The drawing of the pen is called a longitudinal section, which is a form of engineering drawing. A longitudinal section is a bit like cutting a baguette in half lengthways – each half reveals what it is like inside the loaf. Imagine the pen cut in half along its longitudinal axis so that you can see the insides, which are also cut in half. Various components of the pen can now be identified and depicted. In this case, the pen is shown as having 6 components – an end plug, an ink tube filled with ink, an outer barrel, a ball, a metal piece designed to hold the ball and a cap. The pen is shown in a partly assembled state. The ink tube is in the barrel but the end plug, metal piece, ball and cap are shown separately. All these components are laid out in a straight line as if the pen had been literally pulled apart. Since the pen has a transparent outer barrel, all the parts with the exception of the ball can be seen in the photograph. Even the ball could be seen with close physical inspection. The section drawing comes into its own when the internal components and in turn their internal features cannot be seen or felt. Engineers also use their drawings to convey sizes by using a system of arrows and lines. Lines are used to identify the positions of parts of the object, and a line with an arrowhead at each end, which is called a double-headed arrow, is used to denote the distance between those lines. Next to the line is written the dimension. In this drawing two dimensions are identified. The length of the outer barrel is 125 mm and the length of the metal piece is 20 mm.

Engineering drawings

Figure 7 gives an example of a cutaway drawing: a representation of what you would see if, in this case, you were to slice the pen through the middle. It's easier to illustrate the different parts on a diagram like this than it would be using a photograph, or just words. It also allows us to show clearly the dimensions of the various parts, by marking these on the diagram, and it is used to communicate all the necessary information from the engineer who designed the component to the person who will eventually make it. You should not find diagrams like this difficult to decipher.

The pen in Figure 7 is made of six solid parts plus the ink. The outer barrel and a lid which fits over it are made of two different types of plastic. The metal piece contains the ball, and both the outer barrel and the ink tube fit its other end. The plastic end-plug prevents ink loss from the back of the pen and holds the far end of the ink tube. Each part has to be made separately and then they have to be assembled.

Activity 4 (exploratory)

- a. What are the problems that must be overcome in order for a ballpoint pen such as the one in Figure 7 to work successfully? Put yourself in the position of an engineer who has been given the basics of the design: ball, ink, and barrel, and has to solve the problems to enable it to be made. See if you can come up with three of these problems.

- b. What changes could you make to the design of the disposable pen to reduce potential environmental impact? Suggest one change that would make it easier to reuse and another that would improve recyclability.

Answer

This is what I came up with.

- a. The ball must fit sufficiently tightly that the ink doesn't ooze out. On the other hand, the ink must not be completely blocked. Also, the ball must not be damaged during the assembly process. (Maybe that counts as three problems, rather than one.)

The ink must not be too fluid or it will leak from the pen. (In many pens the end of the ink holder is open; you may have carried such a pen in a pocket, and discovered that the ink becomes more fluid at body temperature, leading to an unfortunate accident.)

The join between the metal top and the ink holder must be a good seal.

You may have come up with equally valid problems which need to be addressed for the product to work successfully.

- b. To reuse the pen a method must be provided for replenishing the ink supply. This might be done by supplying the ink tube (perhaps in combination with the end cap) in the form of a removable cartridge. The cartridge would need to be sealed before use, so that the ink does not leak: fountain pen cartridges typically use a small glass ball, or a very thin layer of plastic, for this purpose. The seal is broken when the cartridge is pushed onto the nib.

Recyclability could be improved by reducing the number of different materials involved, and making them easy to separate. The cap, outer barrel and end piece could be made from the same type of plastic. The nib section and the ball could be made from the same metal and be easily detached from the plastic parts. The ink tube would probably have to remain disposable.

You may have come up with other valid suggestions. Of course these changes may well make the pen more expensive, or compromise some other aspect of performance, so the different demands need to be weighed up against each other.

The engineering secret that enables such a thing to sell in the shops for 20 pence is to devise machinery that will make millions of pens. A million pounds invested in machinery that will make fifty million pens gives a unit cost of 2 pence per pen. That leaves 18 pence for materials, distribution costs and profit. My sums are probably in the right ballpark, but much more complete and accurate costings would be done in reality. For instance, exactly how long a period should the tooling costs be spread over? What about buildings and labour costs? And how do issues such as taxation come into the equation?
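The sums in the paragraph above can be checked with a short sketch. The figures are the ones quoted in the text; the function and variable names are my own, and the calculation deliberately ignores the buildings, labour and tax questions raised above.

```python
def unit_tooling_cost_pence(tooling_cost_pounds, pens_made):
    """Spread a one-off tooling investment evenly over every pen made."""
    return tooling_cost_pounds * 100 / pens_made  # 100 pence in a pound

selling_price_pence = 20
tooling_per_pen = unit_tooling_cost_pence(1_000_000, 50_000_000)
remainder_per_pen = selling_price_pence - tooling_per_pen

print(f"Tooling cost per pen: {tooling_per_pen:.0f}p")
print(f"Left for materials, distribution and profit: {remainder_per_pen:.0f}p")
```

Changing the number of pens shows how strongly the unit cost depends on the size of the production run: halving the run to twenty-five million pens doubles the tooling cost per pen.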

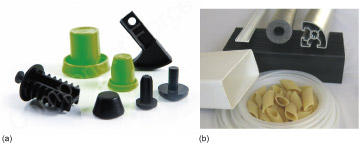

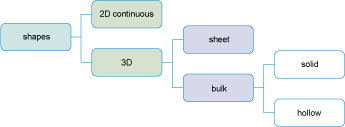

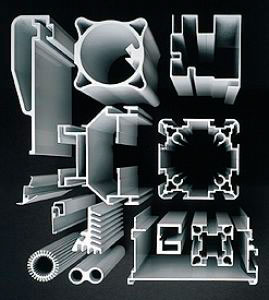

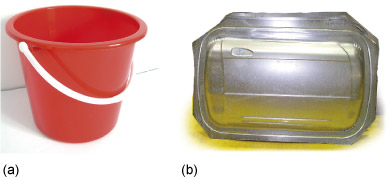

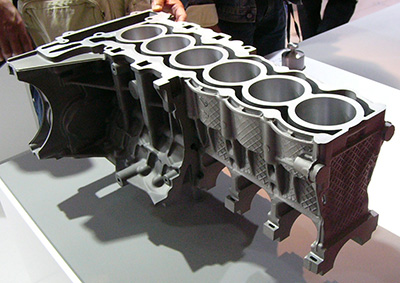

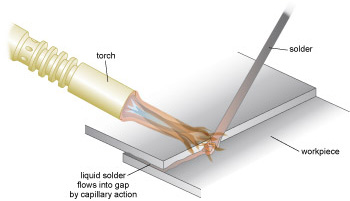

With the advent of low-cost plastic materials provided by the chemical industry, typically using oil as the raw material, such cheap, disposable objects have become open to manufacture (see Plastics and polymers ). Thermoplastics, which are a type of polymer that soften when heated and solidify when cooled, can be moulded rapidly and relatively easily to close dimensional tolerances (in an engineering context, tolerance refers to the limit of acceptable deviation from an intended value). The outer barrel, cap and end plug will be made by moulding. The ink tube is also plastic, but being of constant cross section, can be made even more rapidly by extrusion (that is, squeezing through a suitably shaped gap – think of toothpaste coming out of a tube). The tube can then be filled with ink and cut to length. Both these processes involve molten plastic being forced into a die which produces the required shape (Figure 8).

Figure 8 has two photographs each showing collections of plastic components. The left-hand image shows 7 items produced by moulding. It is difficult to tell what they are from the photograph but their shapes and sizes vary. They are all solid and their sectional shapes will vary depending on where the section is actually taken. 5 are symmetrical about an axis but the other two have no symmetry. The left-hand image contrasts sharply with the right-hand image that has five items produced by extrusion. Four of these items are made from different forms of plastic. The sections of the four plastic extrusions are a hollow rectangle with rounded corners, a tube with a thick wall, a flat solid rectangle with raised portions and a very complex hollow section. The fifth item is a type of pasta called penne, which is tubular with a thin wall. The image shows a bowl of penne.

Plastics and polymers

The oil-based plastics referred to in the main text are a small subset of an important class of materials known as polymers. Polymers include a huge range of materials, both natural and synthetic, with a very wide range of properties. The one thing that they all have in common is that the molecules (two or more atoms held together by chemical bonds) from which they are made are very large, consisting of hundreds, thousands or even millions of sub-units, chemically joined together to form long chains.

A long chain can take up many different arrangements. Think of the different things you can do with a piece of string: you can stretch it out, coil it up neatly, knit it into a sheet, knot it into a net or tangle it up randomly … The possibilities are endless and each will result in a different type of structure, with different properties. The same applies to polymers: the arrangements that the chains adopt are influenced by the length of the chain, by the chemical nature of the sub-units and by the processing conditions, and can lead to materials with very different physical properties. A few examples are shown in Figure 9.

Figure 9 is an array of 8 images arranged into 2 columns and 4 rows. The first column shows 4 products and the second column shows four representations of how polymers can be structured. The four pairs of product and polymer structure are intended to give some indication of how the structure is related to the properties required of the polymer when used in the product. Starting at the top of the illustration, the first product is a high performance sports car. The polymer structure is represented by a number of straight parallel lines. This polymer is called Kevlar, whose rigid rod-like polymer chains align to produce a very stiff and strong material. Next is a plastic bucket. The polymer structure is represented by a single line shown folded in a concertina fashion. This polymer is called high density polyethene (HDPE). The flexible polyethene chains fold up into crystals producing a tough material. The third product is a rubber (elastic) band. The polymer structure is represented by a number of wavy lines criss-crossing each other with dots at some of the cross-overs to indicate that the lines are joined at these points. This polymer is called rubber. In rubber the chains are loosely linked together in a random network to produce an elastic material. The fourth product is a T-shirt. The polymer structure is not represented by lines but by small circles, coloured blue, grey and white, joined by lines to form a three-dimensional lattice structure. The circles represent atoms and the lines represent their bonds. This structure is therefore representing a lot higher magnification than the previous structures. This polymer is called cellulose which is a biopolymer found in cotton, wood and other plant materials. The structure consists of long chains of sugar molecules linked together to form fibres and networks.

The correct chemical name for the PE in HDPE (and its close relation LDPE) is 'polyethene', but you will also find it referred to as 'polyethylene' (an older but commonly used version) or 'polythene' (a trade name). All three names may be used in this course.

The term 'plastic' tends to be reserved for synthetic polymers derived from oil, such as polyethene (PE, usually sub-divided into high density HDPE and low density LDPE) or polyvinylchloride (PVC). Many of these are thermoplastics: they soften when heated and solidify when cooled, making them relatively easy to process. The word plastic actually pre-dates the discovery of polymers by a few centuries, and originally referred to the capacity of certain materials, such as clay or wax, to be moulded or shaped. 'Plastic', in this original sense, describes the properties of some, but not all, polymer materials.

The writing end is more tricky to make. A typical ballpoint pen will inscribe many kilometres of writing during its use, hence the material for the ball needs to be hard, so that it does not wear away too quickly. Fortunately, tungsten carbide (a hard ceramic material) is often used for ball bearings, and these can be made to a very close tolerance. That is, they can be made with a high degree of accuracy, with very little variation in diameter observed across a batch of ball bearings. Thus the balls for the pen can be bought ready-made from a ball bearing manufacturer.
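To make the idea of a close tolerance concrete, here is a minimal sketch of the check a manufacturer might apply to a batch of balls. The nominal diameter, the tolerance and the measured values are all invented for illustration; they are not taken from any real pen.

```python
# Hypothetical figures, chosen only to illustrate the idea of a tolerance.
NOMINAL_DIAMETER_MM = 1.000
TOLERANCE_MM = 0.005  # acceptable deviation either side of the nominal value

def within_tolerance(measured_mm, nominal_mm=NOMINAL_DIAMETER_MM,
                     tolerance_mm=TOLERANCE_MM):
    """Return True if the measured size deviates by no more than the tolerance."""
    return abs(measured_mm - nominal_mm) <= tolerance_mm

batch_mm = [1.001, 0.998, 1.004, 1.007, 0.996]  # made-up measurements
accepted = [d for d in batch_mm if within_tolerance(d)]
rejected = [d for d in batch_mm if not within_tolerance(d)]

print("Accepted:", accepted)
print("Rejected:", rejected)
```

The tighter the tolerance, the more of a batch is likely to be rejected, which is one reason why close tolerances cost money.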

The pen maker has to make the metal piece to receive the ball with just enough clearance to allow the ink to flow round the ball. It can be turned on a lathe from a metal bar. If this were to be done by hand, the pen would become expensive simply because of the labour cost involved. So it is likely that a computer-controlled lathe would be used. To enable the machining to be done at high speed, for rapid output, a material that can be easily cut, and that is not brittle (so it won't chip) must be chosen. Brass (an alloy of copper and zinc) meets this requirement, but is quite an expensive material. The design/costing exercise thus has to balance material costs against tooling costs. The balance will depend on the quantity of brass used and the time needed to machine each piece. The metal piece that holds the ball at the end of my cheap ballpoint pen is indeed a yellowish metal: brass.

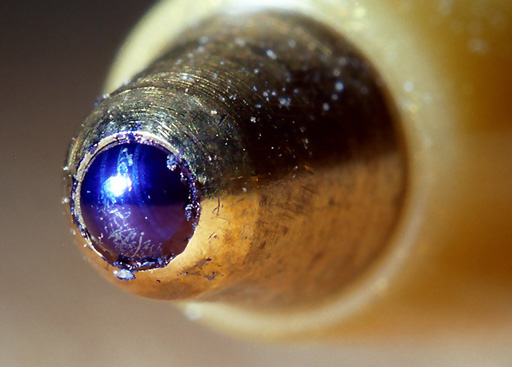

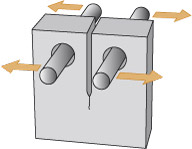

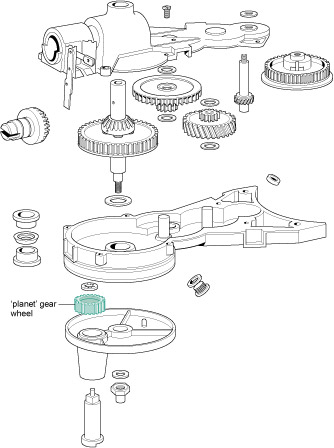

A production sequence is needed that will provide the top metal part, insert the ball and reduce the diameter of the end to trap the ball (Figure 10). Finally, all the parts have to be assembled. Once again, a machine must do this in order to maintain a high production rate. So a critical factor is that all parts of each sort must be interchangeable. It would be useless, for example, if a batch of end plugs that came to the assembly machine were too big to fit into the outer barrel. So this brings us on to the key part of these case studies: mass production.

Figure 10 is a highly magnified close up photograph of the writing tip of a ball point pen. The rest of the pen is visible in the background but it is out of focus. Part of the ball protrudes from the metal part that holds it. It is difficult to judge how much of the ball is visible but it must be less than a hemisphere otherwise the metal part could not hold the ball in position. The ball is covered in ink and there is additional ink at the gap between the ball and the metal part that holds it. There is spillage around the ball onto the metal part and there are also bits of dried ink on the metal part.

1.2.3 Muskets and mass production

Achieving interchangeability was an essential breakthrough to mass production. Look around your room right now; you will see many artefacts which have been assembled from parts: your furniture is likely to have been made this way for example. Whether the parts have to be made to fit accurately, as with the ball in the ballpoint pen, or can be a sloppy fit with gaps filled with glue (as with furniture, if gaps and glue are hidden from the eye) will depend on the function to be met by the product.

Close-tolerance interchangeability was achieved in manufacturing only after a long and difficult path. It is now a characteristic difference between industrial and craft manufacture: essentially it can only realistically be achieved by machine-made parts.

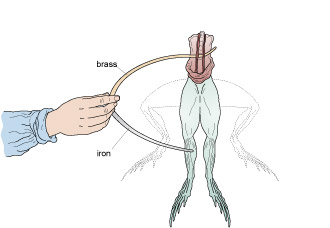

It is perhaps unfortunate that many major developments in engineering have come about as a consequence of war. Tremendous efforts were made towards interchangeability in the state armaments industry of eighteenth-century France, but with very limited success. The infantry weapon of the time was the muzzle-loaded musket, where the gun was loaded by pouring gunpowder down the barrel, and shoving the ball (the precursor of the modern bullet) down on top. The gunpowder charge was detonated by a spark caused by a flint striking a steel plate, and the 'flintlock' was a critical sub-system of the gun.

Figure 11 is a photograph of a musket. There is a rectangle shape superimposed on the photograph to highlight the area that contains the lock mechanism. The phrase "lock, stock, and barrel" refers to the three main parts of a musket. The stock takes up about a quarter of the total length of the musket and the lock mechanism is located where the stock joins the barrel.

The lock was a complex assembly of levers, pivots, plates and springs – some twenty parts in all. To equip a large army, many thousands had to be made, and this task fell to the esteemed profession of the locksmith. (Note that the 'lock' in 'locksmith' refers to the gun mechanism, rather than the door-fastening mechanism of modern usage.)

Master locksmiths first forged the parts from iron stock, and then complete locks would be made by sorting through piles of component parts to find a combination that could be made to fit together and work – with some judicious application of the file along the way. Thus, to make a working lock required a good deal of hand-filing and assembly (using hired labour); each lock was an individual mechanism, and the parts were rarely interchangeable between locks. Imagine how unfeasible such methods would be for the manufacture of the disposable ballpoint pen.

Production rates by such methods were very slow, so there were several hundred craftsmen in the trade working in small groups in workshops scattered around the armament factories. Locksmiths were highly skilled, and would protect their own interests, which they naturally saw as preserving the status quo. However, in 1723,

at the Hôtel des Invalides, in front of august witnesses, Guillaume Deschamps disassembled fifty flintlocks and recombined their parts to produce fifty functioning flintlocks. The minister of war ordered Vallière [a French artillery officer] to supervise the inventor's attempts to expand his system. By 1727 Deschamps had manufactured 660 locks judged interchangeable by Vallière's own inspectors, 'all properly reassembled without a single stroke of the file'. Each lock, however, had cost five times the current price. Undaunted, Deschamps proposed a larger manufacture to produce 5000 identical gunlocks, which he expected to cost only one twentieth of the current price. Tasks were to be divided among specialist workers, who would use dies, gauges and filing jigs to shape parts precisely. Master pattern locks would be distributed to the War Office, Grand Master, inspector, controller and examiner. Deschamps noted that with six hundred locksmiths in the St. Etienne region, he could locally staff a manufacture to produce 40 000 gunlocks a year.

In fact none of this came to pass. In spite of the advantages of the new process, both in the initial assembly of guns and in their subsequent repair, especially in the field, it was by no means clear that productivity could be increased or unit costs brought down. Indeed it took locksmiths longer to make parts which all matched the gauges than to make sets which could be individually assembled functionally. With large quantities of parts being made by the existing routes, it was always possible to find a set of components that would fit together suitably, even if this meant no interchangeability from one lock to the next. The locksmiths were also less than cooperative towards the introduction of the new process, fearing that their craft was being de-skilled so that they would lose their powerful position. Nor were the administrative and technical problems of organising the dispersed skilled labour force easy to overcome.

Fifty years later we read of Honoré Blanc, controller of musket production at the three factories in France, repeating the demonstration of interchangeability with the locks of the standard French musket known as the M1777. The same arguments raged and little was done. Eventually, in post-revolutionary France at Blanc's own manufacturing base at Roanne, interchangeability of flintlock parts was achieved on a large scale. New organisations of the processes, new divisions of labour and new machines contrived to keep the costs of interchangeable locks to no worse than 30% greater than the individual locks made by the old methods. When Napoleon's armies marched across Europe, the bases of mass production had been set.

1.2.4 Ammonia synthesis by bulk production

To make high explosives, such as TNT, requires chemicals called 'nitrates'. At the time of the First World War these were obtained from a natural mineral, which was mostly supplied from South America. Germany was cut off from these mineral supplies, so its chemical industry was charged with the task of manufacturing nitrates artificially.

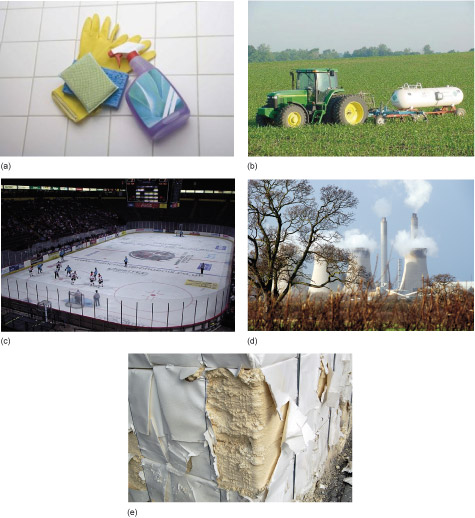

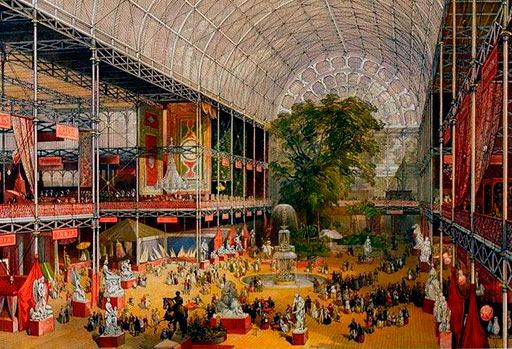

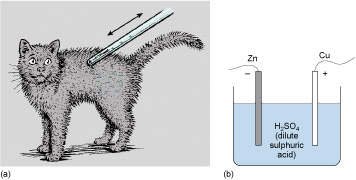

Nitrates are essentially composed of two gaseous elements: nitrogen and oxygen, which are chemically combined. Nitrogen is abundant in the atmosphere, around 76% by mass, but in an unreactive form. The task therefore came down to coaxing atmospheric nitrogen into a more reactive condition. A particularly good method of achieving this is to combine nitrogen with another gaseous element, hydrogen, to form ammonia. The Haber–Bosch process for making ammonia was the outcome of the German war-driven research and enabled Germany to maintain its supplies of munitions. The process is now the mainstay of ammonia production world-wide, with ammonia mainly used as the basis of nitrogenous fertiliser. That said, it is also used as a constituent of many household cleaners, as a refrigerant in industrial refrigeration systems, to scrub sulphur dioxide from the gases produced by burning fossil fuels in power plants, and for the pulping of wood in the paper industry (Figure 12).

Figure 12 is an array of 5 photographs. Reading left to right, top to bottom, the first image shows cleaning materials (rubber gloves, sponges and a hand-held spray dispenser) on a tiled floor. The next image shows a farm tractor pulling a fertilizer spreader across a crop field. The next image shows an ice rink being used for a game of ice hockey. The next picture shows a view of a power station identified as such by the row of cooling towers with steam coming from them and two taller chimneys with smoke coming from them. The final image is a close up photograph of a bale of wood pulp.

Activity 5 (video)

Watch Video 1 which describes the background to the discovery of how to manufacture ammonia artificially.

Transcript: Video 1 Manufacturing ammonia artificially (2 minutes)

The production of a chemical like ammonia is rather different from the mass production used for a ballpoint pen. This is because the product, ammonia, is not particularly useful in itself: it becomes the starting material in yet further processes. The product does not come out as discrete items, and production may be continuous. This type of production is often referred to as 'bulk production'.

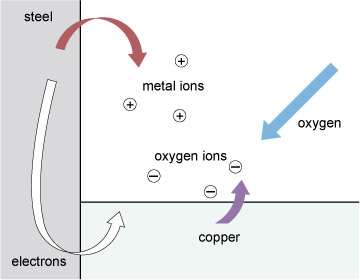

Ammonia production is a good example of engineering as the 'appliance of science', the science in this case being chemistry (see Engineering with atoms).

Engineering with atoms

When chemistry is applied as a means to an end, where that end is a product with a function, it can be thought of as engineering with atoms. Clearly chemistry is large-scale engineering, and not one atom at a time. The chemical reactions involved often progress without any intervention from humans, indeed some reactions can only be prevented by ensuring that the chemicals involved are kept well apart. However, there is still engineering skill required for chemical manufacture, such as creating the ideal conditions for bringing atoms together to produce the required product, be it a drug, a fertiliser or furniture polish.

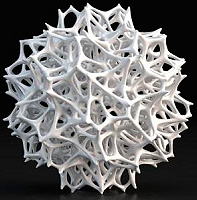

True 'atomic engineering' is beginning to emerge in the field of nanotechnology, where atoms and molecules are manipulated singly, in small groups, or in layers a few atoms thick. Many products are coated with thin films for special purposes. One can think of examples such as the anti-reflective coatings on lenses, hard coatings of titanium nitride on drill bits, and self-cleaning glass windows. Self-cleaning windows are coated with a very thin layer of titanium dioxide that breaks down dirt particles with the help of sunlight and the oxygen in the air, and have been available to consumers since the early 2000s.

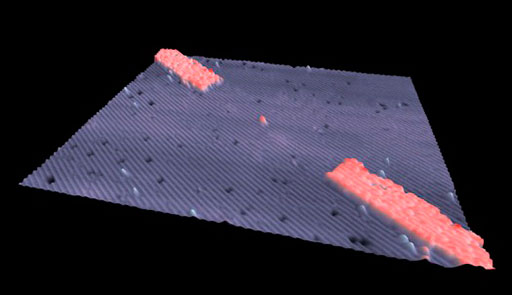

Manipulating atoms one by one takes this a step further, and the ability to do this is especially interesting to computer chip manufacturers. In February 2012, a group of researchers at the University of New South Wales announced that they had made a transistor consisting of a single atom of phosphorus. (A transistor is a device used for amplifying and switching electronic signals.) Figure 13 shows the transistor sitting in the space created by removing an atom of silicon from the surface of a silicon crystal. The two wide bands running diagonally across the image are the electrodes; the gap between them, where the phosphorus atom sits, is 20 nanometres wide. This particular transistor would be difficult to use as it behaves well only if cooled to −233 °C, about 40 K above absolute zero, but the ability to create this device will undoubtedly lead to more practical applications.
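The temperature quoted above can be converted to the kelvin scale with a one-line calculation. The sketch below uses the standard value of absolute zero, −273.15 °C, which is not given in the text; the function name is my own.

```python
ABSOLUTE_ZERO_CELSIUS = -273.15  # standard value, not quoted in the text

def celsius_to_kelvin(temperature_celsius):
    """Convert a Celsius temperature to kelvin."""
    return temperature_celsius - ABSOLUTE_ZERO_CELSIUS

operating_temperature_k = celsius_to_kelvin(-233)
print(f"-233 degrees C is about {operating_temperature_k:.0f} K above absolute zero")
```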

Figure 13 may be a photograph or a 3D computer model but it is clearly intended to represent the microscopic world. A square piece of silicon is shown as a ridged but otherwise flat surface that is lightly covered in small specks of other substances. The two electrodes are shown as additional substantial lines of material on the surface, starting at opposite corners and laid out along a common diagonal line. They are not long enough to meet and in the middle of the gap between them there is a single atom of phosphorus.

For the production of ammonia, the elements nitrogen and hydrogen have to be coaxed to react together: ammonia is the product of this reaction under the right conditions. The right proportions are also needed: 1000 kg of ammonia requires about 820 kg of nitrogen to react with about 180 kg of hydrogen (see Units of mass). The reactants (i.e. the chemicals that are reacting) are gases, and so is the product. So one constraint on the production system is that it has to supply the ingredients and remove the product in the gaseous state.
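The proportions quoted above follow from the make-up of an ammonia molecule, which contains one nitrogen atom and three hydrogen atoms. The sketch below works them out from approximate relative atomic masses (nitrogen 14.007, hydrogen 1.008); these values and the function name are not from the course text, so treat this as a check on the arithmetic rather than a definitive calculation.

```python
# Approximate relative atomic masses (assumed values, not given in the text).
ATOMIC_MASS_N = 14.007
ATOMIC_MASS_H = 1.008
# One ammonia molecule (NH3) has one nitrogen atom and three hydrogen atoms.
MOLECULAR_MASS_NH3 = ATOMIC_MASS_N + 3 * ATOMIC_MASS_H

def reactant_masses_kg(ammonia_kg):
    """Return (nitrogen_kg, hydrogen_kg) needed to make a given mass of ammonia."""
    nitrogen_kg = ammonia_kg * ATOMIC_MASS_N / MOLECULAR_MASS_NH3
    hydrogen_kg = ammonia_kg * 3 * ATOMIC_MASS_H / MOLECULAR_MASS_NH3
    return nitrogen_kg, hydrogen_kg

nitrogen_kg, hydrogen_kg = reactant_masses_kg(1000)
print(f"Nitrogen: {nitrogen_kg:.0f} kg, hydrogen: {hydrogen_kg:.0f} kg")
```

Rounded to the nearest 10 kg, the results agree with the 820 kg and 180 kg given in the text.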

Units of mass

Before defining the units of mass it's very important that the distinction between 'mass' and 'weight' is clear. In everyday language we use the term 'weight' when we're really talking about mass.

For example, many people are interested in their body mass index as a measure of health. When I visit my doctor she asks me to stand on the scales (Figure 14) so that she can 'weigh' me. The reading from the scales is 72 kilograms (kg). To calculate my body mass index my doctor also needs to know my height in metres, which is 1.74 m.

Figure 14 is a photograph of someone standing on platform weighing scales. Only the feet of the person are shown in the picture. The scales have a flat surface on which to stand and a large round instrument face with a pointer that rotates when the person stands on the platform. The face has two scales. The inner scale is graduated in kilograms and the outer scale is graduated in stones. The position on the scales where the pointer stops indicates the mass in either kilograms or stones.

Now, when my doctor wants to find my body mass index (BMI), she does the following calculation:

$$\text{BMI} = \frac{\text{'weight'}}{(\text{height})^2} = \frac{72}{(1.74)^2} = 23.8$$

Fortunately, this is in the healthy range of 20–25.
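The BMI calculation (the reading from the scales in kilograms divided by the square of the height in metres) can be checked in a few lines. The figures are the ones from the text; the function name is my own.

```python
def body_mass_index(mass_kg, height_m):
    """BMI is mass in kilograms divided by height in metres squared."""
    return mass_kg / height_m ** 2

bmi = body_mass_index(72, 1.74)
print(f"BMI = {bmi:.1f}")
print("In the 20 to 25 range quoted in the text:", 20 <= bmi <= 25)
```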

You may be wondering why I have put 'weight' in inverted commas and why BMI isn't referred to as 'body weight index'. Well, what my doctor is interested in when I stand on the scales is how much of me there is – that is, my mass. And in SI units we measure mass in kilograms. So, the equation should say

$$\text{BMI} = \frac{\text{mass}}{(\text{height})^2}$$

But what the scales are actually doing is measuring the force my body exerts on the scales when I stand on them. This force is produced by the gravitational effect of the Earth pulling on my body and this, in engineering terms, is my weight. Weight is measured in 'newtons'; however, the scales are calibrated to give a readout in kilograms.

If my doctor and I were on the Moon, where the effect of gravity is much less than it is on Earth, the force that my body exerts on the scales would be less and my weight would be lower. But my mass would not change – there would still be the same amount of me!
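The difference between mass and weight can be illustrated numerically. Weight is the mass multiplied by the local acceleration due to gravity. The values used here, about 9.81 m/s² at the Earth's surface and about 1.62 m/s² on the Moon, are commonly quoted approximations and are not given in the text; the names in the code are my own.

```python
# Approximate accelerations due to gravity (assumed values, not from the text).
G_EARTH_M_PER_S2 = 9.81
G_MOON_M_PER_S2 = 1.62

def weight_newtons(mass_kg, gravity_m_per_s2):
    """Weight is the force of gravity on a mass, measured in newtons."""
    return mass_kg * gravity_m_per_s2

mass_kg = 72  # the same on the Earth and on the Moon
weight_on_earth = weight_newtons(mass_kg, G_EARTH_M_PER_S2)
weight_on_moon = weight_newtons(mass_kg, G_MOON_M_PER_S2)

print(f"Mass: {mass_kg} kg in both places")
print(f"Weight on Earth: {weight_on_earth:.0f} N")
print(f"Weight on the Moon: {weight_on_moon:.0f} N")
```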

The basic unit of mass is the gram, but it is actually the kilogram (1000 grams) that is standardised and which provides the SI unit for mass. A litre of pure water was defined to have a mass of 1 kilogram. Again the definition was translated into a single prototype, this time as a cylinder of a platinum–iridium alloy, which is dense and uncorrodable. The standard kilogram is actually quite a small cylinder (Figure 15), about 4 cm high and wide, housed in an environmentally controlled safe at the BIPM (the International Bureau of Weights and Measures) in Paris.

Figure 15 is a photograph of the standard kilogram cylinder housed in a small glass bell jar. Covering this bell jar is another larger glass bell jar and covering that bell jar is another even larger glass bell jar. On the top of the largest external bell jar there is a tap and tube connection, used presumably to alter the atmosphere in the jars once they have been placed in position over the standard. Shown next to the jars is a pair of tongs designed for picking up the kilogram cylinder thereby avoiding contact by human hand, which could possibly alter the mass of the cylinder.

Once again – as with length – multiples and subdivisions of the basic unit, the gram, are useful; and, because the SI generally uses a factor of 1000 to define multiples and subdivisions, the prefixes that you met in connection with length are also used with mass.

| Unit | Factor | Symbol |
|---|---|---|
| microgram | × 1/1 000 000 | μg |
| milligram | × 1/1000 | mg |
| gram | 1 | g |
| kilogram | × 1000 | kg |
| tonne | × 1 000 000 | t |

Notice that 1000 kg (a megagram) is actually called a tonne. (Its spelling distinguishes it from the imperial 'ton', though they are very close – to within about 2%. The imperial system of units is discussed further later in this section.)
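Converting between these units is a matter of multiplying and dividing by the factors in the table. The following sketch stores each factor relative to the gram; the dictionary, the function name and the plain-text unit labels (such as `ug` for microgram) are my own choices for illustration.

```python
# Number of grams in one of each unit, taken from the table of factors.
GRAMS_PER_UNIT = {
    "ug": 1 / 1_000_000,  # microgram
    "mg": 1 / 1000,       # milligram
    "g": 1,               # gram
    "kg": 1000,           # kilogram
    "t": 1_000_000,       # tonne
}

def convert_mass(value, from_unit, to_unit):
    """Convert a mass by going through grams as the common unit."""
    return value * GRAMS_PER_UNIT[from_unit] / GRAMS_PER_UNIT[to_unit]

print(convert_mass(1000, "kg", "t"), "tonne")   # 1000 kg expressed in tonnes
print(convert_mass(100, "g", "kg"), "kg")       # a 100 g bar of chocolate
```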

You will find examples of SI units of mass in everyday situations, often in combination with other units. Listen out for figures describing air pollution in micrograms per cubic metre (μg m⁻³). The legal limit (in 2012 in the UK) for the concentration of alcohol in a driver's blood is 80 mg per 100 cm³. Chocolate is often sold in bars of 100 g and sugar in 1 kg bags.

Theoretical chemistry provides us with a clear understanding of what conditions are needed to produce ammonia. There is no need to go into great detail here regarding the chemical principles involved, but it is worth looking at their consequences as an example of the fundamental physical laws that constrain much of engineering. Making ammonia is not as simple as stirring the raw materials together in a bucket; and understanding the process by which ammonia is formed allows us to make it as efficiently as possible.

First, the reaction between nitrogen and hydrogen proceeds better at high pressures. If the engineer can provide a high-pressure system for making the ammonia, it will be much more efficient, and so more productive, than one operating at normal atmospheric pressure.

Secondly, chemical reactions often produce heat, and the formation of ammonia certainly does this. However, if the temperature becomes too high, the reaction can become slow; so the engineer can help production by getting rid of the heat generated by the reaction, thereby keeping production high.

Thirdly, it's important to extract the ammonia as it is being made. As more and more ammonia is produced in the reaction chamber, using up the nitrogen and hydrogen, the reaction will slow down and eventually stop. This would happen before all the nitrogen and hydrogen were used up: the presence of the ammonia slows the reaction. Extracting the ammonia product and pumping in more ingredients will keep production continuous.

This is an example where the engineering is driven by the chemistry. Without an understanding of how ammonia is made by this reaction, and how chemical reactions behave in practice, it would be an extremely inefficient process, and indeed perhaps impossible to manufacture ammonia on any viable industrial scale. Yet the chemical knowledge is insufficient without the engineering expertise to build a plant to the required specification: a plant that will fulfil the conditions that I have outlined above.

Having collected the arguments from chemistry on the conditions to make ammonia, it remains to ask how to make it quickly. Typically a plant will only be economic at production rates of a few thousand tonnes per day. The optimum pressures and temperatures need to be found. That is a job for theory and for development research.

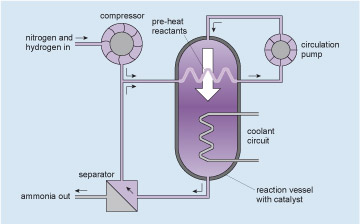

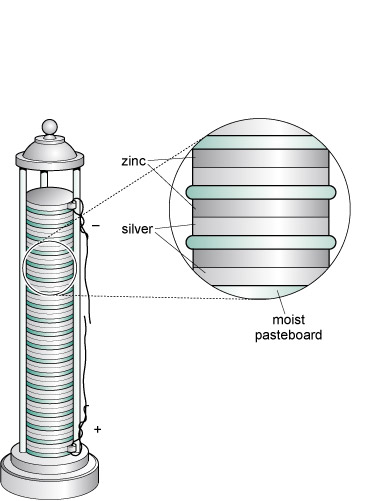

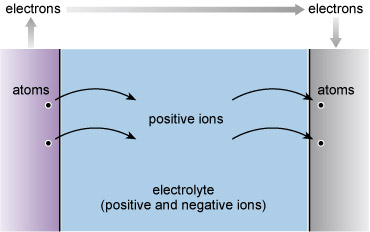

So, with all this science understood, our chemical engineers have to design and build a system that takes advantage of it. Figure 16 indicates the principles of the plant.

Figure 16 is a schematic simplified layout or map of an ammonia production system which has 4 components connected by pipelines. The largest component is a reaction vessel with catalyst and this is located in the centre of the layout. It is shown in section form so that the internal details can be displayed. The top and bottom are sealed with semicircular ends. There is a vertical inlet at the top and a vertical outlet at the bottom. A horizontal but zigzagged pipeline crosses through the vessel which represents a heat exchanger that takes heat out of the gases in the reaction vessel and transfers it into the gases flowing through the pipe. Further down the vessel there is another zigzagged pipeline passing through the reaction vessel which represents another heat exchanger. This heat exchanger takes more heat out of the gases in the reaction vessel and transfers it into a coolant circuit. The details of the coolant circuit are not shown. A compressor is located in the top left-hand corner and is shown as a round object with internal rotating vanes. The vanes are curved to symbolise the ability to increase pressure. There is a horizontal pipe inlet on its left side and a vertical pipe outlet at the bottom. In the top right hand corner of the layout there is a circulation pump. The symbol for the pump is similar to that of the compressor but it is smaller with straight vanes. The straight vanes indicate that this pump increases flow but not pressure. There is a vertical inlet pipe at the bottom and a vertical outlet pipe at the top. In the bottom left hand corner there is a separator unit. This is shown as a square with a diagonal line going from the top left corner to the bottom right corner. There is a horizontal inlet pipe on its right side, a vertical outlet pipe on the top and another horizontal outlet pipe on its left side. There are pipelines shown connecting these components. They are depicted using two parallel lines about 1 mm apart and they are either horizontal or vertical. An arrow next to the pipeline indicates the direction of flow which can be up or down, or to the right or to the left.

A horizontal pipeline enters the layout at the top left-hand corner and carries nitrogen and hydrogen into the system, flowing to the right. It connects to the left inlet of the compressor. From the bottom outlet of the compressor, a vertical pipe flows down to meet a vertical pipe flowing up from the separator. At the meeting of these pipelines there is a T junction. Imagine the T is on its side with the normally vertical part of the T now horizontal flowing to the right. The two vertical pipelines flow into the T and then flow out of the T to the right. The pipeline continues to the right and connects with the left side inlet of the upper heat exchanger that is in the reaction vessel. Connected to the right side outlet of the heat exchanger is a horizontal pipeline which continues to flow to the right. It then turns left to become vertical and flows up to connect with the bottom inlet of the circulation pump. Connected to the outlet of the circulation pump is a vertical pipeline which flows up. It then turns left to become horizontal and flows to the left. It then turns left again to become vertical and flows down. This pipeline then connects to the top inlet of the reaction vessel. Connected to the bottom outlet of the reaction vessel is a vertical pipeline which flows down. It then turns right to become horizontal and flows to the left. This pipeline then connects to the inlet on the right side of the separator unit. A vertical pipeline is connected to the top outlet of the separator unit and carries unreacted nitrogen and hydrogen which flows up to connect to the T junction mentioned before. A horizontal pipeline is connected to the other outlet, on the left side of the separator, and flows to the left, carrying ammonia out of the system. The pipeline leaves the layout at the bottom left-hand corner.

The gases at high pressure are pumped around the circuit shown (follow the arrows starting at the circulation pump, which pumps the mixed, but unreacted, ingredients into the reaction vessel). The gases enter the reaction vessel at the top and some of the nitrogen and hydrogen react to form ammonia as they pass over a catalyst within the reaction vessel (see Catalysts and converters). The mixture leaving the reaction vessel at the bottom passes to the separator where the ammonia product is removed. The unreacted nitrogen and hydrogen, together with enough extra nitrogen and hydrogen injected from the compressor to make up for what has reacted, return to the pump via a heat exchanger within the reaction vessel which both pre-heats the reactants and removes some of the reaction heat. More excess heat is removed from the reaction vessel by the coolant circuit. Thus a continuous production of ammonia is achieved. I will not go into the subtleties of the internal design of the reaction vessel which make the process efficient, nor of the control systems which link the heat and gas flows. Rather I will concentrate on the engineering of the reaction vessel itself.

Catalysts and converters

You may have come across the term catalytic converter, probably in the context of cars and exhaust emissions. A catalyst is something that speeds up or otherwise aids a chemical reaction. A true catalyst doesn't participate in the reaction at all: it just helps it on the way, and is unaffected itself, being left behind as good as new once the reaction is over. In petrol- and diesel-engined cars, a chamber with a platinum-based catalyst is fitted as part of the exhaust system. Some gases in the exhaust, particularly nitrogen oxides and unburnt fuel molecules, decompose into more benign gases when they come into contact with the catalyst.

In the case of ammonia production, iron granules with a large surface area are the usual catalyst.

The ammonia reaction vessel is large, perhaps a cylinder 20 m high by 2 m in diameter, which has a volume of about 63 m³ (see Units of area and volume). For efficient performance, the pressure needs to be about 350 times atmospheric pressure (or '350 atmospheres').
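The volume quoted above can be checked with a few lines of Python. This is only a sketch: it treats the vessel as a plain cylinder (ignoring the rounded ends and the wall thickness), and the function name `cylinder_volume` is made up for this illustration. The conversion of 350 atmospheres to pascals uses the standard atmosphere, 101 325 Pa, a figure that does not appear in the text.

```python
import math

ATM_IN_PA = 101_325  # one standard atmosphere in pascals (assumed conversion factor)


def cylinder_volume(height_m: float, diameter_m: float) -> float:
    """Volume in cubic metres of a plain cylinder (rounded ends ignored)."""
    radius_m = diameter_m / 2
    return math.pi * radius_m ** 2 * height_m


volume_m3 = cylinder_volume(height_m=20, diameter_m=2)
pressure_pa = 350 * ATM_IN_PA

print(f"Volume:   {volume_m3:.1f} m^3")           # about 62.8, i.e. roughly 63
print(f"Pressure: {pressure_pa / 1e6:.1f} MPa")   # about 35.5
```

The calculation gives roughly 62.8 m³, which rounds to the 63 m³ quoted, and a working pressure of about 35.5 MPa.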

The contents of the reaction vessel, at a temperature of several hundred degrees Celsius, include a poisonous gas, ammonia, and an explosive one, hydrogen. The consequences of a leak could be extremely dangerous. So, beyond the primary engineering to provide function, an additional issue here is safety. How do you make a vessel of that size, capable of holding the pressure, with at least six pipes going into it, and guarantee that it is safe? The answer lies largely in 'past practice'. Because pressure vessels have been made for a long time, for many purposes (for example, high-pressure steam boilers in power stations), the 'know how' has gradually developed. Each increment of extra performance demanded from time to time has pushed knowledge a bit further, and the occasional accident serves as a salutary reminder that we don't always get it right.

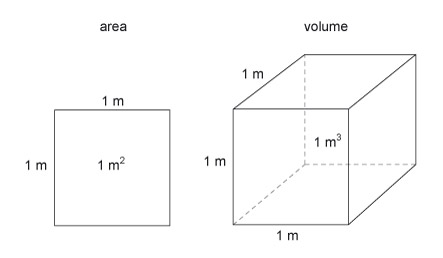

Units of area and volume

The basic units of area and volume are the 'square metre' (m²) and the 'cubic metre' (m³) (Figure 17). These are simply shapes which have sides that are 1 m in length.

Figure 17 consists of two diagrams; one represents a unit of area and the other represents a unit of volume. The unit of area is represented by a square. Each side is labelled as being 1 metre long and the space bounded by the square is labelled as being 1 metre squared. The unit of volume is represented by a cube. The cube is drawn using a technique of drawing called oblique projection. Whenever a cube is viewed, only 3 of the 6 faces will be visible and their shape will always be distorted by the effects of perspective. Perspective drawing is very difficult and time consuming and so engineers utilize other methods of drawing a solid object. Oblique projection is perhaps the easiest as one face of the object is drawn using its true geometric shape. In this case, one face of the cube is drawn as a square. The right hand surface and the top surface are also visible. Consider the top surface. The edge shared with the front surface is horizontal, and the edge shared with the rear hidden surface is also horizontal. The side edges are drawn at an angle which in this case is about 38 degrees. The sides are drawn a little shorter. The top surface is therefore drawn as a parallelogram. Consider the right hand side surface. The edge shared with the front surface is vertical, and the edge shared with the rear hidden surface is also vertical. The top edge is shared with the top surface and is at the same angle of about 38 degrees. The bottom edge is shared with the hidden bottom surface of the cube and is parallel with the top edge. The side surface is therefore drawn as a parallelogram. A cube has 12 edges but only 9 edges are visible. Although a square has 4 edges and three square surfaces are visible, the side and top surfaces each share an edge with the front surface and share another edge with each other. The cube has another 3 edges and 3 surfaces that are not visible to the viewer. 
The hidden edges are shown using another technique of drawing – they are drawn with dashed lines. One of these hidden edges is vertical, one is horizontal and the other is at the angle of about 38 degrees. A cube has 8 corners but only 7 are visible. The three hidden lines join at that hidden corner and as a result the three hidden surfaces are revealed by the geometry of the lines. Each side of the cube is labelled as 1 metre long and the space inside the cube, which represents the volume of the cube, is labelled as 1 metre cubed.

Square metres are inconveniently small for measuring land holdings so the French Revolutionary Committee decided to define 100 m² (i.e. 10 m × 10 m) as 1 are (pronounced 'air'). A more frequently used measure of land area is the 'hectare'.

Activity 6 (example)

Use Table 1 in Section 1.2.1 to remind yourself what 'hect' means and then work out how many square metres there are in a hectare.

Answer

'Hect' means × 100, so 1 hectare is 100 are, which is

- 100 × 100 m² = 10 000 m².

Cubic metres, on the other hand, are inconveniently large for measuring a person's daily intake of food or drink. You will often come across the cubic centimetre, cm³ (i.e. 1 cm × 1 cm × 1 cm, or 0.01 m × 0.01 m × 0.01 m) or other units derived from the metre. A related unit of volume, the litre, has also been defined and is commonly used to measure volumes of liquid. A litre is the volume of a cube with an edge length of 1 decimetre (10 cm). The symbol for 'litre' is its initial letter, l, but this is so easily confused with the number 1 (for example, eleven litres can be written as 11 l) that it is usually safest to write out the word litre.

An even smaller unit of volume, the millilitre, is also useful. It is one thousandth of a litre. The symbol for millilitre is ml, which is not as ambiguous as the litre symbol.

Activity 7 (self-assessment)

- a. Use Table 2 in Section 1.2.1 to remind yourself what 'deci' means, and then work out how many litres there are in a cubic metre.

- b. A bottle of water has a volume of 500 ml. How many cl is this?

- c. What is the relationship between 1 ml and 1 cm³?

- d. The standard size of a British brick is 215 mm × 102.5 mm × 65 mm. First guess its volume in litres, then calculate it.

- e. How many bricks make a cubic metre of wall (ignoring the mortar)?

Answer

- a. The 'deci' prefix means × 1/10, which is equivalent to ÷10, so there are 10 dm in one metre. Hence,

- 1 m³ = 10 × 10 × 10 dm³ = 1000 litres.

- b. The 'milli' prefix means ÷1000. The 'centi' prefix means ÷100. So there are 10 ml in 1 cl and, therefore, 500 ml = 50 cl.

- c. 10 cm = 1 dm so

- 10 × 10 × 10 cm³ = 1 dm³ = 1 litre.

- So 1000 cm³ make 1 litre, and hence 1 ml is the same as 1 cm³.

- d. The dimensions of the brick in cm are 21.5 cm × 10.25 cm × 6.5 cm.

- The volume of the brick in cm³ is therefore

- 21.5 × 10.25 × 6.5 cm³ ≈ 1432 cm³.

- But 1 ml is the same as 1 cm³ so the volume of the brick is 1432 ml, or 1.432 litres.

- My guess, thinking of a litre box of drink by comparison, was that the brick was 'similar' and would have a volume of about one litre. I was wrong by a factor of 1.432.

- e. There are 1000 litres in a cubic metre. We know from (d) that each brick is 1.432 litres in volume, so we'll need 1000/1.432 = 698.3 bricks; in other words, approximately 700 bricks.
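If you would like to check the arithmetic in the answers above, the following Python sketch repeats the conversions. The constant and function names are invented for this illustration; the only facts used are those in the answers (10 ml in 1 cl, 1000 litres in a cubic metre, and a litre being a cube of 1 dm, or 100 mm, edge length).

```python
ML_PER_CL = 10                # 'centi' is ÷100 and 'milli' is ÷1000, so 10 ml per cl
LITRES_PER_M3 = 10 ** 3       # a 1 m cube is 10 dm on each edge
MM3_PER_LITRE = 100 ** 3      # a 1 dm cube is 100 mm on each edge


def brick_volume_litres(length_mm: float, width_mm: float, height_mm: float) -> float:
    """Volume of a rectangular brick in litres, from dimensions in millimetres."""
    return length_mm * width_mm * height_mm / MM3_PER_LITRE


bottle_cl = 500 / ML_PER_CL                      # part (b)
brick_l = brick_volume_litres(215, 102.5, 65)    # part (d)
bricks_per_m3 = LITRES_PER_M3 / brick_l          # part (e)

print(f"500 ml = {bottle_cl:.0f} cl")
print(f"Brick volume = {brick_l:.3f} litres")
print(f"Bricks per cubic metre = {bricks_per_m3:.1f}")
```

Because the sketch keeps the unrounded brick volume (1.4324375 litres), it gives about 698.1 bricks rather than the 698.3 obtained from the rounded figure of 1.432 litres. Either way the answer is approximately 700 bricks.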

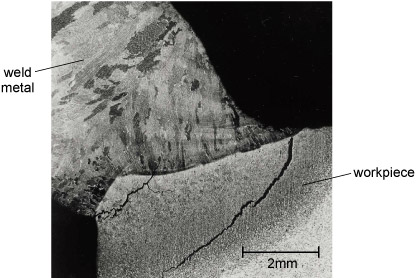

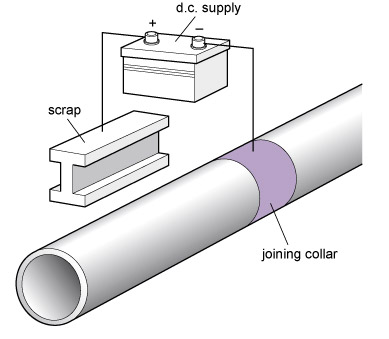

1.3 Standards

When boilers were first made for steam engines, little was known about steel. Plates were riveted together and engineers were ignorant of the stresses in the material. Of course there were successes and there were failures; both can be learned from, even if the lessons are only 'use thicker steel plates'. Later it became possible to weld plates, and new lessons had to be learned.

All this accumulated experience is brought together into a 'standard'. The standard will dictate critical features of the design, construction methods and safety testing of pressure vessels. For example it will guide the designer in relating wall thickness to diameter and pressure. For the ammonia vessel I described in the previous section, the standard specifies that the plate would have to be 150 mm thick. Then permitted types of steel and the welding methods will be specified. Finally the whole thing has to be tested to beyond its working pressure.
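To get a feel for how wall thickness, diameter and pressure are related, here is a rough Python sketch. It is not the method laid down in any standard. It uses the textbook 'thin-walled cylinder' approximation, in which the stress around the circumference of the wall (the hoop stress) is pressure × diameter ÷ (2 × thickness). That formula, the assumption that 2 m is the internal diameter, and the conversion 1 atmosphere = 101 325 Pa are all introduced here for illustration. With a wall this thick relative to the diameter the approximation is crude, so treat the result as an order-of-magnitude check only.

```python
ATM_IN_PA = 101_325  # one standard atmosphere in pascals (assumed conversion factor)


def hoop_stress_pa(pressure_pa: float, diameter_m: float, thickness_m: float) -> float:
    """Thin-walled cylinder estimate of hoop stress (an approximation, not a design rule)."""
    return pressure_pa * diameter_m / (2 * thickness_m)


pressure_pa = 350 * ATM_IN_PA   # working pressure of the ammonia vessel
diameter_m = 2.0                # assumed to be the internal diameter
thickness_m = 0.150             # 150 mm plate, as quoted in the text

stress_pa = hoop_stress_pa(pressure_pa, diameter_m, thickness_m)
print(f"Estimated hoop stress: {stress_pa / 1e6:.0f} MPa")
```

On these assumptions the wall carries a stress of roughly 236 MPa. Halving the thickness would double that figure, which is why a standard ties the permitted thickness to both the diameter and the pressure.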

Standards govern the design and construction of virtually everything which carries any safety implications. Any company that transgresses the standards, and whose product is then responsible for an accident, will find itself in serious trouble. For the moment though it is interesting to contemplate the apparent conflict between opportunities for innovation and the requirement to adhere to standards. As standards derive from past practice, they are apparently not conducive to innovation. What is the way out of this dilemma? In reality standards are revised, amended, and superseded to account for changes in knowledge and practice.

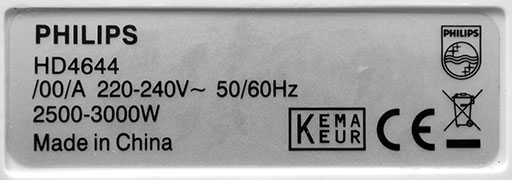

You can look around your home to try to find evidence of this – you should find the phrase 'Conforms to BS xxxx' (the BS standing for 'British Standard', and the xxxx being a number) or similar. Electrical goods, or their packaging or instruction manuals, would be a good place to search. For example, I found in the instructions for a French-made electric kettle that its moulded-onto-the-wire mains plug conformed to BS 1363 and that only fuses approved to BS 1362 should be used as replacements. Furthermore the kettle was constructed to comply with the radio interference requirements of EEC directive 87/308/EEC. I have also noticed the number BS EN 228 (where the EN indicates that the standard is applicable throughout Europe, not just in the UK) on fuel pumps for unleaded petrol. These last two regulations appear to control the function or composition of the product rather than its safety.

Activity 8 (self-assessment)

Into which of the categories of one-off production, mass production and bulk production would you put each item in the following list?

- a. A modern 3-bedroom house on a development of 30 houses.

- b. Liquid oxygen for a chemical laboratory.

- c. A USB flash drive.

- d. A Blu-ray Disc of a movie.

- e. The Channel Tunnel.

- f. A photocopier.

- g. Garden compost.

- h. Car tyres.

- i. A hand-made paperweight.

Answer

- a. A modern 3-bedroom house on a development of 30 houses is a one-off (no economy of scale here).

- b. Liquid oxygen for a chemical laboratory is produced in bulk.

- c. A USB flash drive is mass produced.

- d. A Blu-ray Disc is mass produced.

- e. The Channel Tunnel is a one-off.

- f. A photocopier is mass produced.

- g. Garden compost is produced in bulk.

- h. Car tyres are mass produced.

- i. A hand-made paperweight is a one-off.

1.4 Additional thoughts

So where have we reached so far?

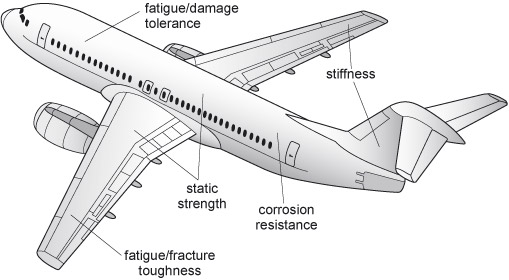

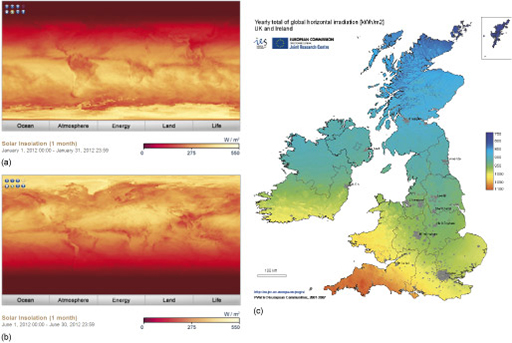

First we have seen that, beyond the shelf-building level of engineering, which I suppose most of us can undertake with more or less skill, there is a profession of engineering which dates back a long way. We shall examine the origins of this profession over the coming sections. Professional engineers, working either alone or in teams, can undertake much more complex tasks by virtue of their talents, training, knowledge and experience. The great diversity of engineered products has led to many specialisms within the profession.