Session 2: GenAI: A risky business? – 120 minutes

2 AI risks

GenAI is becoming increasingly integrated into decision-making processes across organisations and sectors. As its use spreads rapidly, the associated risks are also increasing.

This session explores some of the main legal, ethical, and operational challenges that arise when AI systems produce inaccurate, biased, or opaque outcomes. It also highlights why accuracy and reliability are essential, how data governance and privacy frameworks apply, and what organisations should do to ensure AI is deployed responsibly.

What might some of the risks be?

Thinking about GenAI, what do you think some of the key risks might be?

Make a note of these below.

Discussion

This part of the course considers issues such as intellectual property infringement, algorithmic bias, and professional accountability – particularly in regulated sectors – and offers some considerations on what is needed to manage GenAI risks effectively, ensuring not just technical performance but also public trust, legal compliance, and ethical alignment.

To ensure appropriate governance, managers must assess how extensively their teams are utilising GenAI tools – and define clear boundaries between automated processes and human judgment.

This evaluation should explore three key considerations.

Task criticality

Determine whether GenAI is being applied in high-risk or sensitive domains such as healthcare, legal advice, or regulatory reporting. In these cases, robust human oversight must be mandatory.

Frequency of use

Review whether GenAI is embedded in routine workflows or reserved for occasional, project-specific applications. This will inform appropriate oversight and resource allocation.

Level of autonomy

Clarify the role GenAI plays: is it generating final outputs independently, or is it functioning as a support tool offering content suggestions for human validation?

This evaluation process will help to identify where GenAI is being used, or is planned to be used, within an organisation. Once these uses have been identified, the risks can be considered in more detail.
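For teams that keep their GenAI inventory in a script or spreadsheet export, the three considerations above could be recorded along the following lines. This is only a minimal sketch: the names `UseCase`, `Frequency`, `Autonomy` and `oversight_notes` are hypothetical, and the wording of the notes, including the decision to flag independently generated final outputs, is an illustrative assumption rather than a rule taken from this course.

```python
from dataclasses import dataclass
from enum import Enum


class Frequency(Enum):
    OCCASIONAL = "occasional, project-specific use"
    ROUTINE = "embedded in routine workflows"


class Autonomy(Enum):
    SUPPORT_TOOL = "suggestions validated by a human"
    FINAL_OUTPUT = "final outputs generated independently"


@dataclass
class UseCase:
    """One GenAI use within a team (hypothetical record layout)."""
    team: str
    description: str
    high_risk_domain: bool  # e.g. healthcare, legal advice, regulatory reporting
    frequency: Frequency
    autonomy: Autonomy


def oversight_notes(use_case: UseCase) -> list[str]:
    """Return simple governance prompts for a use case."""
    notes = []
    if use_case.high_risk_domain:
        # Task criticality: high-risk or sensitive domains need human oversight.
        notes.append("Mandatory human oversight (high-risk domain)")
    if use_case.autonomy is Autonomy.FINAL_OUTPUT:
        # Assumption: independently generated outputs are flagged for review.
        notes.append("Define a human validation step before outputs are used")
    if use_case.frequency is Frequency.ROUTINE:
        # Frequency of use informs oversight and resource allocation.
        notes.append("Plan ongoing oversight and resources for routine use")
    return notes


if __name__ == "__main__":
    example = UseCase(
        team="Compliance",
        description="Drafting regulatory reports",
        high_risk_domain=True,
        frequency=Frequency.ROUTINE,
        autonomy=Autonomy.SUPPORT_TOOL,
    )
    for note in oversight_notes(example):
        print(note)
```

A record like this does not replace the manager's judgement; it simply makes the answers to the three questions visible in one place before the risks are assessed.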

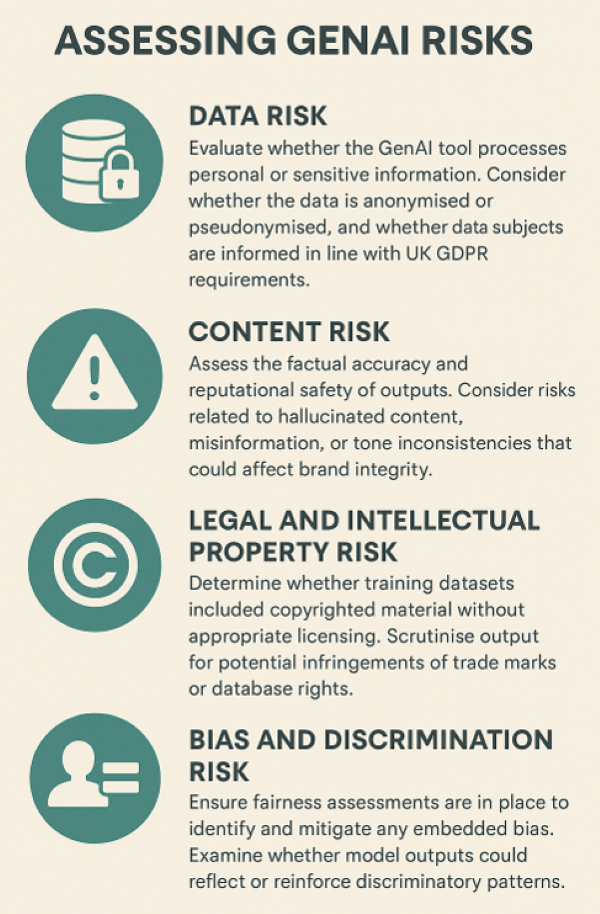

GenAI introduces a spectrum of emerging and complex risks, particularly in areas where data, ethics, and legality intersect. These risks encompass privacy breaches, algorithmic bias, misinformation, copyright infringement, cybersecurity threats, and potential reputational harm.

To mitigate these challenges, organisations are advised to undertake a comprehensive risk assessment for each GenAI use case. This process should be led by managers and informed by multidisciplinary stakeholders, including legal counsel, IT professionals, compliance officers, and ethics advisors.

Utilising a risk matrix – categorising risks by both likelihood and impact – can support effective decision-making and guide proportionate mitigation measures. It can also help to determine the key areas of risk.
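As an illustration, a likelihood-by-impact risk matrix can be sketched in a few lines of Python. The 1 to 5 scales, the multiplication of the two scores, the band thresholds and the example ratings below are all assumptions chosen for illustration; an organisation would substitute the scales and thresholds from its own risk framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """A single GenAI risk rated on assumed 1-5 scales."""
    name: str
    likelihood: int  # 1 = rare, 5 = almost certain (assumed scale)
    impact: int      # 1 = negligible, 5 = severe (assumed scale)

    def __post_init__(self):
        for field_name in ("likelihood", "impact"):
            value = getattr(self, field_name)
            if not 1 <= value <= 5:
                raise ValueError(f"{field_name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        # A common convention: score = likelihood x impact.
        return self.likelihood * self.impact


def band(risk: Risk) -> str:
    """Map a score to a band; the thresholds are illustrative assumptions."""
    if risk.score >= 15:
        return "high"
    if risk.score >= 6:
        return "medium"
    return "low"


def rank(risks: list[Risk]) -> list[Risk]:
    """Order risks from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


if __name__ == "__main__":
    # Hypothetical ratings for one use case, not real assessment data.
    risks = [
        Risk("Copyright infringement", likelihood=2, impact=4),
        Risk("Privacy breach", likelihood=3, impact=5),
        Risk("Misinformation in outputs", likelihood=4, impact=3),
    ]
    for r in rank(risks):
        print(f"{r.name}: score {r.score} ({band(r)})")
```

Ranking the risks in this way gives the multidisciplinary group a shared starting point for deciding which mitigation measures are proportionate.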

As highlighted in the UK Government’s AI Opportunities Action Plan (2025), there is a growing imperative for organisations to implement robust risk governance frameworks. Proactive, structured oversight of AI systems will be essential to ensure ethical compliance and operational resilience.

Session 1: GenAI: To use or not to use? – 20 minutes