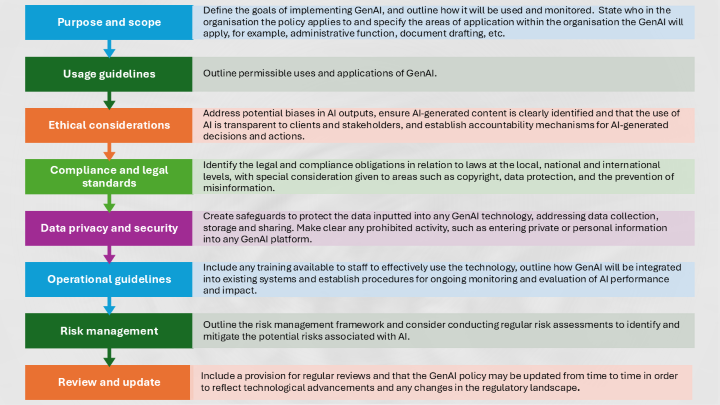

1. Purpose and scope: Define the goals of implementing GenAI, and outline how it will be used and monitored. State who in the organisation the policy applies to and specify the areas in which GenAI will be used, for example, administrative functions, document drafting, etc.
2. Usage guidelines: Outline permissible uses and applications of GenAI.
3. Ethical considerations: Address potential biases in AI outputs, ensure AI-generated content is clearly identified and that the use of AI is transparent to clients and stakeholders, and establish accountability mechanisms for AI-generated decisions and actions.
4. Compliance and legal standards: Identify the legal and compliance obligations under local, national and international law, with special consideration given to areas such as copyright, data protection, and the prevention of misinformation.
5. Data privacy and security: Create safeguards to protect the data entered into any GenAI technology, addressing data collection, storage and sharing. Make clear any prohibited activity, such as entering private or personal information into any GenAI platform.
6. Operational guidelines: Describe any training available to help staff use the technology effectively, outline how GenAI will be integrated into existing systems, and establish procedures for ongoing monitoring and evaluation of AI performance and impact.
7. Risk management: Outline the risk management framework and consider conducting regular risk assessments to identify and mitigate the potential risks associated with AI.
8. Review and update: Include a provision for regular reviews, and state that the GenAI policy may be updated from time to time to reflect technological advancements and any changes in the regulatory landscape.