2 Total Management: What hope for leadership?

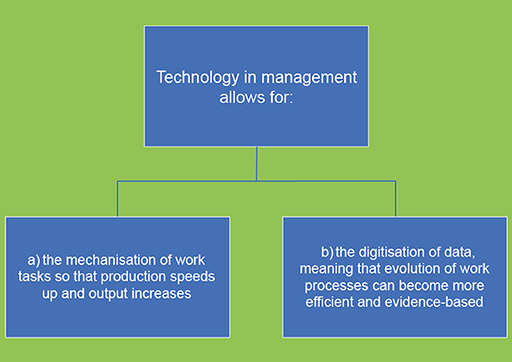

Management goes hand in hand with technology, which is deployed to automate decision-making and make situations routine.

In the workplace, technology enables greater division of labour, or no human labour at all, as people are replaced by machines. Such developments remove human discretion from systems, meaning that ever greater proportions of our lives are automated: most obviously the number of tasks one should perform at work, and the order in which they should be completed. This tendency towards ever greater use of automated technology for decision-making is known as ‘Total Management’, under which ‘workers (and indeed managers) are increasingly subjected to automated work processes, with performance and judgments about aptitude assessed by algorithms rather than people’ (Grint and Smolović Jones, 2022, p.4; Smolović Jones and Hollis, 2022).

On one level, digital technology and automation promise liberation. More routine, dirty and unpleasant work can be outsourced to machines. Why would we want to clean toilets ourselves if a robot could do so for us? In theory, outsourcing to machines in this way would give people more freedom to pursue their passions.

However, the intensification of digital technology and the ways in which it manages our lives can normalise and lock in prejudice and injustice. In a fundamental sense, algorithms are very human. Being programmed by humans, they start off informed by human values and priorities. Ideally, they would then be set to work at enacting our values in ways that maximise freedom, care, health and happiness. However, we know that humans can be prejudiced, and such tendencies can be unconscious as well as conscious. What algorithms do, at their worst, is lock in assumptions, attitudes and values of a particular time and group of people and normalise them into decision-making.

The word ‘automation’ therefore works on two levels. The first is physical, meaning on the level of the technology itself, which we regard as increasingly normal in directing our lives. Smartphones, for example, have become extensions of our bodies, always with us and always connecting us. The second level is ideological, which works by normalising certain values and assumptions through technology (Berardi, 2019). Racism, as well as other forms of inequality, can become routinised and locked in to everyday decisions and behaviour.

You will now explore the dangers of automated racism – as well as the possibilities for leadership in challenging it – through an activity.

Activity 2 Racist algorithms?

Watch the following video, in which ‘poet of code’ and founder of the Algorithmic Justice League, Joy Buolamwini, talks about the dangers of algorithms.

As you watch, try to think of an example in your life where algorithms have created and/or normalised an injustice. If you cannot think of one, you might have seen an instance in the news. You could do some internet research, or ask some friends and colleagues what they think. Plenty of examples exist in, for instance, the realms of policing, education and business. After you do this, try to think about the role Black leadership could play in the future in changing how societies and organisations approach algorithms in their activities.

Transcript: Video 1 Joy Buolamwini – how I’m fighting bias in algorithms

Discussion

In England in 2020, there was mass anger as the government used an algorithm to determine the A level results of school pupils who were unable to sit their exams because of the COVID-19 pandemic. The result was that ‘students from disadvantaged backgrounds were more likely to be downgraded’ from the predicted grades submitted by their teachers, whereas ‘private schools received more than twice as many A and A* grades as comprehensives’ (Duncan et al., 2020). The effects of the algorithm were disproportionately damaging for racial and ethnic minority students, who were more likely to be studying at comprehensive schools in more deprived areas.

Black leadership could have a powerful influence in shaping the values that inform the design of algorithmic code. Automation and artificial intelligence hold the capacity to enrich everyone’s lives. Ensuring that they are used for the ends of racial equity and justice, however, will be a matter of ongoing struggle.

Management can feel safe and even comforting. It tells us that the world can be tamed, and indeed it often does just that. However, when management becomes too dominant it turns into Total Management, with serious ethical consequences. There is a role for leadership in disrupting organisations and societies that become too reliant on management.

You will now move on to explore the other practice often confused with leadership – command.