3.3 Failures and limits of empiricist approaches

You have seen that the empiricist approach succeeded where the rationalist approach failed. The empiricist approach conquered the field with the availability of sufficient data and computing power. The rationalist approaches failed as a result of the difficulty of turning commonsense information into computer input and the inherent limitations of computers. However, there is no happy ending yet. The empiricist approach has its own limitations, which are becoming ever clearer.

In a rationalist approach, the idea is that the computer is provided with axioms, that is certain knowledge, from which further certain knowledge can be derived in a transparent and convincing way. In contrast, the empiricist approach relies on data, which doesn’t necessarily need to represent true knowledge, and the conclusion is arrived at in ways that lack transparency. Each of these points can be illustrated, starting with data.

Bias in data

Data can represent not only useful information but also biases. A striking example of this problem was highlighted by Carole Cadwalladr, a journalist with the The Observer and winner of the 2018 journalism prize of the Orwell Foundation (Figure 13). In 2016, she wrote about the disconcerting results of some experiments with the Google ‘autocomplete’ feature:

I typed: “a-r-e”. And then “j-e-w-s”. Since 2008, Google has attempted to predict what question you might be asking and offers you a choice. And this is what it did. It offered me a choice of potential questions it thought I might want to ask: “are jews a race?”, “are jews white?”, “are jews christians?”, and finally, “are jews evil?” … Next I type: “a-r-e m-u-s-l-i-m-s”. And Google suggests I should ask: “Are Muslims bad?” And here’s what I find out: yes, they are. That’s what the top result says and six of the others. Without typing anything else, simply putting the cursor in the search box, Google offers me two new searches and I go for the first, “Islam is bad for society”. In the next list of suggestions, I’m offered: “Islam must be destroyed.”

As explained on Google’s blog, the ‘predictions’ that autocomplete provides are based on ‘real searches that happen on Google and show common and trending ones relevant to the characters that are entered and also related to your location and previous searches’ (Sullivan, 2018). If there is a sufficiently large number of people who are preoccupied with a question, the rest of the Google searchers will receive it as a prediction. YouTube, which recommends videos, uses a similar algorithm, with an artificial neural network at its heart (Covington et al., 2016).

Google appears to have manually adjusted its algorithms to avoid some of these results. (The autocomplete examples discovered by Cadwalladr no longer appear.) However, it is unlikely to ever succeed in eliminating all completions that are biased in one way or another. For instance, with most of the world’s population following a religion, it may surprise you that when we typed in ‘religion is’ (February, 2019), the completions offered by Google were: ‘poison, islam, bad, dying, the root of all evil, brainwashing, mass delusion, a disease, control’. Google relies on users of the service for reporting predictions that are ‘inappropriate’.

Opacity of algorithms

You have seen that data can contain biases that are difficult to detect without human help. There is a second problem which concerns how neural networks process data. It turns out that, even when an algorithm produces plausible results on a data set, small changes to its input can lead to results that are entirely unexpected.

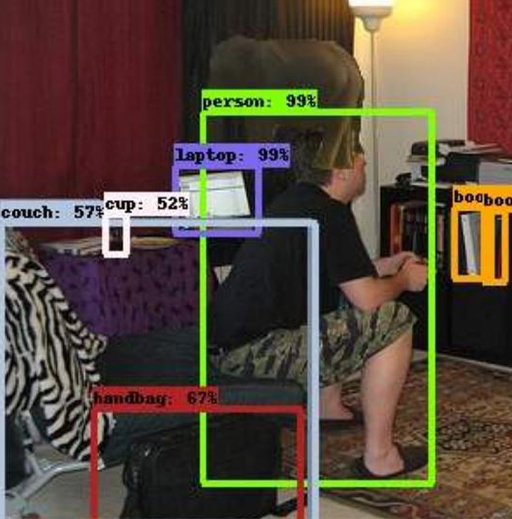

Rosenfeld et al. (2018) report on a wonderful study in which they pasted an object from one image into another image. For instance, they experimented with pasting an elephant into a living room. This resulted in the elephant not being detected at all, as well other objects changing their label. For example, an object that was previously labelled ‘chair’, switched its label to ‘couch’ with the elephant in the room (Figure 14).

As you have seen, the quest for thinking machines or artificial intelligence has a long history, grounded in both rationalism and empiricism. You saw that both rationalistic and empiricist approaches have their shortcomings. This has, however, not stopped the current high expectations for artificial intelligence. The next section looks at the wider implications of the currently prevalent narrative of a future world filled with artificial intelligence.