The explosive growth in the capabilities of AI throughout the 1950s and 1960s proved to be unsustainable. Many of the problems once thought relatively trivial to solve turned out to be anything but. The most notorious example was the attempt to develop near-instantaneous language translation for the CIA and the US military. Despite millions of dollars of investment and the efforts of some of the brightest researchers in the world, the project turned out to be a failure. According to legend, one high-profile demonstration translated the English phrase "the spirit is willing, but the flesh is weak" into Russian and then back into English, producing "the vodka is good, but the meat is rotten".

One of the highest-profile failures of this first wave of AI was the perceptron. First unveiled in 1958, the perceptron was a novel form of computer hardware that emulated the operation of a single brain cell. The project was lavishly funded by the United States Navy, and the perceptron’s creators hoped it could be used for tasks such as recognising targets in photographs and understanding human language. Early positive results led to high expectations that truly intelligent computers built around multiple perceptrons were close at hand. An excited New York Times wrote:

The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence. Later perceptrons will be able to recognize people and call out their names and instantly translate speech in one language to speech and writing in another language, it was predicted.

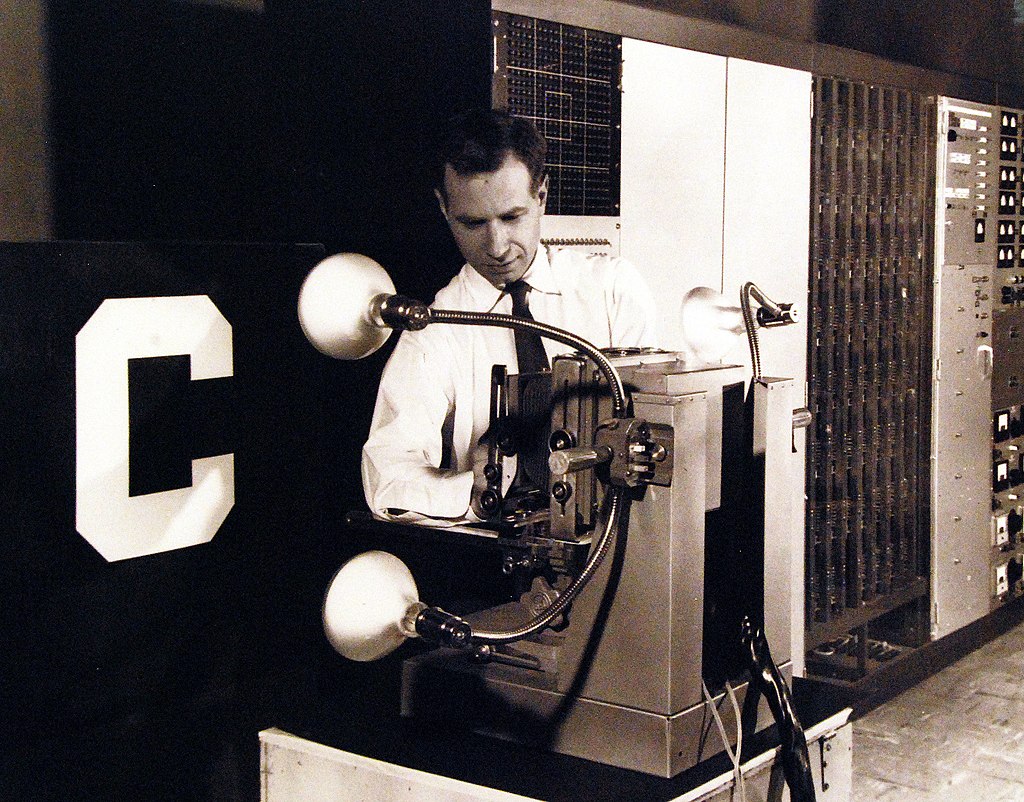

The Mark 1 Perceptron, being adjusted by Charles Wightman, a project engineer.

As you have probably guessed, the perceptron did not live up to these dazzling expectations. The 1969 book ‘Perceptrons’ by Marvin Minsky and Seymour Papert demonstrated the limitations of simple perceptrons and is often credited with ending the generous funding they had previously received. The cuts were not restricted to perceptrons; almost every area of artificial intelligence reliant on US Government funding saw its budget slashed. Consequently, artificial intelligence research slowed greatly in the early 1970s, a period that has gone down in history as ‘The First Artificial Intelligence Winter’.

There was no longer talk of trying to recreate human intelligence; instead, researchers turned to much smaller, simpler goals. Many of these new forms of AI replicated human decision-making, using large numbers of rules to make determinations. Quietly, AI began to make significant progress in many areas, including diagnosing diseases, exploring scientific and engineering data, and driving ever more sophisticated robots. These rule-based systems proved highly effective within specific domains, where they could make decisions as well as, or even better than, human experts. However, their expertise did not transfer: an AI designed for diagnosing childhood diseases or for interpreting seismic data in oil exploration would be of no use in other areas of knowledge.

Optimism about the potential for artificial intelligence rose steadily through the 1980s. As memories of the first winter faded, talk returned of developing sophisticated general-purpose artificial intelligences using advanced rule-based technologies. Once again, this excitement proved premature; the artificial intelligences of the 1980s were hard to maintain and prone to failure. Colossally expensive research programmes in both Japan and the United States never met expectations, and once again funding and interest in artificial intelligence dwindled. The Second Artificial Intelligence Winter had arrived.

Just as with the first winter, artificial intelligence did not die out – in fact, in many discrete areas, it continued to quietly thrive and expand. Writing in 2002, the artificial intelligence guru Rodney Brooks said:

‘…there's this stupid myth out there that AI has failed, but AI is around you every second of the day.’

Once again, despite all the bad news about big projects, AI was quietly getting to work behind the scenes of our everyday lives, and the field was about to take a giant leap forward by returning to a maligned technology from the past.

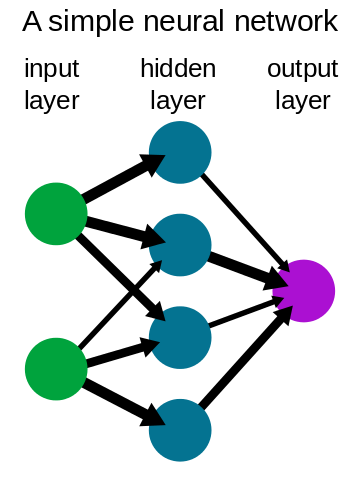

From the mid-1980s onwards, perceptrons, unfairly dismissed as another discredited technology, began to make a comeback as the building blocks of artificial neural networks: a technology inspired by the structure of animal brains. Neural networks are built from layers of interconnected software perceptrons, called nodes. Every node has several inputs, each of which can be more or less important (important inputs are said to have greater ‘weight’). The node multiplies each input by its weight and adds up the results; an ‘activation function’ then decides what the node outputs. In the original perceptron this function is a simple threshold: if the weighted total exceeds a set value (the ‘threshold’), the node outputs a value. If the threshold is not met, the output is zero.
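To make the description of a node concrete, here is a minimal Python sketch of a single perceptron-style node, assuming the classic step activation: it multiplies each input by its weight, adds up the results and outputs 1 only if the total exceeds the threshold. The function name `perceptron_node` and the weight and threshold values are hypothetical, picked by hand for illustration; in a real neural network the weights are learned from data.

```python
def perceptron_node(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    # Multiply each input by its weight and add up the results.
    total = sum(x * w for x, w in zip(inputs, weights))
    # Step activation: output 1 only if the total exceeds the threshold.
    return 1 if total > threshold else 0


# Hypothetical hand-picked values: with equal weights and a threshold of 1.5,
# the node fires only when both 0/1 inputs are 1 (a logical AND).
weights = [1.0, 1.0]
threshold = 1.5
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", perceptron_node([a, b], weights, threshold))
```

With these values the node behaves like a logical AND. No choice of weights and threshold lets a single node of this kind compute exclusive-or (XOR), one of the limitations of simple perceptrons that Minsky and Papert analysed.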

The original, and simplest, form of neural net is called a feedforward neural net. Here, data ‘feeds forward’ from an ‘input layer’ of nodes towards an ‘output layer’. When data is sent to the input layer of the net, every node independently sums its inputs and passes its result to multiple nodes in a second ‘hidden layer’. These nodes repeat the process of summing and then send their outputs to nodes in the third ‘output layer’.
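The feedforward idea can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not how production neural nets are built: the input-layer nodes simply pass the raw data values on (a common convention), every node uses a step activation, and the weights and thresholds in the hypothetical `HIDDEN_LAYER` and `OUTPUT_LAYER` tables are picked by hand rather than learned. Data flows one way only, from the input layer through the hidden layer to the output layer.

```python
def step_node(inputs, weights, threshold):
    """One perceptron-style node: weighted sum followed by a step activation."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


def layer(inputs, nodes):
    """Send the same inputs to every node in a layer; return one output per node.

    Each node is a (weights, threshold) pair.
    """
    return [step_node(inputs, w, t) for w, t in nodes]


# Hypothetical hand-picked parameters (not learned from data).
HIDDEN_LAYER = [([1.0, 1.0], 0.5),   # fires if at least one input is 1
                ([1.0, 1.0], 1.5)]   # fires only if both inputs are 1
OUTPUT_LAYER = [([1.0, -1.0], 0.5)]  # fires if the first hidden node does and the second does not


def feedforward(data):
    # Input layer: here the input nodes simply pass the raw data values on.
    hidden = layer(data, HIDDEN_LAYER)
    # Hidden-layer outputs feed forward to the output layer; nothing flows back.
    return layer(hidden, OUTPUT_LAYER)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", feedforward([a, b]))
```

With these values the net outputs 1 exactly when one of its two inputs is 1 (exclusive-or), something a single threshold node cannot do; the hidden layer is what makes it possible.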

Thirty years after perceptrons were largely relegated to history, their descendants began to change the world. Today, neural nets are used for an incredible range of tasks. They identify images, speech and music; translate between languages; recognise handwriting; and spot trends in social media feeds. Neural nets buy and sell stocks and shares, identify gene sequences associated with disease, drive vehicles, filter spam email, monitor computers for malicious software and, perhaps appropriately, analyse the workings of our own brains.

The 21st century has seen artificial intelligence move to the centre of many modern technologies. Around the world, vast amounts of public and private money are being poured into artificial intelligence research and product development. Economic experts predict that the adoption of AI might add between 13 and 16 trillion US dollars a year to the global economy by 2030. These extraordinary expectations have been driven in good part by a form of AI known as generative artificial intelligence, which allows neural nets to create new text, music, pictures, videos and voices.

Artificial intelligence was getting creative.