10 Speaking robots

It seems a very obvious point, but English is a human language, and the previous sections have all focused on this. English is spoken by humans when they speak to other humans. A lot of people might address their pets in English, but they have no expectation that the pets will also reply in English. Animal communication in a range of different species can be quite sophisticated, but it never has the same level of complexity and flexibility as human language does.

But how about machines and robots? As various types of machine become ever more integrated into our everyday lives, teaching them to ‘speak’ seems a logical next step. After all, spoken language is the most direct and intuitive form of interaction for humans. But can machines ever really ‘speak’ and communicate in the way that humans can? What challenges do researchers face in trying to teach a machine to speak? And what does this tell us about the nature of language itself?

These are the questions explored in the video below.

Activity 11

Watch the video and make some notes in the text box below and save your answer. There is no comment for this activity.

Transcript: Video 12 Speaking robots

[MUSIC PLAYING]

BARIS SERHAN: What is this?

ROBOT: Just a second. It may be a cup.

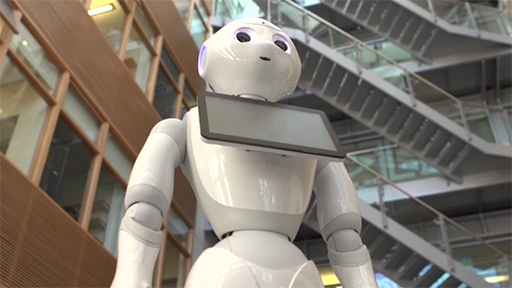

ROBOT: As you can see, I’m a humanoid robot created by SoftBank Robotics.

PAUL PIWEK: So the history of computers and using language actually goes back to Alan Turing. During the war, actually, people started developing the first digital computers. After the war, they were then also developed for commercial purposes. Turing worked specifically on this.

And at the time, these computers, they were often referred to, not just by researchers, but also in the popular media, as electronic brains. And so there was a whole discussion about, can these machines-- can they actually think or not? Or will they ever be able to think? What does it actually mean for a computer to think?

OLD MAN: Hello.

ROBOT: Hello.

OLD MAN: Hello.

ROBOT: Hello.

OLD MAN: Hello.

ROBOT: Hello.

OLD MAN: Oh, dear.

ROBOT: We could do this forever.

OLD MAN: I know we could.

ANGELO CANGELOSI: We are trying to teach machines to speak. In particular, we are working with humanoid robots. And the reason we are doing this is that, in a context where you have a humanoid robot living in your environment with you, helping you, for example, to prepare food, you of course want to be able to communicate via language, which is our natural form of interaction.

ROBOT: Would you like to talk about my impressive hardware?

ANGELO CANGELOSI: For example, the latest one is the Pepper robot, developed by a company in France, SoftBank Aldebaran Robotics.

ROBOT: I am a sophisticated combination of hardware and software designed to interact with humans and bring them joy. I have touch sensors on my head and each of my hands, and inertial sensors in my chest and legs to help me keep my balance.

I can detect and avoid obstacles using the sonars, lasers, and bump sensors built into my legs.

ANGELO CANGELOSI: Communication between people is not only speech-based; it is also non-verbal, for example, emotional communication. We express and understand emotions through body movements or through facial expressions.

ROBOT: I’m still learning how to understand human emotions. But I can analyse your face and notice when you smile or frown.

WOMAN: OK. Hmm.

ANGELO CANGELOSI: The off-the-shelf version of Pepper is actually very similar to home assistants like Alexa, Siri and similar systems, in the sense that they are pre-programmed with a long list of words and a complex set of grammars, and they can talk using these without really referring to the meaning.

ROBOT: I play an instrument called music boxes. They are--

ANGELO CANGELOSI: This is a prerecorded, pre-programmed set of words and grammatical rules. And the Pepper, in some way, has no understanding of the meaning of words. It just can babble.

ROBOT: Don’t be shy. Put your hands on top of mine.

PAUL PIWEK: In more recent years, in the last decade or so, in the wider area of artificial intelligence, machine learning has become very popular, in particular, the use of what’s known as neural nets. What the machine learning algorithm or system does is it represents the English sentence as a number or a mathematical structure.

Basically, these neural nets at some point became very successful at recognising images. So if you give one an image, it will tell you, oh, this is an image with a house in it, or something like that.

[MUSIC PLAYING]

BARIS SERHAN: Where is the red hammer? Pick up the blue cup.

ANGELO CANGELOSI: The iCub project is now a 10- or 12-year-old project. The iCub that you see here will be nine years old on the 31st of March ’18. So it’s a nine-year-old boy. But I think it still speaks like a two-year-old child, probably.

BARIS SERHAN: Learn ‘cup’.

ROBOT: Cup.

BARIS SERHAN: Learn ‘ball’.

ROBOT: I like to learn.

ANGELO CANGELOSI: Our approach is called developmental robotics. It is based on the ideas of developmental psychology. So we study and try to understand how children learn, looking at psychology experiments.

We implemented the same protocols and the same strategies in our robots. And so far, we are making progress in technology.

MOTHER: Where’s the cooker?

BABY: Cooker.

MOTHER: Cooker. Very clever. Where’s the kettle?

BABY: Kettle.

MOTHER: Kettle.

ANGELO CANGELOSI: One of the challenges is to understand speech, that is, to decode a sound wave into actual words. But I think the biggest challenge is to attach a meaning to these sounds. So as a baby, I can hear words like ‘dog’ and ‘red’. But the real challenge is: how do I connect the word ‘dog’ to the actual perception of interacting with an animal, and ‘red’ to the actual perception of the colour of a specific object?

MOTHER: Where is the yellow one? Can you say ‘yellow’? That’s yellow, isn’t it?

SPEAKER 5: [BABY TALK]

GRANDMOTHER: That’s right, yeah.

BARIS SERHAN: Learn ‘ball’.

ROBOT: I like to learn. This is a ball.

ANGELO CANGELOSI: In this demonstration, we are replicating the behaviour of children between 18 and 24 months of age. This is when children are starting to name objects, using individual words, or one or two words at most.

FATHER: What is it? What’s that?

BABY: [BABY TALK]

MOTHER: Good girl.

FATHER: This one?

BABY: Yeah.

FATHER: Yeah, but what’s it called?

BABY: Kum-bah.

FATHER: Kum-ba-bah.

BARIS SERHAN: Find ball.

ROBOT: OK. Now I’m looking for a ball.

ANGELO CANGELOSI: So you see in the demonstration that the robot is paying attention to the object. The tutor is moving the object around so that the robot keeps its attention there. And then, via joint attention with the human teacher, the robot looks in the same direction, which here is the object in focus. You can now say the name of the object.

BARIS SERHAN: What is this?

ROBOT: I think this is a ball.

ANGELO CANGELOSI: The simultaneous experience of seeing the object and hearing the label, the word for the object, allows the artificial intelligence algorithm behind the robot architecture, called an artificial neural network, to learn the association. So it’s purely associative learning between speech sounds and vision, like Hebbian learning in the human brain. And this is the basis for the later acquisition of more complex skills like grammar: combining two or three words together.

BARIS SERHAN: Stop detection.

ROBOT: OK. I will stop it.

ANGELO CANGELOSI: Our approach is really highly interdisciplinary. It requires the collaboration of experts from different fields. In particular, we require expertise from roboticists, who build our machines; from programmers and AI (artificial intelligence) people, who can program the AI techniques; but, of course, also from psychologists and linguists, because they are the ones who tell us what our current understanding is of the way language, meaning and sound work and, of course, how this applies, for example, to language acquisition in child development.

MOTHER: Where’s the toaster?

TOY: What shall we bake today?

BABY: [BABY TALK]

MOTHER: Toaster. You clever girl.

PAUL PIWEK: What I’ve really learned from doing research on human-computer interaction, and especially computer interaction using language, is that you very quickly realise that things are a lot more complicated than you might initially have expected.

MOTHER: But there’s no water coming out of that tap, is there?

PAUL PIWEK: So usually, when I say ‘hello’ to somebody in the street, they might say ‘hi’ or ‘hello’ or ‘how are you?’ back. So there’s a fairly limited number of things.

But you can see where it gets tricky. So when I say, ‘OK, what have you been up to today?’, it’s unlikely that you’re going to find the answer to that question in a huge collection of things that have been said previously, because what really matters here is what my plans, intentions and beliefs are right now. And those are not necessarily explicitly represented in any text, or even in any prior dialogue that exists.

MOTHER: I know you’d like it to have water. But we can just pretend.

ANGELO CANGELOSI: I don’t believe that it’s possible to achieve human-level capabilities, at least in the medium term or maybe during my lifetime, just because of the complexity of the task. Language is, let’s say, what makes us special as a human species, among other skills. And I think we are very far from achieving this, in my lifetime at least.

BARIS SERHAN: What is this?

ROBOT: Sorry. I do not know this object.