5. The Assessment System Lifecycle

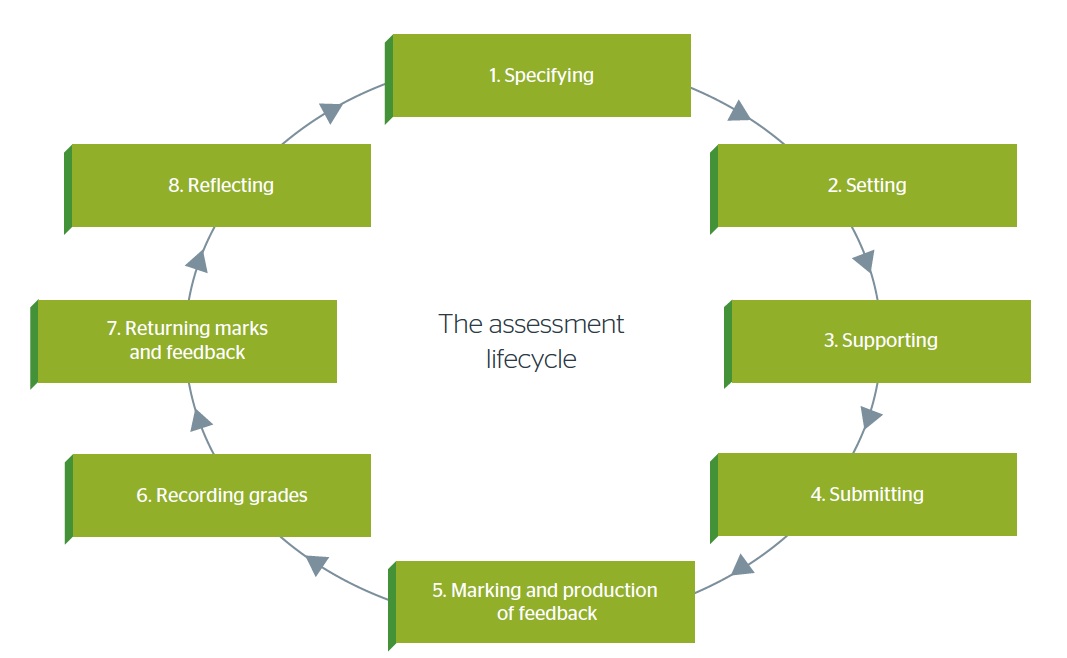

The concept of an ‘Assessment Lifecycle’ is useful and fits very well with the approach we are taking in this guide - to encourage a systematic way of looking at things. The image below provides a useful overview of the concept.

Caption: The Jisc Assessment Lifecycle, Jisc / Gill Ferrell, (Image License BY). Based on Forsyth, R., Cullen, R., Ringan, N., & Stubbs, M. (2015). Supporting the development of assessment literacy of staff through institutional process change. London Review of Education, 13(34-41).

The lifecycle model provides a useful means of mapping the processes involved and the potential for technologies to support this – it can be used for analysing a single course or across an institution. The use of a shared model like this can be useful when dealing with different groups in an institution[1] to help them understand how their work interacts with others, by emphasising the connected nature of these activities. Below we provide brief summaries of each stage in the lifecycle to help you think about interpreting the model in your own situation.

Specifying – the key stage

Although colleges frequently design programmes of study, the qualification content on which these are based is, in general, specified by the SQA in consultation with subject experts. Similarly, the SQA specifies the assessment criteria, but colleges choose the assessment methods and instruments. So you might think that this part of the cycle is taken care of, but before we move on it is worth taking a closer look at this important stage.

Even where the assessment specifications appear to be ‘nailed down’ by an awarding body, bear in mind that they might still contain errors, contradictions and unclear wording (especially when translated into a technological form from a previous paper-based assessment), so it is essential to check them. This is crucial when adopting new modes of assessment, as in some cases the unit specifications may be quite dated and appear, through their use of language, to exclude e-assessment. The main thing to bear in mind when redesigning your assessments to use technology is that, as long as the original assessment criteria and methods are followed and the evidence generated matches them, it should not matter that the mode of assessment and the evidence are digital. The SQA wants colleges to adopt e-assessment methods, as its foreword to this guide makes clear.

Because digital instruments and evidence might appear to differ from the original unit descriptor / specification, it is always sensible to record the changes (however small), and the rationale for making them, in the relevant college quality systems as part of the Internal Verification (IV) process. Different colleges have different systems for doing this; many map the changes explicitly onto the unit descriptors, making clear where the changes are. We think it is good practice to also record the reasons for these changes and provide the ‘story’ behind them in the form of a simple narrative (we have produced some design templates for this, available from the project website). It is important to store this with the rest of the quality control records for a particular unit so that future members of staff will be able to understand what was changed and why.

Providing this information is also crucial for the external component of the quality control process – External Verification (EV) – where external subject experts and teachers ‘inspect the books’. The EV process checks that the unit specification has been adhered to (including assessments) and that the student work submitted is of a sufficient standard. It is in the interests of the colleges to make the task of the EVs as easy as possible. The EV process is often still largely paper based, with copies of the unit specifications / descriptors kept in folders together with records of the IV process that have been ‘stamped’ as accepted by the internal quality officers, as well as copies of the student work. When this process moves into the digital realm it is important for the colleges to have clear procedures for where to store the digital equivalents of the paper folders. This includes simple but essential things like naming conventions for files and folders/directories, ways of storing content, and controlling access. There are different methods and technologies for doing this (simple is usually best!); the main thing is that it is done. The quality function of the college is a good ally to cultivate in this process – we discuss this further in the Collaborative Frameworks section.
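To make the idea of a naming convention concrete, here is a minimal sketch in Python of one possible scheme. Everything in it is an assumption for illustration rather than an SQA or college requirement: the folder layout (session / unit / record type), the three record types, the `evidence_path` function and the example unit code are all hypothetical.

```python
import re
from pathlib import PurePosixPath

# Hypothetical record types, mirroring the contents of the paper folders:
# unit specification, IV records and student evidence.
RECORD_TYPES = {"spec", "iv", "evidence"}


def slug(text: str) -> str:
    """Lowercase the text and replace runs of other characters with one hyphen."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def evidence_path(session: str, unit_code: str, record_type: str,
                  title: str, version: int, ext: str) -> PurePosixPath:
    """Build a predictable folder and file name for one quality record."""
    if record_type not in RECORD_TYPES:
        raise ValueError(f"unknown record type: {record_type!r}")
    unit = slug(unit_code)
    folder = PurePosixPath(slug(session)) / unit / record_type
    # Zero-padded version numbers keep files in order when sorted by name.
    filename = f"{unit}_{record_type}_{slug(title)}_v{version:02d}.{ext.lower().lstrip('.')}"
    return folder / filename


# "AB12 34" is a made-up unit code used only for this example.
print(evidence_path("2023-24", "AB12 34", "iv", "Change Rationale", 2, "PDF"))
```

The particular scheme matters less than applying it consistently, so that an External Verifier or a new member of staff can find a record without asking where it is.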

Setting the assessment

This is where the criteria, methods and instruments from the specification stage are used / interpreted in detail for a specific context – i.e. a college, a cohort of students, a department, lecturers etc. What we found in our project, in moving from paper to electronic means of assessment, is that this stage is the point where deep reflection can occur and creative solutions start to appear. It is therefore important to provide lecturers with support and time at this critical point. In practice this equates to the design stage of our toolkit. This is a good opportunity to think about the timing of assessment in courses and, if possible, to move some assessment to an earlier stage in the course rather than having it all bunched up at the end. Having early formative / diagnostic e-assessments is a good idea, and objective / MCQ testing can provide rapid feedback to students. At this stage you also need to think about how the timings of your assessment plans fit into the workload of your students.

Changing the assessment technology from paper to electronic can be a kind of prompt to see things differently. We found that teachers often used the opportunity to fix or improve aspects of their courses that they were unhappy with.

Supporting

This part of the lifecycle is concerned with how you support the students while they are doing the assessment. As we explain in the Analysis section of the toolkit, you need to think about the digital skills the students and staff [2] will need in order to complete the assessment using whatever systems the college uses. The Jisc EMA report makes some useful observations about things to check at this stage:

- Do the students understand the type of assessment that they are being asked to do? Are they familiar with these methods? Ask them to make sure! Getting them to explain their understanding of the assessment can be a useful diagnostic technique; you could do this using a classroom voting system

- Make sure you and your colleagues use the same terminology about the assessment with the students – in fact, agree a glossary of terms beforehand (small things make a big difference)

- You might need to put in place tutorials, seminars, workshops etc. that deal specifically with the new assessment methodology

- If using objective / MCQ tests for summative assessment, then it is essential to do a ‘trial run’ beforehand to familiarise your students with the technology and the college facilities – doing so with a formative assessment is an efficient approach

- If you want students to submit draft assignments for marking and feedback before the final submission, it is sensible to set up two ‘Dropboxes’ on the college system that are clearly labelled with their dates, and to have the draft one ‘disappear’ after its deadline so that only the final one remains visible
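The two-dropbox arrangement in the last point can be sketched as a simple date rule. This Python sketch is purely illustrative: the `Dropbox` class, the `visible_dropboxes` function, the labels and the dates are all hypothetical, and a real VLE would normally handle this through its own availability settings rather than custom code.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Dropbox:
    name: str
    opens: date
    closes: date  # last day on which the dropbox is shown to students

    @property
    def label(self) -> str:
        # Put the deadline in the label so students cannot confuse the two.
        return f"{self.name} (due {self.closes.isoformat()})"


def visible_dropboxes(boxes: list[Dropbox], today: date) -> list[str]:
    """Return the labels of the dropboxes students should see on a given day."""
    return [box.label for box in boxes if box.opens <= today <= box.closes]


# Hypothetical dates: both boxes open together, the draft one closes first.
boxes = [
    Dropbox("Draft submission", date(2024, 3, 1), date(2024, 3, 14)),
    Dropbox("Final submission", date(2024, 3, 1), date(2024, 3, 29)),
]
print(visible_dropboxes(boxes, date(2024, 3, 10)))  # both are visible
print(visible_dropboxes(boxes, date(2024, 3, 20)))  # only the final one remains
```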

Submitting

The advantages of electronic online submission are listed in the section called ‘Why change? Some Advantages of e-Assessment’. Submission is also a definite ‘pinch point’ where things can go wrong, such as the online test or ‘dropbox’ not being correctly set up, or the students not knowing how to use the system or being confused by it. You need contingency plans here for both system failure and human error. So, have a clear ‘Plan B’ for emergencies and make sure that you, your colleagues and your students know what it is – be especially clear about how students can let you and the system admin know that something might be wrong. This may include deadline extensions, student email alerts, system alerts to students (via the VLE or email etc.), a helpline that students know about, a backup email address for emergency submission and even allowing paper submissions if needed.

Marking and Feedback

As the Jisc EMA report makes clear, this is where the usability of existing technical systems presents some challenges. This is why starting with pilot projects for low-stakes formative e-assessments makes a lot of sense. It allows you and your colleagues (and students) to understand the systems you are using and to work out simple, robust methods for working around some of these limitations. Then, with that foundation, you can undertake summative assessment.

In our project the use of online rubrics was a revelation for lecturers and they all took to it immediately, seeing the benefits of greater marking consistency, clearer feedback for students and a faster marking cycle. For similar reasons, they also really liked the creation of templates in the e-Portfolio system for students to complete.

Recording / Managing Grades

Many of the SQA units in qualifications taught in colleges are marked on criteria that lead to either a pass or a fail. However, many of the technical systems presume that marking will be numerical, using figures or percentages to assign grades of performance to students. This can be problematic for pass/fail units, but with some thought it can be made to work in these systems.
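One way of making pass/fail marking work in a percentage-based gradebook can be sketched in Python as follows. This is a sketch under stated assumptions, not a description of any particular VLE: the convention of storing 100 for a pass and 0 for a fail, and all the function and criterion names, are hypothetical.

```python
# Assumed convention: the gradebook stores 100 for a pass and 0 for a fail,
# with the pass mark set to 100 so that no in-between score counts as a pass.
PASS_SCORE = 100.0
FAIL_SCORE = 0.0


def unit_result(criteria_met: dict[str, bool]) -> str:
    """A unit is passed only when every assessment criterion has been met."""
    if not criteria_met:
        raise ValueError("at least one criterion is required")
    return "Pass" if all(criteria_met.values()) else "Fail"


def to_gradebook_score(result: str) -> float:
    """Translate a Pass/Fail result into the number the gradebook expects."""
    if result not in ("Pass", "Fail"):
        raise ValueError(f"unexpected result: {result!r}")
    return PASS_SCORE if result == "Pass" else FAIL_SCORE


def from_gradebook_score(score: float) -> str:
    """Translate a stored gradebook number back into Pass/Fail."""
    return "Pass" if score >= PASS_SCORE else "Fail"


# Hypothetical criteria for one student on one unit.
result = unit_result({"criterion 1": True, "criterion 2": True, "criterion 3": False})
print(result, to_gradebook_score(result))
```

Whatever convention is chosen, it is worth writing it down with the unit's quality records so that a stored number is never mistaken for a genuine percentage mark.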

The grading systems in VLEs and e-Portfolios can be difficult to use, often requiring a great deal of scrolling to view the correct data for a student. A common issue is that the student record system in a college is regarded as the definitive version of grading information for students, but this is rarely linked to the VLE or e-Portfolio systems where online marking takes place. As a result, the marks have to be manually transferred between systems, with the potential for error and delay. There is also the issue of lecturers keeping marking information outside the college systems because of skills / trust / access problems, again leading to the risk of error, delay and loss.
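Where marks have to be transferred by hand, a simple cross-check between the two systems can catch errors early. The Python sketch below assumes that both the VLE and the student record system can export results as CSV with `student_id`, `unit_code` and `result` columns; those column names, the function names and the sample data are hypothetical.

```python
import csv
import io


def load_results(csv_text: str) -> dict[tuple[str, str], str]:
    """Read an exported CSV into a {(student_id, unit_code): result} mapping."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return {
        (row["student_id"].strip(), row["unit_code"].strip()): row["result"].strip()
        for row in reader
    }


def reconcile(vle: dict, records: dict) -> list[tuple[tuple[str, str], str]]:
    """List every entry that is missing from one system or differs between them."""
    issues = []
    for key in sorted(vle.keys() | records.keys()):
        vle_result, record_result = vle.get(key), records.get(key)
        if vle_result is None:
            issues.append((key, "missing from VLE export"))
        elif record_result is None:
            issues.append((key, "missing from student record system"))
        elif vle_result.lower() != record_result.lower():
            issues.append((key, f"mismatch: VLE={vle_result}, records={record_result}"))
    return issues


# Made-up sample exports for illustration only.
vle_csv = "student_id,unit_code,result\nS001,U1,Pass\nS002,U1,Fail\nS003,U1,Pass\n"
records_csv = "student_id,unit_code,result\nS001,U1,Pass\nS002,U1,Pass\n"

for key, problem in reconcile(load_results(vle_csv), load_results(records_csv)):
    print(key, problem)
```

A check like this does not remove the manual transfer, but it gives lecturers and the quality function a short list of entries to look at rather than two full gradebooks to compare by eye.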

Returning Marks and Feedback

Students need to know how to submit an e-assessment but just as importantly they need to know how to receive and find their marks and feedback. Current systems do not make it clear to lecturers whether a student has received their marks and feedback. This is where getting both students and lecturers used to the system and how to work around the limitations is essential. Students (and lecturers) need clear information about when and how they will receive their marks and feedback.

Reflecting

Below we quote a useful example of institutional guidance about the Reflection part of the assessment lifecycle from Manchester Metropolitan University[3], who have been closely involved with Jisc in developing the lifecycle model. The change management process it describes maps onto the Internal Verification process used in Scottish FE colleges. The suggested annual review by unit and programme leaders is a particularly useful piece of advice. The guidance acknowledges the time and resource constraints that might get in the way of this. To make sure it happens, we would advise that the review is formalised, as it is an essential element in the maintenance of your e-assessment system.

“There are two parts to reflection on each assignment task: encouraging students to reflect on their own performance and make themselves a personal action plan for the future, and tutor reflection on the effectiveness of each part of the assessment cycle from setting to the return of work. It can be difficult to make time for either, with assessment usually coming at the end of a busy year, but it is worth making the effort.

If you wish to change any part of the assignment specification following review, then you will need to complete a minor modification form.

What Unit leaders need to do

Review the effectiveness of assignment tasks annually and report back to the programme team as part of Continuous Monitoring and Improvement

Encourage students to reflect on their previous assessment performance before beginning a similar assignment, even if in a different unit and at a different level.

What Programme leaders need to do

Review assessment across each level annually, using results and student and staff evaluations as a basis for discussion”.

[1] Research by Etienne Wenger and others into the management of knowledge and communities of practice within organisations identifies these kinds of tools as ‘boundary objects’ that help people to see how their work fits into the bigger picture.↩

[2] https://jisc.ac.uk/guides/developing-students-digital-literacy↩

[3] http://www.celt.mmu.ac.uk/assessment/lifecycle/8_reflecting.php↩