1.1 Understanding brightness

The ultimate aim of an astronomical observation is usually to answer a question about the intrinsic properties of an astronomical object. For example, we might want to understand how much energy a star is producing, or perhaps how many stars are likely to be present in a distant galaxy.

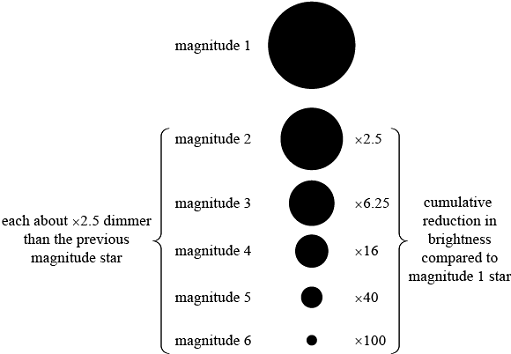

Astronomers refer to the brightness of astronomical objects in several ways. When referring to how bright a star or galaxy appears to be, astronomers use the concept of apparent magnitude. Some of the brightest stars in the night sky have apparent magnitudes of around 1, whereas the faintest that can be seen with the naked eye have apparent magnitudes of around 6. Because of the way in which human eyes work, objects with magnitude 6 are in fact about 100 times fainter than those with magnitude 1: each increase of one magnitude corresponds to a reduction in brightness by a factor of roughly 2.5. Using a large telescope, objects as faint as magnitude 25 may be detected. A 25th magnitude star is around forty million times fainter than stars near the limit of perception for unaided human eyes.
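The magnitude arithmetic in this paragraph can be checked with a short calculation. The sketch below assumes the standard convention that a difference of five magnitudes is exactly a factor of 100 in brightness, so that one magnitude is a factor of 100^(1/5), or about 2.512. The function name `brightness_ratio` is illustrative only.

```python
def brightness_ratio(m_faint: float, m_bright: float) -> float:
    """Return how many times fainter an object of magnitude m_faint
    appears than an object of magnitude m_bright.

    Assumes 5 magnitudes correspond to exactly a factor of 100.
    """
    return 100 ** ((m_faint - m_bright) / 5)


print(brightness_ratio(2, 1))   # one magnitude: about 2.512
print(brightness_ratio(6, 1))   # five magnitudes: 100
print(brightness_ratio(25, 6))  # nineteen magnitudes: about 4e7
```

Note that a larger magnitude always means a fainter object, which is why the fainter magnitude is the first argument.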

There are two reasons why different stars may have different apparent magnitudes: they may emit different amounts of light or they may be at different distances from us. Two identical stars situated at different distances from us will have different apparent magnitudes, purely because the light emitted by the more distant star is spread out over a larger area by the time it reaches us. In order to allow comparison between the brightness of stars at different distances, the concept of absolute magnitude is required. The absolute magnitude of a star is defined as the apparent magnitude it would have if it were placed at a standard (arbitrarily chosen) distance of 10 parsecs (about 3 × 10¹⁷ m). The absolute magnitude of a star therefore gives a measure of its intrinsic brightness, or the amount of energy it emits per unit of time.
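The text does not give a formula linking the two kinds of magnitude. As a minimal sketch, the standard distance-modulus relation M = m − 5 log₁₀(d / 10 pc) is assumed here; it follows from the inverse-square spreading of light together with the magnitude scale, and it ignores any dimming by interstellar dust. The function name `absolute_magnitude` and the example values are hypothetical.

```python
import math


def absolute_magnitude(apparent_mag: float, distance_pc: float) -> float:
    """Convert apparent magnitude to absolute magnitude.

    Assumes the distance-modulus relation M = m - 5 * log10(d / 10 pc)
    and no absorption of light between the star and the observer.
    """
    return apparent_mag - 5 * math.log10(distance_pc / 10.0)


# Two identical stars at different distances (illustrative values):
# the more distant one appears fainter, but both share one absolute magnitude.
print(absolute_magnitude(5.0, 10.0))    # star at 10 pc  -> 5.0
print(absolute_magnitude(10.0, 100.0))  # star at 100 pc -> 5.0
```

A star ten times further away receives a magnitude five larger, which is the factor of 100 in brightness expected from spreading the same light over 100 times the area.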

While apparent and absolute magnitudes are a useful convenience for astronomers, it is often helpful to use more conventional physical measures of brightness when describing stars and galaxies. We may therefore distinguish between the flux of light from a star or galaxy and its luminosity. Luminosity is a measure of how much energy in the form of light (or other electromagnetic radiation) is emitted by a star or galaxy in a given time interval. It is an intrinsic property of the star or galaxy itself and may be measured in the SI unit of power, which is watts (W) or equivalently joules per second (J s⁻¹). Luminosity can be related to absolute magnitude. On the other hand, flux is a measure of how much energy in the form of light (or other electromagnetic radiation) we receive from a star or galaxy per unit area in a given time interval. It therefore depends both on the luminosity of the star or galaxy in question and on its distance from us. It is measured in the SI unit of power per unit area, which is watts per square metre (W m⁻²). Flux can be related to apparent magnitude.
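The dependence of flux on both luminosity and distance can be illustrated with the inverse-square law, F = L / (4πd²). This is a sketch under stated assumptions: the source emits equally in all directions and no light is absorbed on the way. The function name is hypothetical, and the solar luminosity and Earth-Sun distance used in the example are approximate values that do not come from the text.

```python
import math


def flux_from_luminosity(luminosity_w: float, distance_m: float) -> float:
    """Flux in W m^-2 at a given distance from a source of given luminosity.

    Assumes isotropic emission: the power is spread evenly over a
    sphere of area 4 * pi * d^2.
    """
    return luminosity_w / (4 * math.pi * distance_m ** 2)


# Assumed illustrative values (not from the text):
L_SUN_W = 3.828e26       # approximate luminosity of the Sun, in watts
EARTH_SUN_M = 1.496e11   # approximate Earth-Sun distance, in metres

print(flux_from_luminosity(L_SUN_W, EARTH_SUN_M))      # roughly 1.36e3 W m^-2
print(flux_from_luminosity(L_SUN_W, 2 * EARTH_SUN_M))  # one quarter of that
```

Doubling the distance quarters the flux while leaving the luminosity unchanged, which is the distinction between the two quantities drawn above.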