Privacy in Design

Earlier in the course, we discussed some design- and architectural-level considerations for implementing systems that can fulfill users' privacy rights. But what other resources are out there to guide you in creating privacy-preserving software architectures and UI designs?

For UI and high-level design considerations, start with Deceptive Patterns. This site (now with an accompanying book) documents design choices that deceive or manipulate the user ("dark patterns"). These often occur in the user interface, but they can also be process or business decisions, such as increasing a user's monthly bill for the remaining months of their subscription once they decide to cancel. (This would be illegal in multiple jurisdictions, yet businesses still think they can get away with it.) Spend a few minutes checking out the site's Hall of Shame.

- Have you come across deceptive patterns like this in your work or as a user?

- For each design, consider how it could be modified to be transparent and fair to the user. Do they need more information? Clearer labeling or icons? A "no" button that isn't so small it's hard to see, never mind click?

- Also ask yourself who in the company you think was responsible for each deceptive pattern you see. Was this an executive decision, or was it made by product management, product architecture, or a developer? Do you think the manipulation of the user was deliberate, or could it have been an honest mistake? Who could have prevented this?

From Dark Commercial Patterns by Nicholas McSpedden-Brown, OECD Digital Economy Papers No. 336 (2022):

"Dark patterns" is an umbrella term referring to a wide variety of practices commonly found in online user interfaces that lead consumers to make choices that often are not in their best interests.

Many dark patterns influence consumers by exploiting cognitive and behavioural biases and heuristics, including default bias, the scarcity heuristic, social proof bias or framing effects.

They generally fall in one of the following categories:

- forced action, e.g. forcing the disclosure of more personal data than desired.

- interface interference, e.g. visual prominence of options favourable to the business.

- nagging, i.e. repeated requests to change a setting to benefit the business.

- obstruction, e.g. making it hard to cancel a service.

- sneaking, e.g. adding non-optional charges to a transaction at its final stage.

- social proof, e.g. notifications of other consumers’ purchasing activities.

- urgency, e.g. countdown timer indicating the expiry of a deal.

Some other excellent resources are:

- Privacy Patterns - a repository of "design solutions to common privacy problems". Just like with the software patterns and architectural patterns you are familiar with, this is a collection of reusable privacy solutions you can apply again and again across different designs.

- Fair Patterns - while this site exists to advertise training and consultancy services in fair (instead of dark) patterns, it offers a helpful selection of visual examples of dark UIs together with examples of what the alternative fair versions could look like. Also check out their explanation of why you should care about avoiding dark patterns in your designs.

- The OWASP Top 10 Privacy Risks Countermeasures list - a great checklist you can use as a starting point to ensure you have the privacy essentials in place for a design at all levels, from the technical to the organizational. The only countermeasure (in the v2.0 list) that we cannot recommend is "ask local DPA to audit you". Depending on your local data protection authority (DPA), this request may be ignored (many authorities are swamped with requests) or could lead to fines for your organization if the audit finds you to be non-compliant.

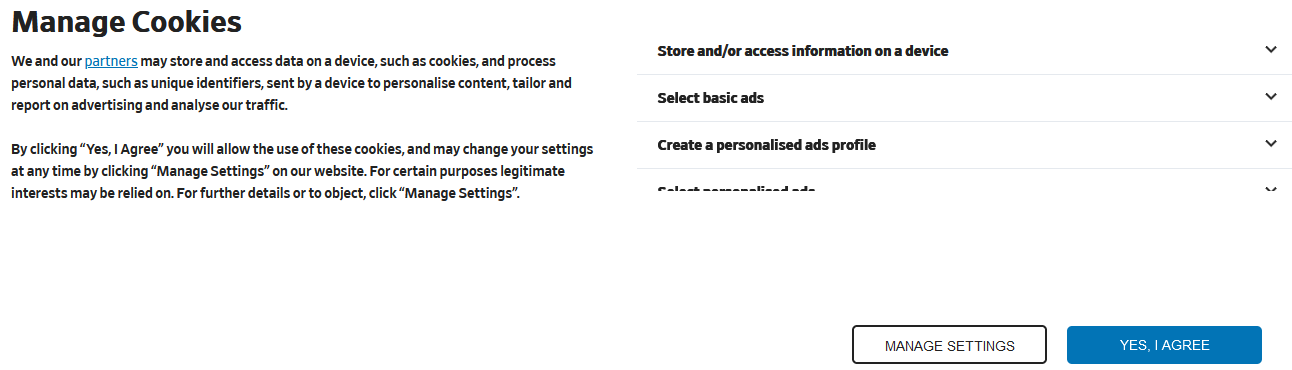

- If you ever need to design a cookie consent banner - or integrate one provided by a third-party vendor - make sure you understand the design requirements in detail. For example, the EDPB's Report of the work undertaken by the Cookie Banner Taskforce lays out how fine-grained the requirements of the GDPR and ePrivacy Directive are. You need to ensure you provide a "reject" button on the first UI layer, don't pre-tick any consent boxes, avoid using deceptive colors or contrast ratios, classify your "essential" cookies correctly, and allow the user to easily withdraw their consent at any time afterward. Don't fall into the trap of copying other European websites - most are violating the law here, and - thanks to activism efforts - the law is slowly starting to be enforced.

An example of a deceptive cookie dialog that is illegal under GDPR. The "privacy unfriendly" option of clicking "Yes, I Agree" is displayed in a different color. This highlights it and makes it more likely the user will choose this option if they are rushing. It also makes the "Manage Settings" button look like it is disabled and potentially unclickable. What's more, there is no top-level option to reject all non-essential cookies...And let's not get started on the text.
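To make the banner requirements listed above a little more concrete, here is a minimal Python sketch of the state a consent banner might keep. It is an illustration under stated assumptions, not a compliant implementation: the category names, the `ConsentState` class, and the `first_layer_buttons` helper are all hypothetical, and how you classify your own cookies still needs careful review.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, List, Optional

# Hypothetical optional cookie categories, chosen only for illustration.
OPTIONAL_CATEGORIES = ("analytics", "marketing")


@dataclass
class ConsentState:
    """Consent choices for one visitor; nothing optional is pre-ticked."""
    choices: Dict[str, bool] = field(
        default_factory=lambda: {c: False for c in OPTIONAL_CATEGORIES}
    )
    updated_at: Optional[datetime] = None

    def _set_all(self, value: bool) -> None:
        self.choices = {c: value for c in OPTIONAL_CATEGORIES}
        self.updated_at = datetime.now(timezone.utc)

    def accept_all(self) -> None:
        self._set_all(True)

    def reject_all(self) -> None:
        self._set_all(False)

    def withdraw(self, category: str) -> None:
        """Withdraw consent for one category at any later time."""
        if category in self.choices:
            self.choices[category] = False
            self.updated_at = datetime.now(timezone.utc)

    def allows(self, category: str) -> bool:
        """Essential cookies need no consent; everything else must be opted in."""
        if category == "essential":
            return True
        return self.choices.get(category, False)


def first_layer_buttons() -> List[Dict[str, str]]:
    """Buttons for the first banner layer, all sharing one visual style."""
    return [
        {"action": "accept_all", "label": "Accept all", "style": "standard"},
        {"action": "reject_all", "label": "Reject all", "style": "standard"},
        {"action": "manage", "label": "Manage settings", "style": "standard"},
    ]
```

The design choice worth noticing is that the defaults do the work: every optional category starts as `False`, "Reject all" sits on the same layer as "Accept all" with the same styling, and `withdraw` stays available after the banner has been dismissed.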

If you're interested instead in how you could build privacy-preserving cloud architectures, check out these examples:

- AWS's excellent free Architecting for Privacy on AWS workshop discusses how to minimize, mask, delete, and segregate access to personal data in a serverless web application using AWS Lambda, Amazon DynamoDB, Amazon S3, and Amazon CloudWatch. If you need further convincing, the application is a 🦄 unicorn ride-sharing app! (Note that while the content is free, setting up and using the described architecture in your AWS account will be billed at the standard rates.)

- A GDPR-Compliant Data Lake Architecture on the Google Cloud Platform considers how to implement retention periods and fulfill personal data deletion requests in a data lake built with GCP Dataproc, GCP Cloud Composer, GCP BigQuery, and GCP Data Catalog.
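The retention and deletion ideas these resources cover can be sketched in a few lines of plain Python. This is not code from either resource and does not use any cloud SDK; the `Record` type, the category names, and the retention periods are assumptions made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List

# Hypothetical retention periods per data category (assumed values).
RETENTION: Dict[str, timedelta] = {
    "ride_history": timedelta(days=365),
    "support_tickets": timedelta(days=90),
}


@dataclass
class Record:
    user_id: str
    category: str
    created_at: datetime


def is_expired(record: Record, now: datetime) -> bool:
    """True once a record is older than its category's retention period."""
    return now - record.created_at > RETENTION[record.category]


def apply_retention(records: List[Record], now: datetime) -> List[Record]:
    """Keep only the records that are still within their retention period."""
    return [r for r in records if not is_expired(r, now)]


def erase_user(records: List[Record], user_id: str) -> List[Record]:
    """Fulfill a deletion request by dropping every record for one user."""
    return [r for r in records if r.user_id != user_id]
```

In a real data lake the same two operations would also have to reach backups, derived tables, and logs, which is where most of the architectural effort described in these resources goes.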